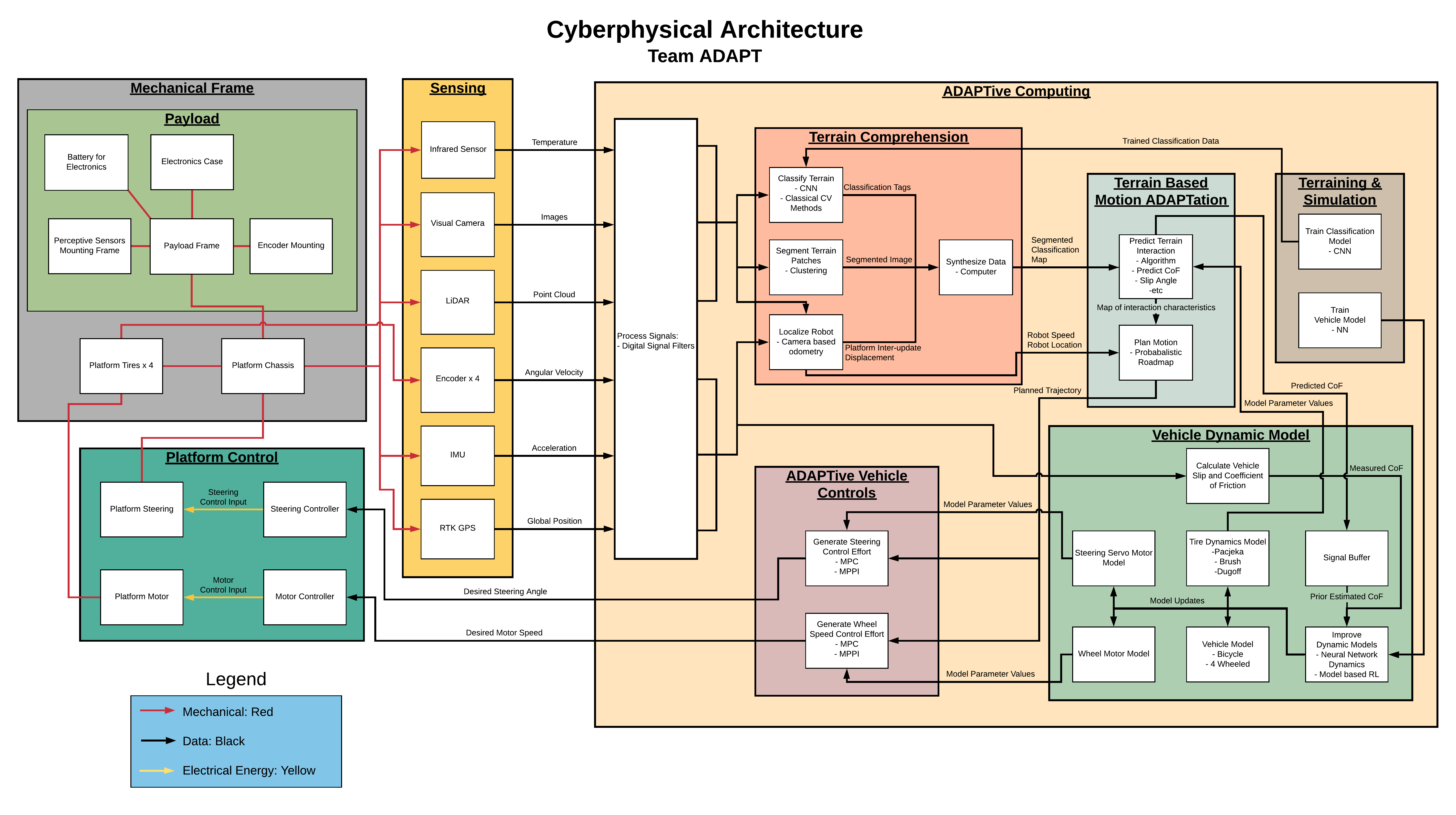

The first major section of the Cyberphysical Architecture is the Mechanical Frame block. It comprises the entire RC camera car and everything mounted on it, such as the compute box, electronics case, and sensors. The Sensing block contains the sensors that supply the data the system needs to perform its task: the infrared sensor, camera, LiDAR, encoders, IMU, and GPS. The data collected from these sensors is processed and used in several blocks, including the Terrain Comprehension block. In that block, the terrain is classified and segmented, and the robot localizes itself relative to its current environment. This information is then synthesized in the Terrain Based Motion Adaptation block to predict the tire-terrain interaction and plan the robot's motion.
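The data flow from the Sensing block through Terrain Comprehension to Terrain Based Motion Adaptation can be pictured with a minimal sketch. Everything named here is an assumption made for illustration and does not come from the architecture itself: the `SensorFrame` fields, the terrain class names, the speed scale table, and the use of raw GPS as the pose. The per-class scores stand in for the output of the terrain classifier.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class SensorFrame:
    """One snapshot from the Sensing block (all field names are hypothetical)."""
    terrain_scores: Dict[str, float]   # stand-in for classifier output on a camera image
    lidar_ranges: Tuple[float, ...]    # metres
    wheel_ticks: Tuple[int, int]       # left/right encoder counts
    imu_yaw_rate: float                # rad/s
    gps_xy: Tuple[float, float]        # metres in a local frame


@dataclass
class TerrainEstimate:
    """Output of the Terrain Comprehension block in this sketch."""
    terrain_class: str
    pose_xy: Tuple[float, float]


# Hypothetical terrain classes and speed factors, chosen only for illustration.
SPEED_SCALE = {"asphalt": 1.0, "gravel": 0.6, "mud": 0.3}


def comprehend_terrain(frame: SensorFrame) -> TerrainEstimate:
    """Pick the highest-scoring terrain class and take GPS as the pose (assumption)."""
    terrain = max(frame.terrain_scores, key=frame.terrain_scores.get)
    return TerrainEstimate(terrain_class=terrain, pose_xy=frame.gps_xy)


def adapt_motion(estimate: TerrainEstimate, nominal_speed: float) -> float:
    """Scale a nominal speed by terrain; unknown classes get a cautious 0.5."""
    return nominal_speed * SPEED_SCALE.get(estimate.terrain_class, 0.5)


frame = SensorFrame(
    terrain_scores={"asphalt": 0.1, "gravel": 0.7, "mud": 0.2},
    lidar_ranges=(1.2, 1.3, 1.1),
    wheel_ticks=(100, 102),
    imu_yaw_rate=0.01,
    gps_xy=(3.0, 4.0),
)
estimate = comprehend_terrain(frame)
planned_speed = adapt_motion(estimate, nominal_speed=2.0)
```

A real system would fuse encoders, IMU, and GPS for localization and would predict tire-terrain interaction with far more than a lookup table; the sketch only shows how the block outputs chain together.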

In the Training and Simulation block, a convolutional neural network is trained on labeled terrain data, and a separate neural network learns the dynamic parameters of the vehicle model. The pre-trained convolutional neural network is used to classify the terrain and segment terrain patches. The learned dynamic parameters are used in the Vehicle Dynamic Model block, where calculations are performed with the vehicle dynamics models. These calculations are used to generate a steering control effort and a wheel speed control effort in the Adaptive Vehicle Controls block. The control efforts yield desired steering angle and motor speed commands, which are sent to the steering controller and motor controller in the Platform Control block. The result is a vehicle that is controlled to navigate adverse terrain.
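As a rough illustration of how learned dynamic parameters could turn into steering and motor speed commands, the sketch below uses a kinematic bicycle model and a friction-limited cornering speed. These are stand-in techniques, not the project's confirmed vehicle models, and the `DynamicParams` fields, the `control_efforts` function, and all numeric values are hypothetical.

```python
import math
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class DynamicParams:
    """Hypothetical parameters that a learned model might supply."""
    wheelbase_m: float
    friction_coeff: float
    wheel_radius_m: float


def control_efforts(curvature: float, desired_speed: float, params: DynamicParams):
    """Return (steering angle in rad, wheel angular speed in rad/s).

    Steering follows the kinematic bicycle model: delta = atan(L * kappa).
    Speed is capped at the friction-limited cornering speed
    v_max = sqrt(mu * g / |kappa|), which is an assumption of this sketch.
    """
    steering_angle = math.atan(params.wheelbase_m * curvature)
    if curvature != 0.0:
        v_max = math.sqrt(params.friction_coeff * G / abs(curvature))
        speed = min(desired_speed, v_max)
    else:
        speed = desired_speed  # straight line: no cornering limit applied
    motor_speed = speed / params.wheel_radius_m
    return steering_angle, motor_speed


params = DynamicParams(wheelbase_m=0.3, friction_coeff=0.5, wheel_radius_m=0.05)
straight = control_efforts(curvature=0.0, desired_speed=1.0, params=params)
tight_turn = control_efforts(curvature=2.0, desired_speed=3.0, params=params)
```

In this sketch a lower friction coefficient, as might be learned on loose terrain, reduces the commanded motor speed in turns while leaving the steering angle unchanged.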