The parking spot is detected by identifying an ArUco marker placed close to the lane center line. Our initial idea was to encode the side of the desired parking spot in the marker's position: a tag detected on the right side of the lane would indicate the right-hand spot, and a tag detected on the left side would indicate the left-hand spot. For the final implementation, however, we simplified the problem by placing the tag on the center line in both cases and only flipping it. The detected orientation of the tag then tells the robot which side to park on.
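The orientation check can be sketched as follows. This is a minimal illustration, not our exact code: it assumes the flip shows up as a roughly 180° change in the tag's yaw, and the function names, the `reference_yaw` parameter, and the mapping of each orientation to "right" or "left" are all hypothetical.

```python
import math


def yaw_from_quaternion(x, y, z, w):
    """Return the yaw (rotation about the z axis, in radians) of a unit quaternion."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def parking_side(tag_quat, reference_yaw=0.0):
    """Classify the parking side from the tag orientation.

    Assumption: a tag whose yaw is close to reference_yaw means "right",
    and a tag flipped by about 180 degrees means "left".
    """
    yaw = yaw_from_quaternion(*tag_quat)
    # Wrap the yaw difference into [-pi, pi] before comparing.
    diff = math.atan2(math.sin(yaw - reference_yaw), math.cos(yaw - reference_yaw))
    return "right" if abs(diff) < math.pi / 2 else "left"
```

Splitting the decision at ±90° leaves a wide margin on either side, so moderate noise in the detected orientation should not change the chosen side.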

Once a parking spot has been found, the system generates a goal in the center of the parking spot. For a smoother parking maneuver, we developed a three-waypoint parking strategy: the first goal is roughly one parking space before the ArUco tag, the second goal is at the foot of the parking spot, and the third goal is the aforementioned center of the spot. The algorithm sends each goal in turn as the robot approaches the previous one.
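A simplified 2D sketch of the three-waypoint idea is shown below. The geometry is an assumption made for illustration: the spot is taken to be perpendicular to the lane, and `spot_width`, `spot_depth`, `foot_offset`, the `Pose2D` type, and both function names are hypothetical values and names rather than the ones used in our system.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float


def parking_waypoints(tag, lane_yaw, side,
                      spot_width=3.0, spot_depth=5.0, foot_offset=1.5):
    """Return [approach, foot, center] goals for a spot beside the tag.

    Assumptions: the tag sits on the lane center line, the spot is
    perpendicular to the lane, and all dimensions are in meters.
    """
    sign = 1.0 if side == "left" else -1.0
    fx, fy = math.cos(lane_yaw), math.sin(lane_yaw)    # unit vector along the lane
    lx, ly = -math.sin(lane_yaw), math.cos(lane_yaw)   # unit vector to the left
    park_yaw = lane_yaw + sign * math.pi / 2.0         # heading into the spot

    # Goal 1: roughly one parking space before the tag, still facing along the lane.
    approach = Pose2D(tag.x - spot_width * fx, tag.y - spot_width * fy, lane_yaw)
    # Goal 2: the foot (entrance) of the spot, already turned toward it.
    foot = Pose2D(tag.x + sign * foot_offset * lx,
                  tag.y + sign * foot_offset * ly, park_yaw)
    # Goal 3: the center of the spot.
    center_dist = foot_offset + spot_depth / 2.0
    center = Pose2D(tag.x + sign * center_dist * lx,
                    tag.y + sign * center_dist * ly, park_yaw)
    return [approach, foot, center]


def goal_reached(robot_xy, goal, tolerance=0.5):
    """True once the robot is within `tolerance` meters of the goal position."""
    return math.hypot(robot_xy[0] - goal.x, robot_xy[1] - goal.y) <= tolerance
```

A caller would send the first goal, poll `goal_reached` with the robot's current position, and send the next goal once it returns true.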

As a further improvement, we filter the detected ArUco tag poses to increase accuracy. We maintain a queue of the previous 20 detected poses. To extract the least noisy of those 20 poses, we run RANSAC using the quaternion distance between any two poses as the distance metric. The pose with the most inliers is selected as the final tag pose, which we pass to the parking waypoint generation algorithm to create the three waypoints discussed above.
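The inlier voting can be sketched as below. This is an illustrative simplification, not our exact implementation: instead of sampling hypotheses at random as classic RANSAC does, it tests every pose in the queue as a candidate, which is cheap for 20 poses. The inlier `threshold` (in radians), the `(position, quaternion)` tuple layout, and the function names are assumptions.

```python
import math
from collections import deque

QUEUE_SIZE = 20  # number of recent detections kept, as described above

# Each entry is (position, quaternion) with quaternion = (x, y, z, w).
pose_queue = deque(maxlen=QUEUE_SIZE)


def quat_distance(q1, q2):
    """Angle in radians between two unit quaternions (q and -q count as equal)."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return 2.0 * math.acos(min(1.0, dot))


def select_consensus_pose(poses, threshold=0.1):
    """Return (pose, inlier_count) for the pose that the most other poses agree with.

    A pose is an inlier of a candidate if their quaternion distance is
    within `threshold`. The threshold value is an assumed tuning parameter.
    """
    best_pose, best_count = None, 0
    for candidate in poses:
        count = sum(
            1 for other in poses
            if quat_distance(candidate[1], other[1]) <= threshold
        )
        if count > best_count:
            best_pose, best_count = candidate, count
    return best_pose, best_count
```

Each new detection would be appended to `pose_queue`, and `select_consensus_pose(pose_queue)` would be called before generating waypoints. Because the queue has a fixed maximum length, the oldest detection is dropped automatically.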

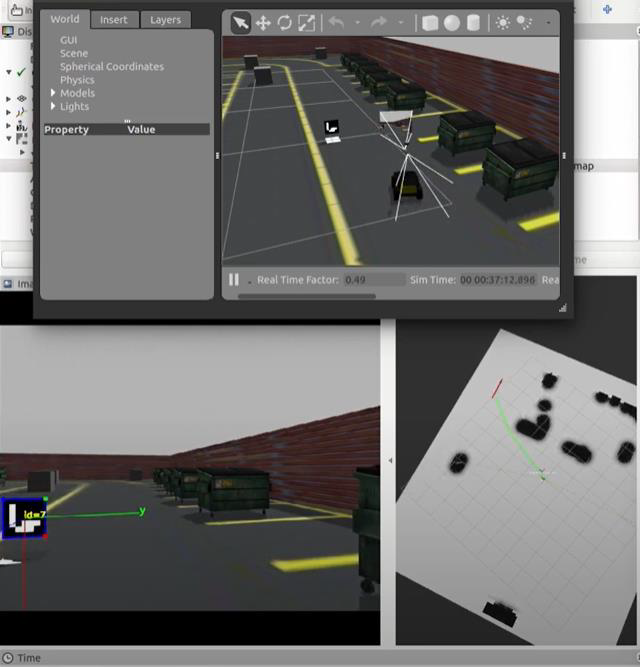

Simulation:

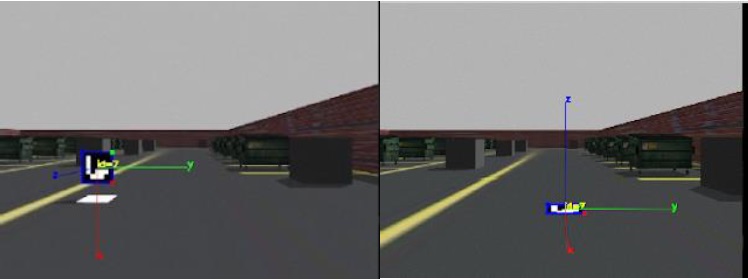

First, we imported and placed an ArUco tag model in Gazebo. We then used the detection package by ‘pal-robotics’ to detect the tag and return its pose. After experimenting with different positions and orientations for the tag, we settled on keeping it floating vertically, close to the yellow center line of the parking lot. The tag was detected with low errors (under 20 cm) in the x, y, and z directions. The image below shows how the tag was detected and a path (shown in green) was generated towards the parking pose.

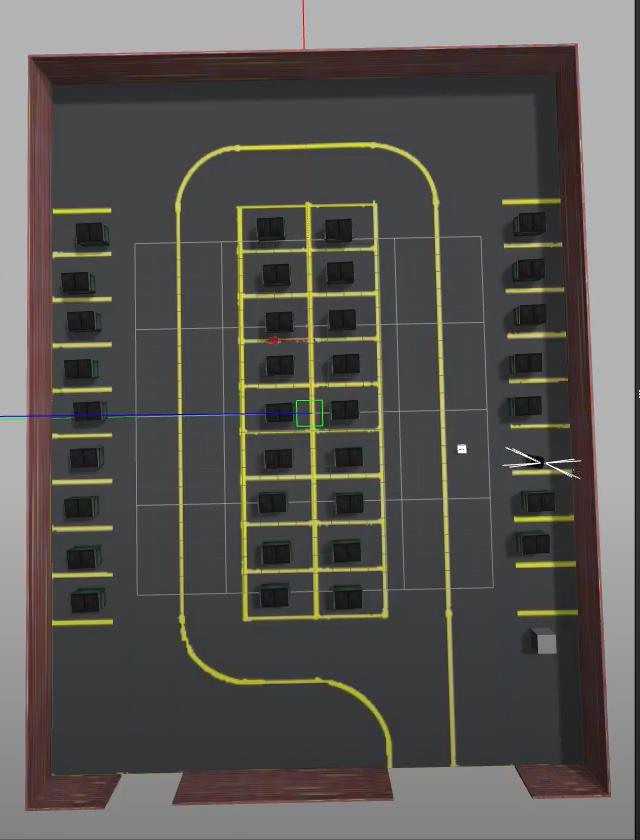

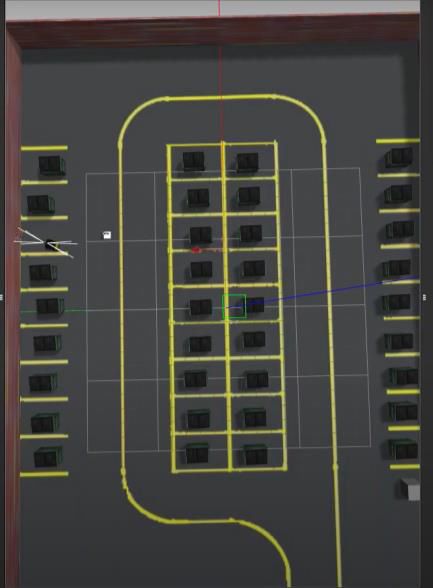

We created four new Gazebo worlds (shown below) to demonstrate the two new features we added to our system: left parking and left turns. The four worlds were: two right turns + right parking (RRR), two right turns + left parking (RRL), two left turns + left parking (LLL), and two left turns + right parking (LLR).

RRL world

RRR world

LLR world

Hardware:

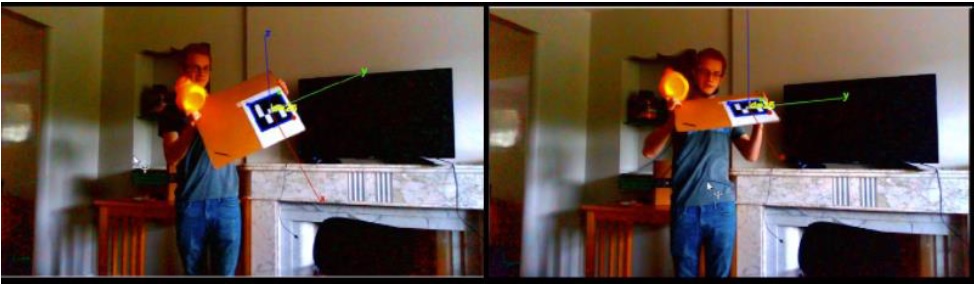

After successfully detecting the tags in our simulated world, we also tested the package on hardware in the parking lot. The results were similar, and the figure below also shows the corresponding ‘tf’ being published in RViz. On visual inspection, the tf also appeared to be accurately positioned.