The image below shows our system and environment setup, which consists of the robot arm (UR5e), our 3D-printed gripper and camera mount, the Intel RealSense camera, a shelving unit, and containers with fresh, pre-prepared ingredients. We will also place a weighing scale and a cooking pot on a platform beside the table for dispensing.

Figure 1: System setup

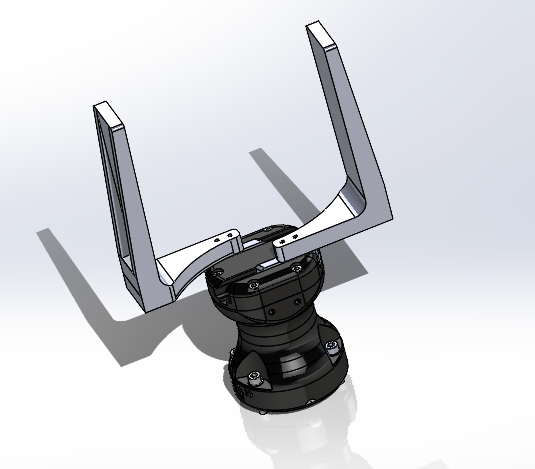

The final gripper design after multiple iterations is as follows:

Figure 2: Final gripper design

The major subsystems of our project are summarized in the following sections.

Hardware Subsystem

The hardware framework for Ratatouille is broken down into three main parts:

- Robotic arm

- Gripper

- Environmental setup

The robotic arm that we will use for this project is the UR5e. We chose it primarily because of its availability. The arm also comes with a built-in force-torque sensor, which is required to fulfill M.P.R.5. Given the reliability of the arm, we have not selected an alternative.

We will use a Robotiq Hand-E adaptive gripper. The gripper stroke will be modified to accommodate containers of different sizes, and friction padding will be added to the jaws to prevent container slippage. This was the only gripper available in the inventory, so we will test whether it is suitable for our requirements. If the Hand-E gripper does not suit our needs, we will purchase a Robotiq 2F-85 or 2F-140 gripper.

The environment has shelves with a variety of containers placed on them at predetermined locations. Initially, the containers will have markers on top of them for easy pickup. We will use separate containers for different types of ingredients. The choice of container will take into account the ingredient, the dispensing tolerance, and the quantity of the ingredient that needs to be dispensed.

Sensing Subsystem

The following sensors shall be used in our system:

- Intel RealSense D435

- Multispectral camera

- Dymo Weighing Scale

- Force torque sensor

The RGB-D camera that we will use in our system is the Intel RealSense D435. The camera has been used reliably in a similar setup, so no alternatives have been chosen.

We will use the NIRvascan diffuse reflectance multispectral camera for our project. Near-infrared spectroscopy combined with visual data can help identify and validate food ingredients with high precision and recall. It can also help identify spoiled food ingredients, thus contributing to the food safety goals that our robot strives to achieve in M.N.R.4. This camera should suffice for solid food ingredients. If we extend our validation to liquid ingredients, we would need the transmissive model of the same spectrometer. An alternative is the PerkinElmer DA7440 online NIR instrument, which ranked second in our trade study.

The weighing scale is a simple but critical component. It must provide real-time, high-accuracy weight updates for the dispensed materials, and it should ideally be able to communicate with the PC over USB. The scale that we currently have may be replaced by one that better suits our needs. A wide variety of postal and lab weighing scales is available on the market. A formal trade study has not been conducted at this point.

The force-torque sensor is built into the UR5e robotic arm. The technical specification sheet lists the accuracy of the sensor as 15 g. As we will use the sensor primarily to check whether there is sufficient quantity in the container, we believe the built-in sensor will meet our needs. An alternative force-torque sensor from OnRobot can be used if the built-in sensor fails to meet any of our requirements.

Perception Subsystem

The perception subsystem involves four major tasks:

- Verify if the container contains sufficient quantity of the ingredient

- Localize the container in 3D space

- Validate the ingredient present in the container

- Determine the physical state of the ingredient when required by the controls

The first task, which can be viewed as a binary classification problem, shall be performed in two stages. In the first stage, image data shall be used to determine whether the container is empty. This simplification of task 1 allows empty containers to be detected without requiring the robot to pick them up, thereby saving time. In the second stage, once the robot picks up the container, the weight estimate from the force-torque sensor shall be used to verify that the container actually contains the required weight of the ingredient.
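The two-stage check can be sketched as follows. This is a minimal illustration rather than the project's implementation: the function names, the tare-weight parameter, and the choice to require a safety margin equal to the 15 g sensor accuracy are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional

G = 9.81  # standard gravity in m/s^2


@dataclass
class SufficiencyResult:
    sufficient: bool
    stage: str  # which stage produced the decision
    net_mass_g: Optional[float] = None


def force_to_mass_g(force_z_newton: float) -> float:
    """Convert a vertical force reading (N) into an equivalent mass (g)."""
    return abs(force_z_newton) / G * 1000.0


def check_sufficiency(
    looks_empty: bool,
    read_force_z: Callable[[], float],
    container_tare_g: float,
    required_g: float,
    margin_g: float = 15.0,  # assumed margin, set to the quoted sensor accuracy
) -> SufficiencyResult:
    # Stage 1: image-based check, done before the robot picks anything up.
    if looks_empty:
        return SufficiencyResult(False, "vision")
    # Stage 2: the force reading is only requested after pickup.
    net = force_to_mass_g(read_force_z()) - container_tare_g
    return SufficiencyResult(net >= required_g + margin_g, "force_torque", net)


# Hypothetical usage: a 50 g container that must hold at least 100 g.
result = check_sufficiency(False, lambda: 2.0, 50.0, 100.0)
print(result.stage, result.sufficient)
```

Passing the force reading as a callable keeps the pickup lazy: if the vision stage already rejects the container, the robot never needs to lift it.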

Tasks 3 and 4 are also classification problems and shall be approached using deep-learning-based techniques. State-of-the-art image classification models such as EfficientNet or ResNet will be used as backbone networks for ingredient classification. Since other data modalities are also to be leveraged for this classification, further experimentation is required to identify the appropriate architecture. Although numerous image datasets of complete food dishes are available, such as Food101, there are very few datasets of raw chopped or prepped food ingredients. Therefore, to keep the task manageable, we have narrowed our validation down to a list of about 15 ingredients that covers all the varieties of food ingredients that we plan to handle. We will manually collect image data for this list of ingredients and use models pre-trained on large-scale datasets so that training and validation are faster and more accurate.

As an alternative, we shall also consider smaller models such as EfficientNetV2, which have fewer parameters, so that real-time performance is good and model training is faster.

For pose estimation, we will use visual fiducial systems such as AprilTags or ArUco markers. If we find that the tags or markers cause occlusion and hinder ingredient validation or the verification of ingredient sufficiency, another option is to use deep-learning-based pose estimation.
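Whichever detector is used, the marker pose it reports is expressed in the camera frame and has to be converted into the robot base frame before a grasp can be planned. The sketch below shows that conversion with 4x4 homogeneous transforms in plain Python. The numeric poses are made up for illustration, and the camera-to-base transform is assumed to be known from a prior calibration.

```python
import math


def matmul4(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x, y, z):
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def rot_z(theta):
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]


# Assumed calibration result: camera pose expressed in the robot base frame.
T_base_camera = matmul4(translation(0.5, 0.0, 0.8), rot_z(math.pi / 2))

# Hypothetical detector output: marker pose expressed in the camera frame.
T_camera_marker = translation(0.1, 0.0, 0.0)

# Chain the transforms to get the marker pose in the base frame.
T_base_marker = matmul4(T_base_camera, T_camera_marker)
marker_xyz = [T_base_marker[i][3] for i in range(3)]
print(marker_xyz)
```

If the camera is mounted on the gripper, `T_base_camera` would itself be the product of the current tool pose and a fixed tool-to-camera transform.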

Planning Subsystem

The planning subsystem involves two major classes of activities: task planning and motion planning. Task planning covers the portion of the state machine that determines the sequence of operations to be performed for each ingredient. The operations will vary based on the results of the condition checks in the process flow. For example, ingredient validation with the spectral camera might be necessary only if the visual information is insufficient to classify the ingredient with the necessary confidence.
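The conditional nature of the task plan can be illustrated with a small sketch. The step names, their ordering, and the 0.9 confidence threshold are hypothetical placeholders, not values taken from the project's state machine.

```python
from typing import List


def plan_steps(visual_confidence: float, threshold: float = 0.9) -> List[str]:
    """Return the ordered operations for one ingredient.

    Spectral validation is added only when the visual classifier is not
    confident enough on its own.
    """
    steps = ["locate_container", "visual_validation"]
    if visual_confidence < threshold:
        steps.append("spectral_validation")
    steps += ["pick_container", "weight_check", "dispense", "return_container"]
    return steps


print(plan_steps(0.97))
print(plan_steps(0.60))
```

The confident case skips the spectral step entirely, so the extra sensing time is spent only when the visual check is uncertain.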

While the task planner determines the movements that the robot is required to make, the motion planner determines the path that the robot shall use to reach the goal location. The ROS MoveIt package shall be used to manage the overall motion planning framework. Because many of the robot's movements will be repetitive, predetermined waypoints shall be used in most cases, and full planning might not be necessary. The motion planner will also be responsible for generating the trajectories between waypoints, using the tools and utilities available in MoveIt.
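To make the waypoint idea concrete, the following sketch linearly interpolates in joint space between stored waypoints. It is a plain-Python stand-in for illustration only and does not use or reproduce the MoveIt API; the two-joint waypoints are invented for the example.

```python
from typing import List, Sequence


def interpolate_joint_path(
    waypoints: Sequence[Sequence[float]], steps_per_segment: int
) -> List[List[float]]:
    """Linearly interpolate joint positions between consecutive waypoints."""
    if not waypoints or steps_per_segment < 1:
        raise ValueError("need at least one waypoint and one step per segment")
    path = [list(map(float, waypoints[0]))]
    for start, end in zip(waypoints, waypoints[1:]):
        for i in range(1, steps_per_segment + 1):
            t = i / steps_per_segment
            path.append([s + t * (e - s) for s, e in zip(start, end)])
    return path


# Hypothetical two-joint waypoints (radians): home, above shelf, above pot.
path = interpolate_joint_path([[0.0, 0.0], [1.0, 2.0], [1.0, 0.0]], 2)
print(path)
```

A real trajectory would also need timing, velocity, and acceleration limits, which this sketch deliberately leaves out.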

Control Subsystem

The control subsystem covers the feedback control process required to dispense the required quantity of ingredients into the cooking pot. This is the most critical aspect of the project, as it is directly tied to one of the core functionalities of the system. The primary function of the controller is to use real-time feedback from the load cell under the cooking pot to provide a control policy that dispenses the ingredient while meeting the constraints set by M.P.R.5, M.P.R.6, and M.P.R.7.

Though various control strategies exist for general-purpose robotic control, most of the sophisticated control methodologies require a model of the process dynamics. The dynamics of the material flow during dispensing are highly non-linear and extremely difficult to model. There is also limited existing literature that successfully showcases the use of approximate models for solving such tasks.

Hence, PID control, which does not require a system model, shall be used as the control methodology. This choice is supported by recent literature and by insights from subject matter experts. Each ingredient shall have its own controller with hand-tuned parameters.
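A minimal sketch of a per-ingredient PID loop is given below. The gains, the ingredient name, and the toy plant (flow rate proportional to the controller output) are assumptions for illustration; real material flow is non-linear, as noted above, and the actual gains would be hand-tuned on the robot.

```python
class PID:
    """Textbook discrete PID controller with output clamping."""

    def __init__(self, kp, ki, kd, out_min=0.0, out_max=1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0
        self._prev_error = None

    def update(self, error, dt):
        self._integral += error * dt
        if self._prev_error is None:
            derivative = 0.0  # no derivative estimate on the first sample
        else:
            derivative = (error - self._prev_error) / dt
        self._prev_error = error
        raw = self.kp * error + self.ki * self._integral + self.kd * derivative
        # Clamp to [out_min, out_max]: dispensed material cannot be taken back.
        return min(self.out_max, max(self.out_min, raw))


# Hypothetical hand-tuned gains, one entry per ingredient. The integral gain
# is left at zero because the toy plant below already integrates the flow.
INGREDIENT_GAINS = {"rice": dict(kp=0.05, ki=0.0, kd=0.001)}


def simulate_dispense(pid, target_g, max_flow_g_per_s=20.0, dt=0.05, steps=400):
    """Toy plant: dispensed weight grows in proportion to the command."""
    dispensed = 0.0
    for _ in range(steps):
        command = pid.update(target_g - dispensed, dt)  # scale feedback
        dispensed += max_flow_g_per_s * command * dt
    return dispensed


final_g = simulate_dispense(PID(**INGREDIENT_GAINS["rice"]), target_g=100.0)
print(round(final_g, 2))
```

Clamping the output at zero reflects the one-way nature of dispensing: once the target is exceeded the controller can only stop, which is why conservative gains that approach the target from below are preferable.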

During the Fall semester, efforts shall be made to replace the standard PID controller with a more advanced control policy. Some potential ideas being explored are the use of reinforcement learning to learn optimal parameters, and transferring the PID controller to a new neural network and refining the control policy further with learning-based methods.

Simulation

The simulation subsystem is for testing different control algorithms in a virtual environment before deploying them on the UR5e robot. The 3D simulator that we plan to use is Gazebo, a common robotics simulator. The environment, the robot, and the gripper will be modeled and imported as URDF files. The environment model will emulate the containers and shelves that will be placed in the real world. We want to simulate picking up a container placed at a predefined 3D location on the shelf, planning a trajectory from the shelf to the cooking pot, and trying out different dispensing control strategies. We will then use ROS on a computer with a real-time kernel to test these behaviors on the real-world robot.

If it is difficult to model the environment accurately, then we may simplify the system in simulation but perform extensive testing in the real world until we come up with robust control strategies.