Progress Review 8

GUI

- Mission control GUI implemented with PyQt5

- Displays:

- Agent status: active, battery, feedback frequency, IP

- Task allocator visualization

- High-level system metrics: # active agents, # tasks completed, # victims found

- Mission timer

- User controls: start, stop, homing

- Integrating with system

- Changed the architecture so the task allocator is wrapped in a new MissionCommander object

- Getting battery, feedback frequency

- Challenge: working around PyQt GUI design quirks

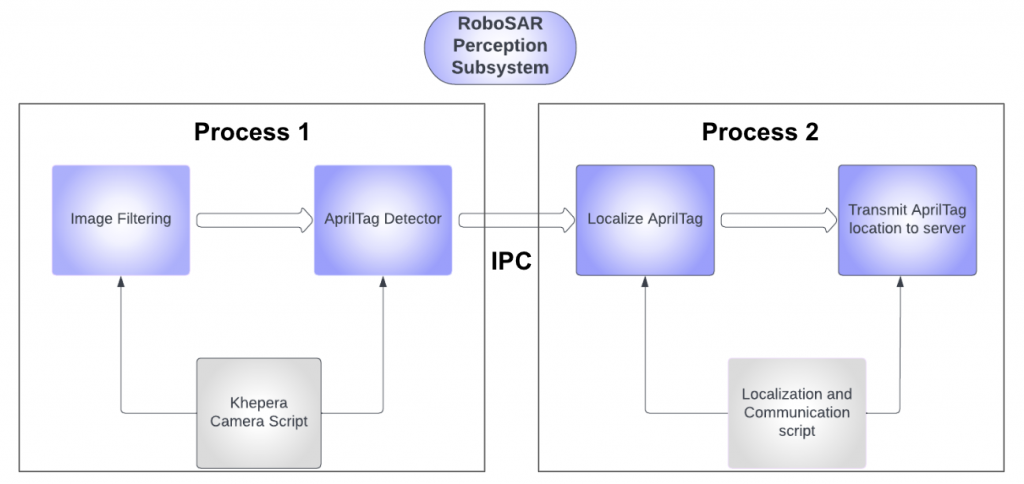
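The fields shown in the GUI can be thought of as a small data model that the widgets read from. The following is a minimal sketch of such a model, not the project's actual code: `AgentStatus`, `format_mission_time`, `summarize`, and all field names are hypothetical, and the feedback frequency is assumed to be derived from message arrival times.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AgentStatus:
    """One row of the agent status panel (all field names are assumptions)."""
    name: str
    ip: str
    active: bool = False
    battery_pct: float = 0.0
    # Arrival times, in seconds, of recent feedback messages from this agent.
    feedback_stamps: List[float] = field(default_factory=list)

    def feedback_hz(self) -> float:
        """Mean feedback rate over the stored stamps; 0.0 with fewer than two."""
        if len(self.feedback_stamps) < 2:
            return 0.0
        span = self.feedback_stamps[-1] - self.feedback_stamps[0]
        if span <= 0:
            return 0.0
        return (len(self.feedback_stamps) - 1) / span


def format_mission_time(elapsed_s: float) -> str:
    """Render elapsed seconds as MM:SS for the mission timer."""
    minutes, seconds = divmod(int(elapsed_s), 60)
    return f"{minutes:02d}:{seconds:02d}"


def summarize(agents: Dict[str, AgentStatus],
              tasks_completed: int,
              victims_found: int) -> Dict[str, int]:
    """Collect the high-level metrics shown at the top of the GUI."""
    return {
        "active_agents": sum(1 for a in agents.values() if a.active),
        "tasks_completed": tasks_completed,
        "victims_found": victims_found,
    }
```

Keeping this model free of Qt types would let a PyQt5 widget layer poll or subscribe to it, so the metrics logic can be checked without starting the GUI.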

Perception

- Goal of the subsystem: detect victims (represented by AprilTags for now) and report their relative pose to the server for localisation

- Runs on every robot in the fleet!

- Able to detect AprilTags at distances under 4 m

- Challenges:

- Image capture at sufficient resolution has a latency of about 1 s

- Unlike all our other software, the perception software can't be mass-deployed across the fleet because of camera calibration

- Associating AprilTag detections with our dynamic pose graph! Perception x MRSLAM subsystem!

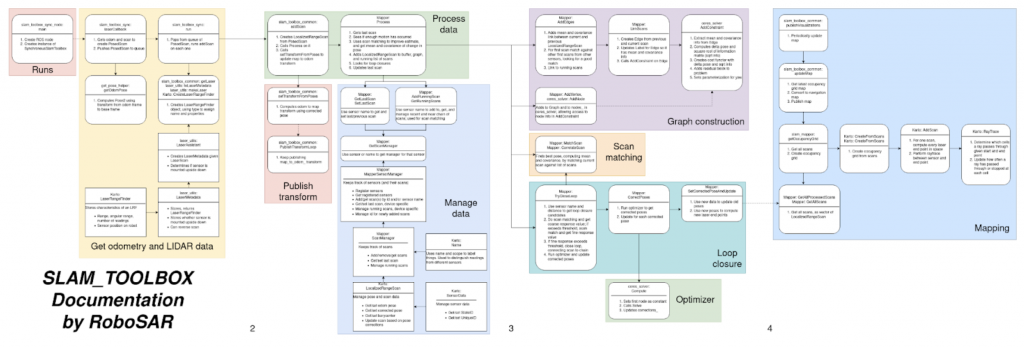
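One way to approach the association challenge above is to attach each detection to the pose-graph node closest in time to the image capture, so the tag's world pose follows the node as the graph is re-optimised. The sketch below shows only that timestamp matching. `PoseGraphNode`, `nearest_node_index`, `attach_detection`, and the `max_gap_s` threshold are hypothetical names, and it assumes the robot pose at capture time is close to the matched node's pose.

```python
import bisect
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

RelPose = Tuple[float, float, float]  # x, y, yaw of the tag in the robot frame


@dataclass
class PoseGraphNode:
    stamp: float  # time the node was added to the graph, in seconds
    # Tag observations attached to this node as (tag_id, relative pose).
    detections: List[Tuple[int, RelPose]] = field(default_factory=list)


def nearest_node_index(stamps: List[float], capture_stamp: float) -> int:
    """Index of the stamp closest to capture_stamp; stamps must be sorted."""
    if not stamps:
        raise ValueError("pose graph has no nodes")
    i = bisect.bisect_left(stamps, capture_stamp)
    if i == 0:
        return 0
    if i == len(stamps):
        return len(stamps) - 1
    before, after = stamps[i - 1], stamps[i]
    return i if (after - capture_stamp) < (capture_stamp - before) else i - 1


def attach_detection(nodes: List[PoseGraphNode], tag_id: int, rel_pose: RelPose,
                     capture_stamp: float, max_gap_s: float = 0.5) -> Optional[int]:
    """Attach a detection to the nearest node in time.

    Returns the node index, or None when no node lies within max_gap_s of the
    capture time (the detection is then dropped rather than mis-associated).
    """
    idx = nearest_node_index([n.stamp for n in nodes], capture_stamp)
    if abs(nodes[idx].stamp - capture_stamp) > max_gap_s:
        return None
    nodes[idx].detections.append((tag_id, rel_pose))
    return idx
```

Because of the roughly 1 s capture latency, the timestamp used here would need to be the capture time rather than the time the detection arrives at the server.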

MRSLAM

- Initial research on how to extend slam_toolbox to support multi-robot SLAM

- Understanding slam_toolbox codebase and open issues

- Working prototype in Gazebo sim

- Added support for multiple lasers

- Since the robots will start in the same room, their relative initial positions will be known

- Build single pose-graph for all agents

- Visualization of built graph + constraints

- Loop closure tests look successful
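The idea of seeding a single pose graph from known initial positions can be illustrated as follows. This is a simplified 2D sketch, not slam_toolbox's API or internals: `SharedPoseGraph`, `compose`, and the method names are hypothetical, and no graph optimisation is performed.

```python
import math
from typing import Dict, List, Tuple

Pose2D = Tuple[float, float, float]  # x, y, yaw in radians
NodeKey = Tuple[str, int]            # (robot name, node index)


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Express pose b, given in frame a, in a's parent frame."""
    ax, ay, ath = a
    bx, by, bth = b
    c, s = math.cos(ath), math.sin(ath)
    yaw = math.atan2(math.sin(ath + bth), math.cos(ath + bth))
    return (ax + c * bx - s * by, ay + s * bx + c * by, yaw)


class SharedPoseGraph:
    """One graph for all agents, with nodes keyed by (robot, index)."""

    def __init__(self) -> None:
        self.nodes: Dict[NodeKey, Pose2D] = {}
        self.edges: List[Tuple[NodeKey, NodeKey, Pose2D]] = []
        self._last: Dict[str, int] = {}

    def add_robot(self, robot: str, initial_pose: Pose2D) -> None:
        """Seed a robot's first node with its known pose in the shared frame."""
        self.nodes[(robot, 0)] = initial_pose
        self._last[robot] = 0

    def add_odometry(self, robot: str, delta: Pose2D) -> NodeKey:
        """Append a node reached by moving `delta` from the robot's last node."""
        prev = (robot, self._last[robot])
        cur = (robot, prev[1] + 1)
        self.nodes[cur] = compose(self.nodes[prev], delta)
        self.edges.append((prev, cur, delta))
        self._last[robot] = cur[1]
        return cur

    def add_loop_closure(self, a: NodeKey, b: NodeKey, rel: Pose2D) -> None:
        """Add a constraint between any two nodes, possibly of different robots."""
        self.edges.append((a, b, rel))
```

Because every robot's first node is already in the shared frame, scans from different robots land in one map from the start, and inter-robot loop closures become ordinary edges in the same graph.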

Navigation

Lazy Traffic Controller

- Our new centralised multi-robot controller

- Converts 2D paths into feasible, collision-free velocities for each robot in the fleet

- Lazy:

- Each robot has a narrow neighbourhood used for collision detection

- Avoid collision checking unless something detected in this neighbourhood

- Collision avoidance with velocity obstacles

- Traffic:

- Couple preferred velocities with attractive and repulsive forces to produce swarm behaviours

- Controller:

- Generates velocities for the robots to execute!

- Initial research into velocity obstacles

- Model Khepera robots as discs

- Transform these discs into the velocity space of each robot: this is a velocity obstacle

- For each robot, choose the feasible velocity outside these velocity obstacles that is closest to its preferred velocity
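The lazy check and the velocity-obstacle selection described above can be sketched as follows. This is a sampling-based illustration under stated assumptions, not the controller's actual implementation: the function names, the finite time horizon, the candidate sampling grid, and the fall-back to stopping are all hypothetical choices.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def in_velocity_obstacle(p_a: Vec, v_a: Vec, p_b: Vec, v_b: Vec,
                         combined_radius: float, horizon_s: float) -> bool:
    """True if discs A and B come closer than combined_radius within horizon_s
    when A moves at v_a and B keeps moving at v_b."""
    px, py = p_b[0] - p_a[0], p_b[1] - p_a[1]  # position of B relative to A
    wx, wy = v_a[0] - v_b[0], v_a[1] - v_b[1]  # velocity of A relative to B
    ww = wx * wx + wy * wy
    # Time of closest approach, clamped to [0, horizon_s].
    t = 0.0 if ww == 0.0 else min(max((px * wx + py * wy) / ww, 0.0), horizon_s)
    return math.hypot(px - wx * t, py - wy * t) < combined_radius


def choose_velocity(p_a: Vec, v_pref: Vec, neighbours: List[Tuple[Vec, Vec]],
                    robot_radius: float, neighbourhood_radius: float,
                    max_speed: float, horizon_s: float = 3.0,
                    n_speeds: int = 5, n_headings: int = 36) -> Vec:
    """Pick the sampled velocity closest to v_pref outside all velocity obstacles.

    neighbours holds (position, velocity) pairs for the other robots.
    """
    # Lazy step: skip collision checking when nothing is inside the neighbourhood.
    near = [(p, v) for p, v in neighbours
            if math.hypot(p[0] - p_a[0], p[1] - p_a[1]) <= neighbourhood_radius]
    if not near:
        return v_pref

    # Candidates: the preferred velocity, stopping, and a polar grid of velocities.
    candidates: List[Vec] = [v_pref, (0.0, 0.0)]
    for i in range(1, n_speeds + 1):
        speed = max_speed * i / n_speeds
        for k in range(n_headings):
            ang = 2.0 * math.pi * k / n_headings
            candidates.append((speed * math.cos(ang), speed * math.sin(ang)))

    safe = [v for v in candidates
            if not any(in_velocity_obstacle(p_a, v, p, vb, 2.0 * robot_radius, horizon_s)
                       for p, vb in near)]
    if not safe:
        return (0.0, 0.0)  # simplification: stop when no sampled velocity is safe
    return min(safe, key=lambda v: math.hypot(v[0] - v_pref[0], v[1] - v_pref[1]))
```

In this sketch the preferred velocity would come from the path follower, optionally adjusted by the attractive and repulsive terms. A centralised controller would call `choose_velocity` once per robot per control cycle with the current state of the rest of the fleet.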