Functional Requirements

| Mandatory M.F.0. System shall identify and localize victims in environment using fiducial markers within desired rescue time M.F.1. System shall localize robots with a known map of the environment M.F.2. System shall dynamically allocate tasks to agents in the fleet for optimizing time required for the search M.F.3. System shall ensure simultaneous navigation of agents which have been allocated a task M.F.4. System shall adapt to changes in the fleet size like addition of agents by the users or loss of robots during operation. | Desirable D.F.0: Mapping of unknown environment and search shall happen simultaneously during operation D.F.2: Robots shall manipulate movable obstacles to clear the terrain for motion D.F.3 : Two or more robots shall collectively manipulate larger movable obstacles to clear the terrain for motion D.F.4. : Server shall use Flocking/Formation control to navigate the robots in tight spaces D.F.5: Robots shall be able to navigate dynamic obstacles |

Non- Functional Requirements

N.F.0. : Visualisation of environment, locations of humans and robot agents during operation N.F.1. : Cost of the system N.F.2: Maintenance of the system N.F.3: API to add/subtract robot agents during operation N.F.4: Robots return home after the search |

Performance Requirements

| M.P.0: System will detect 75% of victims in the environment. M.P.1. System will localize victim position within 20cm. M.P.2. System will finish searching environment 20% faster than the naive algorithm for a typical indoor environment. M.P.3. System will have downtime of less than 5s for busy agents. M.P.4. System will update agent status for user at 1Hz frequency. M.P.5. System will handle at least 3 agents in the fleet. M.P.6. System will search 70m^2 within 3 minutes |

Functional Architecture

Functional Architecture: Server

Functional Architecture: Agents

Cyberphysical Architecture : Agents

Cyberphysical Architecture : Server

System Design

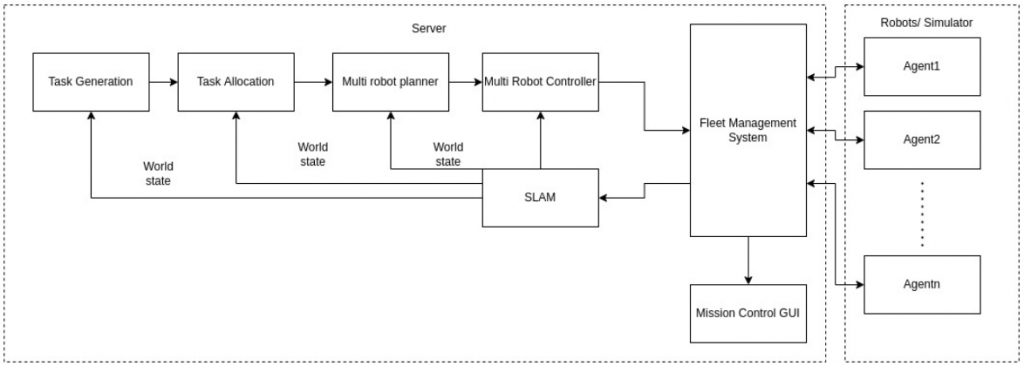

The RoboSAR system is centralized, with a central server running the high level algorithms, and issuing commands to the robots over WiFi. The diagram above shows a high level overview of the system design:

- The robots transmit sensor data over WiFi to the server. This includes wheel encoders, LIDAR scans, and AprilTag detections

- The server uses this information to construct a map of the environment, and determine the poses of the AprilTags and robots on the map

- The map is used to generate tasks. There are two types of tasks:

- Frontiers: Areas to explore, extending the current map

- Coverage: Areas to exploit, to find AprilTags in the environment

- Task allocation determines what robot should do what task, taking into account the value of each task, and the each robot’s distance to that task

- The multi robot planner uses the robot’s pose, its assigned task, and the map of the environment to plan a motion path for each robot

- The controller uses the path assigned to each robot to compute linear and angular velocities needed to accomplish the path

- The fleet management system transmits the velocity commands for the robots to execute, and receives their sensor data for the server to process

The system is a standar laptop running several ROS nodes, connected to a WiFi router. The robots are Khepera IV robots, outfitted with LRF modules for 2D LIDAR scans, and battery packs to support longer operations.

Subsystem Design

SLAM

The Multi-Robot SLAM system, called MRSLAM, is responsible for generating a map, and estimating the poses of the robots and the AprilTags within that map. It uses wheel encoder values to estimate change in pose for the robots, then this estimate is refined using laser scan matching of the LIDAR scans. A single pose graph is constructed for all the robots in the fleet using the sensor data, and then this pose graph is used to construct a map. The map and the robot poses are necessary for all other subsystems to function.

Task Generation

The map is used to find frontiers, which is where the map “ends.” These areas are characterized by free cells touching unknown cells in the occupancy grid map. These frontiers are found using an RRT. While most of the tree is inside free space, if the tree expands an runs into unknown space, this is flagged as a frontier. Filtering is done to ensure frontiers are large, stable, and reachable. The map is also used to generate coverage tasks; large areas with good visibility in the map are found and highlighted. These areas are useful for robots to search for victims, as they provide high information gain when searching for AprilTags in the environment.

Task Allocation

Once tasks have been generated, the task allocator determines an efficient assignment of robots to tasks. The task allocator takes into account distance between a robot and its assigned task, the potential information gain at that task, and several other factors to form the Hierarchical Information Gain Heuristic (HIGH). HIGH has been demonstrated to outperform greedy allocation based on distance between robots and its assigned tasks, which is the standard procedure in unknown environments.

Navigation

The navigation subsystem takes in a robot poses, their assigned tasks, as well as a map of the environment to generate a path from the robot to its task. The controller then computes control signals to track this trajectory. This subsystem ensures that the agent is able to navigate towards its assigned task without colliding with the environment or with other robots.

Perception

The perception subsystem run on each robot, and takes in sensor data from the robot’s camera. It filters the images and constantly runs an AprilTag detection algorithm, and reports sightings to the server.

Fleet Management System

The fleet management system (FMS) communicates with the agents and monitors their status. It is responsible for establishing socket connections between the server and agents. It is located on the central server. FMS takes in agent configuration and current members of the agent class (such as agent pose, battery, heartbeat etc) as input and is given the intelligence to make various decisions on behalf of the agents such as when to avoid obstacles, when to remove/add an agent etc based on these inputs. If an agent is lost or added, this information will be relayed to the rest of the system through the FMS.