Weeding Manipulator Subsystem

Linear Stage Motion Demo

Angular Motion Demo

The two videos above show manual commands sent to the manipulator and the accuracy of each axis in positioning the tool.

Our weeding manipulator is designed for precision and speed, effectively directing the end effector to targeted areas with two degrees of freedom. This is achieved through a linear carriage powered by a BLDC motor and a hinge actuated by a linear actuator, providing full ground coverage.

Design and Construction

- Frame and Mounting: The subsystem is securely mounted on a Husky robot using a robust aluminum frame bolted onto a structural steel plate. The frame extends 8 inches above the Husky, ensuring sufficient workspace and an optimal field of view for the downward-facing camera. A cable tray accommodates cable flexibility as the carriage moves.

- Actuation Mechanism: A steel cable system, chosen for its high strength-to-weight ratio, drives the linear carriage. This minimizes oscillating mass and improves response times. The cable is powered by a custom-machined aluminum pulley connected to a BLDC motor, with tension ensuring smooth operation. A secondary pulley redirects the cable for optimal alignment.

Control System

- ROS Integration: A single ROS node controls the manipulator, including laser activation. The BLDC motor uses a rotary encoder for relative positioning, complemented by a homing routine to map encoder counts to absolute distances.

- Precision Control: Both actuators are managed by a proportional-derivative controller with static friction compensation. The system detects when the actuator is stationary and applies additional force to reach the desired position. This compensation adapts geometrically for the BLDC motor near workspace limits, overcoming increased static friction.
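The control law described above can be sketched as follows. This is a minimal illustration, not the project's controller: the gains, thresholds, and the `PDWithStiction` name are hypothetical, and the geometric adaptation near the workspace limits is left out.

```python
from dataclasses import dataclass


@dataclass
class PDWithStiction:
    """PD controller with a simple static-friction (stiction) boost.

    All gains and thresholds are hypothetical placeholders.
    """
    kp: float = 2.0              # proportional gain
    kd: float = 0.1              # derivative gain
    stiction_boost: float = 0.5  # extra effort added when the actuator is stuck
    vel_eps: float = 1e-3        # |velocity| below this counts as stationary
    pos_tol: float = 1e-3        # |error| below this counts as arrived

    def command(self, target: float, position: float, velocity: float) -> float:
        error = target - position
        # Derivative on measurement: for a constant target, d(error)/dt = -velocity.
        effort = self.kp * error - self.kd * velocity
        stationary = abs(velocity) < self.vel_eps
        if stationary and abs(error) > self.pos_tol:
            # Push toward the target to break static friction.
            effort += self.stiction_boost if error > 0 else -self.stiction_boost
        return effort
```

The boost is applied only while the actuator is stationary and still short of the target, so it does not disturb the PD response once the carriage or hinge is moving.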

Performance Highlights

- Accuracy: The manipulator system accurately targets small weeds (~1 cm²) within its workspace, achieving a 90% success rate in tests. Even under challenging conditions, such as varied weed sizes and placements, the system maintained an 80% accuracy rate.

- Reliability: All failures during testing were attributed to detection limitations or workspace boundaries, not the manipulator system itself.

An additional video here demonstrates the angular motion accuracy in a slight variation.

The video below demonstrates manipulator accuracy. It combines the manipulator accuracy test, the eye-safe laser functional test, and the downward-facing detection accuracy test, and is part of the SVD records.

Weeding Mechanism

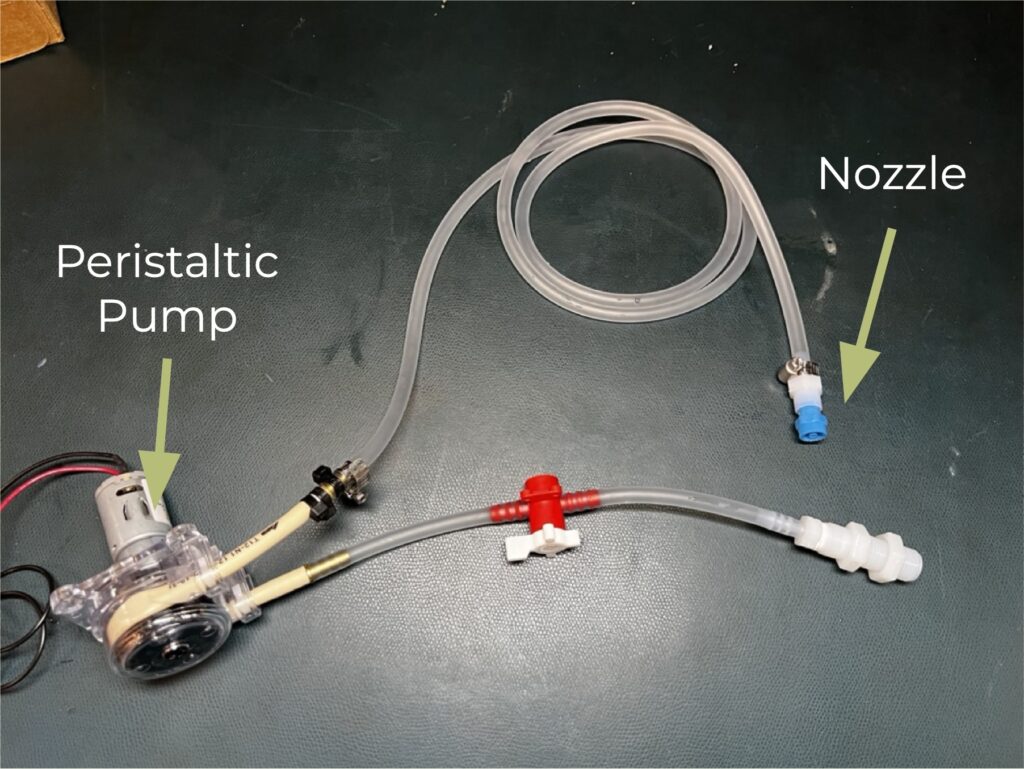

The mechanism will be capable of both chemical spraying and laser weeding, with designs allowing for easy interchangeability. The spraying system uses a peristaltic pump and atomizing nozzle, while the laser system involves a custom PCB and diode laser with heatsink.

The dual-solution approach allows for flexibility and adaptability to different weeding scenarios. Precision spraying offers simplicity, whereas laser weeding provides an environmentally friendly solution. The trade study led to this dual approach over singular methods to capitalize on the strengths of both systems. In the Fall Semester, having proven the laser’s effectiveness, we focused on integrating the laser control with our system.

Laser Mechanism

Traditional weeding methods in agriculture are labor-intensive, environmentally harmful, or require bulky machinery. Our system builds on moss™’s autonomous mobile platform by introducing a lightweight laser-based weeding tool. This innovative approach avoids the drawbacks of conventional tools, ensuring compatibility with the existing autonomous system.

Design Choices

- Laser Diodes vs. CO2 Lasers: Unlike CO2 lasers, which are inefficient (≈20% efficiency) and require massive power (e.g., 22.5 kW for Carbon Robotics’ 30-laser tractor system), our solution leverages laser diodes. These diodes offer a much higher efficiency range (≈50–80%) and are compact, eliminating the need for additional systems like vacuum pumps.

- Laser Specification:

  - Full-Power Laser: A 5 W, 447 nm laser diode was chosen because safety equipment for this wavelength is more cost-effective than for longer wavelengths.

  - Eye-Safe Laser: A 515 nm laser diode operating below CMU’s safety limit (4.99 mW) ensures safe operation.

Safety Measures

Developing a laser system involves significant safety precautions:

- Compliance with CMU’s Laser Safety Program.

- Use of laser safety goggles tailored to the chosen wavelengths.

- Targeting strategies informed by research, such as focusing on the weed’s meristem for optimal damage.

Results

- Eye-Safe Laser: Successfully tested to meet SVD requirements for functionality and power limits.

- Full-Power Laser: Demonstrated effective weed damage, meeting the SVD requirement for killing weeds.

Performance Highlight

The system effectively balances precision and safety, offering a lightweight yet powerful solution for autonomous agricultural weeding.

Effect of full-power laser damage on plants at 50% capability

The video below demonstrates the eye-safe laser in operation. It combines the manipulator accuracy test, the eye-safe laser functional test, and the downward-facing detection accuracy test.

Sprayer Mechanism

Why We Chose a Sprayer

- Reuse of Existing Components: Our sponsor’s system already included a sprayer mechanism, allowing us to repurpose spare parts.

- Ease of Development: Sprayers are simple to build and test, enabling rapid, iterative development.

- Fallback Safety: With limited expertise in laser development, the sprayer provided a reliable backup plan.

Design and Construction

- A herbicide reservoir tank (not shown in figures).

- A peristaltic pump connected to the tank and an atomizing nozzle via hoses.

- A Phidgets module controlling the pump for seamless integration with the manipulator.

The final design ensures herbicide delivery through the nozzle to target weeds efficiently.

Performance Results

The sprayer subsystem was evaluated based on two criteria:

- No Leakage: All runtime tests confirmed a leak-free system.

- Consistent Output: Trials demonstrated uniform spraying performance. The table below shows the mass output results during the SVD and SVD Encore trials:

| Trial | SVD (g) | SVD Encore (g) |
| --- | --- | --- |
| 1 | 6 | 7 |
| 2 | 7 | 7 |
| 3 | 8 | 8 |
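As a quick consistency check on the table above, the mean, range, and spread of each demonstration can be computed directly. The numbers are copied from the table; the choice of summary statistics is ours and not part of the original evaluation.

```python
from statistics import mean, pstdev

# Mass output per trial in grams, copied from the table above.
svd = [6, 7, 8]
svd_encore = [7, 7, 8]

for name, trials in (("SVD", svd), ("SVD Encore", svd_encore)):
    spread = max(trials) - min(trials)
    print(f"{name}: mean={mean(trials):.2f} g, range={spread} g, "
          f"std={pstdev(trials):.2f} g")
```

Both demonstrations average about 7 g per trial, with at most 2 g between the smallest and largest output.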

Weed Perception Subsystem

The perception subsystem plays a critical role in detecting and tracking weeds within the robot’s environment. It ensures fast and reliable results, enabling visual servoing to precisely target weeds.

Deep Learning Model and Data

We fine-tuned the YOLOv8 Segmentation model for weed detection, leveraging approximately 2,000 labeled images with ground-truth segmentation masks. These datasets were collected from:

- Lake Forest Gardens tree nursery (outdoor environment near Pittsburgh).

- Mill 19 testing environment (two distinct camera orientations: forward-facing and downward-facing).

Key Observations:

- Models trained on one perspective had reduced performance when deployed on another, necessitating pre-training on combined datasets followed by fine-tuning for the target orientation.

- Data augmentation techniques (e.g., Gaussian noise and simulated exposure variations) improved robustness against motion blur and auto-exposure inconsistencies.
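The two augmentations named above can be illustrated with a small sketch. It assumes a grayscale image stored as a list of rows of 0-255 intensities; the gain range, the noise sigma, and the `augment` function itself are hypothetical and are not the settings used to train the model. A real training pipeline would normally do this with an array library.

```python
import random


def augment(image, noise_sigma=8.0, gain_range=(0.7, 1.3), rng=None):
    """Return a copy of a grayscale image with an exposure shift and Gaussian noise.

    `image` is a list of rows of 0-255 intensities. The default gain range
    and noise sigma are hypothetical values chosen for illustration.
    """
    rng = rng or random.Random()
    gain = rng.uniform(*gain_range)  # one global gain simulates an exposure change
    out = []
    for row in image:
        new_row = []
        for pixel in row:
            value = pixel * gain + rng.gauss(0.0, noise_sigma)  # additive Gaussian noise
            new_row.append(min(255, max(0, round(value))))      # clamp to the valid range
        out.append(new_row)
    return out
```

Passing a seeded `random.Random` makes the augmentation reproducible, which helps when comparing training runs.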

Performance Metrics

Our model met precision and recall benchmarks across environments, as shown in the table below:

| Environment | Precision | Recall | mAP@50%IOU |
| --- | --- | --- | --- |
| FVD Forward-facing | 98% | 91% | 97% |
| FVD Downward-facing | 99% | 95% | 99% |
| Lake Forest Gardens | 80% | 62% | 72% |

FVD Evaluation

During the Functional Verification Demonstration (FVD), detection models excelled:

- High precision and recall enabled consistent weed detection and tracking.

- Visual servoing achieved a success rate exceeding 80%, surpassing key performance metrics.

Localization Subsystem

The localization subsystem achieves two primary goals:

- Weed Localization:

  - Determine weed positions in the robot’s base frame for:

    - Developing a farm-wide weed density map.

    - Providing input cues to the targeting system.

- Robot-to-World Mapping:

  - Track the robot’s position in the world frame for waypoint navigation.

  - Convert weed positions to the world frame for accurate weed map generation.

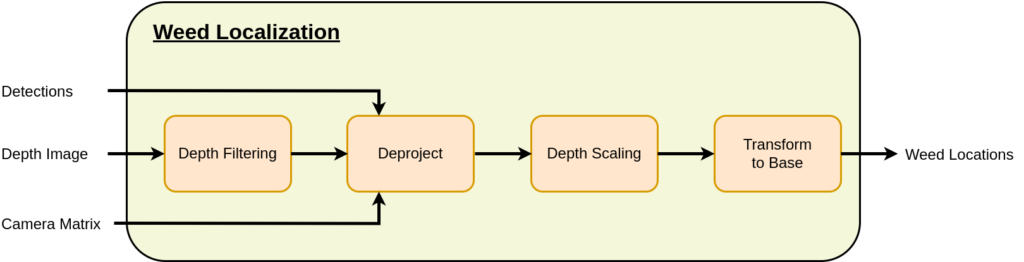

Weed Localization Process

The localization pipeline transforms camera detections into robot-frame coordinates. Key steps:

- Input Data:

  - Detections and depth images from the RealSense D435 camera.

  - A pre-calibrated camera matrix.

- Processing:

  - Filter out invalid depth values (0 readings).

  - Smooth depth images to remove noise.

  - De-project detections into camera-frame coordinates using the camera matrix.

  - Transform camera-frame coordinates to robot-frame coordinates using the Camera-to-Base Transformation matrix.
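The processing steps above can be sketched with a standard pinhole camera model. This is an illustration under assumptions: the function names, the `(fx, fy, cx, cy)` intrinsics tuple, and the nested-list matrix layout are hypothetical, detections are reduced to a single pixel each, and the depth smoothing step is omitted for brevity.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Pinhole de-projection of pixel (u, v) at depth_m into the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def transform(T, point):
    """Apply a 4x4 homogeneous transform (nested lists) to a 3D point."""
    x, y, z = point
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))


def localize(detections, depth, intrinsics, T_base_camera):
    """Map pixel detections to robot-frame points, skipping invalid depth."""
    points = []
    for u, v in detections:
        d = depth[v][u]   # depth image indexed as [row][column], in meters
        if d <= 0:        # a 0 reading marks invalid depth
            continue
        points.append(transform(T_base_camera, deproject(u, v, d, *intrinsics)))
    return points
```

Dropping invalid readings before de-projection keeps zero-depth pixels from collapsing onto the camera origin.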

Validation:

To verify accuracy, ArUco markers with known positions were used as stand-ins for weed detections. The detected positions were compared against the ground-truth coordinates to confirm that the pipeline is reliable.

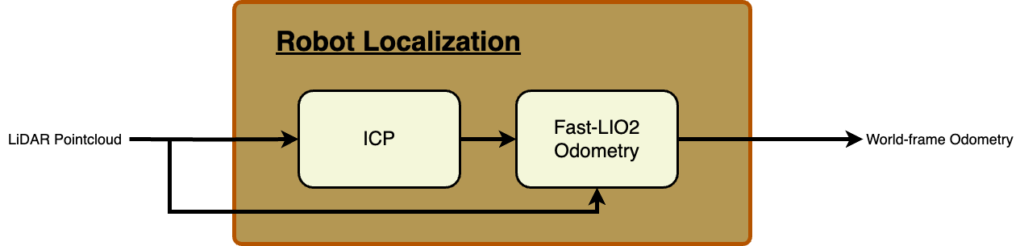

Robot and Weed Mapping in the World Frame

Mapping robot and weed positions to the world frame involved:

- Pointcloud Generation: Using Ouster OS1-64 LiDAR.

- Initialization: Aligning LiDAR pointclouds with a known environmental map via the ICP (Iterative Closest Point) algorithm.

- Odometry Calculation: Leveraging the Fast-LIO2 algorithm to calculate robot motion from LiDAR data.

This integration provided accurate world-frame odometry, enabling waypoint navigation and weed mapping.
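Once world-frame odometry is available, converting a weed position from the robot base frame to the world frame is a rigid-body transform. The sketch below assumes planar motion, so the robot pose reduces to `(x, y, yaw)`; the function name and this 2D simplification are ours, not taken from the project code.

```python
import math


def base_to_world(point_base, robot_pose):
    """Convert a 2D point from the robot base frame to the world frame.

    robot_pose is (x, y, yaw) of the robot in the world frame, yaw in radians.
    """
    px, py = point_base
    x, y, yaw = robot_pose
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate by the robot's heading, then translate by its position.
    return (x + c * px - s * py, y + s * px + c * py)
```

For example, a weed 1 m ahead of a robot at (2, 3) facing along the world y-axis (yaw of π/2) lands at approximately (2, 4) in the world frame.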

Performance Highlights

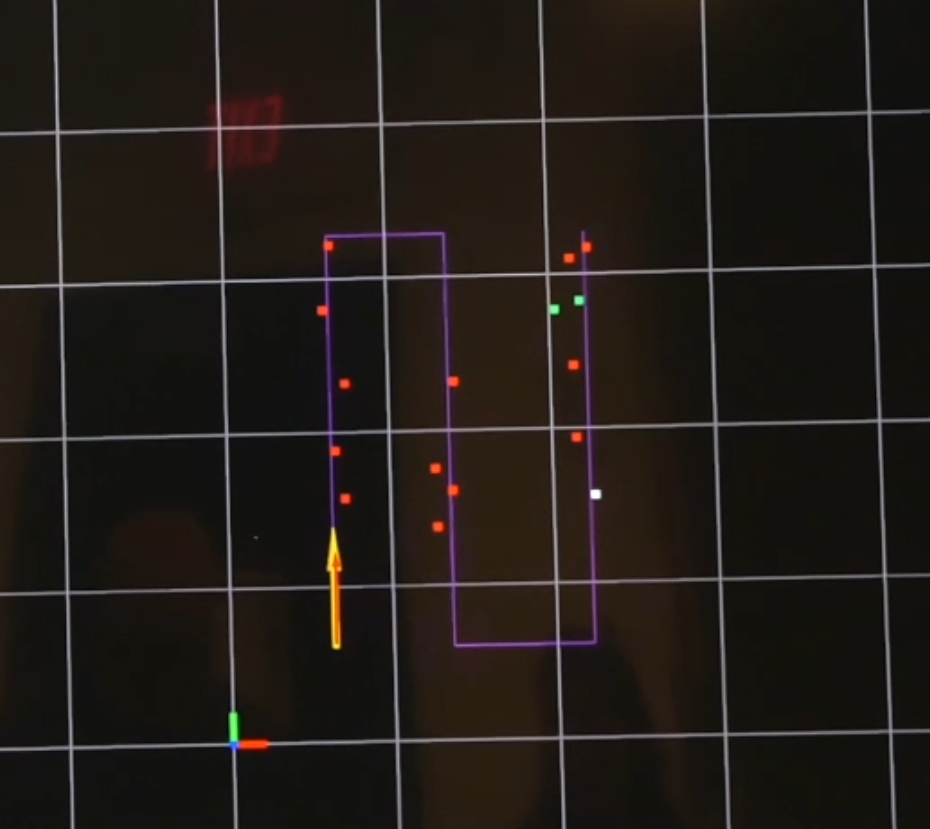

The localization subsystem excelled during the Functional Verification Demonstration (FVD):

- Weed Localization: Accurate world-frame weed positions were showcased using the downward-facing camera.

- Waypoint Navigation: The robot successfully navigated world-frame waypoints and recorded weed removal, allowing for effective planning and mapping.

The weed map below demonstrates the localization subsystem’s performance: killed weeds are shown in red, live weeds in green, and the robot position in yellow.

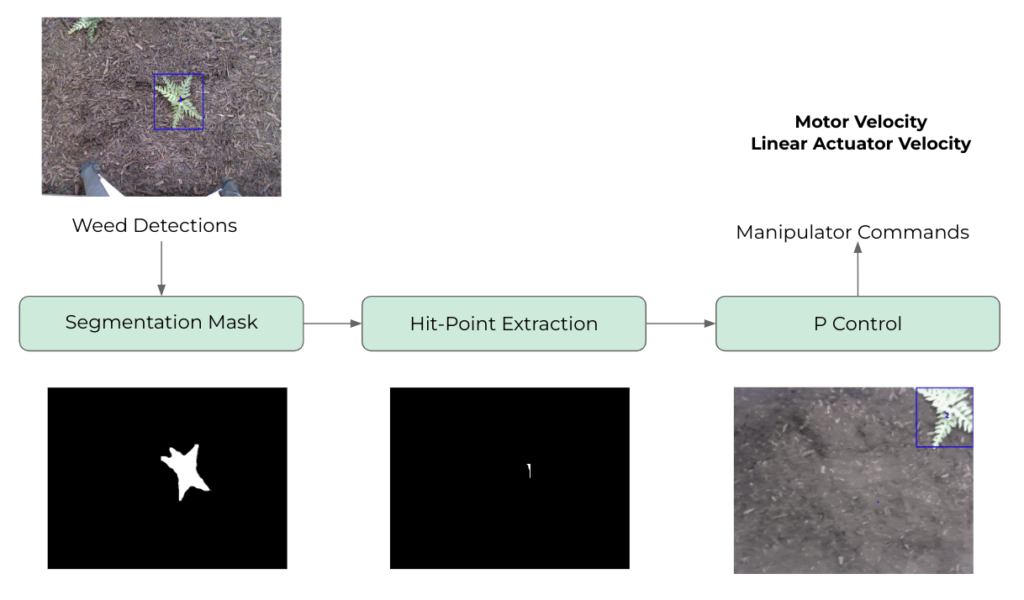

Targeting Subsystem

The Targeting System is a closed-loop control mechanism that uses weed detections from the perception subsystem to precisely align the laser with weeds. By employing visual servoing, it integrates image processing, robotics, and control theory to ensure accurate weed targeting and elimination.

The downward-facing demonstration combines the manipulator accuracy test, the eye-safe laser functional test, and the downward-facing detection accuracy test. The video below shows the result of the implementation.

How does it work?

The Targeting System processes inputs from the downward-facing camera and weed detections to precisely align the laser with the weed’s center using visual servoing. It identifies the exact hit-point by converting the weed’s segmentation mask into a binary image, applying an erosion loop to reduce noise and isolate the central region, and calculating the hit-point by averaging the remaining coordinates. Simultaneously, the system ensures alignment with the laser-pixel using a Proportional Controller (P-controller), which adjusts the manipulator to minimize the error between the hit-point and the laser-pixel. Calibration determines the fixed laser-pixel position, enabling precise and consistent targeting.
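The hit-point computation described above can be sketched in plain Python. This is a minimal illustration rather than the project's implementation: it assumes a binary mask stored as a list of rows, a 3x3 cross-shaped structuring element, and a hypothetical iteration cap. A production version would more likely use an image-processing library.

```python
def erode(mask):
    """One binary erosion pass with a 3x3 cross; pixels outside the image count as 0."""
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            if (mask[r][c] and mask[r - 1][c] and mask[r + 1][c]
                    and mask[r][c - 1] and mask[r][c + 1]):
                out[r][c] = 1
    return out


def hit_point(mask, max_iterations=10):
    """Erode until the next pass would empty the mask, then average what is left."""
    for _ in range(max_iterations):
        eroded = erode(mask)
        if not any(any(row) for row in eroded):
            break                      # keep the last non-empty mask
        mask = eroded
    coords = [(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v]
    if not coords:
        return None                    # empty mask: no hit-point
    mean_r = sum(r for r, _ in coords) / len(coords)
    mean_c = sum(c for _, c in coords) / len(coords)
    return (mean_r, mean_c)            # (row, column) in pixel coordinates
```

Stopping one pass before the mask disappears leaves only the central region, so thin leaves and stray pixels do not pull the averaged hit-point away from the weed's center.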

System Interface

Implemented as a ROS Action Server, the Targeting System provides scalability and modularity. The /kill_weed endpoint accepts a weed’s tracking ID and returns a success or failure status, while streaming real-time feedback. This feedback includes the L2-norm error between the hit-point and the laser-pixel, allowing continuous monitoring of the targeting process.
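The goal, feedback, and result pattern described above can be mimicked without ROS. The sketch below is a plain-Python stand-in, not the actual /kill_weed action definition or any ROS API: a generator yields the L2-norm pixel error as feedback and returns a success flag as the result. The gain, tolerance, step limit, and the toy motion model (each step moves the hit-point by `gain * error`) are all hypothetical.

```python
import math


def servo_to_laser_pixel(hit_point, laser_pixel, gain=0.5, tolerance_px=2.0, max_steps=50):
    """Yield the L2-norm pixel error each step; return True on convergence.

    Toy model: each proportional command moves the hit-point by gain * error
    in the image, standing in for the manipulator and camera.
    """
    hx, hy = hit_point
    lx, ly = laser_pixel
    for _ in range(max_steps):
        ex, ey = lx - hx, ly - hy
        error = math.hypot(ex, ey)   # L2-norm error, reported as feedback
        yield error
        if error <= tolerance_px:
            return True              # success result
        hx += gain * ex              # proportional correction
        hy += gain * ey
    return False                     # failure result: did not converge in time


def run_goal(hit_point, laser_pixel, **kwargs):
    """Drive the generator to completion, collecting feedback and the final result."""
    feedback = []
    steps = servo_to_laser_pixel(hit_point, laser_pixel, **kwargs)
    while True:
        try:
            feedback.append(next(steps))
        except StopIteration as done:
            return done.value, feedback
```

A caller can watch the feedback list shrink toward the tolerance, which mirrors how a client of the action server would monitor the targeting error while the goal is active.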

Performance and Results

During the Functional Verification Demonstration (FVD), the system achieved a targeting error of less than 20 mm, well within the required 200 mm threshold (PR-7). Challenges, such as targeting unreachable weeds and hit-point fluctuations, were addressed by tightening the targetable area and enhancing the erosion cycle, resulting in a stable and reliable targeting process. These improvements ensured outstanding performance during both the FVD and FVD-Encore demonstrations.

Behavior Subsystem

The Behavior Node, humorously nicknamed the “Aladeen Node” after The Dictator, serves as the command center for the autonomous weed-killing robot. It orchestrates navigation, weed detection, and manipulator control, dynamically assigning tasks based on real-time feedback from sensors and systems. Built on the ROS 2 Humble framework, the Behavior System ensures efficient and seamless operation.

Functionality and Architecture

At the core of the system, the Behavior Node processes data from:

- Odometry: Received through /odometry/wf, this data determines the robot’s position for navigation planning.

- Weed Detection: Published through /downward_facing/detections, the camera data is integrated into a live weed map via the WeedMapper library.

- Global Planner: Generates waypoint sequences, enabling the robot to traverse the field while avoiding obstacles.

The Behavior Node operates as a finite state machine with three primary states:

- IDLE: Standby mode, waiting for tasks.

- MOVING: Navigation between waypoints, updating the live weed map.

- KILLING: Halts movement to align with and target weeds using visual servoing.
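The three states above map naturally onto an enum plus a transition function. The state names come from the text; the transition conditions (`has_waypoints`, `reachable_weed`, `kill_done`) are assumptions made for illustration and may differ from the actual Behavior Node logic.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    MOVING = auto()
    KILLING = auto()


def next_state(state, has_waypoints, reachable_weed, kill_done):
    """Return the next state; the transition conditions are assumptions."""
    if state is State.IDLE:
        return State.MOVING if has_waypoints else State.IDLE
    if state is State.MOVING:
        if reachable_weed:
            return State.KILLING      # halt and target the weed
        return State.MOVING if has_waypoints else State.IDLE
    if state is State.KILLING:
        if not kill_done:
            return State.KILLING      # targeting still in progress
        if reachable_weed:
            return State.KILLING      # another weed is within reach
        return State.MOVING if has_waypoints else State.IDLE
    raise ValueError(f"unknown state: {state}")
```

Keeping the transitions in one pure function makes the behavior easy to unit-test without running the robot.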

Dynamic Decision-Making

The system dynamically prioritizes reachable, unattempted weeds and seamlessly transitions between states as needed. If a weed moves out of view, a timeout mechanism prevents delays by reprioritizing tasks. This decision-making approach ensures efficient operation and adaptability in dynamic environments.
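One way to express this prioritization is a filter followed by a nearest-first pick. The sketch below is an assumption-laden illustration: the `Weed` fields, the use of distance as the priority key, and the 2-second timeout are hypothetical and not taken from the Behavior Node.

```python
from dataclasses import dataclass


@dataclass
class Weed:
    tracking_id: int
    distance_m: float        # distance from the tool; a hypothetical priority key
    reachable: bool
    attempted: bool = False
    last_seen_s: float = 0.0


def pick_target(weeds, now_s, timeout_s=2.0):
    """Return the nearest reachable, unattempted, recently seen weed, or None."""
    candidates = [
        w for w in weeds
        if w.reachable and not w.attempted and now_s - w.last_seen_s <= timeout_s
    ]
    return min(candidates, key=lambda w: w.distance_m, default=None)
```

A weed that has not been seen within the timeout simply drops out of the candidate list, so the robot moves on instead of waiting for it to reappear.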

Key Features

- Weed Mapping: Tracks live, killed, and aborted weeds using WeedMapper, publishing maps for real-time monitoring.

- Post-Operation Reporting: Generates a summary of weed statuses for analysis and system optimization.

- Asynchronous Communication: Interfaces with the KillWeed action server, enabling multitasking while targeting actions are in progress.
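The status tracking and post-operation summary can be illustrated with a small stand-in class. This is not the WeedMapper API: the `WeedLog` class, its methods, and the status strings are hypothetical names used only to show the idea.

```python
from collections import Counter
from enum import Enum


class WeedStatus(Enum):
    LIVE = "live"
    KILLED = "killed"
    ABORTED = "aborted"


class WeedLog:
    """Toy status tracker keyed by tracking ID; not the WeedMapper API."""

    def __init__(self):
        self._weeds = {}  # tracking_id -> (world_xy, status)

    def update(self, tracking_id, world_xy, status=WeedStatus.LIVE):
        """Insert a weed or overwrite its position and status."""
        self._weeds[tracking_id] = (world_xy, status)

    def summary(self):
        """Count weeds per status for a post-operation report."""
        counts = Counter(status for _, status in self._weeds.values())
        return {status.value: counts.get(status, 0) for status in WeedStatus}
```

Keying the log by tracking ID means a later status update replaces the earlier entry, so each weed is counted once in the summary.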