PR6 Achieved Results (SVD Encore)

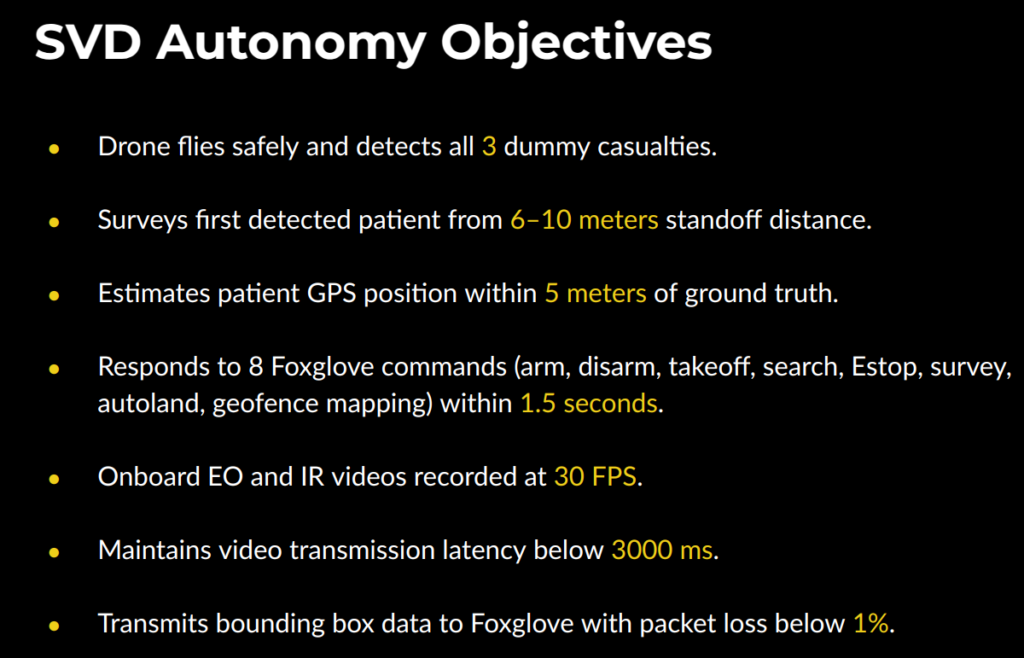

Our team successfully demonstrated a complete autonomous UAV mission, including takeoff, geofence mapping, casualty identification and re-identification, and autonomous landing. The system met the SVD Encore objectives and, compared to SVD, showed improved stability, a wider operational area, and enhanced visualization overlays for mapping and search progress.

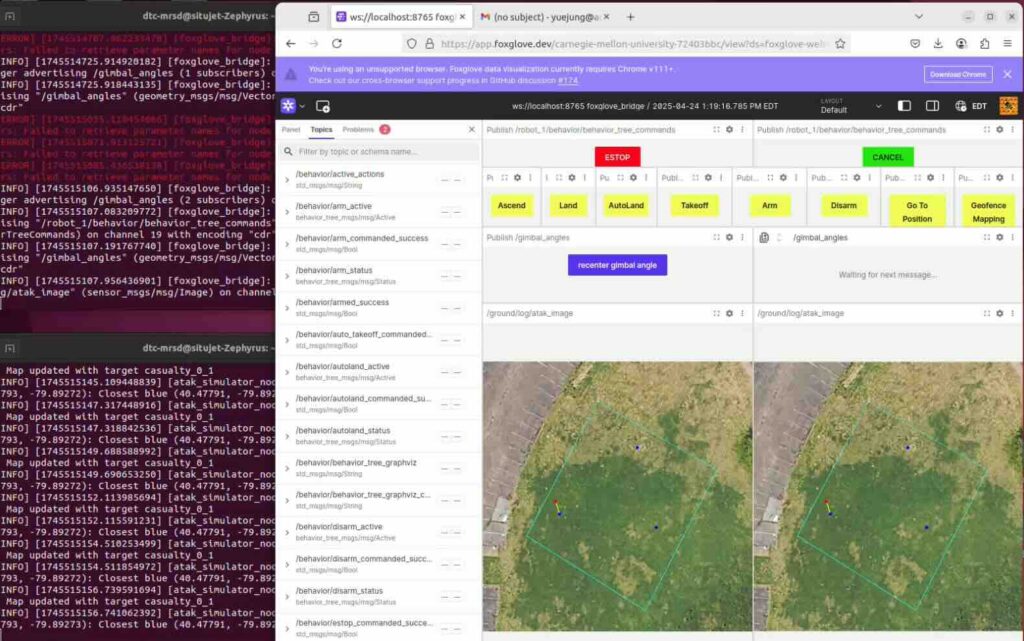

Additional features showcased compared to SVD: Foxglove UI and ATAK visualization.

Issues found:

- One of the victims (Gwen) was missed during the scan. A radio communication issue is the suspected cause, but further validation is needed.

- The demo lacked quantitative real-time performance metrics despite the GUI visualization of geofence and casualty detection.
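
One way to close the metrics gap noted above is to log a timestamp for each casualty detection and summarize the run afterwards. The following is a minimal sketch under stated assumptions, not the team's tooling: `CasualtyResult`, `summarize`, and the field names are hypothetical, and the values in the usage example are placeholders rather than measurements from the demo.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CasualtyResult:
    """One ground-truth casualty and when (if ever) it was detected."""
    name: str                       # hypothetical label for the casualty
    detected_at_s: Optional[float]  # seconds since takeoff; None if missed


def summarize(results: List[CasualtyResult]) -> dict:
    """Compute recall, mean time-to-detect, and the list of missed casualties."""
    found = [r for r in results if r.detected_at_s is not None]
    missed = [r.name for r in results if r.detected_at_s is None]
    recall = len(found) / len(results) if results else 0.0
    mean_time = (
        sum(r.detected_at_s for r in found) / len(found) if found else None
    )
    return {
        "recall": recall,
        "mean_time_to_detect_s": mean_time,
        "missed": missed,
    }


if __name__ == "__main__":
    # Placeholder values for illustration only; not data from the demo.
    run = [
        CasualtyResult("casualty_a", 40.0),
        CasualtyResult("casualty_b", 80.0),
        CasualtyResult("casualty_c", None),
    ]
    print(summarize(run))
```

A summary like this could be shown next to the GUI overlays so that a missed casualty appears as a number as well as a gap on the map.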

PR5 Achieved Results

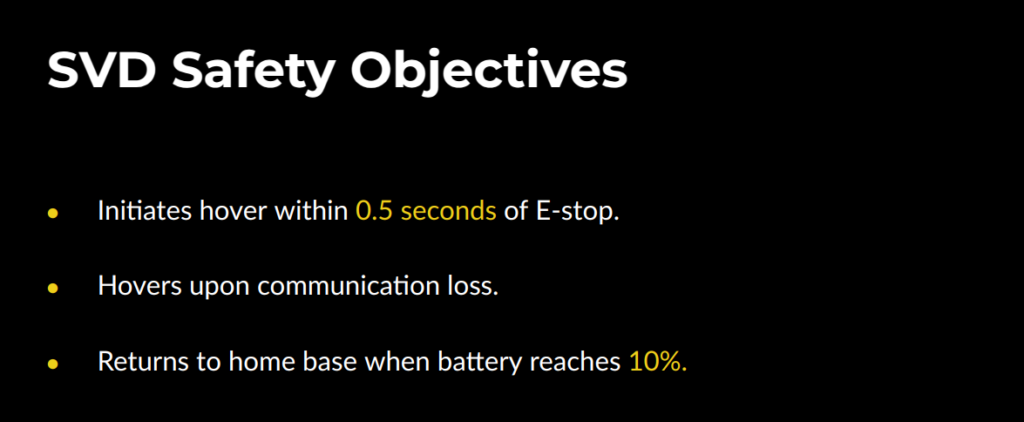

Our team executed a successful autonomous UAV demonstration that included full victim detection, autonomous navigation, and partial triage behaviors. All three casualty targets were detected. We also demonstrated the safety behaviors: hover on e-stop, manual override, hover on geofence breach, hover on potential inter-UAV conflict, and hover on communication loss. The system performed each of these as intended, clearly demonstrating autonomous flight and the key capabilities.
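
The safety behaviors listed above amount to a small decision rule that maps fault flags to a flight mode. The sketch below illustrates that idea only. The names `Mode`, `SafetyFlags`, and `select_mode` are hypothetical, and the priority order (manual override first, then any hover trigger) is an assumption, not a description of the flight stack used in the demo.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    MISSION = "mission"  # continue the autonomous mission
    HOVER = "hover"      # hold position until the fault clears
    MANUAL = "manual"    # pilot has taken control


@dataclass
class SafetyFlags:
    estop: bool = False
    manual_override: bool = False
    geofence_breach: bool = False
    deconflict_risk: bool = False
    comms_lost: bool = False


def select_mode(flags: SafetyFlags) -> Mode:
    """Pick the flight mode from the current safety flags.

    Assumed priority: a manual override always wins; otherwise any
    remaining fault puts the vehicle into hover.
    """
    if flags.manual_override:
        return Mode.MANUAL
    if (
        flags.estop
        or flags.geofence_breach
        or flags.deconflict_risk
        or flags.comms_lost
    ):
        return Mode.HOVER
    return Mode.MISSION
```

Keeping the rule in one pure function makes each trigger easy to unit-test on the bench before a flight test.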

Issues found:

- The triage orbital behavior was correct, but the absence of a live camera feed reduced interpretability.

- No analysis or validation was provided to confirm goal completion.

Pose Estimation on Ground Robot — Experiment Result

https://drive.google.com/file/d/11FOfW6hk6HXlVpGJ5pP-KQFuSvQV5Szh/view?usp=sharing

Deployed on a ground robot, this algorithm provides the same quantitative measurement that would be used in the aerial context, depending on the pose of the person. On level ground, standing targets 15 meters away were detected and their poses accurately extracted. We treat this as a viable heuristic suggesting that the aerial component, viewing a person lying down, will likely perform similarly.

PR4 Component Testing

Inter-UAV Deconfliction

Patient Detection Demo

https://drive.google.com/file/d/1Nanxn3Ck5MWdhX6B4VPADjgThlq42yNg/view?usp=sharing

Building on our prior research with our in-house perception algorithms, we validated that the aerial component of our project is viable. With a drone at an altitude of 18 meters, we were still able to extract a stable pose estimate and bounding box for a moving ground target, exceeding our performance requirements.

3D Pose Detection Demo — RGB Camera

https://drive.google.com/file/d/1W3jcaDZW4pEALWbgKcMEjYFC-4o_aSWp/view?usp=sharing

Similar to the above, but with the pose extracted in the three-dimensional frame rather than as a two-dimensional projection. This will be critical for patient pose and localization within the larger teaming context.

3D Pose Detection Demo — Thermal Camera

https://drive.google.com/file/d/1FbSXCte49-OYUwo004bbGJAXdw64GNl-/view?usp=sharing

An experimental study of the above using our FLIR camera. Although thermal imaging is not part of our existing performance metrics, we were tasked with evaluating our existing algorithm's potential in this domain, as it will prove critical for the DARPA Triage Challenge night runs. The estimation is unstable but semi-viable: detections are intermittently stable, to a degree that could be filtered.
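
To illustrate what filtering intermittent detections could look like, the sketch below applies an M-of-N sliding-window vote to per-frame detection flags. It is a hedged example of one common technique, not the filter used in our pipeline. The class name `DetectionDebouncer` and the `window` and `min_hits` defaults are assumptions chosen for illustration.

```python
from collections import deque


class DetectionDebouncer:
    """Report a target as present only if it was detected in at least
    `min_hits` of the last `window` frames (an M-of-N vote)."""

    def __init__(self, window: int = 5, min_hits: int = 3) -> None:
        if not 0 < min_hits <= window:
            raise ValueError("require 0 < min_hits <= window")
        self._min_hits = min_hits
        # deque with maxlen drops the oldest frame automatically
        self._hits = deque(maxlen=window)

    def update(self, detected: bool) -> bool:
        """Add the latest per-frame result and return the filtered state."""
        self._hits.append(bool(detected))
        return sum(self._hits) >= self._min_hits


if __name__ == "__main__":
    # Hypothetical per-frame detector outputs with dropouts.
    frames = [True, False, True, True, False, False, False, False]
    debouncer = DetectionDebouncer(window=5, min_hits=3)
    print([debouncer.update(f) for f in frames])
```

A larger `window` suppresses more flicker at the cost of a slower response when the target appears or leaves the frame, so both values would need tuning against recorded thermal footage.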

GUI

PR3 Component Testing

GUI

PR2 Component Testing

Gimbal Lock-on

https://drive.google.com/file/d/17iAl945GJU6922liOV_KRBjE58mtjINb/view?usp=sharing

Planner & GeoFence

GUI

PR1 Component Testing

ROS2 Integration