Perception Subsystem

The Perception Subsystem performs five functions: pepper segmentation, coarse pose estimation, fine pose estimation, peduncle segmentation, and peduncle point-of-interaction (POI) determination.

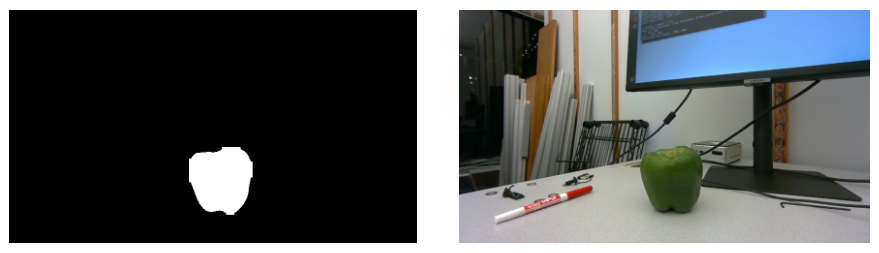

Image Acquisition and Pepper Segmentation

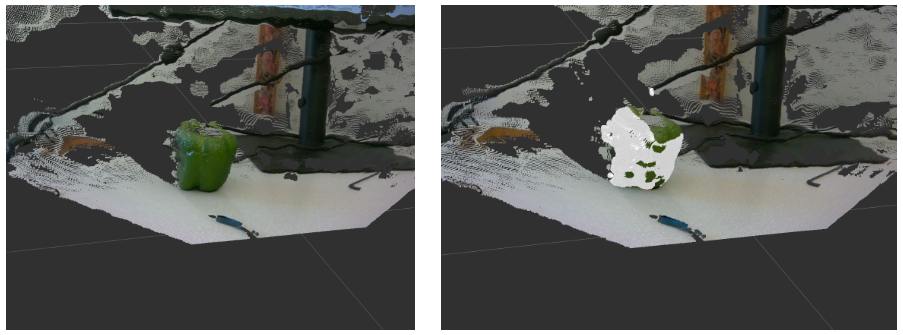

The system uses a RealSense D405 camera for primary image acquisition. A YOLOv8 instance segmentation model separates peppers from the rest of the scene. After segmentation, the system selects the pepper closest to the camera, extracts its mask, and combines the mask with the depth image to generate a three-dimensional point cloud. The centroid of the target pepper is then computed from this point cloud.
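The mask-to-centroid step can be sketched as follows. This is a minimal illustration rather than our production code: it assumes a standard pinhole camera model, a depth image already converted to meters, and boolean masks. The function names and the intrinsics parameters (`fx`, `fy`, `cx`, `cy`) are hypothetical, and "closest" is taken here to mean the smallest Euclidean distance from the camera to the mask centroid.

```python
import numpy as np

def mask_to_point_cloud(depth_m, mask, fx, fy, cx, cy):
    """Deproject masked depth pixels into camera-frame 3D points (pinhole model)."""
    valid = mask.astype(bool) & (depth_m > 0)  # ignore pixels with no depth reading
    v, u = np.nonzero(valid)                   # pixel rows (v) and columns (u)
    z = depth_m[v, u]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.column_stack((x, y, z))

def closest_pepper_centroid(depth_m, masks, fx, fy, cx, cy):
    """Return the centroid of the mask nearest the camera, or None if no mask has depth."""
    best, best_dist = None, float("inf")
    for mask in masks:
        points = mask_to_point_cloud(depth_m, mask, fx, fy, cx, cy)
        if len(points) == 0:
            continue
        centroid = points.mean(axis=0)
        dist = float(np.linalg.norm(centroid))
        if dist < best_dist:
            best, best_dist = centroid, dist
    return best
```

In practice the intrinsics would come from the camera's calibration, and the masks from the segmentation model's output.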

Pepper Pose Estimation

The pose estimation procedure operates in two sequential phases:

- Coarse Pose Estimation:

  - Leverages the YOLOv8-generated segmentation masks to isolate the pepper fruit point cloud

  - Calculates the geometric centroid of the point cloud as the initial positional estimate

  - Publishes this data via a unified ROS message format that encapsulates both positional and dimensional parameters

  - Defines the pepper’s volumetric properties using cylindrical primitive representations

  - Integrates both fruit and peduncle morphological data within a consolidated message structure

- Fine Pose Estimation:

  - Refines the coarse pose using a custom superellipsoid fitting method

  - Activation occurs upon successful navigation to the pre-grasp position

  - Provides high-precision spatial coordinates essential for grasp planning and execution

  - Serves as the deterministic input for final manipulation operations
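For readers unfamiliar with superellipsoids, the sketch below shows the standard inside-outside function that fitting methods of this kind typically minimize. It is not our custom fitting method. The function names are hypothetical, the points are assumed to be expressed in the superellipsoid's own frame, and the parameters (`a`, `b`, `c` for the semi-axes, `e1` and `e2` for the shape exponents) follow the usual textbook convention.

```python
import numpy as np

def superellipsoid_f(points, a, b, c, e1, e2):
    """Inside-outside function: < 1 inside, 1 on the surface, > 1 outside."""
    x, y, z = np.abs(np.asarray(points, dtype=float)).T
    xy = (x / a) ** (2.0 / e2) + (y / b) ** (2.0 / e2)
    return xy ** (e2 / e1) + (z / c) ** (2.0 / e1)

def surface_residuals(points, a, b, c, e1, e2):
    """Per-point residuals; a least-squares fit would drive these toward zero."""
    return superellipsoid_f(points, a, b, c, e1, e2) - 1.0
```

With `e1 = e2 = 1` the shape reduces to an ordinary ellipsoid, which makes a convenient sanity check. A full fit would also optimize the pose of the shape, starting from the coarse estimate.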

Peduncle Analysis and Interaction Point Determination

The peduncle segmentation module uses a YOLOv8 model trained specifically to identify stem structures. The system extracts the medial axis of the segmented peduncle using the morphological functions of the scikit-image (skimage) Python library. This axis is then modeled by fitting a parabolic curve.

To determine orientation, the system evaluates the least-squares error between the extracted medial axis points and the fitted parabolic curve, and uses it to decide between vertical and horizontal alignment. The point of interaction for the cutting operation is placed at half the total curve length, measured from the top of the pepper fruit.
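The curve-fitting and POI steps can be sketched as below. This is a minimal sketch under stated assumptions: the medial axis is already available as an N×2 array of (x, y) pixel coordinates, a parabola is fitted in both orientations and the one with the lower squared error is kept, and arc length is approximated by sampling the curve. The function names and the "vertical"/"horizontal" labels are hypothetical.

```python
import numpy as np

def fit_peduncle_curve(axis_points):
    """Fit a parabola in both orientations and keep the one with lower squared error."""
    x, y = axis_points[:, 0], axis_points[:, 1]
    # "vertical": x as a function of y; "horizontal": y as a function of x.
    candidates = {"vertical": (y, x), "horizontal": (x, y)}
    fits = {}
    for name, (t, s) in candidates.items():
        coeffs = np.polyfit(t, s, 2)  # s ~ c2*t^2 + c1*t + c0
        sse = float(np.sum((np.polyval(coeffs, t) - s) ** 2))
        fits[name] = (coeffs, sse)
    orientation = min(fits, key=lambda k: fits[k][1])
    return orientation, fits[orientation][0]

def point_at_half_length(coeffs, t_start, t_end, samples=200):
    """Return (t, s) on the parabola at half its arc length, measured from t_start."""
    t = np.linspace(t_start, t_end, samples)
    s = np.polyval(coeffs, t)
    segment = np.hypot(np.diff(t), np.diff(s))
    cumulative = np.concatenate(([0.0], np.cumsum(segment)))
    i = int(np.searchsorted(cumulative, cumulative[-1] / 2.0))
    return float(t[i]), float(s[i])
```

Here `t_start` would be the end of the medial axis nearest the top of the fruit, so that the half-length point is measured from the fruit side.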

Perception Subsystem – Fall Semester

The main changes to the perception subsystem are 1) additional dataset collection, and 2) a dual-camera architecture.

- To gain better performance from our perception subsystem and train the YOLOv8 segmentation model further, we implemented a data collection and training routine outlined in (https://github.com/VADER-CMU/MLOps). We gathered 1.3k+ training examples by taking pictures of green peppers in indoor and outdoor situations, with lighting, orientation, and perspective variations. We then individually annotated each image with masks of the peduncle and the fruit, partially assisted using the Segment Anything Model (SAM). The resulting segmentation model for the fruit has an mAP@50 metric of 0.97 indoors, and 0.978 outdoors.

- To use the cutter camera fully, we included additional logic that runs pose estimation (via our current pipeline) on the cutter camera hardware, and integrated logic to process these pose estimates alongside the gripper camera pose estimates in the State Machine, which will be outlined below.

The final results of the segmentation model are outlined below.

Extraction Subsystem – Spring Semester

The Extraction Subsystem comprises two primary components: the Gripper Mechanism and the Cutting Mechanism, each designed with specific functionality to support the extraction process.

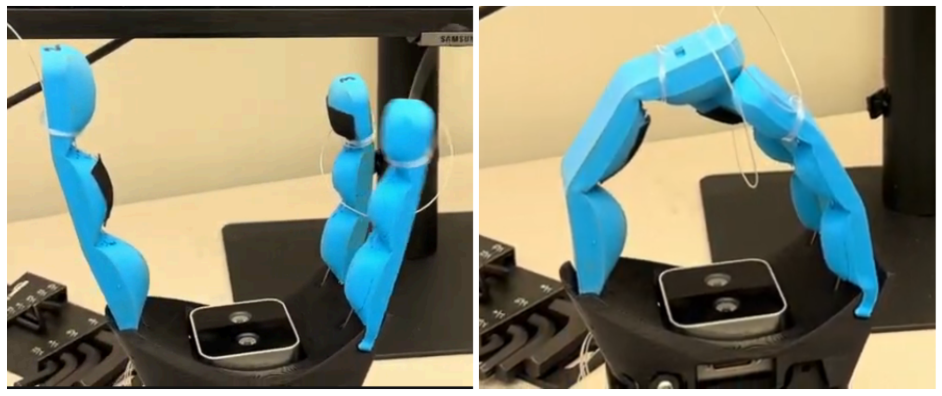

Gripper Mechanism

The Gripper Mechanism has three soft-bodied fingers. These fingers operate via a tendon-driven system connected to Dynamixel motors that provide precise control over gripping pressure and position. The flexible nature of the fingers enables adaptive grasping of irregular objects.

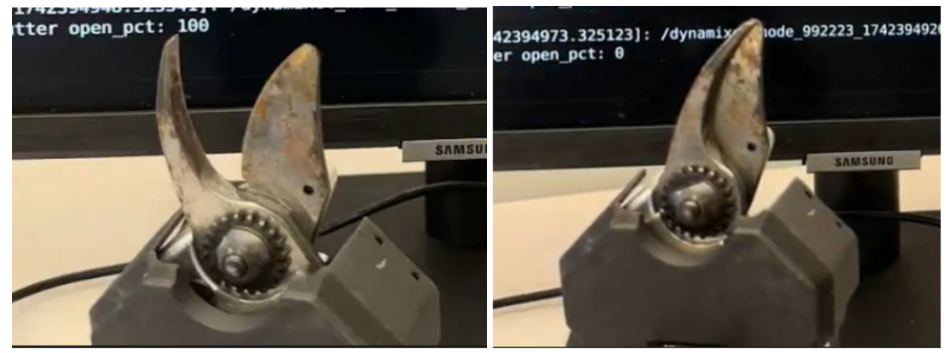

Cutting Mechanism

The Cutting Mechanism employs a similar tendon-based design principle. It utilizes a single Dynamixel motor that actuates a tendon system, which in turn rotates a specialized blade. This blade delivers shear forces to effectively sever the peduncle during the extraction process.

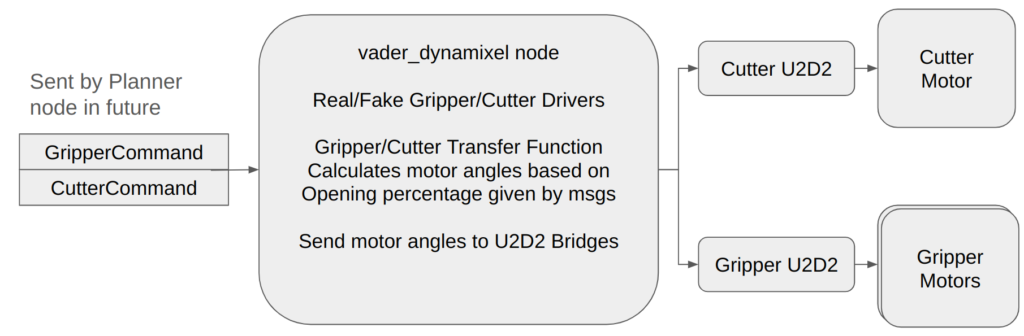

Control Implementation

The system architecture incorporates an end-effector node that instantiates separate driver modules for each mechanism. This modular approach enables independent control of the gripper and cutter components. The control system:

- Monitors incoming command messages for both mechanisms

- Processes normalized operational parameters (0-100% range)

- Employs transfer functions to convert these normalized values into precise joint angles

- Receives dedicated GripperCommand and CutterCommand message types through separate channels

This implementation ensures precise coordination between gripping and cutting operations during the extraction sequence.
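As an illustration of the normalized-command conversion described above, the sketch below maps a 0-100% command to a joint angle. It assumes a simple linear transfer function with clamping; the actual transfer functions in our drivers may differ, and the function name and angle limits are hypothetical.

```python
def percent_to_joint_angle(percent, open_angle_deg, closed_angle_deg):
    """Map a normalized 0-100% command to a joint angle (linear mapping assumed)."""
    clamped = max(0.0, min(100.0, float(percent)))  # reject out-of-range commands
    span = closed_angle_deg - open_angle_deg
    return open_angle_deg + span * clamped / 100.0
```

For example, with hypothetical limits of 0 and 90 degrees, a 50% command maps to 45 degrees, and any command above 100% is held at 90 degrees.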

Extraction Subsystem – Final Implementation

The hardware and the software components largely remain the same. The finalized software is available here (https://github.com/VADER-CMU/vader_dynamixel/releases/tag/FVD).

The only major modification was the addition of a cutter camera holder, which allows an additional RealSense D405 camera to be mounted. The Perception Subsystem uses this secondary camera to produce pose estimates relative to the cutter palm as well.

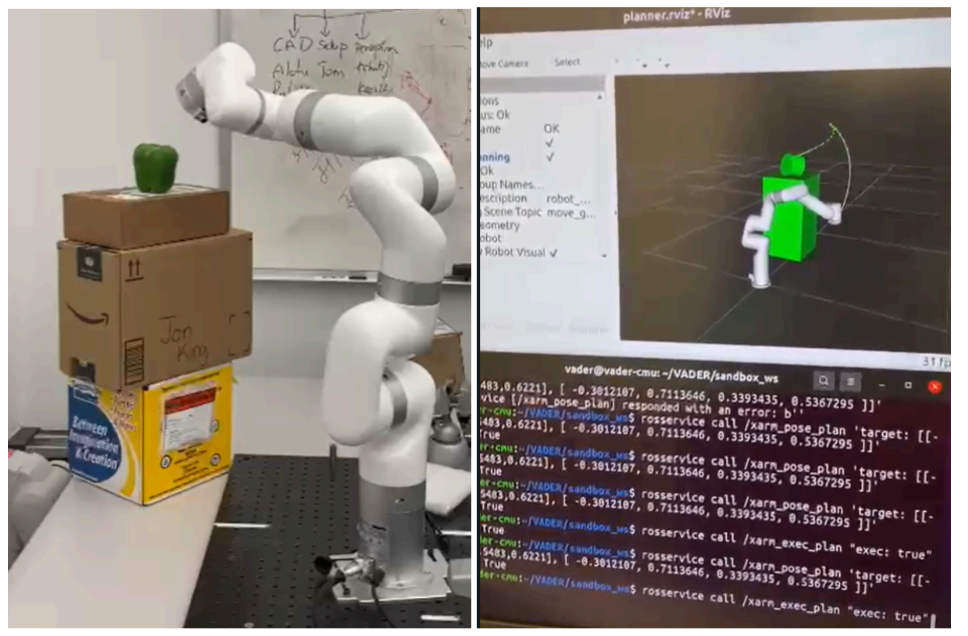

Planning Subsystem

The Planning Subsystem orchestrates trajectory generation and execution for robotic arm movement during the pepper extraction process. This component interfaces with perception data to establish optimal motion pathways while maintaining system safety through comprehensive collision management protocols.

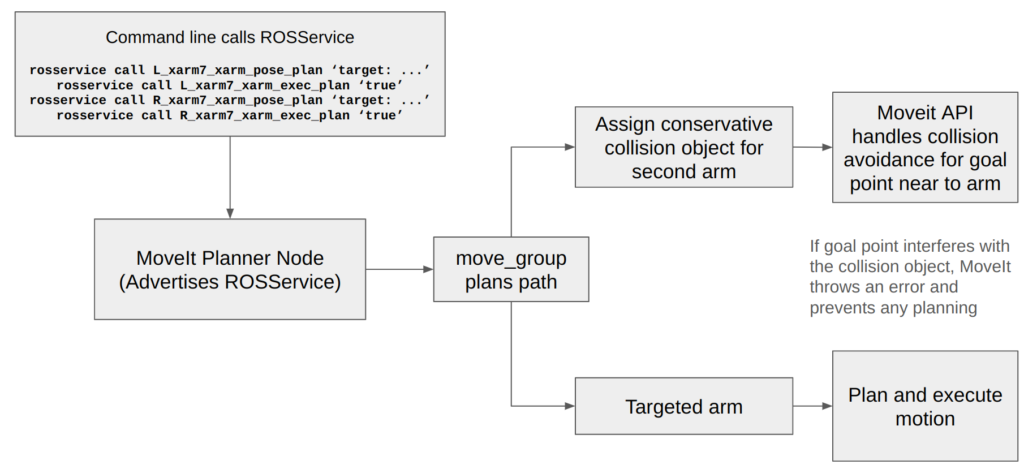

Dual-Arm Collision Avoidance Framework

The system implements a sophisticated collision avoidance methodology to address the constrained operational environment where arms operate in proximity to agricultural structures, produce, and each other. The architecture segregates manipulators into discrete move groups and employs a specialized subroutine that:

- Identifies the currently operational move group

- Generates conservative collision boundary objects encapsulating the non-operational move group

- Integrates with the MoveIt API to prevent trajectory plans that would result in inter-arm collisions

- Automatically terminates planning operations when target positions interfere with established collision boundaries

This approach enables simultaneous operation of dual manipulators within shared workspace environments while maintaining system integrity.
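The idea behind the conservative collision boundary can be sketched in plain Python, independent of MoveIt. This is a simplified illustration only: it assumes the non-operational arm is summarized by a list of link positions, wraps them in a padded axis-aligned box, and rejects targets that fall inside it. The function names and the padding value are hypothetical; in the real system the boundary is registered as a collision object with the planner rather than checked by hand.

```python
def conservative_box(link_positions, padding=0.10):
    """Padded axis-aligned box (lo, hi) around the idle arm's link positions."""
    xs, ys, zs = zip(*link_positions)
    lo = (min(xs) - padding, min(ys) - padding, min(zs) - padding)
    hi = (max(xs) + padding, max(ys) + padding, max(zs) + padding)
    return lo, hi

def target_is_blocked(target, box):
    """True if the target position lies inside the collision boundary."""
    lo, hi = box
    return all(l <= t <= h for t, l, h in zip(target, lo, hi))
```

A target that lands inside the box corresponds to the case where planning is terminated early.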

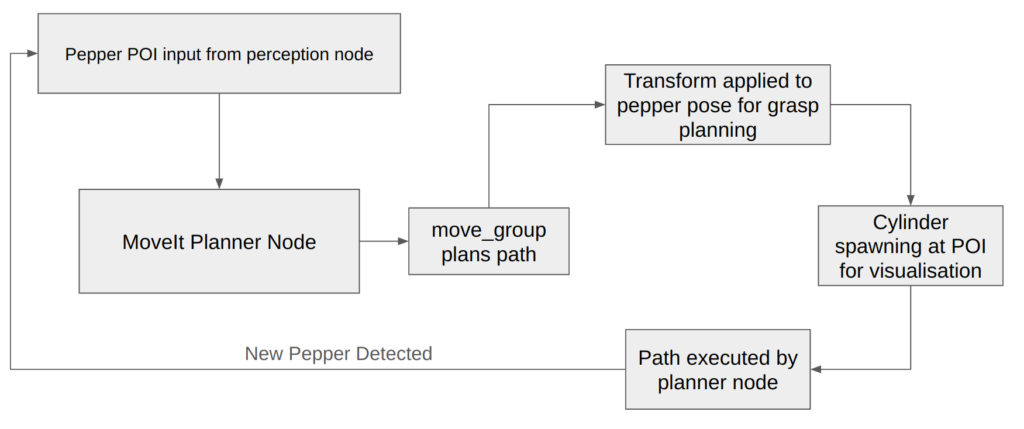

Grasp Pose Planning

When the system receives pepper position data from the perception subsystem, it applies a critical transformation to accommodate the specialized grasping requirements. The default orientation alignment between the end-effector’s z-axis and the pepper’s vertical axis is unsuitable for lateral grasping. The system addresses this through:

- Application of a static transform that rotates the target pose orientation by 90 degrees around the target’s x-axis

- Forwarding of this modified orientation to the MoveIt planner for trajectory calculation

- Retention of the original orientation parameters for visualization purposes in the simulation environment

- Strategic offset positioning of the target cylinder to optimize planning operations
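The 90-degree rotation about the target's own x-axis can be written as a quaternion product. The sketch below assumes the ROS quaternion ordering (x, y, z, w) and applies the rotation in the target's local frame by right-multiplying. The function names are hypothetical, and the sign of the angle is an assumption that depends on the frame conventions in use.

```python
import math

def quat_multiply(q1, q2):
    """Hamilton product of two quaternions given as (x, y, z, w)."""
    x1, y1, z1, w1 = q1
    x2, y2, z2, w2 = q2
    return (
        w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
        w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
        w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2,
        w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
    )

def rotate_about_local_x(q_target, angle_deg=90.0):
    """Rotate an orientation about its own x-axis (right-multiplication)."""
    half = math.radians(angle_deg) / 2.0
    q_x = (math.sin(half), 0.0, 0.0, math.cos(half))
    return quat_multiply(q_target, q_x)
```

The rotated orientation is what would be sent to the planner, while the original orientation is kept for visualization.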

Execution Sequence

The system workflow consists of a sequential process wherein pepper pose data received from the perception node undergoes transformation for grasp planning. The planning node:

- Spawns representative cylindrical objects at points of interest within the simulation environment

- Calculates optimal path trajectories to these targets (visualized as waypoint sequences)

- Executes the planned motion sequence through a ROS service interface

- Dynamically manages simulation objects by replacing previous targets when new points of interest are generated

This cyclical operation enables continuous harvesting operations with efficient resource management and accurate spatial representation.

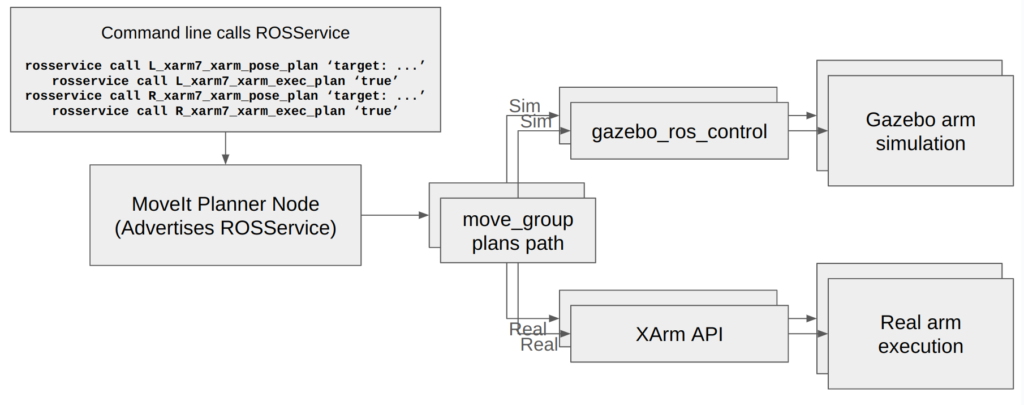

Dual Arm Motion Planning

Planning – Fall System Implementation

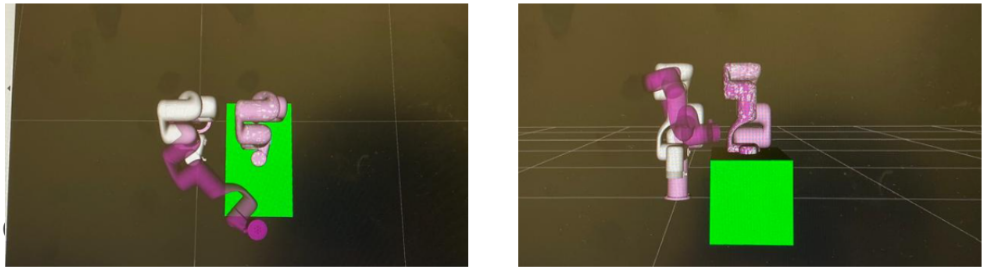

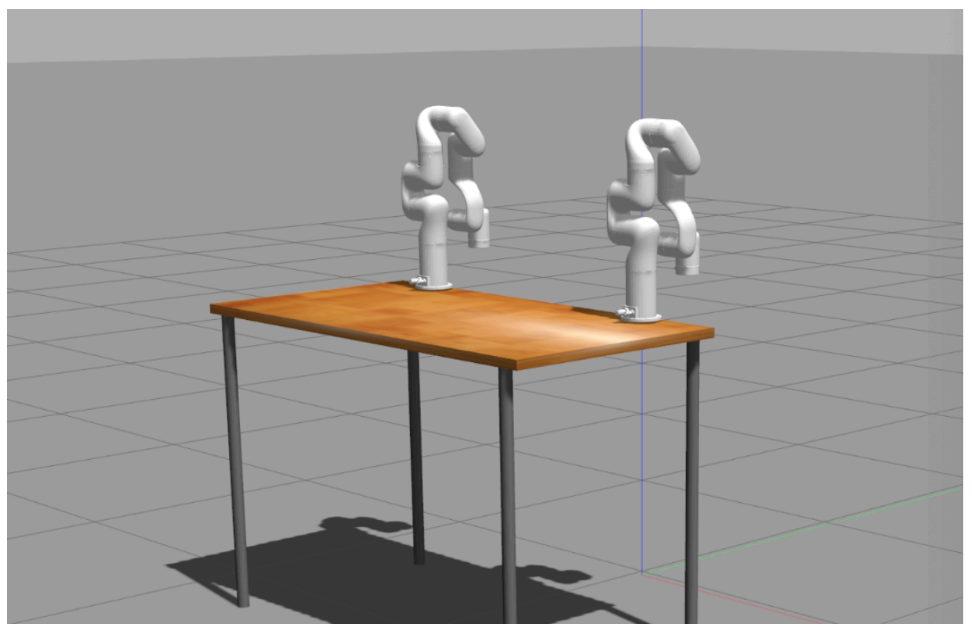

There are major differences between the planning stacks for the spring validation demo and the fall validation demo, primarily due to the addition of a second arm, with both arms mounted horizontally. The main features are 1) simulated integration testing of the planning stack, and 2) a mixture of the RRTStar and Cartesian planners for different stages of the task.

First, a Simulation Director program was created to evaluate the performance of the planner in large batches (N>=100), with uniformly randomized coordinates for the simulated bell pepper. To avoid the computational overhead and clunkiness of the Gazebo simulator, all of the runs are performed in RViz, and the trial is considered a success if the system is able to complete all harvesting stages and store the nonexistent pepper.

This Simulation Director helped us uncover and understand the limitations of our planner. For example, the figure below shows the trial outcomes binned by the horizontal position of the pepper. Y=0 corresponds to the base of the gripper, while Y=0.5 corresponds to the cutter. The pepper remains reachable between Y=0.15 and Y=0.35, but beyond this range the cutter and gripper arms sometimes run into singularities because they do not have enough reach to harvest the pepper. This let us find bugs and reason about the reachability of target peppers, which helped us deliver a successful demonstration.
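The batch evaluation and binning can be sketched as follows. This is not the Simulation Director itself: it randomizes only the horizontal (Y) coordinate, and `fake_trial` is a hypothetical stand-in for a full simulated harvesting run that simply mirrors the reachable range reported above.

```python
import random

def run_batch(run_trial, n_trials=100, y_min=0.0, y_max=0.5, n_bins=10, seed=0):
    """Run randomized trials and tally [successes, trials] per Y bin.

    Bin i covers Y values starting at y_min + i * (y_max - y_min) / n_bins.
    """
    rng = random.Random(seed)
    width = (y_max - y_min) / n_bins
    tally = {i: [0, 0] for i in range(n_bins)}
    for _ in range(n_trials):
        y = rng.uniform(y_min, y_max)
        index = min(int((y - y_min) / width), n_bins - 1)
        tally[index][1] += 1
        if run_trial(y):
            tally[index][0] += 1
    return tally

def fake_trial(pepper_y):
    """Hypothetical stand-in for one simulated harvest; NOT the real planner."""
    return 0.15 <= pepper_y <= 0.35
```

Calling `run_batch(fake_trial, n_trials=200)` returns per-bin counts that can be plotted as a success-rate histogram over Y.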

Secondly, we substantially changed the way we use the two main types of planners: RRTStar and Cartesian. During spring, the RRTStar planner was primarily used, and the Cartesian planner was only used when going to the grasp pose and lowering the pepper into storage. In the fall semester, we further increased the usage of Cartesian planning by also using it in the pre-grasp stage and in the retraction stage after harvesting. This means that the RRTStar planner is now only used in the initial homing stage and the move-to-storage stage. An outline of our planning task flow is shown in the figure below.

Additionally, while the MoveIt 1 architecture we used only allowed one arm to execute motion at a time, we parallelized the planning and execution of motion plans for both arms to save time. By placing a collision wall between the arms, centered on the horizontal position of the pepper, we give each arm an exclusive workspace that the other arm cannot intrude on. This means we can generate valid motion plans for one arm while the other arm is executing motion. We parallelized each of the Cartesian movements highlighted in orange, saving over 20 seconds in total compared to sequential RRTStar execution.

Human-Robot Interaction

The Human-Robot Interaction subsystem consists of the State Machine and the User Interface.

The State Machine directs the overall harvesting task flow, prioritizes detected peppers, and selects the highest priority pepper for harvesting by sending its pose estimate to the planner through the different harvesting stages. Additionally, the State Machine handles failure recovery logic by reverting to Homing the arms in case of failures in the planning subsystem (typically due to arm singularities or unreachable peppers). The overall flow of the State Machine is shown below.
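The task flow and failure recovery can be sketched as a small state machine. This is a simplified illustration, not our State Machine implementation: the stage names and their order are hypothetical, and prioritization by distance is an assumption, since the actual priority rule may differ.

```python
from enum import Enum, auto

class Stage(Enum):
    HOMING = auto()
    PREGRASP = auto()
    GRASP = auto()
    HARVEST = auto()
    STORE = auto()

ORDER = [Stage.HOMING, Stage.PREGRASP, Stage.GRASP, Stage.HARVEST, Stage.STORE]

def next_stage(stage, planner_succeeded):
    """Advance through the harvest; any planner failure reverts to HOMING."""
    if not planner_succeeded:
        return Stage.HOMING
    return ORDER[(ORDER.index(stage) + 1) % len(ORDER)]

def select_pepper(peppers, priority=lambda p: p["distance"]):
    """Pick the highest-priority pepper (lowest priority value), or None."""
    return min(peppers, key=priority) if peppers else None
```

After a successful STORE stage the sketch wraps around to HOMING, ready for the next pepper.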

The User Interface displays the system's current state to the user through the RViz tool, including the detected poses of peppers within the scene, the currently generated plan for the manipulators, and all collision objects found in the scene (including robot self-collision, shared workspace collision, et cetera).

Teleoperation

In teleoperation mode, the user operates the GELLO teleoperation consoles, which have the same kinematic structure as the xArm 7s, with a trigger mechanism attached to each end link. The joints of the teleoperation arms are mapped to the corresponding joints of the manipulators, while the triggers control the percentage of overall opening/closing for either end effector (so that the gripper and cutter are fully closed when the trigger is fully pressed).

The Teleoperation loop runs at up to 45 Hz on the Orin and smoothly replicates motion on the arms. The fastest recorded harvest post-FVD-Encore was 17 seconds.

Platform

The Platform subsystem refers to the mechanical and electrical components required to let the overall VADER system function.

The mechanical structure is created using 80/20 aluminum extrusion rails, and secures the manipulator arms against the mounting points of the Warthog platform. A sturdy base is secured off of the central base of the Warthog body, with extrusions extending off the side of the platform to secure the arms; an additional extrusion supports the arms horizontally by supporting the hanging manipulators against the Warthog side panel, reducing overall sag of the manipulators.

The electrical system is split into two parts. The actuator system, powered by a quick-swappable Amiga battery, supports the manipulator arms, the gripper/cutter motors, and the teleoperation arms. The computing system, powered by the internal power supply of the Warthog platform, supports our Orin AGX computer, the user display, and the onboard network switch and USB hubs. A complete overview of the electrical components is provided below.

For additional depictions of our finalized fall system, check out our Poster page and our Media page!