Because the robot’s main physical characteristics were fixed by the choice of the Unitree Humanoid G1 platform, we concentrated on leveraging the features and capabilities that the Unitree system offers.

Given that the humanoid robot must autonomously navigate and traverse the factory floor, we directed our efforts toward learning-based approaches. Deep learning methods, in particular, offer the potential to generalize across the diverse scenarios the humanoid may encounter in such an environment.

With the robot’s 27 degrees of freedom, we chose to simplify the control problem by strategically partitioning the roles of the lower and upper body. The lower body was designated to handle stabilization and mobility, operating independently from the upper body. In contrast, the upper body was tasked with performing bimanual manipulation, which we controlled through inverse kinematics.

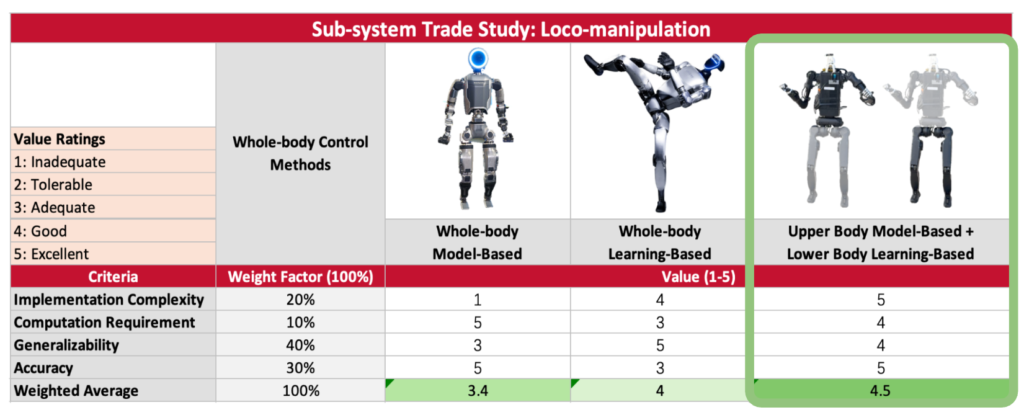

Based on the subsystem-level trade study shown above, the hybrid approach, which combines upper-body model-based control with lower-body learning-based control, achieves the highest overall weighted score (4.5/5). This suggests that combining the strengths of both methodologies provides the best balance of performance across all criteria.

The pure learning-based approach (4.0/5) outperforms the pure model-based approach (3.4/5), primarily due to its superior generalizability, which carries the highest weight factor (40%).
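As a reading aid, the overall weighted score in a trade study of this kind is typically the weight-averaged sum of the per-criterion scores. The symbols below are illustrative notation rather than taken from the study itself; only the 40% weight on generalizability is stated in the text.

$$
S_{\text{approach}} = \sum_{i} w_i \, s_i, \qquad \sum_{i} w_i = 1, \qquad w_{\text{generalizability}} = 0.40
$$

Here $s_i$ is the approach's score (out of 5) on criterion $i$ and $w_i$ is that criterion's weight factor. Because generalizability carries the largest weight, a one-point advantage on that criterion alone shifts the overall score by 0.4.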

The model-based approaches excel in accuracy, while learning-based methods offer better generalizability. The hybrid solution effectively leverages the strengths of both approaches, achieving excellent scores in both implementation complexity and accuracy while maintaining good performance in computation requirements and generalizability.

We then chose to subdivide our efforts into three main categories: Perception, Motion Planner, and Locomotion & Manipulation. The “User Interface” is a goal for the Fall semester.

Perception

The perception system consists of Unitree’s integrated LiDAR and a RealSense depth camera. The depth camera will be utilized for estimating the pose of the tote as well as for localizing the robot within its environment.

Motion Planner

Motion planning for humanoid robots involves two key components: trajectory planning and whole-body motion planning. This work focuses on developing efficient algorithms that enable a humanoid robot to navigate in a structured environment while avoiding obstacles and ensuring smooth movement.

To develop and test the trajectory planning approach, a MuJoCo simulation was set up. The simulation environment provides a physics-based platform to evaluate motion planning strategies before deploying them on real hardware.

- Initially, a map of the known space was manually created, defining obstacles and free-space regions in XML format, which MuJoCo reads to construct the environment.

- This manually constructed map serves as an input for testing and may later be replaced with a more realistic warehouse or factory layout to reflect real-world conditions.
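A hand-written map of this kind can also be generated from a simple occupancy grid. The following is a minimal sketch, assuming a 2D grid where `1` marks an obstacle cell; the function name `grid_to_mjcf`, the cell size, and the obstacle height are hypothetical choices for illustration, not the project's actual map format.

```python
import xml.etree.ElementTree as ET


def grid_to_mjcf(grid, cell=1.0, height=0.5):
    """Build an MJCF string with one box geom per occupied cell (1 = obstacle).

    Hypothetical helper: the grid layout, cell size, and height are assumptions.
    """
    rows, cols = len(grid), len(grid[0])
    root = ET.Element("mujoco", model="factory_map")
    world = ET.SubElement(root, "worldbody")
    # Ground plane; for a plane, size is (half-x, half-y, grid spacing).
    ET.SubElement(world, "geom", name="floor", type="plane",
                  size=f"{cols * cell} {rows * cell} 0.1")
    for r, row in enumerate(grid):
        for c, occupied in enumerate(row):
            if occupied:
                # Box geoms are positioned at their center and sized by half-extents.
                x = (c + 0.5) * cell
                y = (r + 0.5) * cell
                ET.SubElement(world, "geom", name=f"obs_{r}_{c}", type="box",
                              pos=f"{x} {y} {height / 2}",
                              size=f"{cell / 2} {cell / 2} {height / 2}")
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(grid_to_mjcf([[0, 1], [1, 0]]))
```

If the `mujoco` Python package is installed, the resulting string can be loaded with `mujoco.MjModel.from_xml_string(xml)`. Keeping the grid as the single source of truth means the same data can feed both the simulator and the planner.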

Path Planning Algorithm

For trajectory planning, an A* (A-star) algorithm was implemented. A* is a well-known graph-based search algorithm for finding the shortest path from a start pose to a goal pose while avoiding obstacles. The process involves:

- Input Handling: The planner takes in:

  - The environment map (free space and obstacles).

  - The start pose of the humanoid robot.

  - The goal pose that the robot needs to reach.

- Path Generation: Using the A* algorithm, the planner computes an optimal trajectory, ensuring minimal cost while considering obstacle constraints.

- Obstacle Avoidance: The algorithm ensures the robot avoids all obstacles and follows a collision-free path.
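The steps above can be sketched as a small grid-based A* search. This is an illustrative sketch under simplifying assumptions, not the project's planner: the map is a 2D occupancy grid (`0` = free, `1` = obstacle), poses are reduced to `(row, col)` cells without orientation, moves are 4-connected with unit cost, and the heuristic is Manhattan distance. The function name `astar` is hypothetical.

```python
import heapq


def astar(grid, start, goal):
    """4-connected A* on an occupancy grid; returns a list of (row, col) or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    g_cost = {start: 0}

    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk parent links back to the start, then reverse.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if g > g_cost[current]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = current
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = new_g
                    came_from[(nr, nc)] = current
                    heapq.heappush(open_heap, (new_g + h((nr, nc)), new_g, (nr, nc)))
    return None  # goal unreachable


if __name__ == "__main__":
    demo = [
        [0, 0, 0],
        [1, 1, 0],
        [0, 0, 0],
    ]
    print(astar(demo, (0, 0), (2, 0)))
```

Obstacle avoidance falls out of the neighbor check: occupied cells are never expanded, so any returned path is collision-free at grid resolution. A real planner would likely also inflate obstacles by the robot's footprint, which this sketch omits.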

To enhance interpretability, MuJoCo’s visualization libraries were integrated to render the planned path within the simulation, enabling better debugging, analysis, and fine-tuning of the trajectory planner.

Locomotion & Manipulation

To address the complex demands of humanoid mobility and bimanual manipulation, we adopted a hybrid control strategy. Locomotion is handled via a learning-based policy trained in simulation, allowing the robot to generalize across uneven terrain and dynamic environments. For manipulation, we implemented model-based inverse kinematics to enable precise, real-time control of the upper body. This separation of control enables robust performance across diverse factory scenarios while maintaining real-time responsiveness.
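To make the model-based side concrete, the sketch below solves inverse kinematics in closed form for a planar two-link arm. It is a toy illustration of the idea only: the G1's arms have more joints and move in 3D, and the link lengths, function names, and choice of the positive-elbow solution are all assumptions, not the solver used on the robot.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Return (shoulder, elbow) angles in radians that reach (x, y), or None if unreachable.

    Toy planar model; picks the solution with a non-negative elbow angle.
    """
    d2 = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(cos_elbow) > 1:
        return None  # target lies outside the reachable annulus
    elbow = math.acos(cos_elbow)
    # Shoulder angle: direction to target minus the offset introduced by the bent elbow.
    shoulder = math.atan2(y, x) - math.atan2(l2 * math.sin(elbow),
                                             l1 + l2 * math.cos(elbow))
    return shoulder, elbow


def two_link_fk(shoulder, elbow, l1, l2):
    """Forward kinematics, used here to check the IK solution."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    angles = two_link_ik(0.3, 0.2, 0.25, 0.25)
    print(angles, two_link_fk(*angles, 0.25, 0.25))
```

Because the solution is a direct computation rather than a learned policy, its cost per call is small and predictable, which is consistent with the real-time responsiveness described above.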