Perception

We use the onboard Intel RealSense D435i RGB-D camera for both robot localization and pose estimation of target objects. Because the Unitree’s onboard Orin computer lacks native video streaming support, we read the RGB and depth streams directly from the camera.

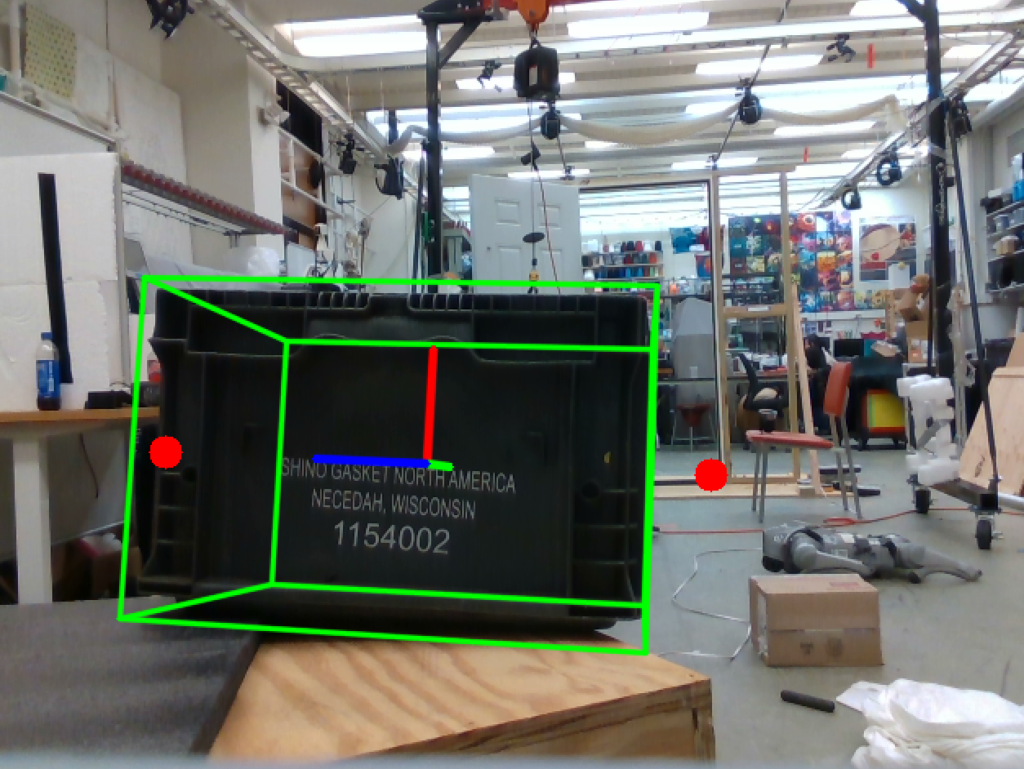

For pose estimation, we employ NVIDIA’s FoundationPose model, which provides single-shot pose predictions using a pre-existing mesh of the target object—in our case, a tote located in the robot’s immediate vicinity. The mesh was generated using a LiDAR-enabled iPhone through an AR application and was subsequently refined manually in Blender to ensure accuracy.

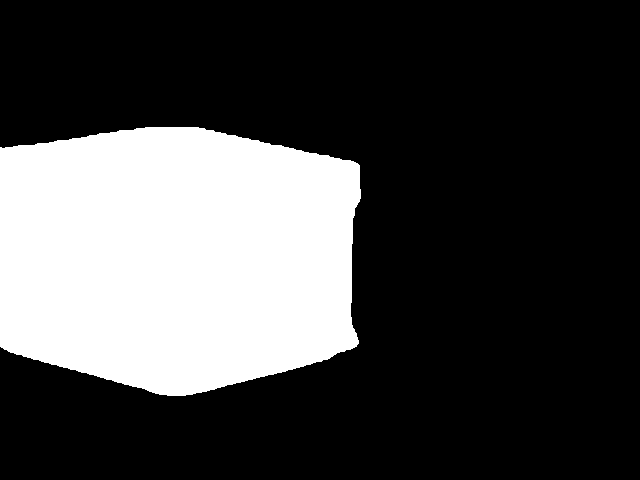

To initialize the pose estimation process, the model also requires a binary segmentation mask of the target object in the initial input frame. This mask is generated in real time by prompting Meta’s Segment Anything Model (SAM) using text-based descriptions encoded via CLIP.

Figure: RGB image (left) and binary mask (right).

The complete input, comprising the RGB-D image, refined mesh, texture, and binary mask, is streamed in real time into the FoundationPose model for object pose estimation.
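To illustrate why the mask and the depth image are useful together, here is a minimal sketch that back-projects the masked depth pixels with a pinhole camera model and averages them into a rough 3D position for the object. This is not FoundationPose's internal code; the function name `masked_centroid` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions made for the example.

```python
def masked_centroid(depth, mask, fx, fy, cx, cy):
    """Average 3D position (camera frame) of the masked pixels.

    depth: rows of depth values in metres (0 means no reading)
    mask:  rows of 0/1 values with the same shape as depth
    fx, fy, cx, cy: pinhole intrinsics (hypothetical values in this sketch)
    Returns (x, y, z) or None if no valid masked pixel exists.
    """
    sum_x = sum_y = sum_z = 0.0
    count = 0
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, m) in enumerate(zip(depth_row, mask_row)):
            if m and z > 0.0:
                # Pinhole back-projection of pixel (u, v) at depth z.
                sum_x += (u - cx) * z / fx
                sum_y += (v - cy) * z / fy
                sum_z += z
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count, sum_z / count)
```

A coarse position like this could serve as a sanity check on the estimated translation, for example by flagging poses that land far from the masked region.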

The model then outputs a bounding box along with approach points—represented as (x,y,z,w) coordinates—for both of the robot’s arms. These outputs are subsequently forwarded to the Motion Planner, which utilizes them to generate appropriate motion trajectories for the robot’s manipulators.

Motion Planning

We divided Motion Planning into two key components: Trajectory Control and Whole-Body Motion Planning.

- Trajectory Control is responsible for guiding the robot smoothly and accurately from one point to another along a predefined path. It ensures that the robot follows the desired position, velocity, and acceleration profiles over time.

- Whole-Body Motion Planning, on the other hand, coordinates all degrees of freedom in the robot to perform complex tasks such as picking up and placing a tote. This involves planning feasible, collision-free motions for the entire robot body, taking into account constraints like balance, reachability, and task-specific objectives.
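As an illustration of what "position, velocity, and acceleration profiles" can look like, the sketch below evaluates a quintic (fifth-order) polynomial for a single joint moving from rest to rest. This is a common textbook choice and an assumption on our part for the example, not necessarily the profile used on the robot; `quintic_profile` is a hypothetical helper name.

```python
def quintic_profile(q0, q1, duration, t):
    """Rest-to-rest quintic trajectory for one joint.

    Returns (position, velocity, acceleration) at time t. Velocity and
    acceleration are zero at both ends, so the motion starts and stops smoothly.
    """
    # Normalised time, clamped to [0, 1] so queries outside the move are safe.
    s = min(max(t / duration, 0.0), 1.0)
    d = q1 - q0
    pos = q0 + d * (10 * s**3 - 15 * s**4 + 6 * s**5)
    vel = d * (30 * s**2 - 60 * s**3 + 30 * s**4) / duration
    acc = d * (60 * s - 180 * s**2 + 120 * s**3) / duration**2
    return pos, vel, acc
```

Sampling this function at the control rate yields reference values that a tracking controller can follow.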

Locomotion & Manipulation

We decoupled the robot’s whole-body control into two subsystems: lower-body locomotion and upper-body manipulation, each implemented using a method best suited to its functional demands.

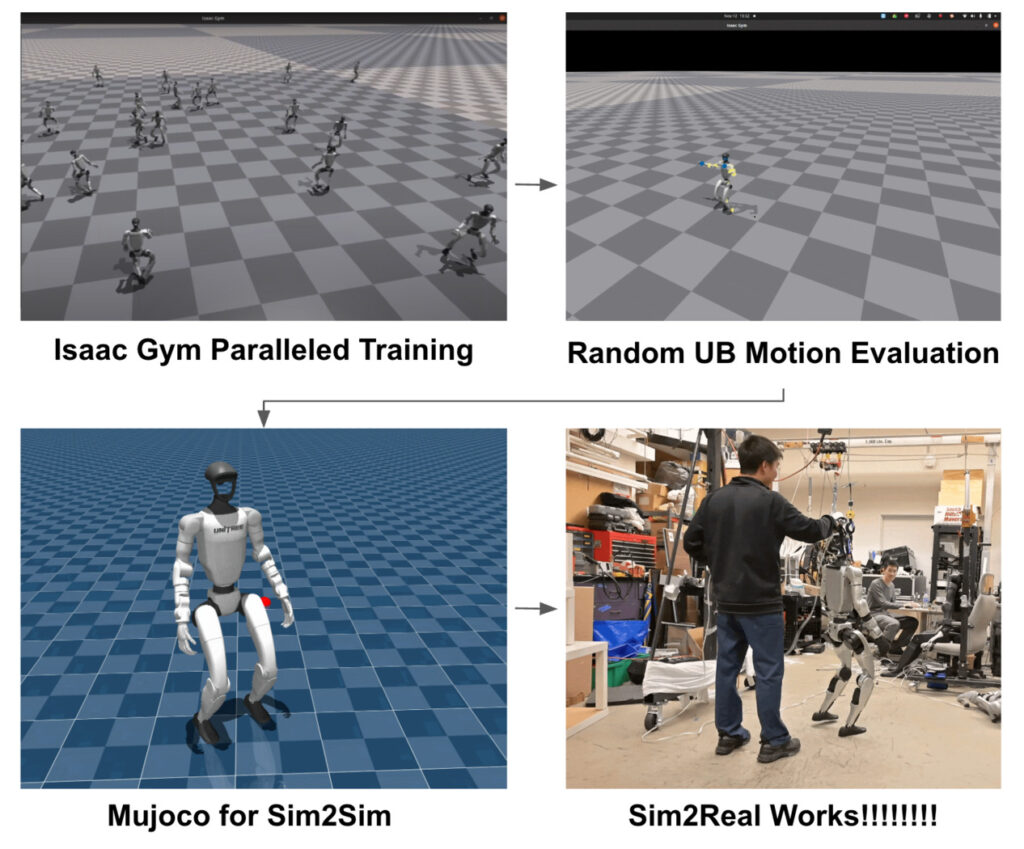

Lower Body: Reinforcement Learning for Locomotion

To enable robust and adaptive mobility, we trained a reinforcement learning (RL) policy for the Unitree Humanoid G1’s lower body using the Isaac Gym simulator. The policy was optimized for stable bipedal walking while carrying variable upper-body payloads. The RL framework provides resilience to external disturbances, allowing the robot to maintain balance during manipulation tasks and while interacting with dynamic environments.

The policy outputs joint-level torque commands based on proprioceptive inputs, including joint positions, velocities, and inertial measurements. These commands are deployed directly on the robot in real time through a low-latency control loop, enabling fast recovery and adaptability during locomotion.

Upper Body: Inverse Kinematics-Inverse Dynamics for Manipulation

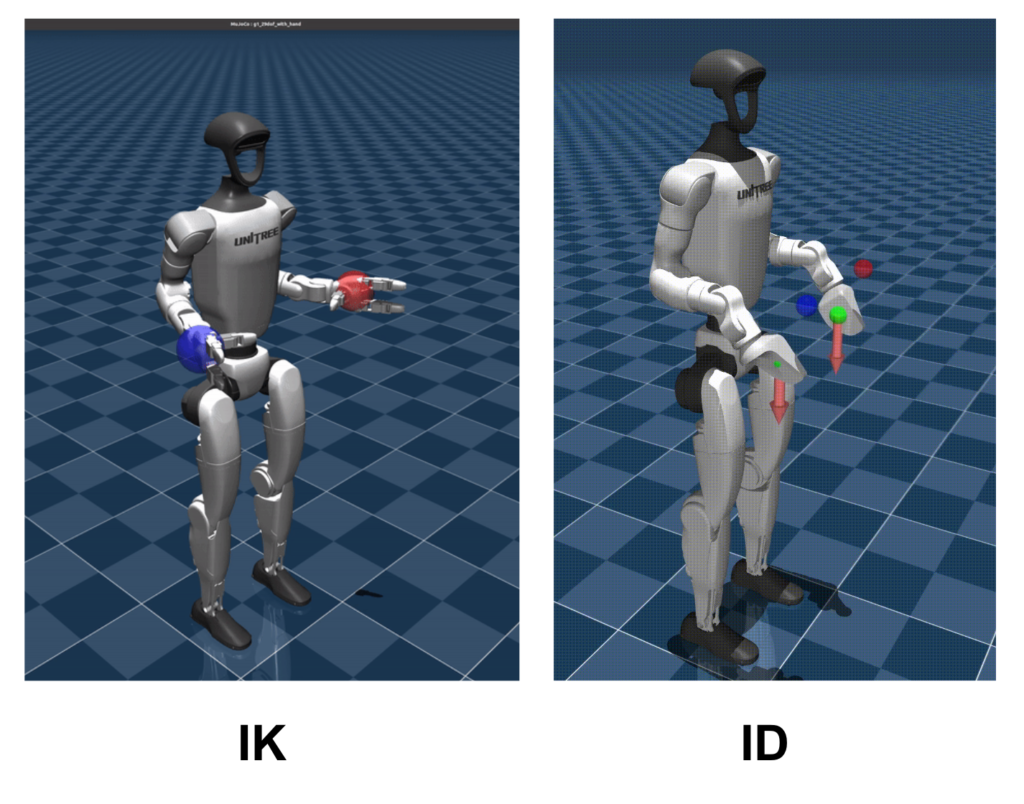

For manipulation, we implemented a model-based Inverse Kinematics-Inverse Dynamics pipeline to control the robot’s arms. Given target end-effector poses provided by the perception and motion planning modules, we first compute joint configurations using inverse kinematics. These joint targets are then converted into torque commands through inverse dynamics, allowing smooth, precise motion control even during high-payload or contact-rich interactions.
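The two stages can be sketched on a planar two-link arm, which is far simpler than the G1's arms but shows the same flow: inverse kinematics turns a target position into joint angles, and inverse dynamics turns joint angles into torques. The sketch covers only the static (gravity) part of the dynamics, with point masses at the link ends; the function names, link lengths, and masses are all assumptions for illustration.

```python
import math

def two_link_ik(x, y, l1, l2):
    """Joint angles (q1, q2) with q2 >= 0 that place the arm tip at (x, y)."""
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0:
        raise ValueError("target out of reach")
    q2 = math.acos(c2)
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return q1, q2

def gravity_torques(q1, q2, l1, l2, m1, m2, g=9.81):
    """Static inverse dynamics: torques that hold the arm against gravity.

    Angles are measured from the horizontal x axis, gravity acts along -y,
    and each link is modelled as a point mass at its far end.
    """
    tau2 = m2 * g * l2 * math.cos(q1 + q2)
    tau1 = (m1 + m2) * g * l1 * math.cos(q1) + tau2
    return tau1, tau2
```

A full inverse dynamics computation would add inertial and Coriolis terms for the desired accelerations and velocities; the gravity term shown here is the part that grows with payload.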

Fall 2025

Upper-body Manipulation:

Following our sponsor's requirement to explore a larger manipulation workspace, we opted for a single-hand pickup strategy using NVIDIA’s foundation model GR00T N1.5. This enabled us to achieve generalized motions for picking up a box from any orientation. We fine-tuned GR00T with LoRA on custom data collected from a human tele-operator.
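For readers unfamiliar with LoRA, the core idea is that the pretrained weight matrix W stays frozen and only a low-rank update B·A is trained, giving an effective weight W + (alpha / r)·B·A. The sketch below shows that arithmetic on plain Python lists; it is not the GR00T fine-tuning code, and the helper names and the `alpha` scaling convention are assumptions taken from the standard LoRA formulation.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where r is the adapter rank.

    w: d_out x d_in frozen pretrained weight
    b: d_out x r trainable matrix
    a: r x d_in trainable matrix
    """
    r = len(a)
    scale = alpha / r
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]

def trainable_params(d_out, d_in, r):
    """Adapter parameter count, compared with d_out * d_in for full fine-tuning."""
    return r * (d_out + d_in)
```

With a small rank, the adapter holds far fewer parameters than the full matrix, which is why LoRA fits well when only a modest number of demonstration episodes is available.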

Data Collection

We collect data through human tele-operation of the upper body, using motion retargeting and the Apple Vision Pro headset.

Here is a demonstration of the collection process with the Apple Vision Pro. The human tele-operates the robot to perform the task and collect the data.

Below we show the multitude of episodes (150+ for this task) collected and the joint angles associated with one episode.

Nvidia GR00T N1.5

NVIDIA’s GR00T model has a slow-thinking System 2 for high-level reasoning. It takes in image and language tokens and outputs a vector for the diffusion transformer, which in turn outputs robot joint action tokens.

See our Generalized Manipulation Demo in the Media Section!

Force-adaptive Whole-body RL Controller

In order to handle the external payloads from the totes on the end-effectors, we proposed the FALCON (https://lecar-lab.github.io/falcon-humanoid/) controller:

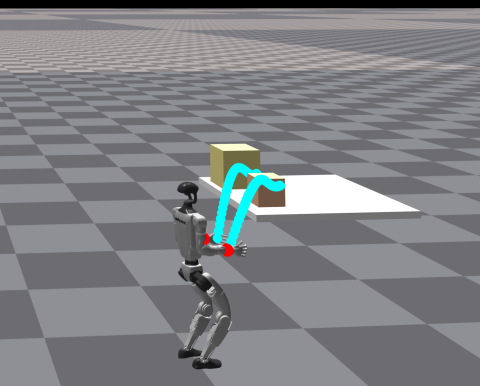

FALCON is a dual-agent reinforcement learning framework that decouples locomotion and manipulation with separate rewards for efficient training. During learning, a torque-limit-aware 3D force curriculum applies progressively stronger external forces to the end-effectors, maximizing force adaptivity while ensuring safety. FALCON can be seamlessly trained and deployed on different humanoid robots without embodiment-specific reward or curriculum tuning, showing its cross-platform generalization capability.
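To give an intuition for a torque-limit-aware force curriculum, the sketch below scales an external force with the curriculum level and caps its magnitude so that the torque it induces about a single joint stays within a limit. This is a deliberately simplified, single-joint illustration and not FALCON's actual implementation; the function name, the linear schedule, and the `moment_arm` parameter are all assumptions.

```python
import math

def curriculum_force(direction, level, max_level, f_max, moment_arm, torque_limit):
    """3D force whose magnitude grows with the curriculum level.

    The magnitude is capped at torque_limit / moment_arm so that, in this
    simplified one-joint model, the induced torque never exceeds the limit.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    if norm == 0.0:
        return (0.0, 0.0, 0.0)
    # Linear schedule from 0 to f_max, clamped to the valid range.
    progress = min(max(level / max_level, 0.0), 1.0)
    magnitude = min(progress * f_max, torque_limit / moment_arm)
    return tuple(magnitude * c / norm for c in direction)
```

Early levels apply gentle forces, and later levels saturate at the torque-safe cap rather than continuing to grow.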

In addition, to expand the workspace, we add tracking rewards for the torso orientation so that the robot can bend and twist its torso. During deployment, we use the Apple Vision Pro to map the operator's head pose to the humanoid's torso pose, and the hand poses to the humanoid's end-effector poses.

At FVD and FVD Encore, we demonstrated expressive, force-adaptive whole-body control on the Unitree G1 robot. Check the FALCON website (b.io/falcon-humanoid/) and our media for the videos!