Spring System Performance

For the spring semester, our team aimed to complete the depth estimation, odometry, and mapping subsystems, along with all system-level requirements associated with them. The complete list of targeted requirements is shown in the table below.

| Requirement | Status | Subsystem |

|---|---|---|

| PR.M.1 Sense the environment farther than 1m away from itself. PR.M.2 Sense the environment closer than 5m away from itself. | Passed | Depth Estimation |

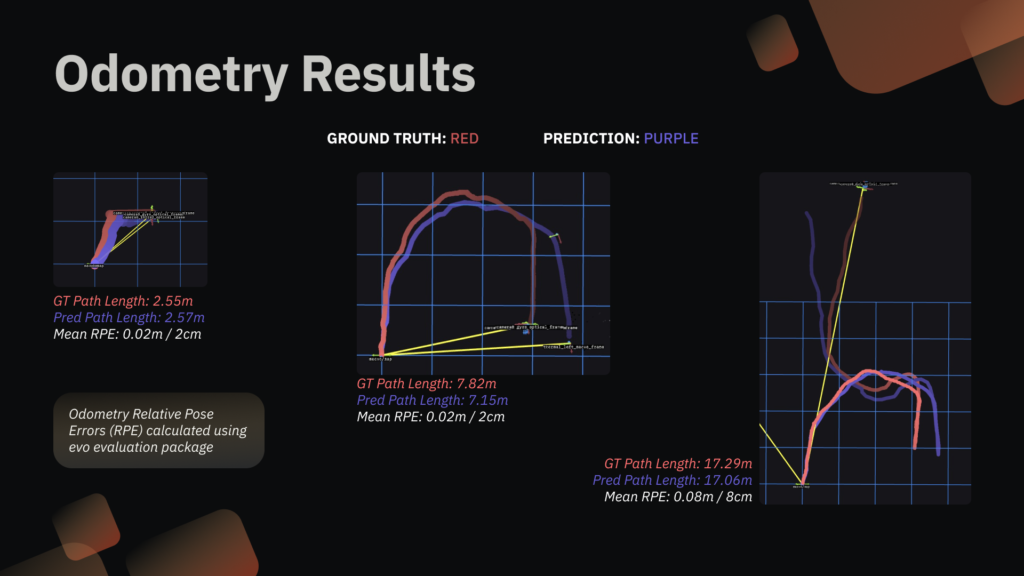

| PR.M.3 Perform odometry with a Relative Path Error <2m. | Passed | Odometry |

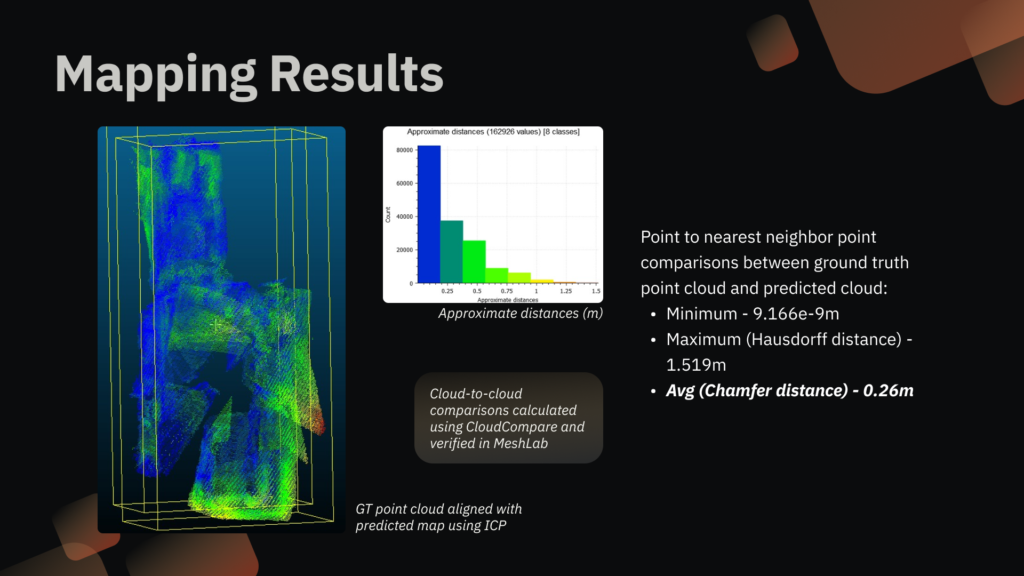

| PR.D.3 Updates the dense-depth map visualization at minimum 0.2Hz. PR.D.4 Chamfer distance is less than 5m from ground truth point cloud. | Passed | Mapping |

| PR.M.6 Communicate with an operator with a maximum latency of 500ms. PR.M.7 Communicate with an operator within 25m. | Passed | Networking |
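PR.D.4 is stated in terms of the Chamfer distance between the reconstructed point cloud and a ground-truth point cloud. Chamfer distance has several conventions (summed or averaged directions, squared or unsquared distances), and this section does not say which one was used. The sketch below assumes the unsquared, symmetric variant that averages the mean nearest-neighbour distance in each direction, so the result is in metres. `chamfer_distance` is a hypothetical helper, and its brute-force search is only practical for small clouds; a full map would normally use a spatial index such as a k-d tree.

```python
import math
from typing import Sequence

Point = Sequence[float]


def _mean_nearest_distance(src: Sequence[Point], dst: Sequence[Point]) -> float:
    """Mean Euclidean distance from each point in src to its nearest point in dst."""
    return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)


def chamfer_distance(pred: Sequence[Point], gt: Sequence[Point]) -> float:
    """Symmetric, unsquared Chamfer distance in the clouds' units (metres here).

    Assumed convention: average of the two directional mean distances.
    Brute-force O(N*M) search; fine for a sketch, too slow for full maps.
    """
    if not pred or not gt:
        raise ValueError("both point clouds must be non-empty")
    return 0.5 * (_mean_nearest_distance(pred, gt) + _mean_nearest_distance(gt, pred))


if __name__ == "__main__":
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    # Hypothetical reconstruction: every point shifted 0.1 m along z.
    pred = [(x, y, z + 0.1) for x, y, z in gt]
    cd = chamfer_distance(pred, gt)
    print(f"Chamfer distance {cd:.3f} m, PR.D.4 (< 5 m) met: {cd < 5.0}")
```

Because every predicted point in the example sits 0.1 m above its ground-truth counterpart, the distance evaluates to about 0.1 m, well under both the 5 m requirement and the 20 cm threshold used in the demonstrations.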

By the end of spring, the overall system consisted of the Phoenix Pro drone and the Ground Control Station (GCS). Over a local area network (LAN), the drone wirelessly transmits its raw sensor feeds, along with the predicted depth, odometry, and global map, to the GCS, where operators can view the visualized data in real time. All computation runs onboard the drone.

We demonstrated these capabilities at SVD through the following tests:

| Procedure | Success criteria | Result |
|---|---|---|
| The operator flies the drone around a building that is emitting thick smoke. The drone relays the sensor outputs to the ground control station in real time. | Flight verification: continuous flight for more than 3 minutes; Networking verification: communication latency < 5 s (visual verification) | Flew continuously outdoors around the smoke for 3 min 40 s. Average latency across all communication was below 500 ms. |
| An indoor environment is filled with dense smoke, and the drone is navigated through it. The drone localizes itself in the environment and recovers dense metric depth through dense smoke and darkness. It then transmits the sensor feeds and algorithm outputs to the ground control station. | Perception verification: sense the environment from 1 m to 5 m; Depth estimation verification: dense depth updates at 0.2 Hz; Depth estimation verification: Chamfer distance < 20 cm; Odometry verification: Relative Path Error < 2 m compared with Visual SLAM ground truth; Networking verification: communication latency < 5 s (visual verification) | Perceived depth from 0.5 m to 20 m. Dense depth updated at 0.2 Hz. Chamfer distance was below 20 cm. Relative Path Error was 2 cm for simpler trajectories and 8 cm for the complex indoor SVD trajectory. Average latency across all communication was below 500 ms. |
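The odometry criterion is expressed as a Relative Path Error against a Visual SLAM reference, but its exact formulation is not given here. A common relative-error metric compares displacements over a fixed frame offset Δ between the estimated and reference trajectories, so that a constant offset does not count as error while drift does. The sketch below is a simplified, position-only version of that idea (a full relative pose error also compares rotations in the local frame). It assumes both trajectories are time-synchronised and expressed in the same world frame; `translational_rpe` is a hypothetical name.

```python
import math
from typing import Sequence

Position = Sequence[float]


def translational_rpe(est: Sequence[Position], ref: Sequence[Position], delta: int = 1) -> float:
    """RMSE of displacement errors over a fixed frame offset `delta`.

    Simplified, position-only variant: assumes est[i] and ref[i] are
    time-synchronised and in the same world frame; rotations are ignored.
    """
    if len(est) != len(ref):
        raise ValueError("trajectories must be time-aligned and equal length")
    if delta < 1 or len(est) <= delta:
        raise ValueError("need 1 <= delta < trajectory length")
    sq_errors = []
    for i in range(len(est) - delta):
        # Displacement over the same window in each trajectory.
        d_est = [b - a for a, b in zip(est[i], est[i + delta])]
        d_ref = [b - a for a, b in zip(ref[i], ref[i + delta])]
        sq_errors.append(math.dist(d_est, d_ref) ** 2)
    return math.sqrt(sum(sq_errors) / len(sq_errors))


if __name__ == "__main__":
    ref = [(float(i), 0.0, 0.0) for i in range(5)]  # 1 m steps along x
    offset = [(x + 3.0, y, z) for x, y, z in ref]   # constant 3 m offset
    drift = [(1.02 * x, y, z) for x, y, z in ref]   # 2% scale drift
    print(translational_rpe(offset, ref), translational_rpe(drift, ref))
```

In the example, the constant 3 m offset yields zero error, while a 2% scale drift on 1 m steps yields roughly 0.02 m, which is why this kind of metric isolates drift rather than global misalignment.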

Fall System Performance

For the fall semester, our team aimed to show the system working in both of its use cases. This meant demonstrating the complete mapping, PoI detection, networking and UI, and autonomous flight subsystems, along with all associated system-level requirements. The complete list of targeted requirements is shown in the table below.

Although we demonstrated mapping in the spring semester and passed its requirements, one thing we did not achieve was odometry at more than 10 Hz. To achieve that, we moved from a learning-based visual odometry (VO) algorithm to classical visual-inertial odometry (VIO). This allowed us to integrate the odometry with the flight controller for autonomous flight. Because of this change, we regression-tested the system to ensure that our odometry and mapping performance requirements were still met, which they were.
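The move to classical VIO was driven by the need for odometry above 10 Hz. One way to check that rate from logged odometry timestamps is sketched below; the function names and the synthetic 45 Hz timestamps (matching the average rate reported for FVD) are illustrative assumptions, not the team's actual tooling. It also reports the largest gap between consecutive messages, since an average rate can hide dropouts that a flight controller would notice.

```python
from typing import Sequence


def average_rate_hz(stamps: Sequence[float]) -> float:
    """Average publish rate (Hz) from increasing timestamps in seconds."""
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    span = stamps[-1] - stamps[0]
    if span <= 0:
        raise ValueError("timestamps must increase over the log")
    # N messages span N - 1 intervals.
    return (len(stamps) - 1) / span


def worst_gap_s(stamps: Sequence[float]) -> float:
    """Largest interval (s) between consecutive messages."""
    return max(b - a for a, b in zip(stamps, stamps[1:]))


if __name__ == "__main__":
    # Hypothetical log: 46 odometry messages spaced 1/45 s apart.
    stamps = [i / 45 for i in range(46)]
    rate = average_rate_hz(stamps)
    print(f"average {rate:.1f} Hz, worst gap {worst_gap_s(stamps) * 1000:.1f} ms, "
          f"meets >10 Hz: {rate > 10.0}")
```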

| Requirement | Status | Subsystem |
|---|---|---|
| PR.M.4: Detect humans in one pose; PR.M.5: 80% accuracy given 80% visibility; PR.D.1: Localize PoIs within 5 m of ground truth, or in the correct room, 50% of the time, whichever is applicable | Passed | PoI Detection |
| FR.M.4: Teleoperation in at least altitude hold mode, and hover in position hold mode; PR.D.2: Travel at 10 cm/s; PR.D.5: Any commanded behavior occurs on the drone within 2 s | Passed | Autonomy & Flight |
| FR.M.6: Communicate with an operator; PR.M.6: Latency < 500 ms; PR.M.7: Range ≤ 25 m | Passed | Networking & UI |
| FR.D.3: Compute onboard; PR.D.7: 75% or more of the algorithms must execute on the onboard Orin module | Passed | Real-time Performance |

We tested these requirements through the following demonstrations:

| Procedure | Success criteria | Result |
|---|---|---|
| The drone autonomously hovers and lands in thick smoke. The operator can move the drone around without additional cognitive load. The drone relays the sensor outputs to the ground control station in real time. | Flight verification: continuous flight for more than 3 minutes; Networking verification: communication latency < 5 s (visual verification); Autonomy verification: minimal or no operator intervention during hold or land | Flew continuously outdoors around the smoke for 3 min 40 s. Average latency across all communication was below 500 ms. No operator intervention was needed. |
| An indoor environment is filled with dense smoke, and the drone is navigated through it. The drone localizes itself in the environment and recovers dense metric depth through dense smoke and darkness. It then transmits the sensor feeds and algorithm outputs to the ground control station. | Perception verification: sense the environment from 1 m to 5 m; Depth estimation verification: dense depth updates at 0.2 Hz; Depth estimation verification: Chamfer distance < 20 cm; Odometry verification: Relative Path Error < 2 m compared with Visual SLAM ground truth; Networking verification: communication latency < 5 s (visual verification); PoI detection verification: detect 80% of humans in the scene when they are at least 80% visible | Perceived depth from 0.5 m to 20 m. Dense depth updated at 0.2 Hz. Chamfer distance was below 20 cm. Relative Path Error was 2 cm for the complex multi-room FVD path. Odometry ran at 45 Hz on average. Average latency across all communication was below 500 ms. Detected 6 of 6 humans in the environment, even with partial visibility. |

Final System Results

Thermal Depth Estimation:

Flight Autonomy: