System Requirements

Mandatory Functional Requirements

| Functional Requirement | Performance Requirements |
| --- | --- |
| M.F.1 Sense and estimate part pose | M.P.1 Detect given grasp points 90% of the time |
| M.F.2 Plan arm trajectory and move to desired point | M.P.2.1 Plan movement inside valid zones 100% of the time; M.P.2.2 Plan desired paths for both arms within 4 ± 3 seconds |
| M.F.3 Pick up and manipulate part | M.P.3.1 Pick up and manipulate 90% of the parts successfully; M.P.3.2 Pick up objects up to 2 kg |
| M.F.4 Perform 3D reconstruction | M.P.4.1 90% completeness with accuracy < 3 cm for all points; M.P.4.2 Construct within 10 minutes; M.P.4.3 Outliers: Hausdorff distance < 5 cm, RMSE < 5 cm |
| M.F.5 Avoid causing surface damage to samples | M.P.5 No surface change to part after manipulation 80% of the time |
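
The reconstruction metrics named in M.P.4 (completeness, Hausdorff distance, RMSE) can be made concrete with a small sketch. This is only an illustration under stated assumptions, not the project's evaluation code: it assumes a ground-truth point cloud is available, uses brute-force nearest-neighbour search, and the function names (`nearest_distance`, `reconstruction_metrics`) are hypothetical.

```python
import math

def nearest_distance(p, cloud):
    """Distance from point p to its nearest neighbour in cloud."""
    return min(math.dist(p, q) for q in cloud)

def reconstruction_metrics(recon, truth, tol=0.03):
    """Return (completeness, hausdorff, rmse) in the units of the inputs.

    Assumption: points are (x, y, z) tuples in metres, so tol=0.03 mirrors
    the 3 cm accuracy threshold of M.P.4.1.
    """
    # Truth-to-reconstruction distances: how well the true surface is covered.
    d_truth = [nearest_distance(q, recon) for q in truth]
    # Reconstruction-to-truth distances: how far reconstructed points stray.
    d_recon = [nearest_distance(p, truth) for p in recon]
    # Fraction of true points that have a reconstructed point within tol.
    completeness = sum(d < tol for d in d_truth) / len(d_truth)
    # Symmetric Hausdorff distance: the worst-case mismatch in either direction.
    hausdorff = max(max(d_truth), max(d_recon))
    # Root-mean-square error of the reconstructed points.
    rmse = math.sqrt(sum(d * d for d in d_recon) / len(d_recon))
    return completeness, hausdorff, rmse
```

Brute-force search is quadratic in the number of points; for real scans a spatial index such as a k-d tree would likely be needed to stay within the time budget of M.P.4.2.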

Mandatory Non-functional Requirements

| Non-functional Requirement |
| --- |
| M.N.1 Robust to environmental changes |
| M.N.2 Incorporate safety measures |
| M.N.3 Be reliable |
| M.N.4 Robust to sensor and behavior failures |

Desired Functional Requirements

| Functional Requirement | Performance Requirement |
| --- | --- |
| D.F.1 Render 3D reconstruction | D.P.1 Display 30 frames per second |
| D.F.2 Handle irregularly shaped objects | D.P.2 Successfully grasp object 80% of the time |
| D.F.3 Optimize 3D reconstruction | D.P.3 Target millimeter-level accuracy |

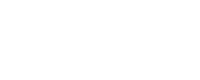

Functional Architecture

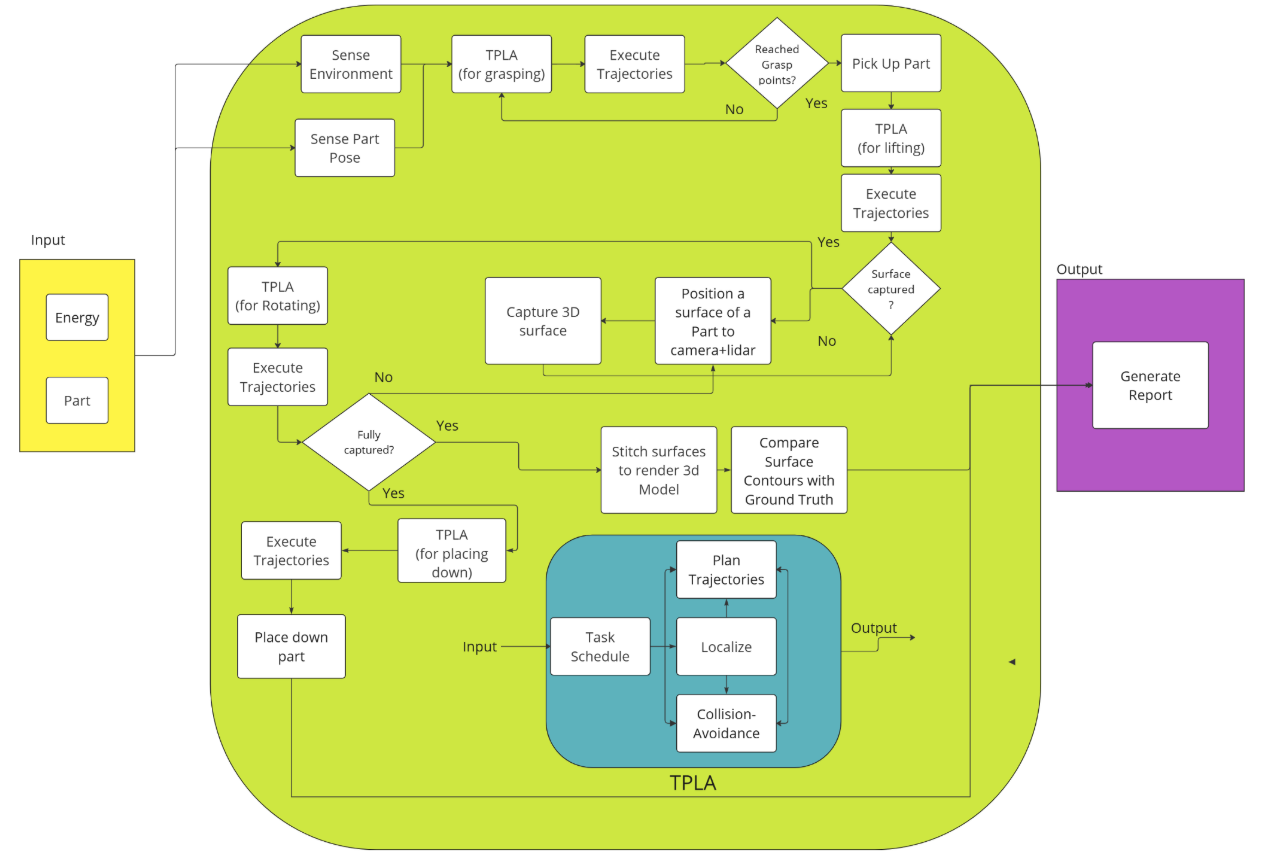

Cyberphysical Architecture

System Design

Overall System

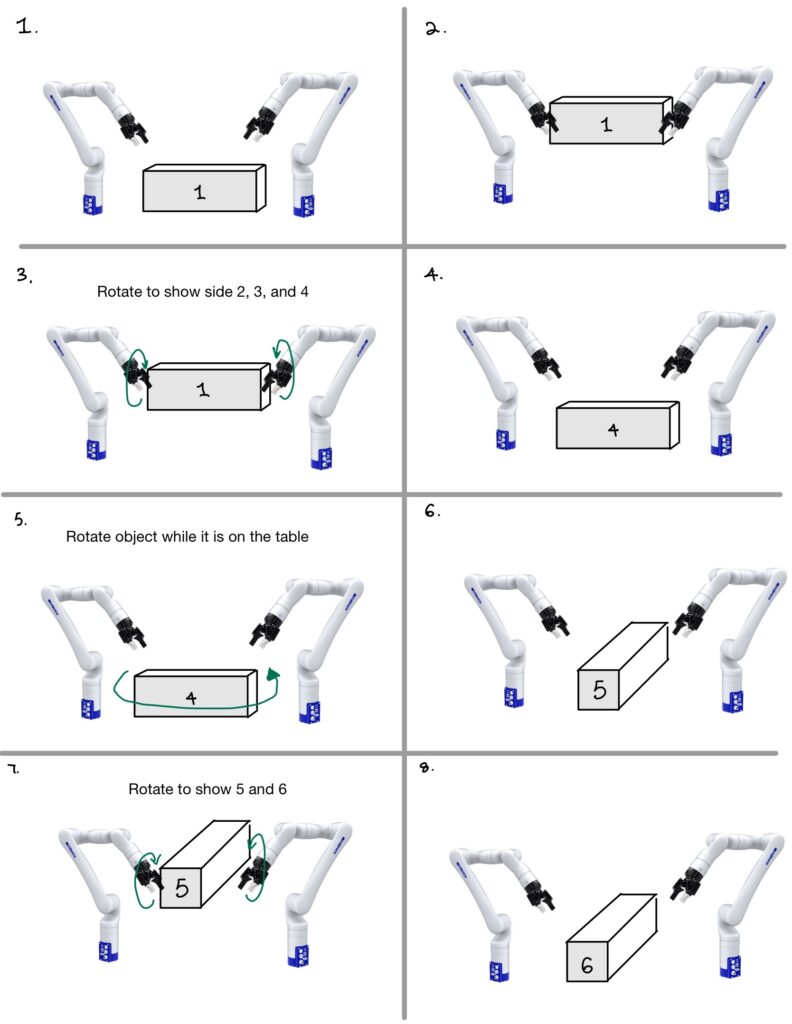

The overall system consists of two Kinova arms mounted on a work table. For safety, the arms include an E-stop and can be teleoperated. The manipulators work in coordination to manipulate objects in mid-air so that our perception system can produce a 3D reconstruction of the object. One camera, used for the 3D reconstruction, views the workspace from a direction perpendicular to the robot arms. A second, overhead camera is used for estimating the pose of the object and for environmental awareness.

Perception Subsystem

The perception subsystem is responsible for detecting the 6-DoF pose (3D position and orientation) of the objects being manipulated. The inferred pose is used to identify grasp points for the manipulators within the workspace and serves as feedback to the planning subsystem for executing the designated manipulation policy.
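
To illustrate how an estimated pose yields grasp points in the workspace, the sketch below maps a point defined in the object frame into the workspace frame. It is a minimal example under assumptions that are not from the design itself: the pose is given as a translation plus roll-pitch-yaw angles, and the helper names (`rotation_from_rpy`, `transform_point`) are hypothetical.

```python
import math

def rotation_from_rpy(roll, pitch, yaw):
    """Rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll), as nested lists."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def transform_point(pose, point):
    """Map a point from the object frame into the workspace frame.

    Assumption: pose = ((x, y, z), (roll, pitch, yaw)), with metres and radians.
    """
    translation, (roll, pitch, yaw) = pose
    R = rotation_from_rpy(roll, pitch, yaw)
    # p_workspace = R * p_object + t
    return tuple(
        sum(R[i][j] * point[j] for j in range(3)) + t
        for i, t in enumerate(translation)
    )

# Example: an object 0.5 m along x, rotated 90 degrees about the vertical axis,
# with a grasp point 5 cm along the object's own x axis.
object_pose = ((0.5, 0.2, 0.1), (0.0, 0.0, math.pi / 2))
grasp_in_workspace = transform_point(object_pose, (0.05, 0.0, 0.0))
```

With this pose, the grasp point lands 5 cm along the workspace y axis from the object origin, because the yaw rotation turns the object's x axis onto the workspace y axis.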

The perception subsystem is also responsible for 3D reconstruction, which it performs by segmenting, tracking, and capturing depth data of the objects manipulated by the robotic arms. After considering many options, we have settled on a ZED Gen 1 stereo camera and are transitioning from the ZED SDK to OpenCV and Python. This camera will be placed on the opposite end of the workstation to ensure that both arms and the entire object are in its field of view. The reconstruction will be performed after the motion strategy has been completed.
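
The depth data from a stereo camera follows from standard rectified-stereo geometry: depth is focal length times baseline divided by disparity. The sketch below shows only that geometry in plain Python. It is not the project's pipeline; in practice a library such as OpenCV would compute the disparity map. The intrinsics used in the example (focal length, principal point, baseline) are illustrative placeholders, not calibrated values for the actual camera.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth in metres from disparity in pixels, for a rectified stereo pair.

    Returns None for non-positive disparity (no valid match).
    """
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px

def back_project(u, v, depth_m, focal_px, cx, cy):
    """Back-project pixel (u, v) at a given depth into a camera-frame 3D point.

    Assumption: pinhole model with equal focal length in x and y.
    """
    x = (u - cx) * depth_m / focal_px
    y = (v - cy) * depth_m / focal_px
    return (x, y, depth_m)

# Placeholder intrinsics for illustration only.
FOCAL_PX, CX, CY, BASELINE_M = 700.0, 640.0, 360.0, 0.12

depth = disparity_to_depth(84.0, FOCAL_PX, BASELINE_M)
point = back_project(990.0, 360.0, depth, FOCAL_PX, CX, CY)
```

Because depth is inversely proportional to disparity, depth resolution degrades with distance, which is one reason to keep the object reasonably close to the reconstruction camera.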

Planning and Control Subsystem

Motion planning lets the robot arms rotate the object and present every side to the perception subsystem so that it can detect defects.

The objective of the control system is to take the trajectory planned by the global planner and track it with both arms to pick up the test sample. Once the test sample is picked up, the controller has to follow the trajectory calculated from the feedback policy of the vision system. Sim-to-real transfer will leave residual effects, such as slipping of the test sample, which the controller has to account for and compensate for.
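
As a rough illustration of why feedback is needed to compensate for such residual effects, the sketch below tracks a one-dimensional joint trajectory with feedforward velocity plus proportional feedback. It is a toy model and not the project's controller: slip is modelled as a constant velocity disturbance, and the gain, time step, and function name (`track_trajectory`) are all assumptions made for the example.

```python
import math

def track_trajectory(waypoints, dt=0.01, kp=5.0, disturbance=0.0):
    """Track a 1-D joint trajectory; return the final absolute tracking error.

    Assumption: the joint is velocity-controlled and the disturbance
    (a stand-in for slip) adds a constant velocity offset.
    """
    q = waypoints[0]  # start exactly on the trajectory
    for q_des, q_next in zip(waypoints, waypoints[1:]):
        # Feedforward: velocity needed to reach the next waypoint in one step.
        v_ff = (q_next - q_des) / dt
        # Feedback: correct the currently measured tracking error.
        v_cmd = v_ff + kp * (q_des - q)
        # Integrate the commanded velocity plus the unmodelled disturbance.
        q += (v_cmd + disturbance) * dt
    return abs(waypoints[-1] - q)

# A smooth reference trajectory sampled every 10 ms for 5 seconds.
reference = [math.sin(0.01 * k) for k in range(501)]

error_open_loop = track_trajectory(reference, kp=0.0, disturbance=0.1)
error_closed_loop = track_trajectory(reference, kp=5.0, disturbance=0.1)
```

In this model the open-loop error grows with time, while proportional feedback holds it near `disturbance / kp`. Removing the remaining offset would take an integral term or an explicit slip estimate from the vision system.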