1. Human Detection

We mainly used AprilTag for human detection. In the one-robot, one-human system we do not need the entire map; we only need the position and orientation of the human relative to Turtlebot 1. Fortunately, the AprilTag library provided by Professor Michael Kaess of CMU gives a quite accurate relative position and orientation of the tag. The library reports x, y, z and roll, pitch, yaw, and it also gives the Euclidean distance. The navigation node we set up uses this information to reduce the relative pitch and to bring the relative Euclidean distance to 100 cm. As a result, Turtlebot 1 (the central Turtlebot) stops 100 cm from the human and faces the human directly.
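The behaviour of the navigation node described above can be illustrated with a simple proportional controller. This is only a sketch under stated assumptions: the gains, the speed limits, the sign of the angular correction and the function name `approach_command` are hypothetical and are not taken from our navigation node.

```python
TARGET_DISTANCE_M = 1.0  # stop 100 cm from the tag, as stated in the text
K_LINEAR = 0.5           # hypothetical proportional gain for forward speed
K_ANGULAR = 1.0          # hypothetical proportional gain for turning
MAX_LINEAR = 0.3         # hypothetical forward speed limit (m/s)
MAX_ANGULAR = 1.0        # hypothetical turn rate limit (rad/s)


def clamp(value, limit):
    """Limit value to the range [-limit, +limit]."""
    return max(-limit, min(limit, value))


def approach_command(distance_m, pitch_rad):
    """Return (linear, angular) velocities that shrink both errors.

    distance_m: Euclidean distance to the tag reported by the tag library.
    pitch_rad:  relative pitch of the tag; the sign convention is assumed.
    """
    linear = clamp(K_LINEAR * (distance_m - TARGET_DISTANCE_M), MAX_LINEAR)
    angular = clamp(-K_ANGULAR * pitch_rad, MAX_ANGULAR)
    return linear, angular
```

With these assumed gains the robot drives forward while it is farther than 1 m from the tag, backs up when it is closer, and stops turning once the relative pitch reaches zero.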

2. Facial Expression Recognition with IntraFace:

IntraFace is a robust facial expression recognition package developed by Professor Fernando De la Torre of the Human Sensing Laboratory at CMU. This state-of-the-art software detects 49 interest points on a human face, together with the gaze direction and the head pose. The Human Sensing Laboratory granted us a license for our project, and we obtained a stable version of IntraFace that connects to ROS, which makes it easy to integrate into our system.

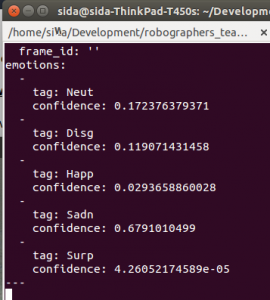

As shown in Fig. 2, the software reports 5 facial expressions, each as a percentage giving the probability of that expression: neutral, disgust, happiness, sadness, and surprise. IntraFace also provides the head pose and the landmark positions.

Fig. 2. Intraface description.

3. Extracting Person-of-Interest:

(a). Pipeline Design

The core of the multi-person problem is how to keep detecting the same person from the beginning to the end of the process without switching to someone else. Since we cannot change the core code of IntraFace, our approach is to extract the part of the frame that contains the person with the AprilTag and send only this image message to IntraFace, so that IntraFace sees a single face and reports only that person’s facial expression.

(b). Implementation

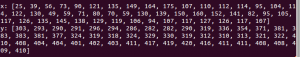

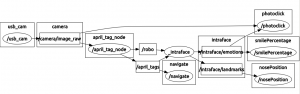

One problem is where to place the cropped frame around the person with the AprilTag. In the x direction, we assume that the person’s face has the same x coordinate as the AprilTag, so we use the tag’s x position as the center of the face. We adjust the size of the frame according to the distance from the camera to the person. Because the Turtlebot stops 1 meter away from the person with the AprilTag, we tried different values and found that a frame 200 pixels wide in the x direction performs best. The rqt_graph is shown in Fig. 3.

Fig. 3. rqt_graph of detection subsystem.
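The horizontal cropping step described above can be sketched as follows. The 200-pixel width and the use of the tag’s x coordinate as the crop center come from the text; the function names, the clamping at the image border and the list-of-rows image representation are assumptions made for illustration.

```python
CROP_WIDTH_PX = 200  # width reported in the text for a subject about 1 m away


def crop_columns(tag_x_px, image_width_px, crop_width_px=CROP_WIDTH_PX):
    """Return (left, right) column bounds of a crop centred on the tag's x.

    The window is shifted inwards when it would cross the image border,
    so the returned crop always has the requested width (assumed behaviour).
    """
    crop_width_px = min(crop_width_px, image_width_px)
    left = int(round(tag_x_px - crop_width_px / 2))
    left = max(0, min(left, image_width_px - crop_width_px))
    return left, left + crop_width_px


def crop_rows(image_rows, left, right):
    """Keep only columns [left, right) of an image given as a list of rows."""
    return [row[left:right] for row in image_rows]
```

For a 640-pixel-wide frame with the tag at x = 320, `crop_columns(320, 640)` selects columns 220 to 420; the cropped rows would then be published as the image message that IntraFace receives.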

(c). Results

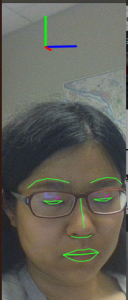

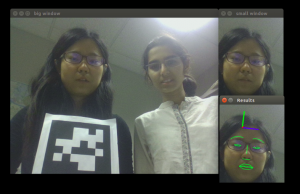

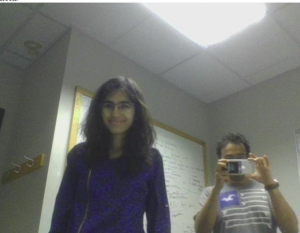

The result of the detection system can be seen in Fig. 4. Sida was holding the AprilTag and Gauri was not. The “big window” shows the whole frame, and the “small window” shows the human face above the AprilTag, so Gauri is not extracted. The “Results” window shows the IntraFace detection running on the image message displayed in the “small window”. IntraFace therefore detects only Sida’s face, because Sida was holding the AprilTag.

Fig. 4. Extracting the region of interest and publishing it to IntraFace.

As shown in Fig., although IntraFace only detects the person with the AprilTag, the camera still captures the whole scene for the final photo, because a photo containing only a human face would look strange.

4. Photo Clicking:

(a). Pipeline design

The basic idea of the photo-clicking function is that once a person detected by IntraFace has been smiling for 2 seconds, the system automatically takes his or her smiling photo.

(b). Implementation

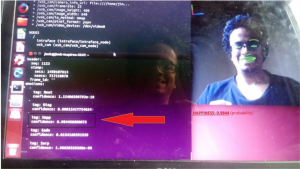

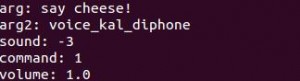

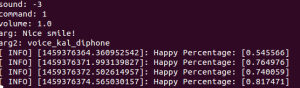

Once the system started receiving the topics in a synchronous manner, the photo-clicking implementation became straightforward. IntraFace’s emotion topic is an array of six floating-point numbers, each holding the probability of one basic human emotion based on the expression: Sad, Disgust, Happy, Anger, Neutral, and Surprise, as shown in Fig. 5.

Fig. 5. Facial expression provided by Intraface.

Fig. 6. Photo Clicking for smiling person.

We extract the third element of the array, which is the probability of the Happy expression. IntraFace gives high probability values for very explicit emotions, which is what we want to capture. The goal of the project is to capture obvious smiling faces, and IntraFace recognizes them well, giving probability values as high as 0.99996 for a clearly smiling face. To improve stability and make sure that the person is really smiling, we check that the smile lasts for 3 seconds, and only then is the photo taken. This ensures a good-quality photo. The photo is also more pleasing because the face is kept in the center of the frame by the pan-tilt face-tracking algorithm we created during fall validation. The final output of the photo-clicking node is shown in Fig. 6.
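The smile-duration check can be sketched as a small state holder that is fed each emotion array together with a timestamp. The index of the Happy value and the 3-second hold come from the text above; the probability threshold of 0.9, the class name `SmileHoldDetector` and the re-arming behaviour after a photo are assumptions, not the contents of the photo-clicking node.

```python
HAPPY_INDEX = 2        # third element of the emotion array, per the text
HAPPY_THRESHOLD = 0.9  # hypothetical cut-off for an "obvious" smile
HOLD_SECONDS = 3.0     # smile duration required before the photo is taken


class SmileHoldDetector:
    """Signal a photo once the Happy probability stays high long enough."""

    def __init__(self, threshold=HAPPY_THRESHOLD, hold_seconds=HOLD_SECONDS):
        self.threshold = threshold
        self.hold_seconds = hold_seconds
        self.smile_start = None  # time at which the current smile began

    def update(self, emotions, now):
        """Process one emotion array; return True when a photo is due."""
        if emotions[HAPPY_INDEX] < self.threshold:
            self.smile_start = None  # smile interrupted, start over
            return False
        if self.smile_start is None:
            self.smile_start = now
        if now - self.smile_start >= self.hold_seconds:
            self.smile_start = None  # re-arm so one smile gives one photo
            return True
        return False
```

Keeping the hold time as a parameter makes it easy to try other durations without touching the rest of the logic.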

5. Voice Command:

(a). Pipeline design

The general idea of the voice command functionality is that, after IntraFace has been launched, if the person does not smile for some time (for example, 5 seconds), the master computer plays the voice prompt “say cheese” to the person. This repeats if the person still does not smile, so the system says “say cheese” every 5 seconds until he or she smiles. After the person has smiled for 2 seconds, the system plays a second voice prompt, “nice smile”, and the smiling photo is taken.
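The prompt timing described above can be sketched independently of ROS as a small scheduler that returns the phrase to speak, if any, at each update. The 5-second interval and the two phrases come from the text; the class name `PromptScheduler` and the `smile_held` flag (assumed to be set by whatever component decides that the smile has lasted long enough) are hypothetical.

```python
PROMPT_INTERVAL_S = 5.0  # remind the person every 5 seconds without a smile


class PromptScheduler:
    """Decide when to say "Say cheese" and when to say "Nice smile"."""

    def __init__(self, interval=PROMPT_INTERVAL_S):
        self.interval = interval
        self.last_event = None  # time of start-up, last smile or last prompt

    def update(self, smiling, smile_held, now):
        """Return the phrase to speak at time `now`, or None for silence."""
        if self.last_event is None:
            self.last_event = now  # first update marks the start of timing
        if smile_held:
            self.last_event = now
            return "Nice smile"
        if smiling:
            self.last_event = now  # any smile resets the no-smile timer
            return None
        if now - self.last_event >= self.interval:
            self.last_event = now
            return "Say cheese"
        return None
```

In a ROS node the returned phrase would be handed to the speech output, and the first call to `update` would be made when the first emotion message arrives.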

(b). ROS package: sound_play

ROS provides a package for audio output called <sound_play>. The sound_play node considers each sound (built-in, wave file or synthesized text) as an entity that can be playing, playing repeatedly or stopped. Nodes change the state of a sound by publishing to the robotsound topic. Multiple sounds can be played at once. Thanks to this package, I did not have to set everything up myself; I only had to install the package and learn how to use its interface.

(c). Use <sound_play> package in our project

We modified the ‘photoclick.cpp’ code to add the voice command functionality. First we included sound_play.h in the code, which lets us call “sc.say(“Say Cheese”);” to play the voice prompt, as shown in Fig. 7. Once the node has received a smiling percentage, which indicates that IntraFace has launched, it starts counting time. If the person does not smile for 5 seconds, it runs “sc.say(“Say Cheese”);” and repeats this until the person smiles; it then runs “sc.say(“Nice smile”);”, as shown in Fig. 8.

Fig. 7. Voice command to send “Say cheese” signal when person doesn’t smile.

Fig. 8. Voice command to send “Nice Smile” signal when person smiles.

6. Human Face Tracking:

The Arduino subscribes to IntraFace to get the y value of the person’s nose and to the AprilTag node to get the x value. It uses these two values to control the pan-tilt unit for face tracking.

The original algorithm tries to keep the nose at the central point, but the servo motor moves one degree at a time. One degree can be too much, so the motor overshoots and loses the target, moves back, and loses it again. That is why the servos kept vibrating all the time. We solved the problem by putting a rectangle inside the proportional tracker.

Here we go through a simplified version of the proportional tracker to make this clear. First we take the whole image from IntraFace and convert pixel coordinates to the corresponding servo angles to keep tracking the face. From experiment and monitoring, the pan angle ranges from 55 to 125 degrees for X pixels from 0 to 700, and the tilt angle ranges from 0 to 25 degrees for Y pixels from 0 to 450. The values were distorted, however, during transfer from the laptop to the Arduino board; we even saw a Y pixel value of minus 50 on the Arduino monitor. A linear conversion similar to the Celsius-Fahrenheit formula solved this problem, and a low-pass filter was used to smooth the noise introduced in transmission from IntraFace to the testing board.
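The pieces of the tracker can be sketched as below. The pixel and angle ranges are the ones measured above; everything else is an assumption made for illustration: that angles increase with pixel values, that out-of-range readings are clamped, the filter coefficient, and the reading of the “rectangle” as a dead zone around the image center inside which the servos are left alone. The actual tracker runs on the Arduino; Python is used here only to show the arithmetic.

```python
def linear_map(value, in_min, in_max, out_min, out_max):
    """Map value from one range to another with a straight-line formula."""
    value = max(in_min, min(in_max, value))  # clamp readings such as -50
    return out_min + (value - in_min) * (out_max - out_min) / (in_max - in_min)


def pixel_to_pan(x_px):
    """X pixel 0..700 -> pan angle 55..125 degrees (direction assumed)."""
    return linear_map(x_px, 0, 700, 55, 125)


def pixel_to_tilt(y_px):
    """Y pixel 0..450 -> tilt angle 0..25 degrees (direction assumed)."""
    return linear_map(y_px, 0, 450, 0, 25)


class LowPassFilter:
    """First-order exponential smoothing of a noisy signal."""

    def __init__(self, alpha=0.3):  # alpha is a hypothetical coefficient
        self.alpha = alpha
        self.state = None

    def update(self, sample):
        if self.state is None:
            self.state = sample  # first sample initialises the filter
        else:
            self.state += self.alpha * (sample - self.state)
        return self.state


def in_dead_zone(x_px, y_px, center=(350, 225), half_size=(40, 30)):
    """True when the nose lies inside the rectangle (sizes hypothetical)."""
    return (abs(x_px - center[0]) <= half_size[0]
            and abs(y_px - center[1]) <= half_size[1])
```

A tracking loop built from these parts would filter the incoming pixel values, skip the servo update while `in_dead_zone` is true, and otherwise command the mapped pan and tilt angles.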

7. Best photo comparator (multiple Robot system)

In our system, we have multiple robots, each running an IntraFace node that publishes the head pose and the smiling expression. The robots are placed at -30, 0 and 30 degrees with respect to the person. Each Turtlebot views the face from a different angle and sees different features, and our system has to determine which robot can best capture the expression of the face.

Each Turtlebot sends its happy emotion value and head pose value to the central server, where a best photo comparator node compares the robots. The comparison uses a cost function of both the head pose and the emotion probability. The Turtlebot with the highest cost-function value is the one that captures the best picture, and it is sent a flag that triggers it to take a photo as explained in the sections above.
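The exact form of the cost function is not given here, so the following is only a hypothetical example of how such a comparison could work: a weighted sum of the happy probability and a “frontalness” term derived from the head yaw. The weights, the cosine term, the function names and the robot names are all assumptions.

```python
import math


def photo_score(happy_prob, head_yaw_deg, w_emotion=0.5, w_pose=0.5):
    """Hypothetical score: higher for a stronger smile and a more frontal face."""
    frontalness = max(0.0, math.cos(math.radians(head_yaw_deg)))
    return w_emotion * happy_prob + w_pose * frontalness


def pick_best_robot(reports):
    """Return the robot whose report scores highest.

    reports maps a robot name to a (happy_prob, head_yaw_deg) tuple.
    """
    return max(reports, key=lambda name: photo_score(*reports[name]))


# Example with made-up values for three robots placed around the person.
reports = {
    "turtlebot_left": (0.90, 30.0),
    "turtlebot_center": (0.95, 0.0),
    "turtlebot_right": (0.80, -30.0),
}
best = pick_best_robot(reports)  # this robot would receive the photo flag
```

In this made-up example the central robot wins because it sees both the strongest smile and the most frontal face; with different weights, a robot with a slightly weaker smile but a better head pose could be chosen instead.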

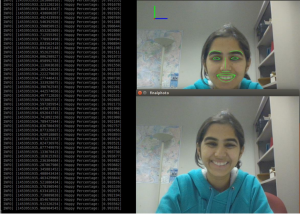

Figure: Person facing three cameras (laptop webcams). All three detect her face and publish the emotion value and head pose value to the central hub.

Figure: The camera that best captures the emotion and the head pose takes the photo (this is the result after photo-clicking).
