The analysis and testing were carried out with the requirements in mind, so we restate them here. The two major subsystems to be verified were:

- Human Detection and Navigation

- Face and Expression Detection

Subsystem 1: Human Detection & Navigation

Requirements to be fulfilled

- Detect a human in the vicinity

- Approach the human once detected

- Move at a speed of 15 cm/s

- Stop 1 meter away from the human

The following questions were framed so that answering them establishes whether the above requirements are fulfilled. If the subsystem's performance answers “yes” to all of them, we can confidently say that the subsystem works.

Testing criteria for the Human Detection and Navigation subsystem:

- Does the robot rotate in place?

- Does the robot stop rotating in place immediately when the AprilTag is detected, at least 70 percent of the time?

- Does the robot move toward the AprilTag?

- Does the robot stop 1 meter away from the human?

- How much does the final distance deviate from 1 m?

- Does the robot stop face to face with the human (no relative orientation difference)?
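The criteria above can be checked mechanically once the trials are logged. The following is a minimal sketch, assuming each trial records whether the robot stopped rotating on tag detection and the measured final distance to the human. The `Trial` record, the function names, and the sample values are hypothetical illustrations, not the team's test code or measurements. The 10 percent tolerance is taken from the error bound reported for the stopping distance.

```python
from dataclasses import dataclass

TARGET_DISTANCE_M = 1.0      # required stopping distance from the human
MIN_STOP_RATE = 0.70         # robot must stop rotating in at least 70 percent of trials
MAX_RELATIVE_ERROR = 0.10    # assumed tolerance on the stopping distance (10 percent)


@dataclass
class Trial:
    """One logged test run (hypothetical record layout)."""
    stopped_on_tag: bool     # robot stopped rotating when the tag was detected
    final_distance_m: float  # measured distance to the human after stopping


def stop_rate(trials):
    """Fraction of trials in which the robot stopped rotating on detection."""
    return sum(t.stopped_on_tag for t in trials) / len(trials)


def relative_errors(trials):
    """Deviation of each final distance from the target, relative to the target."""
    return [abs(t.final_distance_m - TARGET_DISTANCE_M) / TARGET_DISTANCE_M
            for t in trials]


def evaluate(trials):
    """Summarize the trials against the two quantitative criteria."""
    rate = stop_rate(trials)
    worst = max(relative_errors(trials))
    return {
        "stop_rate": rate,
        "max_relative_error": worst,
        "passes": rate >= MIN_STOP_RATE and worst <= MAX_RELATIVE_ERROR,
    }


# Placeholder values for illustration only, not measured results.
example_trials = [
    Trial(True, 1.04),
    Trial(True, 0.95),
    Trial(False, 1.08),
    Trial(True, 1.00),
]
summary = evaluate(example_trials)
```

Using the worst-case deviation rather than the mean is a deliberately strict choice here, so that a single run that stops far from 1 m fails the check.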

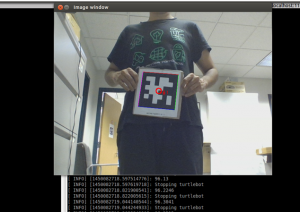

Figure 1: Jimit testing the Navigation subsystem against the requirements

Figure 2: Measured values show that the TurtleBot stops 1 meter from the human with no more than 10 percent error

Subsystem 2: Face & Smile Expression Detection

Requirements checked

- Detect a face

- The pan-tilt unit tracks the face using the head pose estimate from IntraFace

- Accurate smile expression detection

Similarly, for this subsystem the following questions were framed so that answering them establishes whether the above requirements are fulfilled. If the subsystem's performance answers “yes” to all of them, we can confidently say that the subsystem works.

Testing criteria for face detection and expression detection:

- Does IntraFace detect the face at least 80 percent of the time?

- Does the pan-tilt unit adjust itself so that the face is in the center of the frame?

- Do we get an expression output every time?

- How much time does the above task take?
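The detection-rate, expression-output, and timing criteria above can be measured with a small harness. The following is a minimal sketch, assuming the face pipeline can be wrapped in a callable that returns, per frame, whether a face was found and a smile probability (or `None` when no expression output is produced). The `measure` function and the stand-in `fake_process_frame` are hypothetical; they do not call or represent the IntraFace API.

```python
import time

MIN_DETECTION_RATE = 0.80  # face must be detected in at least 80 percent of frames


def measure(process_frame, frames):
    """Run the pipeline over all frames and collect rate and timing figures.

    process_frame(frame) must return (face_found, smile_probability), where
    smile_probability is None if no expression output was produced.
    """
    detected = 0
    with_expression = 0
    start = time.perf_counter()
    for frame in frames:
        face_found, smile_probability = process_frame(frame)
        if face_found:
            detected += 1
            if smile_probability is not None:
                with_expression += 1
    elapsed = time.perf_counter() - start
    total = len(frames)
    return {
        "detection_rate": detected / total,
        # Share of detected faces for which an expression output was produced.
        "expression_rate": with_expression / detected if detected else 0.0,
        "mean_seconds_per_frame": elapsed / total,
    }


def fake_process_frame(frame):
    """Stand-in pipeline: frames are integers, and every fifth one has no face."""
    if frame % 5 == 0:
        return False, None
    return True, 0.5


report = measure(fake_process_frame, list(range(1, 11)))
meets_detection_criterion = report["detection_rate"] >= MIN_DETECTION_RATE
```

Replacing `fake_process_frame` with a wrapper around the real pipeline would yield the same three figures for recorded camera frames. The timing then includes whatever the wrapper does per frame.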

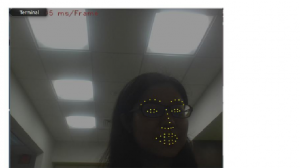

Figure 3: Output of IntraFace showing 49 interest points of a face


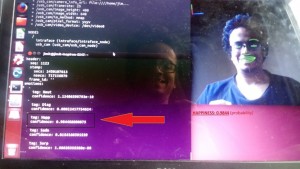

Figure 4: IntraFace expression output showing the probability that the subject (Jimit) is smiling
