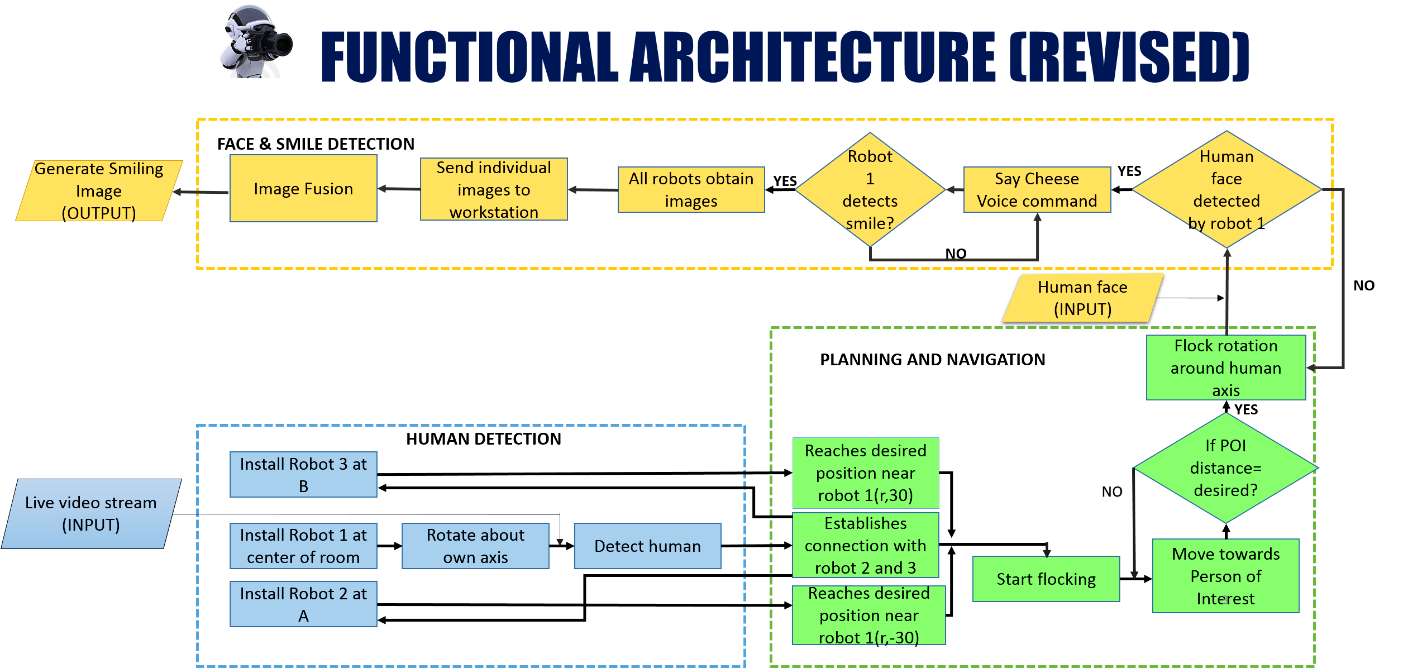

The functional architecture of Robographers is shown in Figure 2. It is divided into three subsystems: human detection, planning and navigation, and face and smile detection. The figure places the inputs and outputs on the left and the internal architecture on the right, enclosed in three boxes, one for each subsystem.

Workflow:

1. Turtlebots 1, 2 and 3 will be placed at predefined locations in the specified room. Turtlebot 1 will be at the center of the room, and robots 2 and 3 will be at any two corners of the room.

2. Robot 1 at the center of the room will rotate about its own axis.

3. While rotating, the web camera on robot 1 will capture a live video stream of the room (the input to the system).

4. The system will search the video feed for people in the room and will select the first person it detects.

5. Once this person is detected, the robot will stop rotating and remain stationary in the pose at which the detection occurred. This completes the work of the human detection subsystem. The detected human is the input to the subsequent planning and navigation subsystem.

6. After human detection, robot 1 will communicate with the other two robots. Robots 2 and 3 will perform relative localization and will look for robot 1 using the cameras mounted on them. After detecting the position of robot 1, they will navigate towards it and take up their predefined positions relative to robot 1.

7. All three robots will form a flock and move collaboratively towards the person of interest detected by robot 1, while performing relative localization.

8. The flock will stop moving when it reaches the predefined desired distance from the person of interest (1 meter). The robots will keep the same relative arrangement decided in step 6. Each of the three robots will therefore be 1 meter from the person, at angles of -30 degrees (robot 2), 0 degrees (robot 1) and 30 degrees (robot 3). At the 0 degrees position, robot 1 stands 1 meter from the person of interest, with the person facing its camera directly.

9. If the person turns his or her head, the flock will rotate around the person, with the camera on Turtlebot 1 trying to find the person's face. The robots will then reorient themselves around the person at -30 degrees, 0 degrees and 30 degrees, as explained in step 8.

10. After the face is detected, the person will hear a voice request from the system, 'say cheese', asking him or her to smile. Face detection will be carried out using the motor movements of the pan-tilt camera unit.

11. When the camera on robot 1 detects a smile and the smile estimate is greater than 80% (the predefined threshold), the cameras mounted on all three robots will take a photo.

12. The images captured by the robots will be sent back to the workstation, which will identify the common points in them and perform image fusion.

13. The final output will be an accurate and clear image of the smiling person.
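The formation geometry in step 8 and the capture condition in step 11 can be illustrated with a short sketch. This is not the project's implementation. It assumes a flat 2D map frame with counterclockwise-positive angles, a known facing direction for the person, and a smile estimate scaled to the range 0 to 1. The names `formation_targets`, `should_capture`, `STANDOFF_M`, `OFFSETS_DEG` and `SMILE_THRESHOLD` are hypothetical, and which side of the person counts as -30 degrees is also an assumption.

```python
import math

# Values taken from the workflow; the names themselves are illustrative.
STANDOFF_M = 1.0          # desired distance from the person (step 8)
OFFSETS_DEG = {"robot2": -30.0, "robot1": 0.0, "robot3": 30.0}  # step 8
SMILE_THRESHOLD = 0.80    # predefined smile threshold (step 11)


def formation_targets(person_x, person_y, facing_rad):
    """Return {robot_name: (x, y, heading)} goal poses around the person.

    Angles are measured from the direction the person is facing, so
    robot 1 (0 degrees) lands directly in front of the face. Each heading
    points back at the person so that the camera looks towards them.
    """
    targets = {}
    for name, offset_deg in OFFSETS_DEG.items():
        bearing = facing_rad + math.radians(offset_deg)
        x = person_x + STANDOFF_M * math.cos(bearing)
        y = person_y + STANDOFF_M * math.sin(bearing)
        heading = math.atan2(person_y - y, person_x - x)
        targets[name] = (x, y, heading)
    return targets


def should_capture(smile_estimate):
    """True when the smile estimate is strictly above the threshold."""
    return smile_estimate > SMILE_THRESHOLD


if __name__ == "__main__":
    # Person at the origin, facing along the +x axis.
    for name, (x, y, heading) in formation_targets(0.0, 0.0, 0.0).items():
        print(f"{name}: x={x:.3f} m, y={y:.3f} m, "
              f"heading={math.degrees(heading):.1f} deg")
    print("capture:", should_capture(0.85))
```

Because the targets are expressed relative to the person's facing direction, the reorientation in step 9 amounts to calling `formation_targets` again with the updated facing angle.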