Computer Vision Subsystem

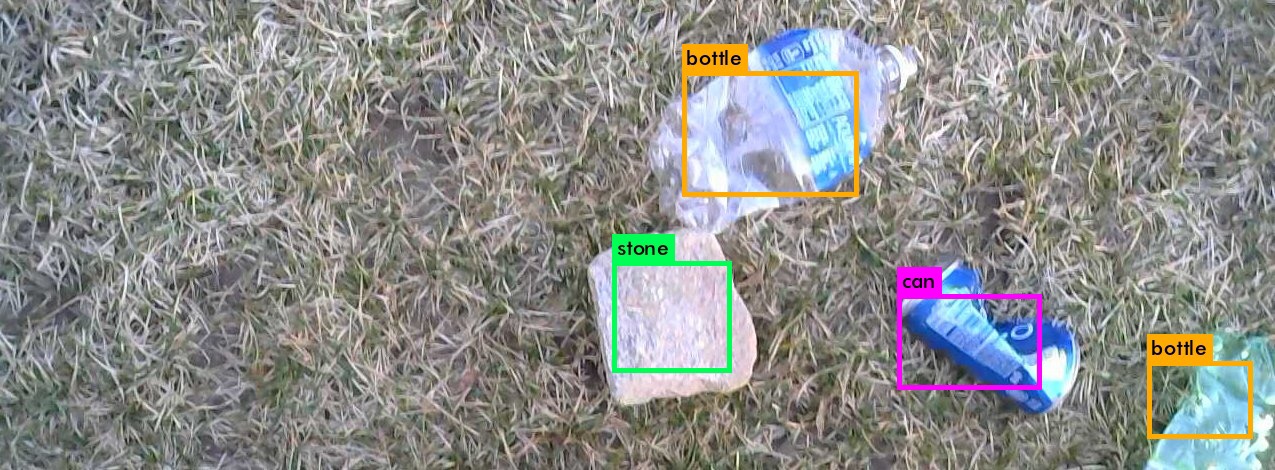

The goal of the computer vision subsystem is to build an object detection and classification system that can identify litter in the environment. The system helps Mell-E detect and identify cans, bottles, and other items before picking them up. Litter detection and classification must run in real time while remaining accurate.

This semester we focused only on litter detection. We tried several algorithms, each with a different trade-off between accuracy and computational speed, including image segmentation and feature extraction using SIFT. The segmentation algorithm was accurate but slow. Feature extraction was faster but inaccurate because of occlusion and noise from blades of grass. Our team therefore developed a heuristic method that turned out to work well and to be computationally light. It was fast enough for our purposes, at the cost of a small loss of accuracy under varying lighting conditions. Currently, the subsystem is divided into two stages: the first is object detection and angle estimation, and the second is object classification.

YOLO (You Only Look Once): Although we already have a working detection algorithm that satisfies the minimum requirements of the CV part, we are not confident that it will work stably once it is integrated with the other subsystems. We are therefore using our limited time to try another promising detection method: YOLO. YOLO applies a single neural network to the full image; the network divides the image into regions and predicts bounding boxes and probabilities for each region. The bounding boxes are weighted by the predicted probabilities. Because YOLO is much faster than R-CNN, and our team now has a laptop available, YOLO is a promising option for detection.

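
To make the weighting step concrete, here is a minimal sketch in plain Python of how a per-box confidence and per-class probabilities can be combined and thresholded. The `Box` type, the `score_boxes` helper, the sample numbers, and the 0.5 threshold are hypothetical and chosen only for illustration; they are not taken from our trained network or from the YOLO code base.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Box:
    """One predicted box: pixel corners plus the network's confidences."""
    x1: float
    y1: float
    x2: float
    y2: float
    objectness: float              # confidence that the box contains an object
    class_probs: Dict[str, float]  # conditional class probabilities


def score_boxes(boxes: List[Box], threshold: float = 0.5) -> List[Tuple[str, float, Box]]:
    """Weight each box by its best class probability and keep confident ones."""
    kept = []
    for box in boxes:
        label, prob = max(box.class_probs.items(), key=lambda kv: kv[1])
        score = box.objectness * prob
        if score >= threshold:
            kept.append((label, score, box))
    # Highest-scoring detections first.
    kept.sort(key=lambda item: item[1], reverse=True)
    return kept


# Made-up predictions: one confident can, one weak bottle candidate.
predictions = [
    Box(10, 20, 60, 90, 0.9, {"can": 0.8, "bottle": 0.1, "stone": 0.1}),
    Box(200, 40, 230, 80, 0.3, {"can": 0.4, "bottle": 0.5, "stone": 0.1}),
]
detections = score_boxes(predictions)
```

With these sample values only the first box survives the threshold. A real pipeline would normally also suppress overlapping boxes, which is omitted here.
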
The outcome of YOLO

Downview camera: After the far range camera detects an object and estimates its angle, the robot base turns to that angle and moves toward the targeted object. When the targeted object appears in the camera frame, we output its x-y coordinates and tell the arm to pick it up through a custom ROS message. We then perform visual servoing to bring the can to the center of the frame, which makes it easier for the arm to pick up.

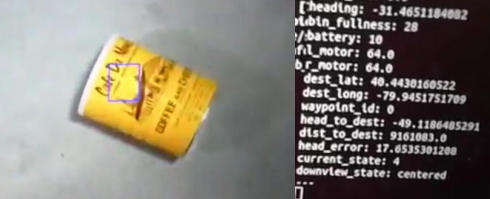


The output of downview camera

Downview camera visual servoing
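
The centering step of visual servoing can be sketched as a simple proportional controller on the pixel error. This is a minimal illustration in plain Python, not our ROS node: the function name `centering_command`, the gain, and the dead band are hypothetical, and the mapping (including the sign) from image axes to base or arm axes depends on how the camera is mounted.

```python
from typing import Tuple


def centering_command(
    target_xy: Tuple[float, float],
    frame_size: Tuple[int, int],
    gain: float = 0.002,         # hypothetical gain: command units per pixel
    dead_band_px: float = 10.0,  # hypothetical tolerance around the center
) -> Tuple[float, float, bool]:
    """Return (cmd_x, cmd_y, centered) for a target seen at pixel target_xy."""
    width, height = frame_size
    # Pixel error between the target and the image center.
    err_x = target_xy[0] - width / 2.0
    err_y = target_xy[1] - height / 2.0
    centered = abs(err_x) <= dead_band_px and abs(err_y) <= dead_band_px
    if centered:
        return 0.0, 0.0, True
    # The command opposes the error (assumed sign convention).
    return -gain * err_x, -gain * err_y, False


# A can seen 100 pixels right of center in a 640x480 frame.
cmd = centering_command(target_xy=(420.0, 240.0), frame_size=(640, 480))
```

The dead band keeps the controller from chattering once the can is close enough to the center for the arm to pick it up.
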

Promising approaches to improve detection/recognition that were investigated but not implemented in the final system:

- Pure computer vision techniques without machine learning.

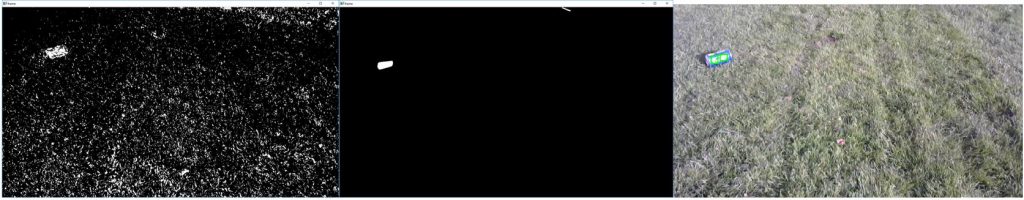

Far range camera: Object detection is performed with the far range camera and is based on background subtraction, contour finding, and merging of bounding boxes. Since the background of the locations Mell-E patrols is relatively uniform (mainly grass and sidewalks), we used OpenCV’s background-subtraction function to obtain a statistical estimate of the background and subtract it. We then estimated the contours of prominent objects that do not belong to the background. Because the base is continuously moving, these contours are noisy. To address this, we fit a minimum bounding rectangle to each contour and then merged the rectangles from the previous five frames to obtain a stabilized estimate of the object’s boundary.
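
The merging step can be illustrated without the camera. The sketch below, in plain Python, keeps a sliding window of rectangles from five frames and returns their union as the stabilized boundary. It assumes a single object and rectangles in the `(x, y, w, h)` form that OpenCV’s `cv2.boundingRect` returns. The `RectStabilizer` class is a hypothetical helper, and taking the union is only one possible merging rule, since the exact rule is not spelled out above.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, w, h)


class RectStabilizer:
    """Merge the rectangles seen in the most recent frames into one box."""

    def __init__(self, history: int = 5) -> None:
        # Old rectangles fall out automatically once the window is full.
        self._rects: Deque[Rect] = deque(maxlen=history)

    def update(self, rect: Optional[Rect]) -> Optional[Rect]:
        """Add this frame's rectangle (if any) and return the merged box."""
        if rect is not None:
            self._rects.append(rect)
        if not self._rects:
            return None
        left = min(x for x, _, _, _ in self._rects)
        top = min(y for _, y, _, _ in self._rects)
        right = max(x + w for x, _, w, _ in self._rects)
        bottom = max(y + h for _, y, _, h in self._rects)
        return (left, top, right - left, bottom - top)


stabilizer = RectStabilizer(history=5)
noisy = [(100, 50, 40, 60), (102, 48, 38, 63), (98, 52, 41, 58)]
merged = None
for r in noisy:
    merged = stabilizer.update(r)
```

A union grows with the jitter, so it is conservative; averaging the corners would give a tighter but less inclusive box.
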

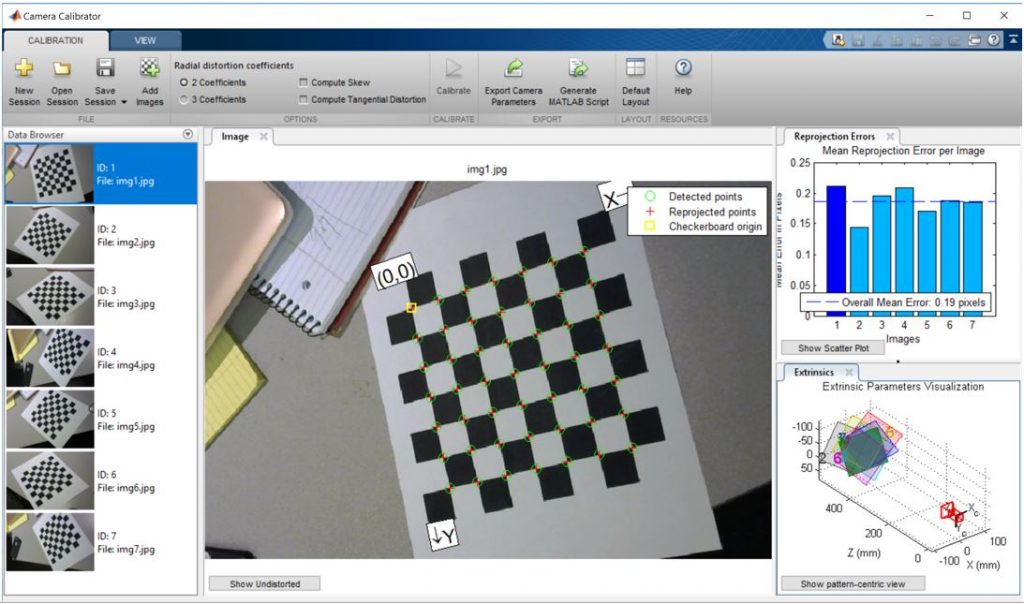

Object detection outcomes

To get the intrinsic matrix of the camera, we calibrated it with MATLAB’s cameraCalibrator tool.

Camera calibration

Next, we used the center point of each rectangle as the object’s x-y coordinate. Based on camera geometry, we calculated the relative angle between the object’s center and the camera’s center.
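
Under a pinhole camera model, the horizontal angle follows from the focal length and principal point stored in the intrinsic matrix. The sketch below is a minimal Python illustration of that geometry; the intrinsic values are made-up placeholders rather than our calibration results, the function name is hypothetical, and lens distortion is ignored.

```python
import math


def relative_angle_deg(u: float, fx: float, cx: float) -> float:
    """Horizontal angle (degrees) between the optical axis and pixel column u.

    Positive angles mean the object is to the right of the camera center line.
    """
    return math.degrees(math.atan2(u - cx, fx))


# Placeholder intrinsics (illustrative only, not calibration output).
FX = 320.0  # focal length in pixels
CX = 320.0  # principal point x-coordinate in pixels

angle_center = relative_angle_deg(320.0, FX, CX)  # object on the center line
angle_left = relative_angle_deg(0.0, FX, CX)      # object at the left image edge
```
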

Relative angle calculation

The current CV subsystem can detect the desired objects (focusing on cans) with above 75% accuracy in real-time capture, and it simultaneously gives the relative angle between each object and the camera’s center line. Next semester we will implement classification using neural networks to determine whether the detected objects are litter. The computer vision system will also need to be integrated with the other subsystems during the Spring semester.

2. TensorBox: TensorBox is an existing package built on TensorFlow. Its training process is similar to YOLO’s but easier. The following is the outcome of TensorBox:

3. Haar Detection: Besides the learning methods above, we also looked at Haar detection. The current far range detection sometimes misses cans, and because it relies on background subtraction and contour merging, it depends heavily on environmental conditions such as the state of the grass and the lighting. I was therefore looking for ways to improve it. So far I had not really used color information or region features to detect cans on grass, so the intuitive idea was to add these features to our existing mechanism. This led me to the Haar cascade method. A Haar-like feature considers adjacent rectangular regions at a specific location in a detection window, sums the pixel intensities in each region, and calculates the difference between these sums. This difference is then used to categorize subsections of an image. Many custom-trained examples for detecting different objects are available online; I found an already trained classifier for bottles and cans and, after setting up the environment, got the following detection outcome:

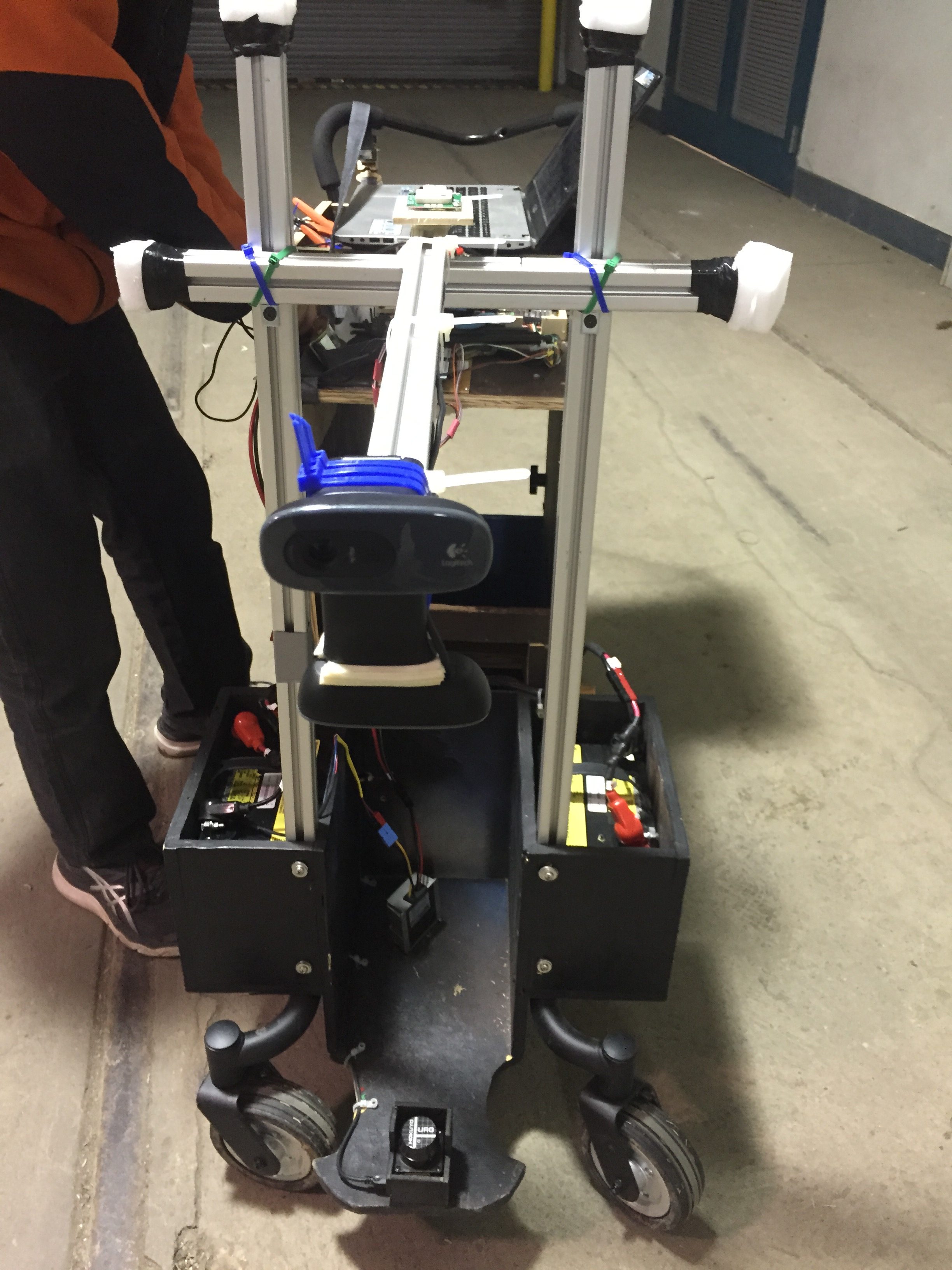
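
To make the Haar-like feature concrete, the sketch below computes a two-rectangle feature on a tiny grayscale patch using an integral image (summed-area table), the usual way to obtain rectangle sums cheaply. It is plain Python for illustration only: the function names and the sample patch are hypothetical, and a real cascade such as the one loaded by OpenCV’s `cv2.CascadeClassifier` evaluates many such features against trained thresholds.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> Image:
    """Summed-area table with an extra zero row and column."""
    rows, cols = len(img), len(img[0])
    sat = [[0] * (cols + 1) for _ in range(rows + 1)]
    for r in range(rows):
        for c in range(cols):
            sat[r + 1][c + 1] = img[r][c] + sat[r][c + 1] + sat[r + 1][c] - sat[r][c]
    return sat


def rect_sum(sat: Image, x: int, y: int, w: int, h: int) -> int:
    """Sum of pixel intensities in the rectangle with top-left corner (x, y)."""
    return sat[y + h][x + w] - sat[y][x + w] - sat[y + h][x] + sat[y][x]


def two_rect_feature(sat: Image, x: int, y: int, w: int, h: int) -> int:
    """Left-half sum minus right-half sum of a window of even width w."""
    half = w // 2
    return rect_sum(sat, x, y, half, h) - rect_sum(sat, x + half, y, half, h)


# Bright region next to a dark region, e.g. a can edge against grass.
patch = [
    [200, 200, 10, 10],
    [200, 200, 10, 10],
]
feature = two_rect_feature(integral_image(patch), x=0, y=0, w=4, h=2)
```

A large absolute value signals a strong vertical edge inside the window, while a uniform patch gives a value near zero.
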

Current CV subsystem status: We have now trained YOLO for both the far range camera and the down-view camera. We chose three kinds of objects: cans, bottles, and stones (non-litter), and collected 300 live images for each class at resolutions appropriate for the far range and down-view cameras. We mounted the two cameras as follows:

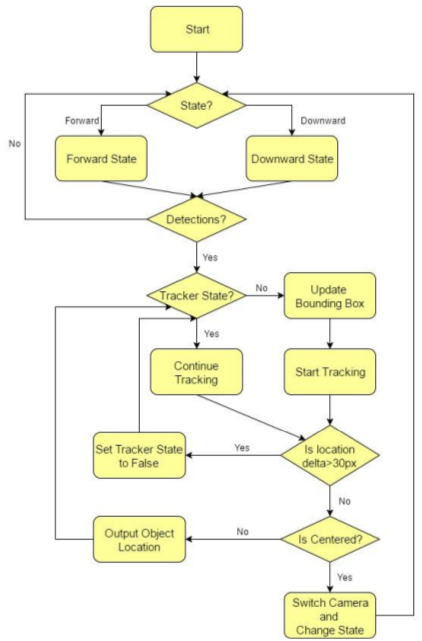

In our design, the far range camera detects possible target objects while the down-view camera classifies them, so we need a switching mechanism that keeps the far range and down-view cameras from interfering with each other. In addition, once YOLO detects an object, we use an object tracker built into OpenCV to keep tracking smooth and robust. Here is the overall mechanism:
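
As a rough illustration only, and not our actual implementation, a switching rule of this kind can be written as a two-state machine. The `Mode` names, the `next_mode` function, and the two boolean inputs are hypothetical assumptions; the tracker and the ROS messaging are omitted.

```python
from enum import Enum


class Mode(Enum):
    FAR_DETECT = "far_detect"        # far range camera searches for candidates
    DOWN_CLASSIFY = "down_classify"  # down-view camera classifies the object


def next_mode(mode: Mode, target_in_down_view: bool, pickup_finished: bool) -> Mode:
    """Decide which camera's output is used on the next cycle."""
    if mode is Mode.FAR_DETECT and target_in_down_view:
        # The base has reached the object; hand over to the down-view camera.
        return Mode.DOWN_CLASSIFY
    if mode is Mode.DOWN_CLASSIFY and (pickup_finished or not target_in_down_view):
        # Pickup is done or the object was lost; resume the far range search.
        return Mode.FAR_DETECT
    return mode


mode = Mode.FAR_DETECT
mode = next_mode(mode, target_in_down_view=True, pickup_finished=False)
```

Because only one mode is active at a time, detections from the inactive camera can simply be ignored, which is one way to keep the two cameras from interfering.
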
