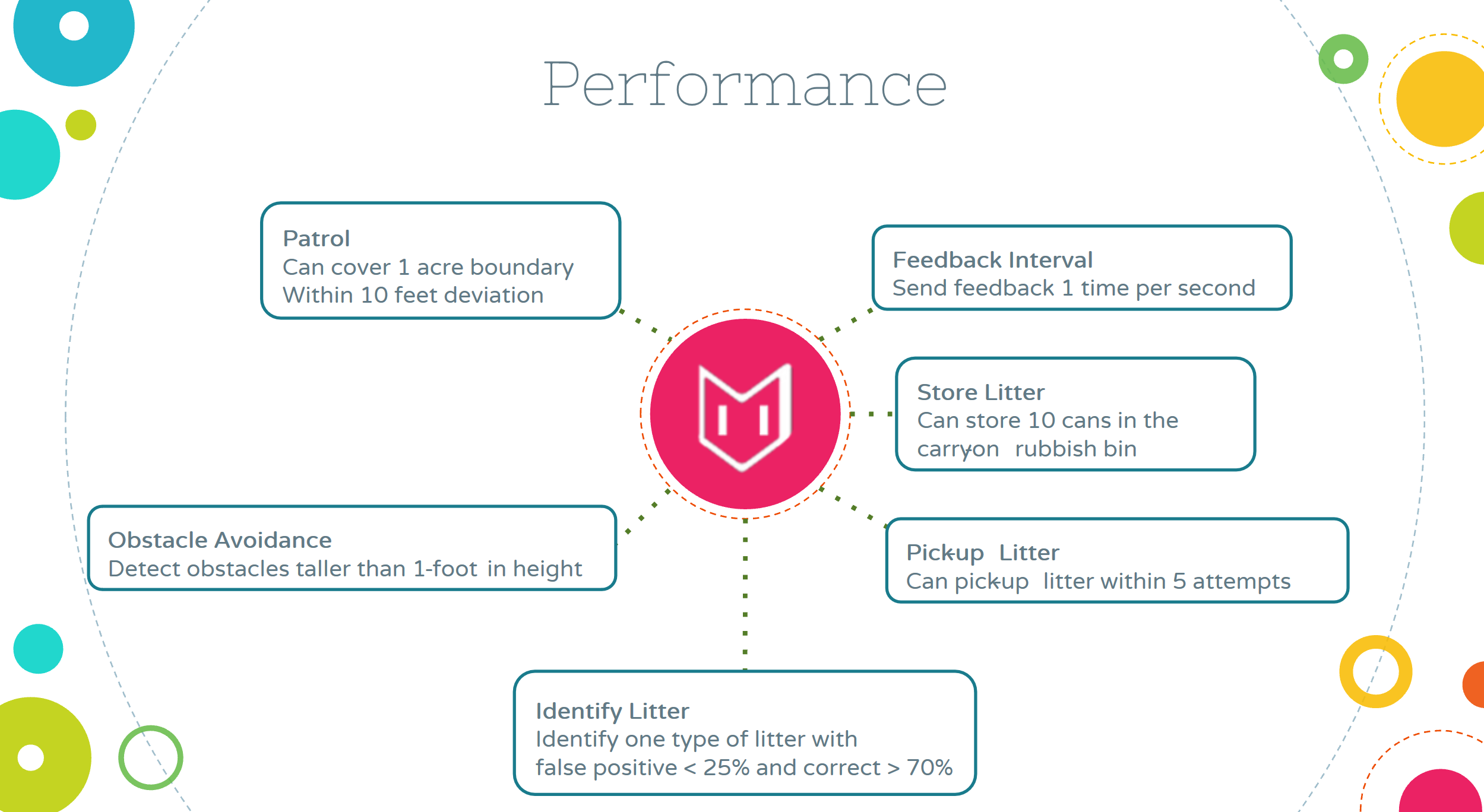

The final performance of Mell-E is yet to be determined; nevertheless, we have the following performance requirements which we hope to achieve by the end of the project term.

The figure above shows our performance requirements.

As of the Spring Validation Experiment, we have met all the performance requirements we set at the beginning of the project. The details are as follows:

Success Criteria:

- Mell-E should always stay within the boundary. If it leaves the boundary, it should not go more than 10 feet beyond the boundary.

- Show the path taken by Mell-E in the app as diagnostic data.

- Avoid obstacles without incurring damages. At the end of the test, inspect the test area, obstacles and Mell-E for any visible signs of damage.

- Mell-E should correctly pick up at least 3 out of the 4 litter items and should not pick up any non-litter items (simulated by stones).

- If the object is a soda can or bottle:

  - Move the arm to the litter.

  - Activate the vacuum to pick up the litter. Mell-E must pick up the litter in fewer than 5 tries.

  - Plan the arm path to the bin.

  - Move the arm to the bin and release the litter. The litter should fall into the bin.

  - Check bin fullness and update the server.

- If the object is not a soda can or bottle:

  - Ignore it and continue along the calculated coverage pattern.
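
The per-object procedure above can be read as a small decision routine. The sketch below is only an illustration: `handle_object`, `try_pickup`, the label strings, and the default limit of 5 attempts are assumed names and values, not Mell-E's actual software, and the arm, vacuum, and bin steps are collapsed into a single hypothetical callback.

```python
from typing import Callable

# Labels treated as litter; the criteria name soda cans and bottles.
LITTER_LABELS = {"soda can", "bottle"}


def handle_object(label: str, try_pickup: Callable[[], bool], max_tries: int = 5) -> str:
    """Decide what to do with one detected object.

    try_pickup is a hypothetical callback that moves the arm, activates the
    vacuum, and returns True if the litter was picked up.
    """
    if label not in LITTER_LABELS:
        # Non-litter (for example a stone): ignore it and keep covering the area.
        return "ignored"
    for _ in range(max_tries):
        if try_pickup():
            # The remaining steps (plan path to bin, release, update server)
            # would follow here in a full system.
            return "picked"
    return "failed"
```

The attempt limit is kept as a parameter so that it can be tightened or relaxed to match the requirement being tested.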

Test Results:

- Mell-E stayed within the boundary requirement: whenever it deviated, it was by no more than 10 feet. In one rare occurrence, Mell-E completely lost its GPS lock, possibly because of cloud cover, which we had not anticipated or seen earlier.

- Diagnostic data, including battery power, signal strength, bin fullness, and path taken, was shown in the app. The update latency was within the required 10 seconds.

- All obstacles were avoided in the test. As expected, some obstacles were nudged once they were beyond the front of the robot, but there was no visible damage to the obstacles set up in the field.

- Mell-E was able to move to 3 out of the 4 litter objects. Pickup succeeded within 5 tries, except for one can that was moved by the wind.

- Overall, we met all the requirements that we had set at the beginning of the project.

The main strengths of our current system are as follows:

- Drive system

  - Currently, our drive system is fairly robust. It is able to climb small hills and go over grass. However, the wheels slip in certain conditions, namely when the ground is wet.

  - The navigation system also includes a joystick control mode. This mode allowed us to easily move Mell-E from the test location to storage and vice versa.

- Arm subsystem

  - The arm subsystem is able to pick up the litter within 5 tries.

  - The arm also carries out a coverage pattern within its workspace to account for uncertainties in the positions reported by the CV subsystem.

- Obstacle avoidance

  - Our obstacle avoidance system handles stationary obstacles very well, since the objects move into view gradually.

  - The obstacle avoidance system is also able to react to nearby obstacles within its 180-degree field of view.

- Object Detection

  - Object detection in normal conditions (temperature of around 20 degrees Celsius and moderate sun) works very well.

  - The object tracker, in combination with YOLO, provided smoothed tracking for visual servoing.

  - The YOLO neural network was able to run in real time on a small, low-power GPU.

- Feedback to the app (App and server subsystem)

  - The feedback system is robust. It is able to maintain a latency of around 1 s, provided that the signal strength is stable.

  - The app also provides an intuitive user interface that allows the user to easily control Mell-E.

The main weaknesses of our system are as follows:

- Navigation accuracy

  - We have been solely reliant on GPS. We have come to realize that GPS is not a reliable system, given that the modules we were using have an accuracy of only about 10 feet.

  - There was no odometry feedback, which prevented us from having a more sophisticated feedback control system and model.

- Object detection robustness in different conditions

  - In sunny weather with temperatures above 20 degrees Celsius, our laptop overheats, which causes lag in the object detection and tracking pipeline.

  - Classification accuracy drops when the sun is directly overhead, due to excessive glare and the camera's low dynamic range.

- Arm subsystem

  - Although the arm was able to meet our requirements, it was still a little slow to execute commands. The arm is also not capable of handling multiple waypoint requests cleanly.

- Obstacle avoidance

  - As mentioned in the test results above, there was one occurrence during the test where the base nudged one of the obstacles slightly. Although this did no damage to the obstacle, we wanted to improve on it by adding one or more planar lidars to give a full 360-degree view of the area rather than the narrow 180-degree view Mell-E currently has.

As of the Fall Validation Experiment, we have achieved the following capabilities in terms of performance (based on the goals set for Fall):

Navigation Subsystem and App and Server Subsystem

Success criteria to meet:

- Show that Mell-E reaches all the waypoints based on the markers placed on the grass.

- If any deviation occurs, Mell-E should not move farther than 10 feet from the marker.

- While moving, waypoints are marked as covered by showing the path taken on the app.

- While moving, the diagnostic data is sent and displayed on the Android app.
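
One way to check the 10-foot deviation criterion from logged GPS fixes is to compute the great-circle distance between a fix and the marker position. The sketch below uses the haversine formula. The function names, the mean Earth radius constant, and the coordinate format (latitude, longitude in decimal degrees) are assumptions made for illustration and are not taken from the Mell-E code base.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (assumed spherical Earth)
FEET_PER_METER = 3.28084


def distance_feet(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in feet between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a)) * FEET_PER_METER


def within_tolerance(fix, marker, tolerance_ft: float = 10.0) -> bool:
    """True if a (lat, lon) fix lies no farther than tolerance_ft from the marker."""
    return distance_feet(fix[0], fix[1], marker[0], marker[1]) <= tolerance_ft
```

Because the receiver's own error is comparable to the tolerance, a check like this is only as trustworthy as the logged fixes; an independent measurement on the ground remains the reference.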

Test performance:

- Able to reach all waypoints to within 10 feet.

- Continuously moved from one waypoint to another with little or no noticeable jerky motion.

- The path taken was shown on the app along with other diagnostic data such as battery level, signal strength and bin fullness.

Obstacle Avoidance Subsystem

Success criteria to meet:

- While moving, Mell-E detects an obstacle in its path of motion at least 75% of the time.

- Mell-E moves around the obstacle in the direction of least resistance 75% of the time.

- Visually inspect whether Mell-E successfully passed the obstacle without damaging itself or the obstacle.
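
The report does not spell out how the direction of least resistance is chosen. As a rough illustration only, the sketch below splits a 180-degree planar scan into sectors, picks the sector whose closest return is farthest away, and signals a stop when no sector is clear. The function name, the sector count, the stop distance, and the beam ordering (right to left) are all assumptions, not Mell-E's actual algorithm.

```python
from typing import Optional, Sequence


def least_resistance_heading(
    ranges_m: Sequence[float],
    num_sectors: int = 9,
    stop_distance_m: float = 0.5,
    fov_deg: float = 180.0,
) -> Optional[float]:
    """Return a heading in degrees (0 = straight ahead, negative = right,
    positive = left), or None if every sector is blocked.

    ranges_m is assumed to be ordered from the rightmost beam to the leftmost.
    """
    n = len(ranges_m)
    if n < num_sectors:
        raise ValueError("need at least one beam per sector")
    best_heading = None
    best_clearance = stop_distance_m  # a sector must beat this to count as free
    for s in range(num_sectors):
        lo = s * n // num_sectors
        hi = (s + 1) * n // num_sectors
        clearance = min(ranges_m[lo:hi])  # closest return in this sector
        if clearance > best_clearance:
            best_clearance = clearance
            # Centre of the sector, mapped from [0, 1] onto [-fov/2, +fov/2].
            best_heading = (s + 0.5) / num_sectors * fov_deg - fov_deg / 2
    return best_heading
```

Returning `None` corresponds to the case where the robot is blocked on all sides and should stop rather than steer.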

Test performance:

- Avoided the obstacles every time.

- Stopped when blocked from all sides.

- Picked and moved in the direction of least resistance every time.

- Did not damage itself or injure the person acting as an obstacle.

Arm Subsystem

Success criteria to meet:

- The arm will move the end effector towards the input location, to a position within 3 centimeters of it in either direction.

- If the location is not reachable, the software should report this. The work plane will be about ¼ of an annulus with a major radius of about 509 mm and a minor radius of about 60 mm.
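
The reachability check for a quarter-annulus work plane reduces to a radius test plus a quadrant test. The sketch below assumes the annulus is centred on the arm base and occupies the quadrant where both coordinates are non-negative; that placement, the function name, and the coordinate frame are assumptions, while the two radii are the approximate values stated above.

```python
import math

OUTER_RADIUS_MM = 509.0  # major radius from the success criteria
INNER_RADIUS_MM = 60.0   # minor radius from the success criteria


def is_reachable(x_mm: float, y_mm: float) -> bool:
    """True if (x_mm, y_mm) lies inside the assumed quarter-annulus work plane."""
    if x_mm < 0 or y_mm < 0:
        # Outside the quadrant the arm is assumed to cover.
        return False
    r = math.hypot(x_mm, y_mm)
    return INNER_RADIUS_MM <= r <= OUTER_RADIUS_MM
```

A caller would run this test before planning a path and report the target as unreachable when it returns `False`.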

Test performance:

- Able to reach the specified point on the grid to within 3 cm.

Litter Detection and Identification Subsystem

Success criteria to meet:

- Show the camera-feed on a laptop.

- Show the objects of interest being picked up in the camera frame. The system detects at least ¾ of the objects of interest placed in its path.

- Show the angle (degrees) to turn to reach the litter. Mount a protractor on the camera and measure the angle by extending a rope from the camera’s centerline to the can. The angle tolerance will be 20 degrees.
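
The angle reported by the vision subsystem can be compared against the protractor reading with a simple tolerance check. How Mell-E computes the angle is not described here, so the sketch below is one plausible approach: it assumes an ideal pinhole camera with the principal point at the image centre and derives the angle from the pixel column of the detection. The function names, image width, and field of view are hypothetical.

```python
import math


def bearing_from_pixel(u_px: float, image_width_px: int, horizontal_fov_deg: float) -> float:
    """Angle in degrees from the camera centerline to image column u_px.

    Positive angles are to the right of the centerline.
    """
    cx = image_width_px / 2.0
    # Focal length in pixels, derived from the horizontal field of view.
    fx = cx / math.tan(math.radians(horizontal_fov_deg) / 2.0)
    return math.degrees(math.atan2(u_px - cx, fx))


def within_angle_tolerance(estimated_deg: float, measured_deg: float,
                           tolerance_deg: float = 20.0) -> bool:
    """True if the estimate agrees with the protractor reading within the tolerance."""
    return abs(estimated_deg - measured_deg) <= tolerance_deg
```

For example, with a 640-pixel-wide image and a 60-degree field of view, a detection at the right edge of the frame maps to roughly 30 degrees.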

Test performance:

- Able to detect all cans in consistent lighting.

- The angle accuracy was within 10 degrees.

Strengths and Weaknesses

The main strengths of our current system are as follows:

- Drive system

  - Currently, our drive system is robust. It can climb small hills and go over grass. However, the wheels slip in certain conditions, namely when the ground is wet.

  - Since our requirement is to run in fair weather conditions with no precipitation on the ground, we can meet this requirement.

  - We are planning to improve traction by either making the drive wheels bigger or switching to higher-traction tires.

- Arm subsystem

  - Our arm subsystem is able to plan paths efficiently and execute them to within 3 cm.

  - We think that this will be good for our litter pickup accuracy later, given our requirement that the arm should be able to pick up the litter in 5 tries.

- Obstacle avoidance

  - Our algorithm for obstacle avoidance is currently robust. It can detect different obstacles and avoid them. Mell-E can stop if it is blocked from all directions.

  - The algorithm works robustly in most lighting conditions, but in direct sunlight it faces some issues due to specularity. This can be solved by shading the Hokuyo to block some of the sunlight. To make it more robust, we may add some filtering to ignore certain points.

- Feedback to the app (App and server subsystem)

  - This is one of our major strengths. This subsystem is close to completion. We are able to get the feedback data from Mell-E at a rate of 1 Hz.

  - The only features left to implement next semester are uploading litter images for classifier training and remote start/stop for Mell-E.

The main weaknesses of our system are as follows:

- Navigation accuracy

  - For us to be able to have good coverage of the area, we need relatively precise localization.

  - Currently, by using the phone’s GPS and IMU, we are accurate to within 10 feet. We are planning to improve this in the Spring by adding another sensor (possibly visual odometry).

- Object detection robustness in different lighting

  - Currently, our computer vision subsystem is able to detect litter outdoors in constant lighting conditions.

  - The detection accuracy and rate decrease when there are irregularities in lighting, such as shadows and bright sunlight. The effect is most pronounced when cans are partially in sunlight and partially in shadow.

  - We will improve this next semester by performing detection and classification with neural networks.

- System integration

  - This semester we were not able to get the system integrated as well as we had planned. Currently, the obstacle avoidance and navigation subsystems are still separate, and they need to be integrated in the future.

  - Most of our integration work will be concentrated in the Spring semester.