Navigation Subsystem

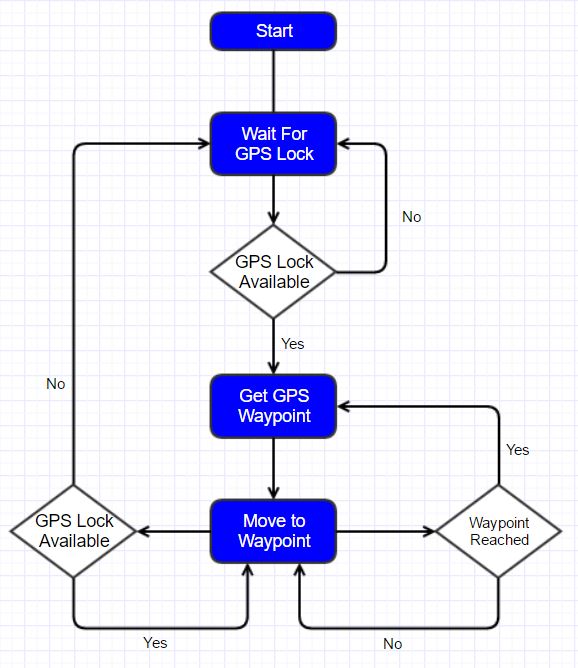

The navigation subsystem is currently based on GPS. It is required to let Mell-E localize within a boundary provided by the user and remain inside that boundary. If Mell-E does deviate, it should not go more than 10 feet outside the boundary. The current software flow for the navigation subsystem is shown in the accompanying flowchart:

The navigation subsystem software flowchart

After our GPS and IMU modules failed to meet our requirements, and given the time crunch as we approached the Fall Validation Experiment (FVE), we looked at other sources of sensor data. The only promising option that we could validate within the allotted time was a mobile phone. The phone’s GPS sensor performed better because its antenna is more tightly integrated with the chip, which allows better GPS signal reception. The phone’s location services rely not only on GPS signals but also on cell tower data, phone signal, and other sources. The phone’s sensors are also better shielded from interference. Using the phone, we got a good refresh rate and better heading accuracy. These sensors allowed Mell-E to get within 10 feet of a waypoint autonomously.

In the spring semester, we wanted to integrate all the subsystems before improving them. Another change from the fall semester was switching our data source from an Android phone to an integrated GPS and compass module. With this module, we achieved navigation performance close to our FVE performance. We have now integrated the navigation, obstacle avoidance, object detection, visual servo, and arm subsystems. The motor controller is also more streamlined, with its own APIs.

Under normal use conditions, the user provides Mell-E with a boundary. Mell-E then generates waypoints that follow a lawn mower pattern within that boundary. Once the waypoints are generated, a controller drives the robot to each waypoint in turn. Mell-E determines its current location and the location of the next waypoint, and calculates the distance and angle errors between them. Based on the magnitudes of these errors, we generate a motor speed, which is communicated to the motor controller via the Arduino Mega microcontroller.

We implemented a PI controller rather than a full PID controller, mainly because of the limited sensor modalities on the base. All our navigation and motion planning relied solely on readings from the GPS module, and visual servoing relied solely on the camera module. Since neither gave us a reliable measurement of the base’s speed, we dropped the D term. We were also unable to use more sophisticated SLAM-based methods because the environment we worked in lacks features: the planar lidar did not produce any features in such a sparse environment, and the camera was unreliable because different patches of grass look similar to one another.
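The waypoint loop described above can be sketched roughly as follows. This is an illustration only, not the code that runs on Mell-E: the rectangular boundary, the haversine distance and bearing formulas, the differential-drive mixing of the two controller outputs, the integral clamp, and every function name and gain are assumptions made for the example.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres


def lawnmower_waypoints(south, north, west, east, passes):
    """Back-and-forth sweep over a rectangle (assumed boundary shape)."""
    if passes < 2:
        raise ValueError("need at least two passes")
    waypoints = []
    for i in range(passes):
        lat = south + (north - south) * i / (passes - 1)
        ends = [(lat, west), (lat, east)]
        if i % 2 == 1:  # reverse every other pass so the path zigzags
            ends.reverse()
        waypoints.extend(ends)
    return waypoints


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (m) and initial bearing (deg clockwise from north)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    y = math.sin(dlam) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlam)
    return distance, math.degrees(math.atan2(y, x)) % 360.0


def heading_error(bearing_deg, heading_deg):
    """Signed angle from current heading to target bearing, in [-180, 180)."""
    return (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0


class PIController:
    """Proportional-integral controller; no D term, since no speed estimate exists."""

    def __init__(self, kp, ki, integral_limit):
        self.kp, self.ki, self.integral_limit = kp, ki, integral_limit
        self.integral = 0.0

    def update(self, error, dt):
        self.integral += error * dt
        # Clamp the accumulated error so it cannot wind up without bound.
        self.integral = max(-self.integral_limit, min(self.integral_limit, self.integral))
        return self.kp * error + self.ki * self.integral


def motor_speeds(distance_m, heading_err_deg, linear_pi, angular_pi, dt, max_speed=1.0):
    """Mix forward and turn commands into (left, right) speeds (assumed drive layout)."""
    forward = linear_pi.update(distance_m, dt)
    turn = angular_pi.update(heading_err_deg, dt)

    def clamp(value):
        return max(-max_speed, min(max_speed, value))

    # A positive heading error means the waypoint is clockwise of the heading,
    # so the left side speeds up and the right side slows down.
    return clamp(forward + turn), clamp(forward - turn)
```

In a loop, each GPS and compass reading would be passed through `distance_and_bearing` and `heading_error` for the current waypoint, and the resulting pair from `motor_speeds` would be sent on to the motor controller. The waypoint would advance once the distance falls below a chosen tolerance.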

The navigation subsystem also handles the state transitions for the base, keeping track of four states. The default state is the joystick state, which encompasses the “wait” state described above and also enables manual control via the joystick. For the joystick we used an Xbox controller, which lets us set the maximum speed, move the base, enable and disable debug messages, and force state switches. The next state is the navigation state, in which Mell-E carries out its lawn mower coverage pattern. When it detects a piece of litter, it switches to the litter pickup state and swaps the PI controller for one tuned for visual servoing. For visual servoing, Mell-E takes the current and desired locations of the object in the camera frame, calculates the error between them, and applies PI control to reach the final location. Finally, Mell-E keeps track of the arm state. While the arm is in operation, the navigation subsystem ignores all other messages and waits for the arm to signal that it has finished its task before starting to move again.
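The four states can be pictured as a small transition table. The sketch below is a hypothetical illustration rather than the project’s code: the event names are invented for the example, and two transitions are assumptions that the text does not state, namely that litter pickup hands over to the arm once the object is in reach, and that the base returns to navigation when the arm finishes.

```python
from enum import Enum, auto


class State(Enum):
    JOYSTICK = auto()       # default: waiting / manual control
    NAVIGATION = auto()     # lawn mower coverage pattern
    LITTER_PICKUP = auto()  # visual servoing toward detected litter
    ARM = auto()            # arm is operating; base stays put


class Event(Enum):  # hypothetical event names
    FORCE_JOYSTICK = auto()
    FORCE_NAVIGATION = auto()
    LITTER_DETECTED = auto()
    LITTER_IN_REACH = auto()
    ARM_DONE = auto()


TRANSITIONS = {
    (State.JOYSTICK, Event.FORCE_NAVIGATION): State.NAVIGATION,
    (State.NAVIGATION, Event.FORCE_JOYSTICK): State.JOYSTICK,
    (State.NAVIGATION, Event.LITTER_DETECTED): State.LITTER_PICKUP,
    (State.LITTER_PICKUP, Event.FORCE_JOYSTICK): State.JOYSTICK,
    (State.LITTER_PICKUP, Event.LITTER_IN_REACH): State.ARM,  # assumed hand-over
    (State.ARM, Event.ARM_DONE): State.NAVIGATION,            # assumed return state
}


def next_state(state, event):
    """Return the new state; events with no table entry are ignored."""
    return TRANSITIONS.get((state, event), state)
```

Because the arm state has a single outgoing entry, every other message received while the arm is operating leaves the state unchanged, which mirrors the behavior described above.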

For more details, please visit the repository at https://github.com/LitterBot2017.