Oct. 18th Progress Review 1

Camera Calibration

To obtain the precise position and orientation of the AprilTag, the camera must be calibrated to get its intrinsic matrix. The intrinsic matrix describes how 3D points in the camera frame are projected to 2D points in the image.
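As a minimal sketch of what the intrinsic matrix does, assuming the standard pinhole model with no lens distortion: K = [[fx, s, cx], [0, fy, cy], [0, 0, 1]]. A camera-frame point (x, y, z) is multiplied by K, and the result is divided by its third component to give the pixel (u, v). The numbers below are hypothetical, not the calibration of our camera.

```python
def project_point(K, point_cam):
    """Project a 3D point in the camera frame to pixel coordinates (u, v)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    # Homogeneous image coordinates: [u*w, v*w, w] = K @ [x, y, z]
    uw = K[0][0] * x + K[0][1] * y + K[0][2] * z
    vw = K[1][0] * x + K[1][1] * y + K[1][2] * z
    w = K[2][0] * x + K[2][1] * y + K[2][2] * z
    return uw / w, vw / w


# Hypothetical intrinsics: fx = fy = 600 px, principal point (320, 240), no skew.
K = [[600.0, 0.0, 320.0],
     [0.0, 600.0, 240.0],
     [0.0, 0.0, 1.0]]

u, v = project_point(K, (0.1, -0.05, 1.0))
```

Here u = 600 * 0.1 + 320 = 380 and v = 600 * (-0.05) + 240 = 210: a point 1 m in front of the camera, 10 cm to the right and 5 cm up, lands right of and above the principal point.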

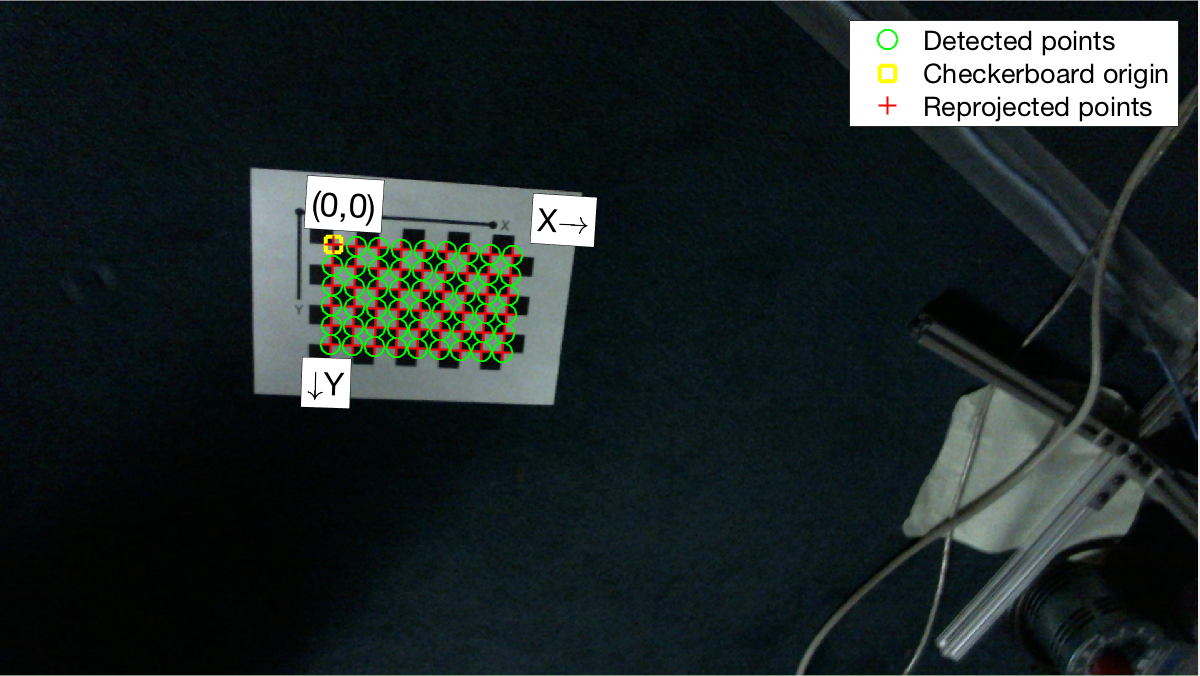

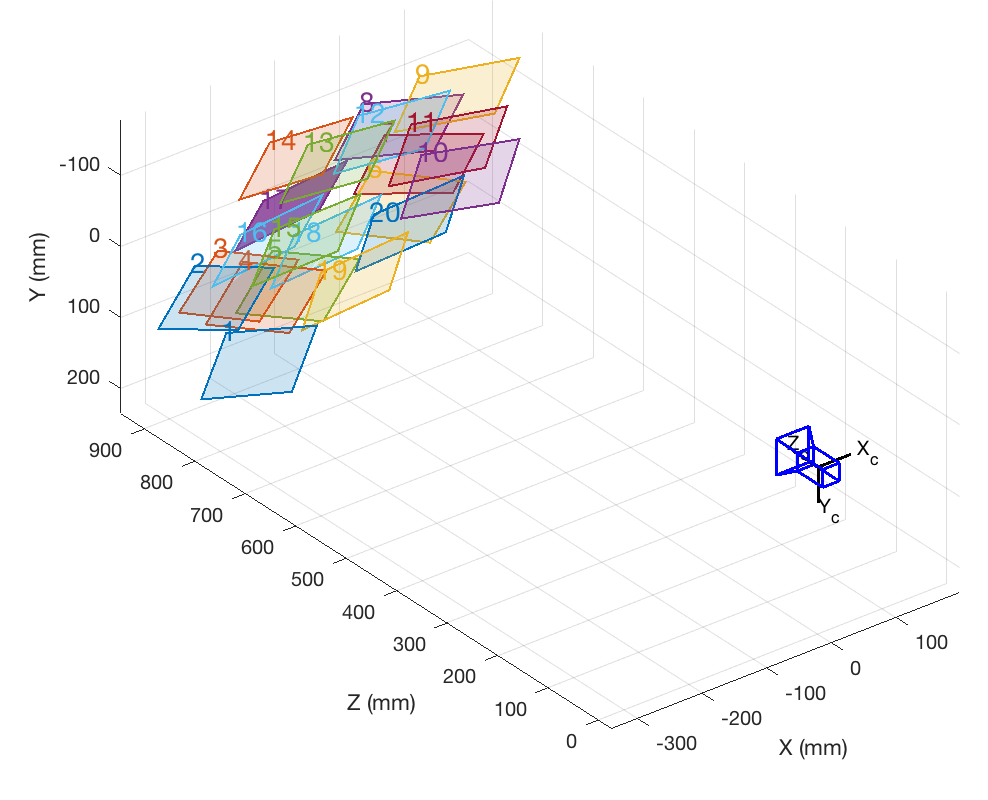

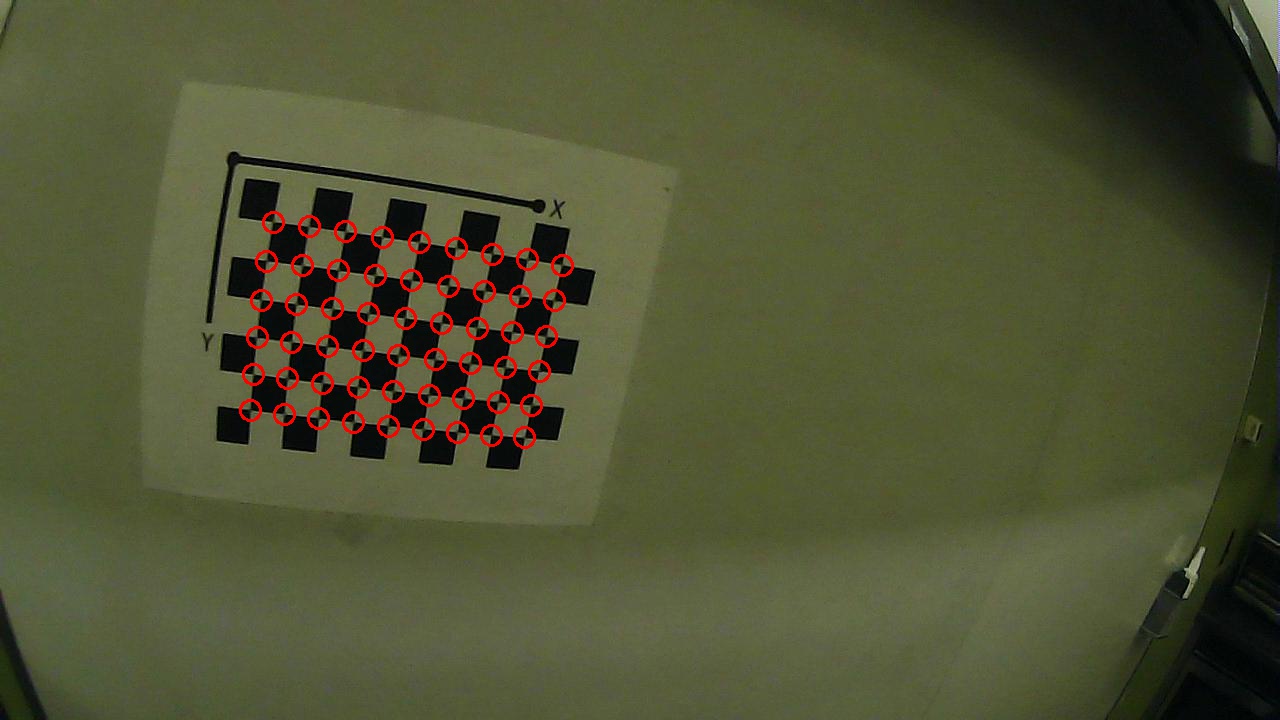

Here, I used the MATLAB Camera Calibrator toolbox to do the camera calibration automatically. First, I selected 30 images in which the target camera clearly captures the checkerboard. Then, I fed these images to the calibrator. The toolbox detects each intersection point of the checkerboard, estimates the camera parameters and the 3D position of each point, and reprojects the points back into the image with the intrinsic matrix. Based on the mean reprojection error (in pixels) of each image, I removed the outlier images and recalibrated to get a more accurate result.
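MATLAB's calibrator computes and plots these errors itself; the sketch below only illustrates the outlier-removal idea in Python. The file names, error values, and the 0.5 px threshold are hypothetical.

```python
import math


def mean_reprojection_error(detected, reprojected):
    """Mean Euclidean distance in pixels between detected and reprojected corners."""
    if not detected or len(detected) != len(reprojected):
        raise ValueError("need two non-empty corner lists of equal length")
    total = sum(math.dist(d, r) for d, r in zip(detected, reprojected))
    return total / len(detected)


def select_inliers(per_image_errors, threshold_px):
    """Keep the images whose mean reprojection error is at most threshold_px."""
    return [name for name, err in per_image_errors.items() if err <= threshold_px]


# Hypothetical per-image errors (pixels); the threshold is picked by inspecting the error plot.
errors = {"img01.png": 0.21, "img02.png": 0.95, "img03.png": 0.33}
kept = select_inliers(errors, threshold_px=0.5)
print(kept)  # ['img01.png', 'img03.png']
```

The calibration is then rerun on the kept images only.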

Chessboard with detected intersection points.

The camera orientation for each image.

Calculation of Bounding Box

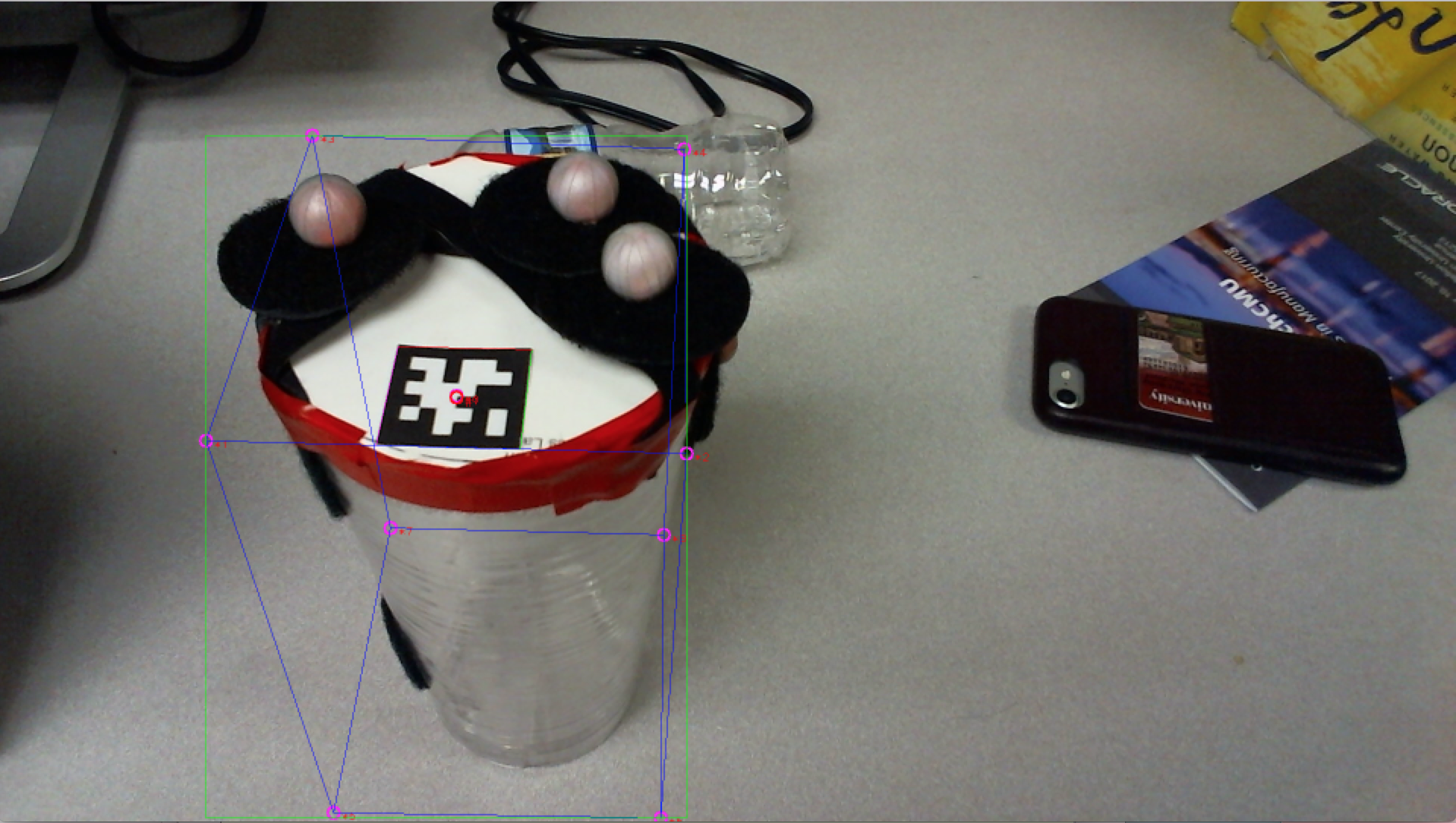

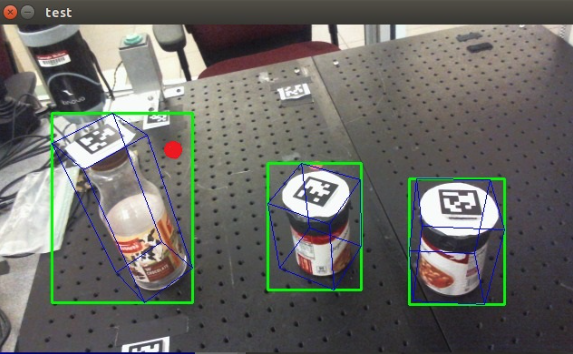

In this part, the goal is to get the 2D bounding box of each object, which is later used to calculate the probability of gaze intention. This can be divided into the following steps: first, hardcode the object model; second, get the position and orientation of the AprilTag; third, transform the model into the camera coordinate frame; fourth, project the 3D points of the model into 2D points in the image; fifth, compute the 2D bounding box from this set of 2D points.
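The five steps can be sketched end to end as follows. This is a minimal illustration, not the project's code: it assumes the tag pose is already given as a rotation matrix R and translation t (tag frame to camera frame), that each cuboid is stored as hypothetical min/max corners in the tag frame, and that lens distortion is ignored.

```python
from itertools import product


def cuboid_vertices(lo, hi):
    """Step 1: the eight corners of an axis-aligned cuboid in the tag frame."""
    return list(product((lo[0], hi[0]), (lo[1], hi[1]), (lo[2], hi[2])))


def transform(R, t, p):
    """Step 3: map a tag-frame point into the camera frame, p_cam = R @ p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(K, p):
    """Step 4: pinhole projection with an upper-triangular K (last row 0, 0, 1)."""
    x, y, z = p
    return (K[0][0] * x + K[0][1] * y + K[0][2] * z) / z, (K[1][1] * y + K[1][2] * z) / z


def bounding_box_2d(points):
    """Step 5: (left, top, right, bottom) of a set of pixel points."""
    us = [u for u, _ in points]
    vs = [v for _, v in points]
    return min(us), min(vs), max(us), max(vs)


def object_bbox(K, R, t, lo, hi):
    """Steps 1, 3, 4, 5 chained; step 2 (R, t) comes from the AprilTag detector."""
    corners_cam = [transform(R, t, v) for v in cuboid_vertices(lo, hi)]
    return bounding_box_2d([project(K, p) for p in corners_cam])


# Hypothetical example: a 10 cm cube sitting on a tag 1 m straight ahead of the camera.
K = [[600.0, 0.0, 320.0], [0.0, 600.0, 240.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
box = object_bbox(K, R, (0.0, 0.0, 1.0), (-0.05, -0.05, 0.0), (0.05, 0.05, 0.1))
```

The resulting box is about (290, 210, 350, 270): the near face at z = 1 m sets the extent, because the far face at z = 1.1 m projects slightly smaller. In practice the box may also need clipping to the image bounds.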

For the first step, to keep things simple, each model is hardcoded as the minimal cuboid enclosing the object rather than its real shape. For the 6D pose of the object, I adopted the AprilTag library (MIT version). This library outputs the x, y, z position and the roll, pitch, yaw orientation of the tag in the camera coordinate frame, from which the transformation between the camera coordinate frame and the AprilTag coordinate frame is calculated. For the last two steps, I used the intrinsic matrix to project the 3D points from the camera coordinate frame into the 2D image.

In the figure, the pink points labeled 0 to 8 represent the center of the AprilTag and the eight vertices of the model. Label 0 is hard to see because it almost overlaps with the AprilTag's own detection label, which indicates that the camera calibration is accurate. The other eight points outline the 3D bounding box of the object, shown as blue lines. From these eight points, I calculated the top, bottom, left, and right edges of the model in the image, shown as the green rectangle. This green box is the one fed into the gaze probability calculation.
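A minimal sketch of turning the library's x, y, z and roll, pitch, yaw into a homogeneous transform and its inverse. It assumes the Z-Y-X convention R = Rz(yaw) Ry(pitch) Rx(roll) with angles in radians; the convention the MIT library actually uses should be checked against its source before relying on this.

```python
import math


def rpy_to_matrix(roll, pitch, yaw):
    """R = Rz(yaw) @ Ry(pitch) @ Rx(roll) (assumed Z-Y-X convention, radians)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def make_transform(R, t):
    """4x4 homogeneous transform [R t; 0 1] as nested lists."""
    return [R[0] + [t[0]], R[1] + [t[1]], R[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def invert_transform(T):
    """Inverse of a rigid transform: [R^T, -R^T t; 0 1]."""
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [T[i][3] for i in range(3)]
    t_inv = [-sum(Rt[i][j] * t[j] for j in range(3)) for i in range(3)]
    return make_transform(Rt, t_inv)


def apply(T, p):
    """Apply a 4x4 rigid transform to a 3D point."""
    return tuple(sum(T[i][j] * p[j] for j in range(3)) + T[i][3] for i in range(3))


# Hypothetical tag pose reported by the detector (tag frame -> camera frame).
T_cam_tag = make_transform(rpy_to_matrix(0.1, 0.2, 0.3), (0.05, -0.02, 0.8))
T_tag_cam = invert_transform(T_cam_tag)
```

A model vertex v in the tag frame goes to the camera frame with apply(T_cam_tag, v), and T_tag_cam maps camera-frame points back into the tag frame.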

Bounding Box

Oct. 27th Progress Review 2

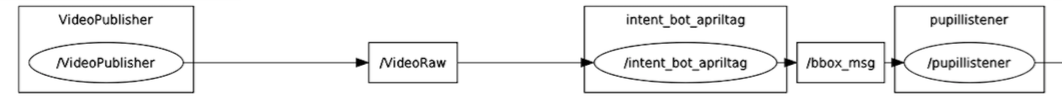

This week I mainly worked on the ROS integration of the gaze node and the AprilTag node. The integration should be straightforward: the AprilTag node publishes messages containing bounding box information, and the gaze node subscribes to them. I built the AprilTag node in ROS with a bounding box publisher. In addition, an extra node was built to read the images from the Pupil Labs socket, downsample them, and publish them to the AprilTag node. The rosgraph shows the structure of these nodes.
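The report does not say how the extra node downsamples the frames. If it uses plain stride subsampling (an assumption), the intrinsic matrix used by the AprilTag node for pose estimation must be rescaled to the smaller resolution; otherwise the estimated pose will be wrong. A minimal sketch, with images as nested lists and a hypothetical factor of 2:

```python
def downsample(image, factor):
    """Keep every `factor`-th pixel in each direction (stride subsampling)."""
    return [row[::factor] for row in image[::factor]]


def scale_intrinsics(K, factor):
    """Intrinsics for an image subsampled by keeping pixels at multiples of `factor`.

    A full-resolution pixel u maps to u / factor, so fx, skew, cx, fy, cy all divide by factor.
    """
    s = 1.0 / factor
    return [[K[0][0] * s, K[0][1] * s, K[0][2] * s],
            [0.0, K[1][1] * s, K[1][2] * s],
            [0.0, 0.0, 1.0]]


K = [[600.0, 0.0, 320.0], [0.0, 600.0, 240.0], [0.0, 0.0, 1.0]]
K_half = scale_intrinsics(K, 2)
small = downsample([[r * 4 + c for c in range(4)] for r in range(4)], 2)
```

If the node instead resizes with averaging (for example an area filter), the principal point shifts slightly: under the usual pixel-center convention, cx' = (cx + 0.5) / factor - 0.5, and likewise for cy.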

The rosgraph structure.

Nov. 17th Progress Review 3

Testing New Glasses

The new glasses have two pupil cameras, which means gaze detection will be much more accurate. However, the outward-facing camera is a fisheye camera with obvious distortion. Although AprilTag detection was not affected, a new intrinsic matrix and distortion coefficients were needed.
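The report does not say which fisheye model was fitted. As an illustration only, this sketch uses the equidistant (Kannala-Brandt) model of OpenCV's fisheye module, where the angle theta from the optical axis is bent to theta_d = theta (1 + k1 theta^2 + k2 theta^4 + k3 theta^6 + k4 theta^8). The intrinsics and zero coefficients are hypothetical; note that MATLAB's fisheye calibration uses a different (Scaramuzza) model.

```python
import math


def fisheye_project(point_cam, fx, fy, cx, cy, k):
    """Project a camera-frame point with the OpenCV-style fisheye (equidistant) model.

    k = (k1, k2, k3, k4) are the fisheye distortion coefficients; skew is assumed zero,
    and the point must be in front of the camera (z > 0).
    """
    x, y, z = point_cam
    a, b = x / z, y / z                 # pinhole (normalized) coordinates
    r = math.hypot(a, b)
    theta = math.atan(r)                # angle from the optical axis
    t2 = theta * theta
    theta_d = theta * (1 + k[0] * t2 + k[1] * t2**2 + k[2] * t2**3 + k[3] * t2**4)
    scale = theta_d / r if r > 1e-12 else 1.0   # theta_d / r -> 1 on the axis
    return fx * a * scale + cx, fy * b * scale + cy


# Hypothetical intrinsics; with k = 0 the model is pure equidistant projection.
u, v = fisheye_project((1.0, 0.0, 1.0), 400.0, 400.0, 320.0, 240.0, (0.0, 0.0, 0.0, 0.0))
```

A point 45 degrees off-axis lands at u = 400 * (pi/4) + 320, about 634, instead of 720 for a pinhole camera; this compression toward the center is the visible fisheye distortion.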

To obtain them, I first recorded a video of the chessboard with the fisheye camera and selected 20 clear frames for the camera calibration. The raw image, the image with the detected intersection corners, and the undistorted image are shown below.

The raw image.

The image with the detected intersection corners.

The undistorted image.
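Undistortion inverts the fisheye mapping. A minimal per-pixel sketch, again assuming the OpenCV-style equidistant model with hypothetical parameters: it recovers theta from theta_d with Newton's method and returns normalized pinhole coordinates. For a whole image, OpenCV instead builds an inverse lookup map (cv2.fisheye.initUndistortRectifyMap) and resamples it with cv2.remap.

```python
import math


def distort_theta(theta, k):
    """Fisheye polynomial: theta_d = theta * (1 + k1*theta^2 + ... + k4*theta^8)."""
    t2 = theta * theta
    return theta * (1 + k[0] * t2 + k[1] * t2**2 + k[2] * t2**3 + k[3] * t2**4)


def undistort_theta(theta_d, k, iters=20):
    """Invert distort_theta with Newton's method, starting from theta = theta_d."""
    theta = theta_d
    for _ in range(iters):
        t2 = theta * theta
        f = distort_theta(theta, k) - theta_d
        df = 1 + 3 * k[0] * t2 + 5 * k[1] * t2**2 + 7 * k[2] * t2**3 + 9 * k[3] * t2**4
        step = f / df
        theta -= step
        if abs(step) < 1e-12:
            break
    return theta


def undistort_pixel(u, v, fx, fy, cx, cy, k):
    """Map a distorted fisheye pixel to normalized pinhole coordinates (x/z, y/z).

    Only valid for rays less than 90 degrees from the optical axis.
    """
    xd, yd = (u - cx) / fx, (v - cy) / fy
    theta_d = math.hypot(xd, yd)
    if theta_d < 1e-12:
        return xd, yd
    theta = undistort_theta(theta_d, k)
    scale = math.tan(theta) / theta_d
    return xd * scale, yd * scale


# Hypothetical coefficients; a pinhole pixel is then fx * a + cx, fy * b + cy.
k = (0.05, -0.01, 0.0, 0.0)
a, b = undistort_pixel(400.0 * distort_theta(0.6, k) + 320.0, 240.0, 400.0, 400.0, 320.0, 240.0, k)
```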

Subsystem Robustness

Initially, for convenience, my code relied on consecutive AprilTag IDs to match each tag with the hardcoded model of its object, and the camera and model parameters were written directly in the code. I moved all of them into a config file to make the code easier to maintain.
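The report does not show the config format. A minimal sketch, assuming a JSON file (a ROS YAML parameter file would serve equally well) with a hypothetical schema: camera intrinsics plus an explicit map from AprilTag ID to each object's cuboid. Keying the models by tag ID removes the dependence on consecutive IDs. All names, IDs, and dimensions below are made up.

```python
import json

# Hypothetical config contents; in practice this would be read from a file.
CONFIG_TEXT = """
{
  "camera": {"fx": 600.0, "fy": 600.0, "cx": 320.0, "cy": 240.0},
  "objects": {
    "7":  {"name": "cup",  "min": [-0.04, -0.04, 0.0], "max": [0.04, 0.04, 0.10]},
    "12": {"name": "book", "min": [-0.10, -0.15, 0.0], "max": [0.10, 0.15, 0.03]}
  }
}
"""


def load_config(text):
    """Return the intrinsic matrix and a {tag_id: model} lookup table."""
    cfg = json.loads(text)
    cam = cfg["camera"]
    K = [[cam["fx"], 0.0, cam["cx"]], [0.0, cam["fy"], cam["cy"]], [0.0, 0.0, 1.0]]
    # JSON object keys are strings; convert them to int tag IDs for lookup.
    models = {int(tag_id): spec for tag_id, spec in cfg["objects"].items()}
    return K, models


K, models = load_config(CONFIG_TEXT)
model = models.get(7)      # the cup's cuboid; tags without a model give None
```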

Visualization

Initially, the AprilTag detections and bounding boxes were drawn in the AprilTag node. Now, the 2D and 3D bounding boxes are published as messages to the Main node, which draws them together with the gaze detection in a single image.
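If the 3D bounding box message carries only the eight projected corners (an assumption about the message contents), the Main node can rebuild the twelve wireframe edges at drawing time. A minimal sketch using a hypothetical corner ordering in which bit 0, 1, 2 of the corner index selects the min or max x, y, z; this need not match the 1 to 8 labels in the figure.

```python
def cuboid_corners(lo, hi):
    """Corner i uses hi[axis] when bit `axis` of i is set, else lo[axis]."""
    return [
        tuple(hi[axis] if (i >> axis) & 1 else lo[axis] for axis in range(3))
        for i in range(8)
    ]


def cuboid_edges():
    """Index pairs of the 12 edges: corners that differ in exactly one coordinate."""
    return [(i, j) for i in range(8) for j in range(i + 1, 8) if bin(i ^ j).count("1") == 1]


def edge_segments(projected):
    """Turn the 8 projected 2D corners into the 12 line segments to draw."""
    return [(projected[i], projected[j]) for i, j in cuboid_edges()]


corners = cuboid_corners((0.0, 0.0, 0.0), (1.0, 2.0, 3.0))
edges = cuboid_edges()
```

Each segment can then be drawn with any line primitive, for example OpenCV's cv2.line with integer pixel coordinates.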

The visualization.