October-27-2017

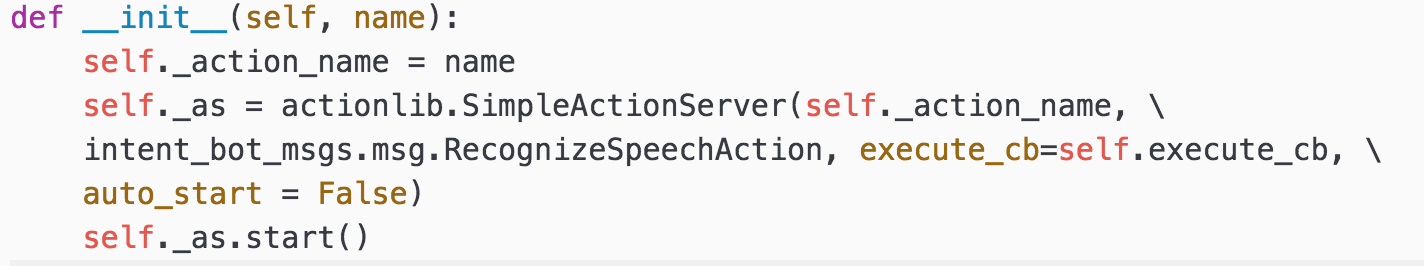

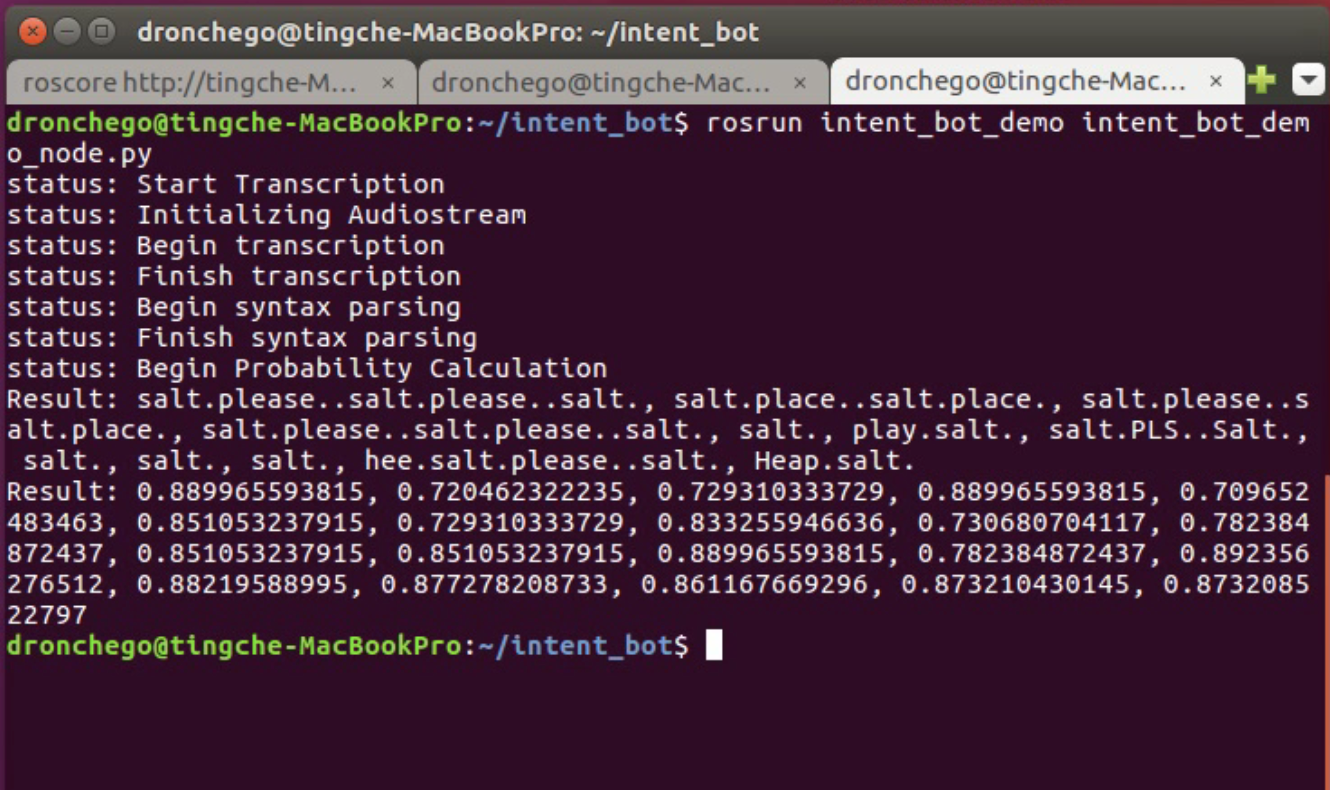

This week I integrated my speech probability distribution node into ROS. Because the Google API takes a long time to transcribe speech to text, I used the action library so that transcription does not block the main loop. I created a simple action server using actionlib and Python. The callback function of this server runs my speech transcription and probability distribution calculation sequence. At each step of the probability calculation, feedback is sent to the action client to notify it of the current progress. The first figure shows the initialization of the SimpleActionServer. To test the server, I wrote a simple action client that calls it. The action client sends a goal to the server and listens for feedback. Once the goal is completed, the result is printed out. The second figure shows the printed result of the SimpleActionClient.
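The goal, feedback, and result flow described above can be sketched without ROS. This is a minimal illustration that uses Python's standard `threading` and `queue` modules as a stand-in for actionlib; it is not the Intentbot code. The function names, the stage names, and the uniform distribution returned as the result are all hypothetical placeholders.

```python
import queue
import threading
import time


def transcribe_and_score(goal, feedback_q, result_q):
    """Stand-in for the server callback: slow work that reports progress."""
    steps = ["transcribe", "tokenize", "score"]  # hypothetical stage names
    for i, step in enumerate(steps, start=1):
        time.sleep(0.01)  # placeholder for the slow speech-to-text call
        feedback_q.put((step, i / len(steps)))  # progress feedback
    # Hypothetical result: a uniform distribution over the candidate objects.
    objects = goal["objects"]
    result_q.put({name: 1.0 / len(objects) for name in objects})


def send_goal(goal):
    """Start the work in a background thread, similar to sending a goal."""
    feedback_q, result_q = queue.Queue(), queue.Queue()
    worker = threading.Thread(
        target=transcribe_and_score,
        args=(goal, feedback_q, result_q),
        daemon=True,
    )
    worker.start()
    return worker, feedback_q, result_q


def run_client(goal):
    """Collect feedback while the work runs, then return the final result."""
    worker, feedback_q, result_q = send_goal(goal)
    feedback_log = []
    # The caller is never blocked for long: it polls with a short timeout
    # and could do other work between polls.
    while worker.is_alive() or not feedback_q.empty():
        try:
            feedback_log.append(feedback_q.get(timeout=0.05))
        except queue.Empty:
            pass
    worker.join()
    return feedback_log, result_q.get()


if __name__ == "__main__":
    log, result = run_client({"objects": ["cup", "book"]})
    print(log)
    print(result)
```

In actionlib the same roles are played by the action server's execute callback, its feedback messages, and the client's result handling; the thread and queues here only imitate that separation.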

November-10-2017

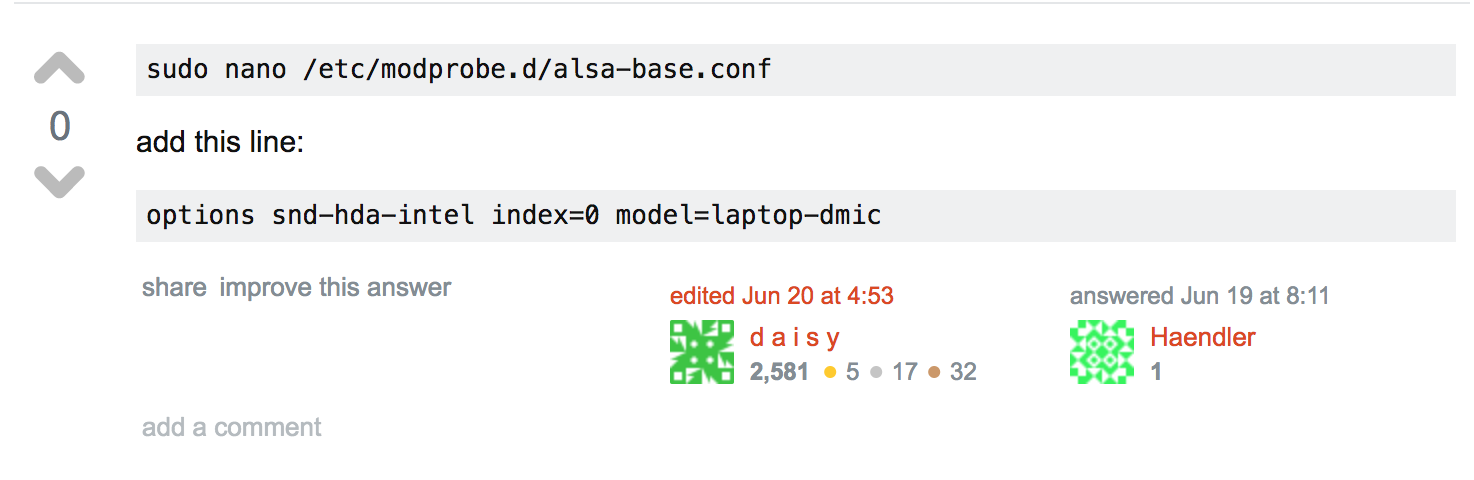

This week we tried to migrate the Intentbot ROS software onto the laptop that HARP-Lab provided for us. However, halfway through the integration, I realized that the microphone input on the HARP laptop was non-functional. Several solutions posted online involve updating or upgrading the sound card driver and the Ubuntu operating system. However, one of the requirements given by our sponsor was not to update or upgrade any software on the laptop, to avoid breaking any crucial dependencies. The problem was finally resolved when I added an option line to the base-alsa.config file.

November-17-2017

I worked with Ben to integrate the graphical user interface (GUI). He used the Tkinter library to create a GUI that displays the waveform of the speech, the object predicted by the speech recognizer, and controls for starting and stopping the trial. I also helped with debugging and wrote the functions that link the GUI to the rest of the Intentbot system.