1. System summary

With a surge in e-commerce logistics, more efficient package delivery mechanisms are required to deliver more packages in less time at a smaller cost. A hybrid-vehicle autonomous delivery system that uses both Autonomous Ground Vehicles and Unmanned Aerial Vehicles can save time without raising the cost of delivery. The major problem with delivering packages using UAVs is robustly navigating to the correct house and dropping the package at an accessible drop zone (like the front door), without harming humans or damaging property.

This project aims to solve the problem of efficiently navigating to a house and robustly detecting and landing at the drop zone, while avoiding obstacles. The UAV shall be able to take off and land at any visually marked platform, enabling it to be used in conjunction with ground vehicles for hybrid delivery systems.

This page contains the following items:

- Problem Description

- Use Case

- System Requirements

- Functional Architecture

- Cyberphysical Architecture

- System Performance

Currently, package delivery truck drivers hand-carry packages door to door. This model is used by Federal Express (FedEx), United Parcel Service (UPS), United States Postal Service (USPS), and Deutsche Post DHL Group (DHL). We believe that drones have the potential to expedite this system.

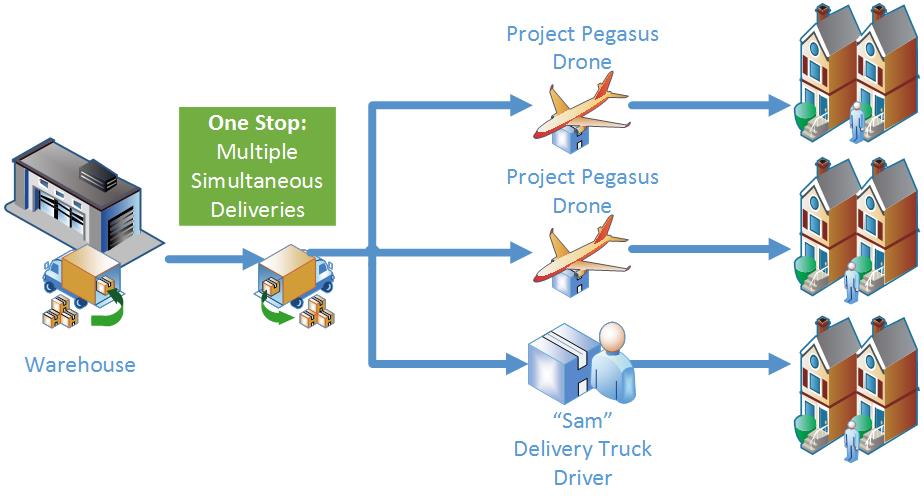

Amazon is developing Prime Air with the same intent. However, we believe the most efficient system combines delivery trucks with Unmanned Aerial Vehicles (UAVs), which saves time and expense and improves customer satisfaction.

Project Pegasus aims to deliver packages to a house using UAVs.

Given the coordinates of the house, a UAV with a package takes off from point A, autonomously reaches close to the house, scans the outside of the house for a visually marked drop point, lands, drops off the package, then takes off again to land on another platform at point B.

Sam drives a package delivery truck for one of the largest parcel delivery companies. He arrives each morning to a pre-loaded truck and is handed his route for the day. Even though he has an assigned route, he is sometimes tasked with delivering packages to additional streets. These are often the packages that should have been delivered the day before. Thus it’s critical that packages make it to the right house on time today.

Now that his company uses drones, Sam can cover more area in less time. He drives out to his first neighborhood for the day with two packages to deliver. He can quickly deliver the first package, which is heavier. The second package is lighter but a street over. After parking, he quickly attaches the second package to a drone and selects the address on the base station computer. The drone takes off and disappears over a rooftop as Sam unloads the first package.

Having delivered the first package, Sam gets back in the truck and starts driving. In the past, he would have driven to the next house and dropped off the package. Nowadays, Sam knows that the drone will deliver the package to the right house and catch up. This saves him a few minutes which adds up over the course of the day to real time savings. This makes Sam a little happy.

Meanwhile, the drone has moved into the vicinity of the second house. It begins scanning for the visual marker outside the house. The drone finds the marker and moves in for a landing. It’s able to avoid people on the sidewalk and the large tree outside the house. The drone lands on the marker and does a quick confirmation by checking the RFID code embedded in the marker. Having confirmed that the correct house has been found, the drone releases the package and notifies the package delivery truck’s base station. The base station then updates the drone on the delivery truck’s position.

The drone catches up to Sam at a red light and they continue on their way. Sam’s day continues this way.

On a major street, he has several packages to deliver in the area. He quickly loads up a few drones, selects the addresses, and watches the drones do all the work. Sam had to get a gym membership since he’s no longer walking as much, but he’s happy to be getting through neighborhoods substantially faster. Because the drones allow one driver to do more, the delivery company is able to offer package delivery at a more competitive rate with more margin. This makes customers happy in addition to getting their packages faster. In turn, they are more likely to use the delivery company, which makes the company pleased with their investment.

Late in the day, the base station on Sam’s delivery truck notifies him that an adjacent route wasn’t able to deliver a package. In the past, this would have meant that the package would be driven back to the warehouse to be resorted and delivered with tomorrow’s load. This was a substantial waste of fuel and manpower. Today, routes can be dynamically updated. A drone will deliver the package to Sam’s truck and once he’s in the correct area, the drone will deliver the package. The customer will never know there was a problem, and the delivery company saves money.

Sam arrives back at the warehouse, his truck empty. He’s satisfied with the work he’s accomplished, customers are happy that they received their packages on time, and the delivery company is exceptionally happy with the improved efficiency and customer retention.

The critical requirements for this project are listed below under Mandatory Requirements. These are the ‘needs’ of the project. Additionally, the team identified several value-added requirements during brainstorming. These ‘wants’ are listed below under Desired Requirements.

Mandatory

Functional Requirements

M.F.1 Hold and carry packages.

M.F.2 Autonomously take off from a visually marked platform.

M.F.3 Navigate to a known position close to the house.

M.F.4 Detect and navigate to the drop point at the house.

M.F.5 Land at visually marked drop point.

M.F.6 Drop package within 2m of the target drop point.

M.F.7 Take off, fly back to and land at another visually marked platform.

Non-Functional Requirements

M.N.1 Operates in an outdoor environment.

M.N.2 Operates in a semi-known map. The GPS position of the house is known, but the exact location of the visual marker is unknown and is detected on the fly.

M.N.3 Avoids static obstacles.

M.N.4 Sub-systems should be well documented and scalable.

M.N.5 UAV should be small enough to operate in residential environments.

M.N.6 Package should weigh at most 100g and fit in a cuboid of dimensions 9.5″ x 6.5″ x 2.2″.

Desired

Functional Requirements

D.F.1 Pick up packages.

D.F.2 Simulation with multiple UAVs and ground vehicles.

D.F.3 Ground vehicle drives autonomously.

D.F.4 UAV and ground vehicle communicate continuously.

D.F.5 UAV confirms the identity of the house before dropping the package (RFID Tags).

D.F.6 Takes coordinates as input from the user.

D.F.7 Communicates with platform to receive GPS updates (intermittently).

Non-Functional Requirements

D.N.1 Operates in rain and snow.

D.N.2 Avoids dynamic obstacles.

D.N.3 Operates without a GPS system.

D.N.4 Has multiple UAVs to demonstrate efficiency and scalability.

D.N.5 Compatible with higher weights of packages and greater variations in sizes.

D.N.6 Obstacles with a cross section of 0.5m x 0.5m are detected and actively avoided.

D.N.7 A landing column with 2m radius exists around the visual marker.

D.N.8 Not reliant on GPS. Uses GPS to navigate close to the house. Does not rely on GPS to detect the visual marker at the drop point.

Performance Requirements

P.1 UAV places the package within 2m of the target drop point.

P.2 UAV flies for at least 10 mins without replacing batteries.

P.3 UAV carries packages weighing at least 400g.

P.4 UAV carries packages that fit in a cube of 30cm x 30cm x 20cm.

P.5 One visual marker exists per house.

P.6 Visual markers between houses are at least 10m apart.

P.7 A landing column with 3m radius exists around the visual marker.

P.8 Obstacles with a minimum cross section of 1.5m x 0.5m are detected and actively avoided.

Subsystem Requirements

S.1 Vision

S.1.1 The size of the marker must be within a square of side 1.5m.

S.1.2 The error in the estimated X, Y, Z position of the marker relative to the camera should be within 10% of the distance to the marker.

S.2 Obstacle Detection and Avoidance

S.2.1 Obstacles must be detected with a range of 50 cm to 150 cm from the UAV.

S.2.2 Obstacles should be detected in at least 90% of the situations/positions.

S.2.3 Distance to the obstacle should be correct with a maximum error of 20cm.

S.2.4 Natural obstacles around a residential neighborhood should be detected.

S.3 Flight control

S.3.1 UAV must reach the GPS waypoint with a maximum error of 3m.

S.3.2 UAV should be able to fly 10 minutes without replacing the batteries.
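Several of the numeric subsystem requirements above can be written as simple pass/fail checks. The sketch below is illustrative only and is not the project's test code: the function names, the use of the haversine formula for GPS distance, and the reading of S.1.2 as "Euclidean error at most 10% of the true range" are all assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def waypoint_reached(current, target, max_error_m=3.0):
    """S.3.1: the UAV is at the waypoint if it is within max_error_m of it."""
    return haversine_m(*current, *target) <= max_error_m


def marker_estimate_ok(estimated_xyz, true_xyz, tolerance=0.10):
    """S.1.2 (assumed reading): position error is at most 10% of the true range."""
    error = math.dist(estimated_xyz, true_xyz)
    distance = math.hypot(*true_xyz)
    return error <= tolerance * distance


def obstacle_range_ok(measured_m, true_m, max_error_m=0.20):
    """S.2.1 and S.2.3: obstacle is 0.5 m to 1.5 m away and the reading is within 20 cm."""
    return 0.5 <= true_m <= 1.5 and abs(measured_m - true_m) <= max_error_m
```

For example, a marker 10 m straight ahead that is estimated at 9.5 m passes the S.1.2 check (0.5 m error against a 1 m allowance), while an estimate of 8.5 m fails it.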

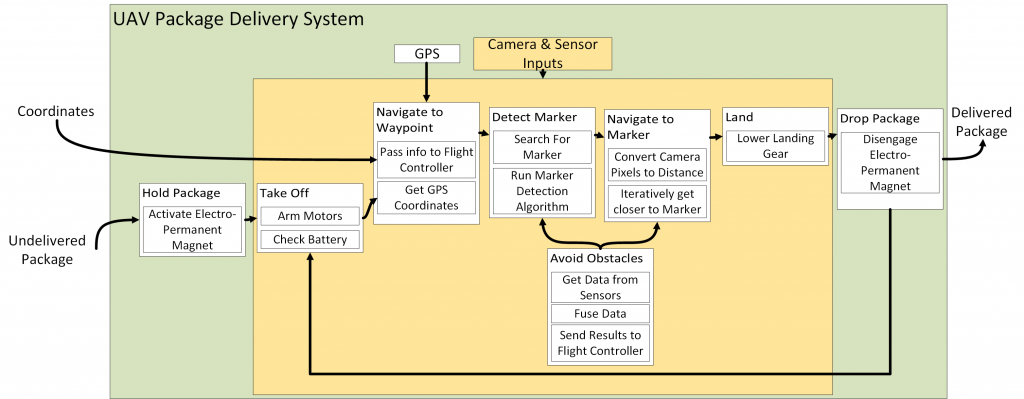

Viewing the whole system as a black-box, there are 2 inputs – the package to be delivered and GPS coordinates of customer location. The output of the system is the package successfully delivered at the destination.

Looking inside the black box now, the UAV initially holds the package by activating an electro-permanent magnet. Coordinates of the customer location are input to the User Interface. The developed Plan Mission software decides the navigation waypoints and plans a path to the destination. This information is then relayed to the UAV by the communication interface. The mission planning software continuously receives the current coordinates from the UAV and sends updated coordinates back to the UAV.

Meanwhile, the UAV checks the battery status. If there is sufficient battery, the UAV arms the motors and takes off. The UAV navigates by waypoint to the vicinity of the destination using GPS input. It then switches to the marker detection code. The UAV takes input from the camera and starts to scan the vicinity of the customer destination for the marker put up by the customer. It moves in a predetermined trajectory for scanning. Once the marker is detected, the vision algorithm maps the size of the marker in the image to the actual distance of the UAV from the marker. The UAV continuously receives this information and moves towards the marker. The UAV finally descends and lands on the marker. It drops the package by disengaging the electro-permanent magnet and flies back to the base station using waypoint navigation.
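The mapping from marker size in the image to distance can be illustrated with the standard pinhole-camera relation Z = f · S / s, where S is the physical side length of the marker, s is its apparent side length in pixels, and f is the focal length in pixels. This is a minimal sketch under that assumption and not necessarily how the project's vision code computes range; the function names and the numbers in the example are hypothetical.

```python
def distance_from_marker_size(marker_side_m, marker_side_px, focal_length_px):
    """Estimate range to the marker (metres) from its apparent size: Z = f * S / s."""
    if marker_side_px <= 0:
        raise ValueError("marker is not visible in the image")
    return focal_length_px * marker_side_m / marker_side_px


def lateral_offset_m(pixel_coord, principal_point_px, distance_m, focal_length_px):
    """Sideways offset of the marker centre from the optical axis along one image axis."""
    return (pixel_coord - principal_point_px) * distance_m / focal_length_px
```

With an assumed focal length of 600 px and a 0.5 m marker, an apparent side of 30 px corresponds to a range of 10 m, and 150 px corresponds to 2 m. The estimate becomes coarser at long range, because a one-pixel change in apparent size then covers a larger change in distance.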

During the ‘Detect Marker’ and ‘Navigate to Marker’ functions, the UAV continuously runs an obstacle avoidance algorithm on-board. The obstacle avoidance algorithm continuously receives data from sensors, fuses the data, and asks the flight controller to alter the trajectory if there is an obstacle in the UAV’s path.
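The mission flow described above can be sketched as a small state machine. The state names, the observation keys, and the fallback from marker navigation back to scanning when the marker is lost are assumptions made for illustration; they are not taken from the project's behavior program.

```python
from enum import Enum, auto


class State(Enum):
    CHECK_BATTERY = auto()
    TAKE_OFF = auto()
    NAVIGATE_TO_HOUSE = auto()
    DETECT_MARKER = auto()
    NAVIGATE_TO_MARKER = auto()
    LAND = auto()
    DROP_PACKAGE = auto()
    RETURN_TO_BASE = auto()
    DONE = auto()
    ABORT = auto()


def next_state(state, obs):
    """Return the next mission state given a dict of boolean observations."""
    if state is State.CHECK_BATTERY:
        return State.TAKE_OFF if obs.get("battery_ok") else State.ABORT
    if state is State.TAKE_OFF:
        return State.NAVIGATE_TO_HOUSE if obs.get("airborne") else state
    if state is State.NAVIGATE_TO_HOUSE:
        return State.DETECT_MARKER if obs.get("at_waypoint") else state
    if state is State.DETECT_MARKER:
        return State.NAVIGATE_TO_MARKER if obs.get("marker_seen") else state
    if state is State.NAVIGATE_TO_MARKER:
        if not obs.get("marker_seen"):
            return State.DETECT_MARKER  # assumed fallback: marker lost, resume scanning
        return State.LAND if obs.get("over_marker") else state
    if state is State.LAND:
        return State.DROP_PACKAGE if obs.get("landed") else state
    if state is State.DROP_PACKAGE:
        return State.RETURN_TO_BASE if obs.get("released") else state
    if state is State.RETURN_TO_BASE:
        return State.DONE if obs.get("at_base") else state
    return state  # DONE and ABORT are terminal


def obstacle_avoidance_active(state):
    """Obstacle avoidance runs while detecting and navigating to the marker."""
    return state in (State.DETECT_MARKER, State.NAVIGATE_TO_MARKER)


def run_mission(observations, start=State.CHECK_BATTERY):
    """Step through a sequence of observations and return the final state."""
    state = start
    for obs in observations:
        state = next_state(state, obs)
    return state
```

Keeping the transitions in one pure function makes the flow easy to test on the ground: a recorded sequence of observations can be replayed through `run_mission` without a vehicle.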

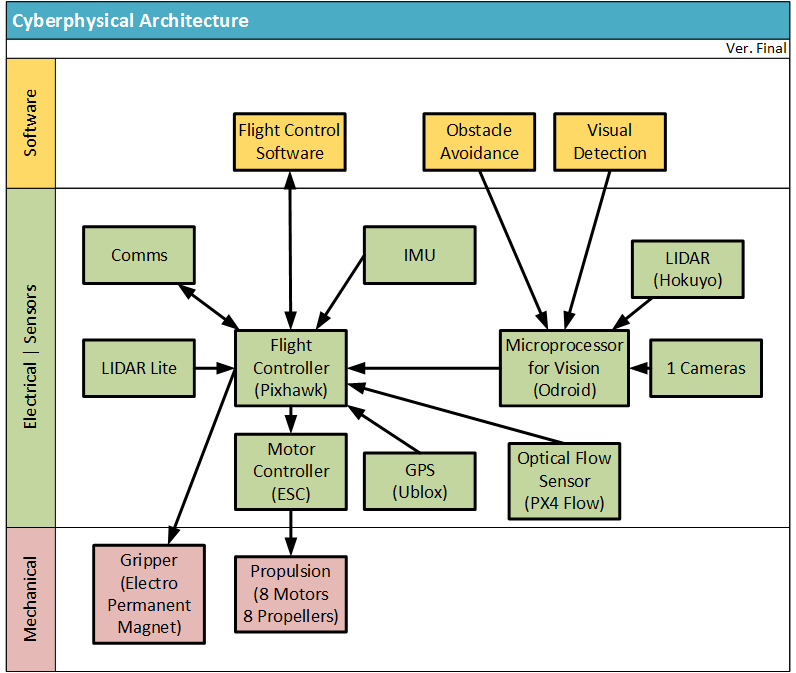

The cyberphysical architecture is best understood from the figure below. At a high level, the system can be broken down into three major categories: mechanical components, electrical components, and software. The electrical components are the bridge between the software and the mechanical actuation.

d. System design description

Mechanical System

We are using a 3DR X-8+ UAV for our project. The mechanical system of our project consists of the propulsion system and the gripper. The propulsion system is part of the 3DR kit that we purchased but must be controlled appropriately by our software. The gripper, made by NicaDrone, is an electro-permanent magnet. This gripper is the interface between the vehicle and the package and must allow the package to be dropped off upon arriving at the destination. It will be controlled by our flight control system, which is the brain of the UAV.

Electrical System

At a high level, the electrical system is composed of the flight control board, the vision subsystem hardware, sensors, and the communications hardware. The flight controller is a Pixhawk, the brain of the entire system, which runs all critical flight control software. The flight controller interacts with two sensors on the vehicle: the IMU and GPS. The flight controller takes commands from the main processing board, which directs it to avoid obstacles and land on visual markers. The output from the flight controller goes to the motor controller and is then converted into appropriate signals to control the propulsion system.

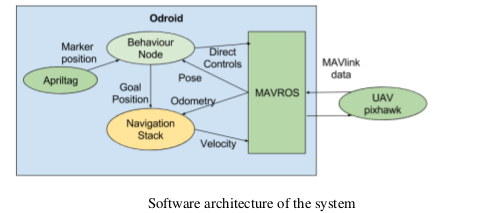

The Odroid, the processor that runs the vision algorithms, connects to the camera and Lidar on board the UAV. It runs the vision and obstacle detection algorithms and outputs the results to the flight controller.

Software

The software of the system comprises 2 main levels. The lower level control is implemented in the flight controller. This runs the control environment to monitor the position and orientation of the UAV to maintain stable flight. It uses the GPS, compass, IMU, and barometer as sensors.

The higher level control runs the application specific program and controls the UAV through the lower level control. It interfaces with the camera and the Lidar. It runs the behavior program in addition to the vision processing algorithms (AprilTag detection), obstacle detection and planning algorithms.

Fall Validation Performance Evaluation Matrix

| Requirement number | Requirement | Subsystem | Performance |
|---|---|---|---|
| MN3 | Detect static obstacles of minimum size 1.5 m x 0.5 m and 2 m x 2 m | Obstacle detection | Successful within error margin of 20cm |
|  | Detect obstacles of minimum size 1.5 m x 0.5 m in natural environment | Obstacle detection | Successful within error margin of 20cm |
| MN4 | Marker should be detected in 20cm to 20m range | Vision | Successful |
|  | Manual flight control | Flight Control | Successful initially; later compass problem |
| MF8 | Take coordinates as input from user | Flight Control | Successful initially; later compass problem |
| MF9 | Communicate with ground platform to receive GPS updates | Flight Control | Successful initially; later compass problem |
| MF3 | Waypoint navigation | Flight Control | Successful initially; later compass problem |
| MN1 | Operate in outdoor environment | Obstacle Detection & Vision; Flight Control | Successful; compass issue |

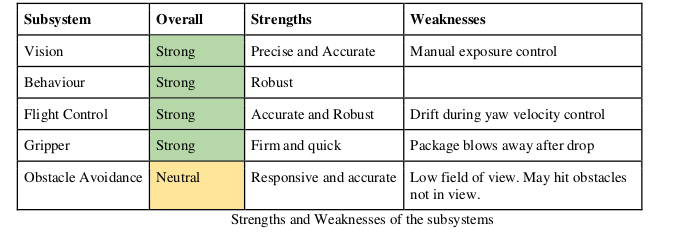

Strengths and weaknesses of our system – Fall 2015

Strength – Vision System

The vision system has been developed to run using a Logitech webcam on an Odroid. We use nested AprilTag markers and AprilTag detection coupled with the Lucas-Kanade tracking algorithm to track the marker location. It achieves an update rate of up to 29 frames per second and can detect the marker at up to 20m. To summarize, our vision system looks strong and ready to be integrated. The algorithm used is fast, robust, and accurate, and based on initial estimates should be able to guide the UAV to land.

Neutral System – Obstacle Detection System

On the obstacle avoidance end, 14 ultrasonic sensors are sufficient to cover an area of 1.5 m radius around the UAV. Using serial pinging, we can get rid of interference between sensors. The update period for the system is around 250 ms, which is good. The sensors are not very precise and give around ±20cm error when obstacles are not exactly perpendicular to the sensor. However, this error is within the limits of our system requirements.
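As a rough plausibility check on these figures, the sketch below works out the time budget if the 14 sensors are fired strictly one at a time within a 250 ms cycle. The one-at-a-time schedule is an assumption about how the sensors are sequenced, the speed of sound is taken as 343 m/s (dry air at about 20 °C), and the function names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # dry air at about 20 degrees C


def round_trip_ms(range_m):
    """Time for an ultrasonic pulse to reach an obstacle at range_m and return."""
    return 2.0 * range_m / SPEED_OF_SOUND_M_S * 1000.0


def slot_ms(cycle_ms, n_sensors):
    """Time available to each sensor when sensors fire one after another."""
    return cycle_ms / n_sensors


def firing_schedule(cycle_ms, n_sensors):
    """Start time (ms) of each sensor's slot within one cycle."""
    slot = slot_ms(cycle_ms, n_sensors)
    return [i * slot for i in range(n_sensors)]
```

Under these assumptions each sensor gets a slot of about 17.9 ms, while an echo from the 1.5 m edge of the coverage area returns in about 8.7 ms, so a full sequential sweep fits within the stated cycle with margin for the echo to die down before the next sensor fires.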

Weakness – UAV/Flight control

We realize that the FireFly6 is the weakest subsystem of our project at this point in time. The UAV is also the most important subsystem of our project and must be made operational as soon as possible. Due to this realization, we are contemplating as part of our risk mitigation to change platforms entirely and go with an octocopter capable of doing everything the FireFly6 does just at slower speeds and with less flight time. Cutting our losses and modifying our project will be the best thing for our project long term and so we believe it is the right move to take at this time.

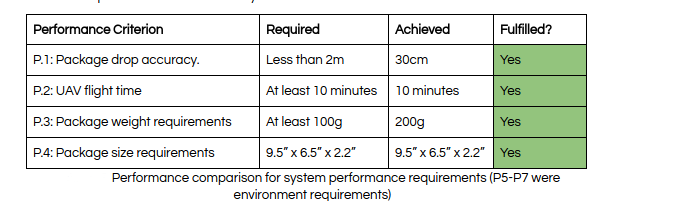

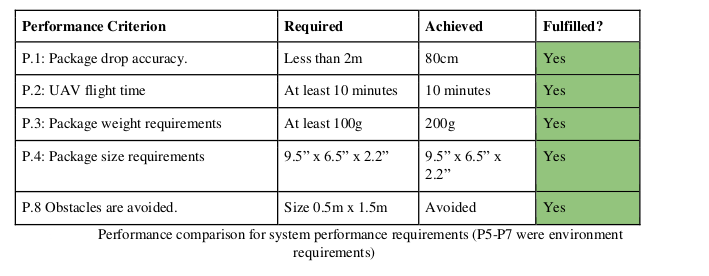

Spring Validation Performance Evaluation Matrix

Package delivery without obstacles

A 9.5” x 6.5” x 2.2” package weighing 200g was delivered 30cm from the center of the marker. The UAV took off from a starting position around 25m away from the house and landed back at the truck position another 20m away from the house.

Package delivery with obstacles

A 9.5” x 6.5” x 2.2” package weighing 200g was delivered 80cm from the center of the marker. The UAV took off from a starting position around 12m away from the house and landed back at the truck position another 20m away from the house.

Strengths and weaknesses of our system – Spring 2016

Overall system performance is strong and robust. Package delivery without obstacles is stable and repeatable. Obstacle avoidance has issues related to field of view.

Detailed strengths and weaknesses of the subsystems are listed in table