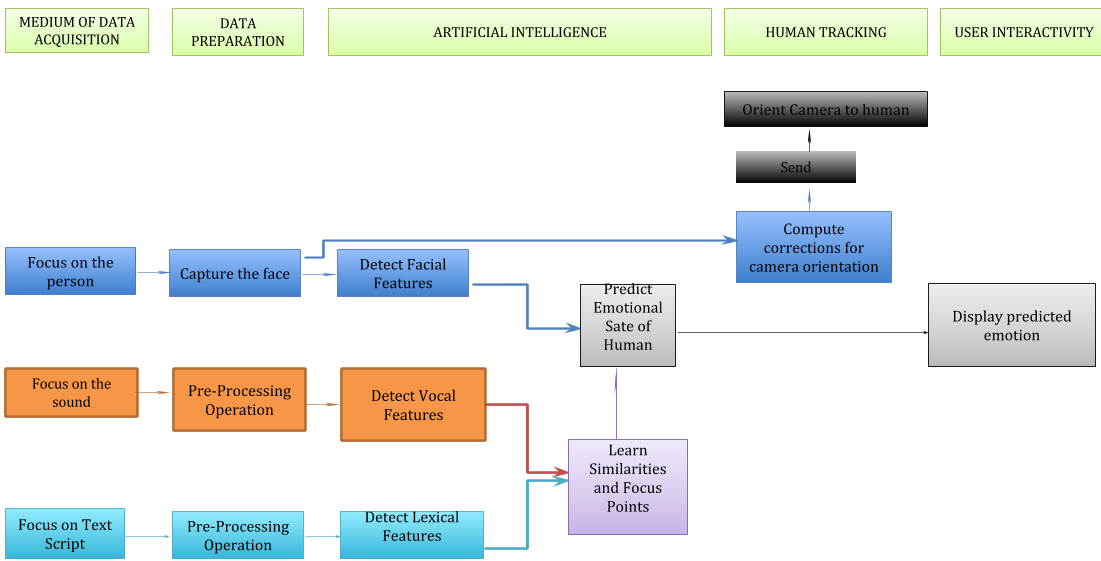

Functional Architecture

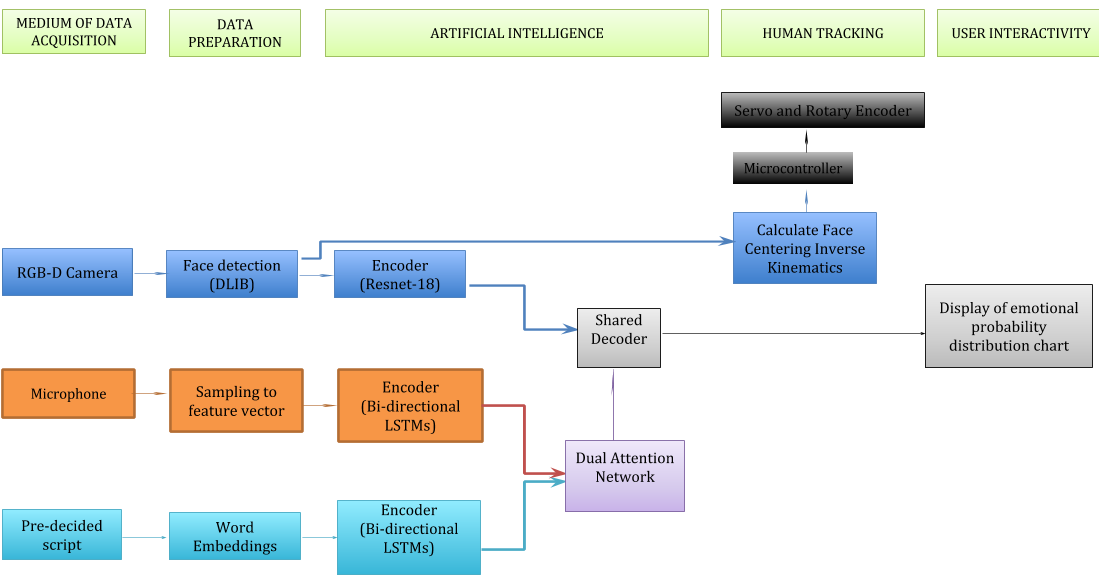

Cyber-Physical Architecture

FUNCTIONAL REQUIREMENTS

- Shall detect emotions from tri-modal data

- Shall output emotion chart

- Shall track user

NON-FUNCTIONAL REQUIREMENTS

- Rests on tabletop

- On/Off switch (Easy to use)

- Under $5000 (Affordable)

- Smaller than a microwave

- Less than 5 kg (Lightweight)

PERFORMANCE REQUIREMENTS

- Will detect emotions from tri-modal data: 60% accuracy

- Will output emotion chart: 1 frame/second

- Will track user: Real-time

SUB-SYSTEM DESIGNS AND DESCRIPTIONS:

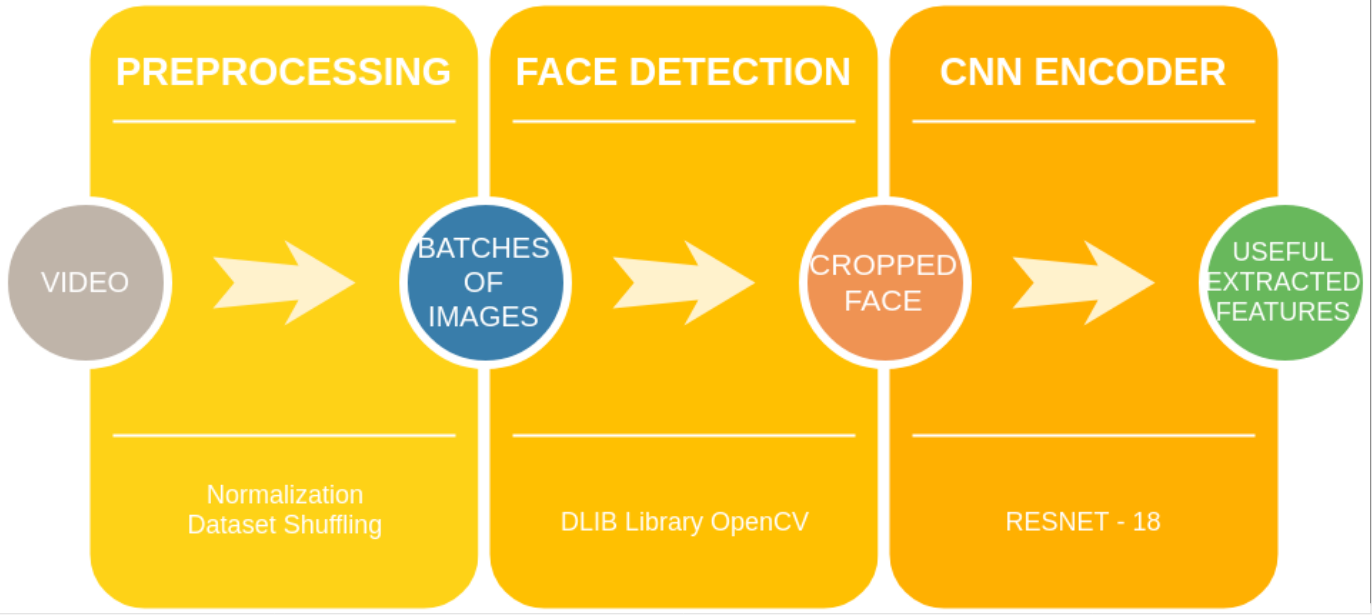

Visual Modality

This modality detects facial features relevant to emotion detection. Batches of images are passed to ResNet-18, which generates a feature vector.

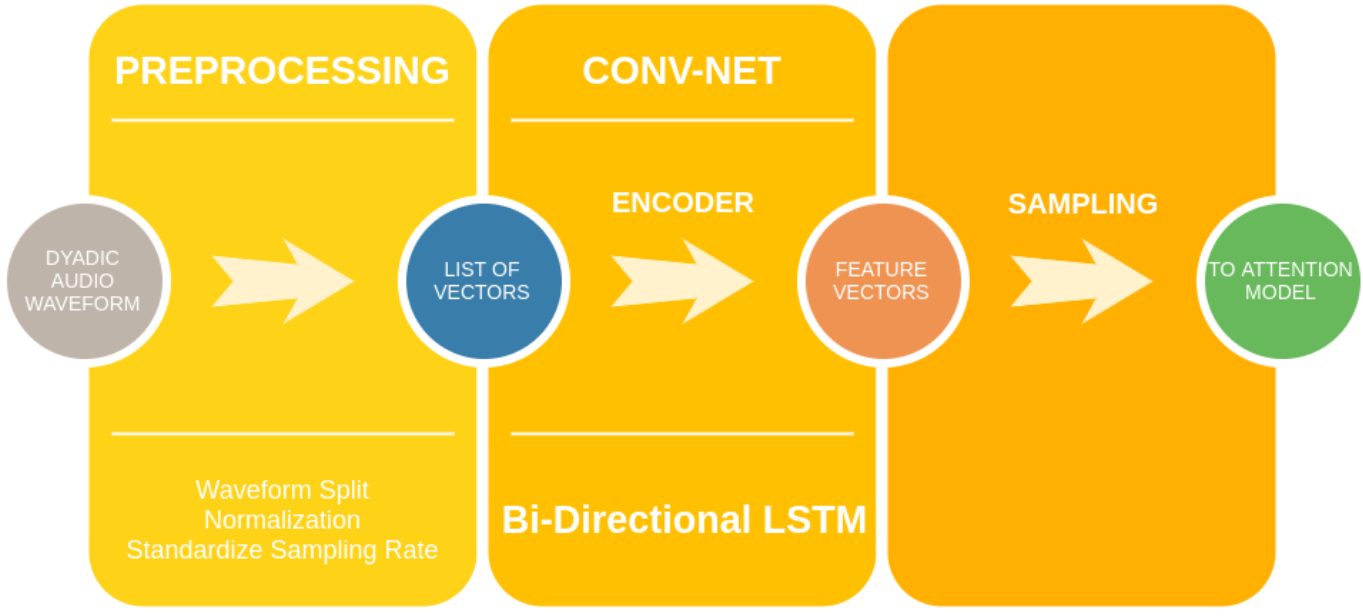

Vocal Modality

The vocal modality uses the intonation of the user's voice for emotion detection. It splits the waveform into segments, normalises it and standardises the sample rate to generate a feature vector.
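The preprocessing steps named above could look roughly like the sketch below. It is only an illustration under stated assumptions: the 16 kHz target rate, the 400-sample frame length, peak normalisation, linear-interpolation resampling and the order of the steps are all hypothetical choices, and every function name is invented for this example.

```python
from typing import List, Sequence


def resample_linear(samples: Sequence[float], src_rate: int, dst_rate: int) -> List[float]:
    """Resample by linear interpolation (a simple stand-in for a proper resampler)."""
    if src_rate == dst_rate or len(samples) < 2:
        return list(samples)
    n_out = max(1, int(round(len(samples) * dst_rate / src_rate)))
    step = src_rate / dst_rate  # distance between output samples, in input samples
    out = []
    for i in range(n_out):
        pos = min(i * step, len(samples) - 1)
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)
        frac = pos - lo
        out.append(samples[lo] * (1 - frac) + samples[hi] * frac)
    return out


def peak_normalise(samples: Sequence[float]) -> List[float]:
    """Scale the waveform so that its largest absolute value is 1.0."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0:
        return list(samples)
    return [s / peak for s in samples]


def split_frames(samples: Sequence[float], frame_len: int) -> List[List[float]]:
    """Cut the waveform into non-overlapping frames, dropping any short tail."""
    return [list(samples[i:i + frame_len])
            for i in range(0, len(samples) - frame_len + 1, frame_len)]


def preprocess(samples: Sequence[float], src_rate: int,
               target_rate: int = 16000, frame_len: int = 400) -> List[List[float]]:
    """Assumed order: standardise the sample rate, normalise, then split."""
    resampled = resample_linear(samples, src_rate, target_rate)
    normalised = peak_normalise(resampled)
    return split_frames(normalised, frame_len)
```

Each returned frame would then be turned into features by whatever model the vocal branch uses; that step is outside this sketch.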

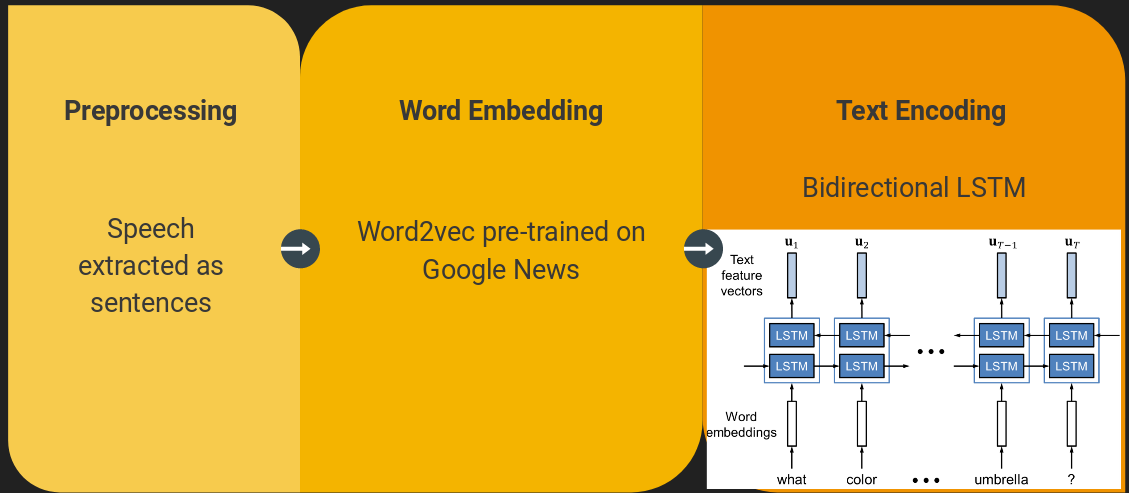

Verbal Modality

The verbal modality processes the text of the user's speech. It splits the speech, generates word vectors and passes them through a bidirectional LSTM to obtain context vectors.

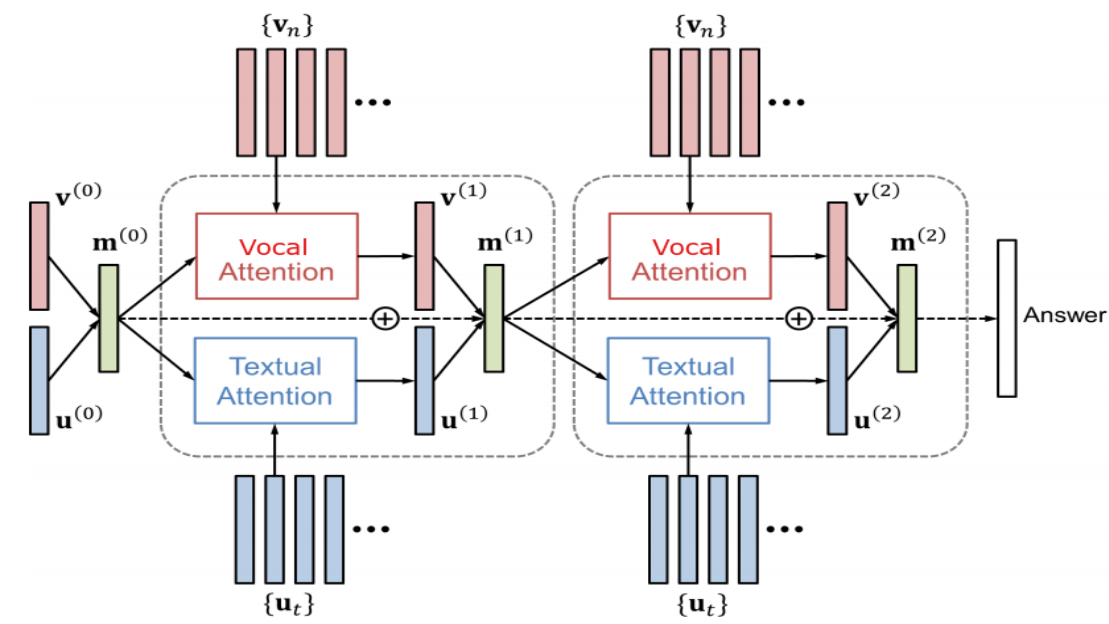

Attention Model

The attention model learns similarities and alignments between the different modes of data, along with their co-occurring features. This helps the network converge faster.
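To make the idea of alignment between modalities concrete, here is a minimal sketch of cross-modal attention. The poster does not say which attention mechanism is used, so scaled dot-product attention is assumed here purely for illustration, and the function names are hypothetical. Features from one modality act as queries, and features from another act as keys and values.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def cross_attention(queries: Sequence[Vector], keys: Sequence[Vector],
                    values: Sequence[Vector]) -> Tuple[List[List[float]], List[List[float]]]:
    """For each query, weight the values by query-key similarity.

    Returns (context vectors, attention weights). Assumes queries and keys
    share a dimension and that keys and values have the same length.
    """
    scale = math.sqrt(len(keys[0]))
    contexts, all_weights = [], []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        context = [sum(w * v[d] for w, v in zip(weights, values))
                   for d in range(len(values[0]))]
        contexts.append(context)
        all_weights.append(weights)
    return contexts, all_weights
```

A high weight between, say, a video frame and an audio segment indicates that the two co-occur and align, which is the kind of relationship the attention model is described as learning.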

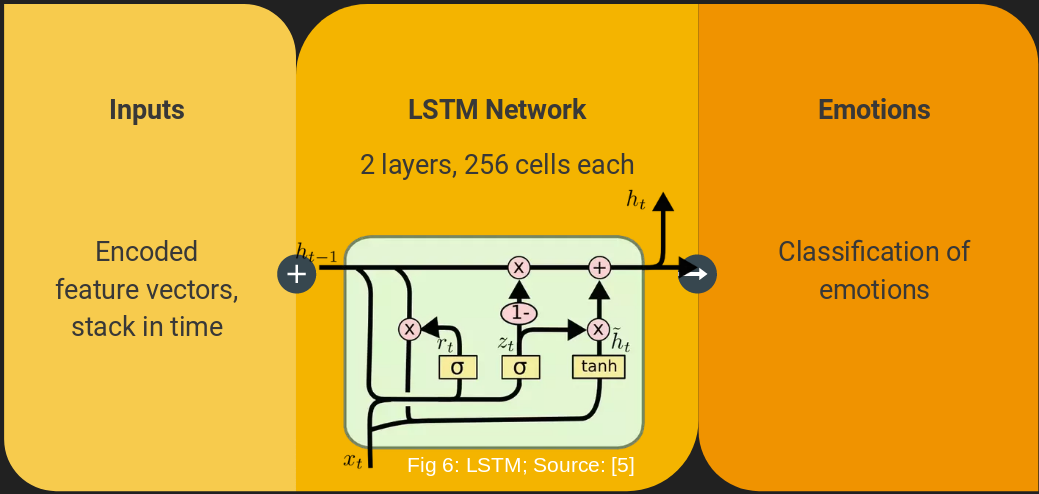

Shared Decoder

The shared decoder predicts emotions. The combined feature vector from the three modalities and the attention model is passed to an LSTM network, which learns to predict valence and activation from these features.

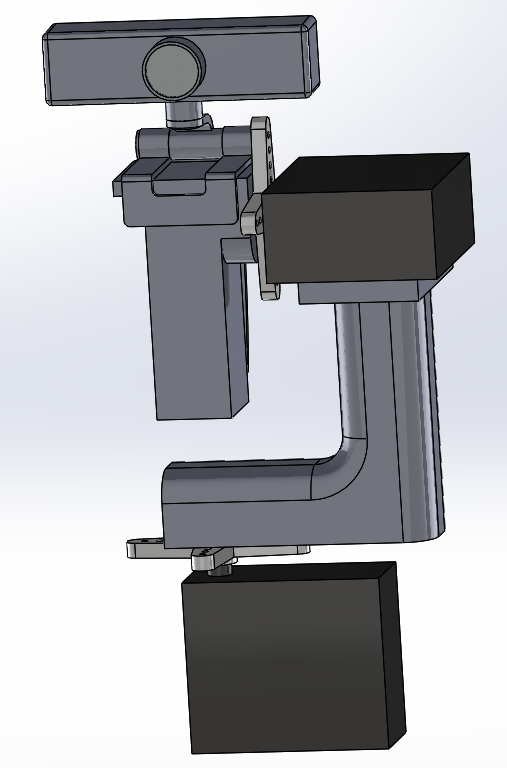

Face Tracking

The video feed comes from a camera on a 2-DOF mount that tracks faces along the vertical and horizontal axes, mimicking the movements of an attentive human. It recognises the user's face and follows it using two RC servos. The accompanying figure shows the 3D design of the setup.
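The tracking behaviour can be sketched in software as a simple proportional controller that nudges the pan and tilt servos so the detected face moves towards the centre of the frame. This is an assumed control scheme, not necessarily the one used in the project: the gain, dead zone, 0 to 180 degree servo range, sign conventions and the `update_pan_tilt` name are all hypothetical.

```python
from typing import Tuple


def clamp(value: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, value))


def update_pan_tilt(pan_deg: float, tilt_deg: float,
                    face_box: Tuple[float, float, float, float],
                    frame_size: Tuple[int, int],
                    gain_deg: float = 10.0,
                    dead_zone: float = 0.05) -> Tuple[float, float]:
    """Return new (pan, tilt) angles that move the face towards the frame centre.

    face_box is (x, y, width, height) in pixels, as a face detector might report.
    """
    x, y, w, h = face_box
    frame_w, frame_h = frame_size
    # Offset of the face centre from the frame centre, normalised to [-1, 1].
    err_x = ((x + w / 2) - frame_w / 2) / (frame_w / 2)
    err_y = ((y + h / 2) - frame_h / 2) / (frame_h / 2)
    # Ignore small errors so the servos do not jitter around the centre.
    if abs(err_x) > dead_zone:
        pan_deg -= gain_deg * err_x   # sign depends on how the servo is mounted
    if abs(err_y) > dead_zone:
        tilt_deg += gain_deg * err_y  # sign depends on how the servo is mounted
    return clamp(pan_deg, 0, 180), clamp(tilt_deg, 0, 180)
```

Calling this once per video frame with the latest detection gives smooth following; the dead zone keeps the camera still when the face is already near the centre.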

State Machine

The state machine is an internal module that responds to the detected emotion. It does not generate natural language as a human would; instead, it selects the most appropriate response for the situation from a set of pre-prepared responses.
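A minimal sketch of such a response selector is shown below. It assumes the decoder's valence and activation outputs lie in [-1, 1] and maps them to four coarse states by quadrant. The state names, the response texts, and the rule that a new emotion must persist for a few frames before the state changes are all hypothetical choices made for illustration.

```python
# Hypothetical pre-prepared responses, one per coarse emotional state.
RESPONSES = {
    "excited": "You seem to be in great spirits!",
    "calm": "Glad to see you are feeling relaxed.",
    "distressed": "You seem tense. Would you like to take a short break?",
    "sad": "You seem a little down. I am here if you want to talk.",
}


def classify(valence: float, activation: float) -> str:
    """Map a (valence, activation) pair to a quadrant label."""
    if valence >= 0:
        return "excited" if activation >= 0 else "calm"
    return "distressed" if activation >= 0 else "sad"


class ResponseStateMachine:
    """Switches state only after a new label persists for hold_frames steps."""

    def __init__(self, hold_frames: int = 3) -> None:
        self.state = "calm"
        self.hold_frames = hold_frames
        self._candidate = None
        self._count = 0

    def step(self, valence: float, activation: float) -> str:
        label = classify(valence, activation)
        if label == self.state:
            # Current state confirmed; discard any pending transition.
            self._candidate, self._count = None, 0
        elif label == self._candidate:
            self._count += 1
        else:
            self._candidate, self._count = label, 1
        if self._candidate is not None and self._count >= self.hold_frames:
            self.state = self._candidate
            self._candidate, self._count = None, 0
        return RESPONSES[self.state]
```

Holding the state for a few frames avoids flickering between responses when a single noisy prediction crosses a quadrant boundary.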

UI Subsystem - FVE

This module is the user interface. It displays the user's emotions and the output of the state machine.

UI Subsystem - SVE