Fall Validation Experiment (FVE)

Experiment A

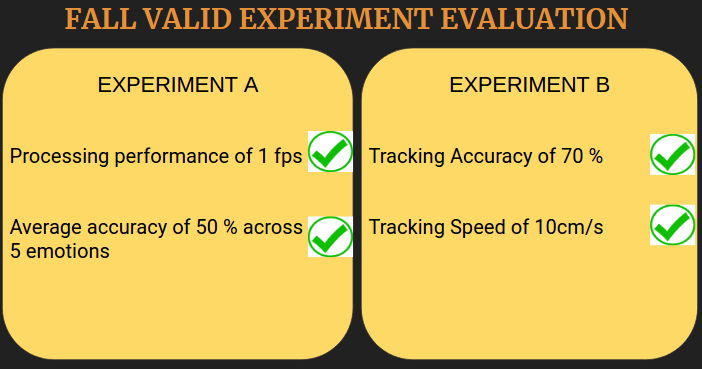

The goal of experiment A was to test the emotion recognition system on the test set of the SEMAINE dataset. The success criteria were a processing performance of at least 1 frame per second and an average accuracy of at least 50% across 5 emotions over all 8 test videos. Both criteria were met. Our system processed more than 100 fps, and our average emotion recognition accuracy across 3 different methods of decoding emotions from valence, arousal, power, and expectation was 50.6%. We therefore passed both requirements for experiment A during the FVE and the FVE Encore.

Experiment B

The goal of experiment B was to track a person’s face while they moved at a maximum distance of 1 metre from the camera. The success criterion stated that the person should be tracked for at least 70% of the length of the experiment while moving left to right in front of the camera at a maximum speed of 10 cm/sec. We achieved 100% tracking accuracy at a tracking speed of ~15 cm/sec during the FVE and the FVE Encore.
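The 70% criterion above can be read as a fraction of frames in which the face was tracked. The following is a minimal sketch under that assumption; the function names and the per-frame boolean log are hypothetical and are not taken from the project's code.

```python
def tracked_fraction(face_found):
    """Return the fraction of frames in which the face was tracked.

    face_found is an assumed per-frame log: True if the tracker
    reported a face in that frame, False otherwise.
    """
    if not face_found:
        raise ValueError("no frames recorded")
    return sum(1 for found in face_found if found) / len(face_found)


def meets_tracking_criterion(face_found, required_fraction=0.70):
    """Check the log against the required tracked fraction (70% by default)."""
    return tracked_fraction(face_found) >= required_fraction


if __name__ == "__main__":
    # Illustrative input only, not data from the experiment.
    frames = [True] * 9 + [False]
    print(tracked_fraction(frames), meets_tracking_criterion(frames))
```

Measuring by elapsed time rather than by frame count would give the same result only if the frame rate is constant.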

Spring Validation Experiment (SVE)

Experiment A

The goal of experiment A is to showcase Lukabot's ability to detect emotions from 3 data modalities (visual, verbal and vocal) in the real world. We are no longer demonstrating on datasets, but testing under true-to-life conditions. The text element of the data will come from a pre-decided script that the person will be asked to act out. This experiment will exercise almost the full capability of the Lukabot.

Experiment B

We will also conduct individual subsystem tests to see how each subsystem performs on its own. The first (experiment B) will test the visual subsystem of our multimodal emotion recognition. The tests will remain the same as in the multimodal experiment, but with only the visual pipeline in use. Since these will be real-time tests, accuracy will be measured against human-assigned ratings.

Experiment C

The second (experiment C) will test the text subsystem of our multimodal emotion recognition. The tests will remain the same as in the multimodal experiment, but with only the text pipeline in use. The text will be a pre-decided script that is fed into the text pipeline. The robot will have to predict emotions from that one modality alone, with a lower expected accuracy than in the multimodal test.

Experiment D

The goal of experiment D is to demonstrate the Lukabot's ability to track a human with 2 degrees of freedom. This means that the robot will be able to track the face of a human as they walk across the room in front of it, and also as they approach or move away from the camera. We will be using servomotors instead of stepper motors, so the robot will detect the centroid coordinate of the face and use it to send corrective turn signals to the servos, aligning the centre of Lukabot's field of view with the face. It should be able to do this while the human walks at 15 cm/s across the robot's field of view, and at 15 cm/s towards and away from the robot.
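The centroid-based correction described above can be sketched as a simple proportional controller. This is an illustration under stated assumptions, not the project's implementation: the pan and tilt axes, the gain, the deadband, and the sign conventions are all hypothetical, and the source does not say which two axes the servos drive.

```python
def servo_correction(face_cx, face_cy, frame_w, frame_h,
                     gain_deg=20.0, deadband=0.05):
    """Map the face centroid's offset from the image centre to
    (pan, tilt) corrections in degrees.

    Assumptions (not from the source): pixel coordinates with the
    origin at the top-left corner, a proportional gain of gain_deg
    degrees at the edge of the frame, and a small deadband so the
    servos do not jitter when the face is already near the centre.
    """
    # Normalised error in [-1, 1]: 0 means the face is centred.
    err_x = (face_cx - frame_w / 2) / (frame_w / 2)
    err_y = (face_cy - frame_h / 2) / (frame_h / 2)

    pan = 0.0 if abs(err_x) < deadband else gain_deg * err_x
    tilt = 0.0 if abs(err_y) < deadband else gain_deg * err_y
    return pan, tilt


if __name__ == "__main__":
    # Face at the right edge of a 640x480 frame, vertically centred.
    print(servo_correction(640, 240, 640, 480))
```

In practice the returned corrections would be added to the current servo angles and clamped to the servos' mechanical range. Tracking approach and retreat would need a further signal, such as the size of the detected face, which this sketch does not cover.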

Experiment E

We will conduct a test that will showcase the system's ability not only to detect emotions but also to respond accordingly. This will be a state machine test in which we input an emotional state to the computer and it outputs a phrase whose sentiment is relevant to that emotional state. Note that we are not expecting a syntactically and semantically coherent natural language sentence.
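The input-to-phrase behaviour described above can be sketched as a lookup keyed on the emotional state. The emotion labels and phrases below are placeholders chosen for illustration; the source does not name the five emotions or the phrases the system actually produces.

```python
# Hypothetical emotion labels and responses, for illustration only.
RESPONSES = {
    "happy": "That is wonderful to hear.",
    "sad": "I am sorry you feel that way.",
    "angry": "I understand that this is frustrating.",
    "afraid": "You are safe here.",
    "neutral": "Tell me more.",
}

FALLBACK = "I am not sure how you feel."


def respond(emotion):
    """Return a phrase whose sentiment matches the given emotional state.

    Unknown states map to a fallback phrase instead of raising, so the
    test harness always receives some output.
    """
    return RESPONSES.get(emotion.strip().lower(), FALLBACK)


if __name__ == "__main__":
    for state in ("happy", "Sad", "confused"):
        print(state, "->", respond(state))
```

A table like this keeps the test deterministic: each input state has exactly one expected output, which makes it straightforward to judge whether the response is relevant to the input.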

Combined SVE Test

Here, a moving actor will enact the five emotions in front of our system at regular conversation speeds. Lukabot should keep the actor's face within view 90% of the time and detect emotions from the visual, verbal and vocal modalities with an accuracy of at least 50%.

Results

The tests were conducted and the system was found to meet all requirements. Check out the media tab for demos.