The goal of the project is to develop a system that helps combat the growing problem of large-scale destruction caused by wildfires.

In the US, in 2020 alone:

- There were 58,950 wildfires compared with 50,477 in 2019

- 10.1 million acres were burned in 2020, compared with 4.7 million acres in 2019

- Six of the 20 largest California wildfires occurred in 2020

- 17,904 structures were burned (54% of them residences)

Our project aims to create a solution to assist firefighters in approaching and extinguishing fires by giving them a means to visualize wildfires at locations they cannot physically access or view.

Primary Use Case:

Firefighters need up-to-date information to plan their approach routes to a fire. Teams already in the field also need to know when an exit route has become unavailable or is likely to become unusable soon.

This information must be available within a short time frame, because local wind patterns dramatically affect how a fire spreads and are not captured in satellite images. For example, a major problem firefighters currently face is that a fire can spread across a street, bridge, or body of water between two successive visualizations they receive, so updates that arrive too far apart are of little use to them.

They also require visualization at a very high spatial resolution, on the order of the size of the routes that matter to them. Fire maps with grid cells spanning hundreds of meters are of little use to firefighters in the field. In line with the needs presented above, our system is intended to be used as follows:

“Firefighters approach an open field in which a wildfire is actively spreading. A firefighter inputs a GPS estimate of the wildfire location into the drone system and places the drone on the ground. The drone autonomously takes off and flies to the GPS location.

On reaching the location, the drone begins detecting the wildfire by segmenting the fire out of the scene, and then identifies the perimeter of the fire using thermal and RGB data. The drone follows the identified perimeter of the fire while sending data back to the ground station.

This data is then used to visualize the fire on a map for the firefighting crew. After 10 minutes, the drone heads back and lands at its original takeoff position.”
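The segmentation and perimeter steps in the scenario above can be illustrated with a minimal sketch. It is not the project's actual perception pipeline: it assumes a single thermal frame given as a grid of temperatures in degrees Celsius, uses a hypothetical fixed threshold (`threshold_c`) to segment fire pixels, ignores the RGB data entirely, and treats any fire pixel with a non-fire 4-neighbour as part of the perimeter. The function names `segment_fire` and `fire_perimeter` are illustrative only.

```python
def segment_fire(thermal_c, threshold_c=150.0):
    """Return a boolean grid marking pixels hotter than threshold_c.

    threshold_c is an assumed placeholder value, not a calibrated one.
    """
    return [[t > threshold_c for t in row] for row in thermal_c]


def fire_perimeter(mask):
    """Return (row, col) pairs of fire pixels that border a non-fire pixel.

    Pixels outside the image are treated as non-fire, so fire touching
    the image edge is also reported as perimeter.
    """
    rows = len(mask)
    cols = len(mask[0]) if mask else 0

    def is_fire(r, c):
        return 0 <= r < rows and 0 <= c < cols and mask[r][c]

    neighbours = ((-1, 0), (1, 0), (0, -1), (0, 1))
    perimeter = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            # A fire pixel is interior only if all four neighbours are fire.
            if not all(is_fire(r + dr, c + dc) for dr, dc in neighbours):
                perimeter.append((r, c))
    return perimeter


# Example: a 3x3 hot block in the middle of a 5x5 frame.
frame = [[20.0] * 5 for _ in range(5)]
for r in range(1, 4):
    for c in range(1, 4):
        frame[r][c] = 400.0

edge = fire_perimeter(segment_fire(frame))
print(len(edge))  # 8: every hot pixel except the centre one
```

A real system would likely fuse thermal and RGB cues and convert perimeter pixels to GPS coordinates before sending them to the ground station; both steps are outside the scope of this sketch.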

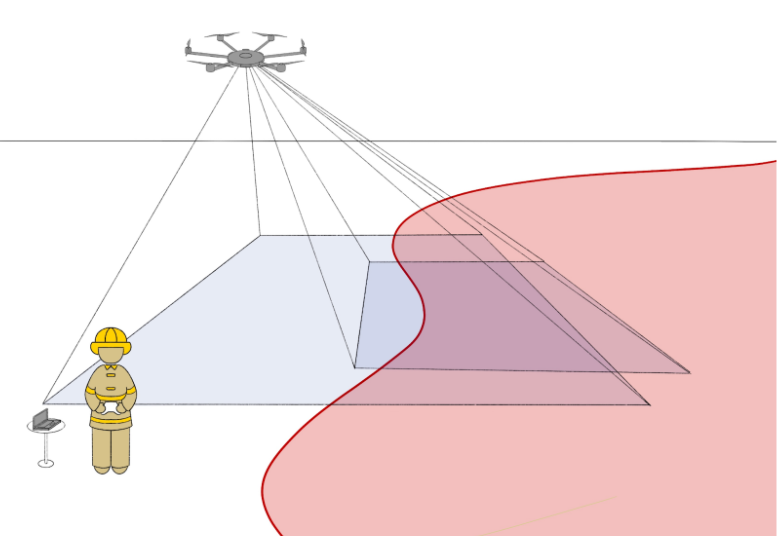

System Graphical Representation:

The figure depicts a firefighter in the bottom left corner with a laptop and a controller used to communicate with the drone. The drone is shown flying at the top of the image with its fields of view visualized in blue; the drone setup includes two separate cameras with differing fields of view, as depicted in the image. The images are used to perceive, map, and track the wildfire perimeter while sending relevant data back to the ground station.