System Requirements

Based on our analysis of user needs and use cases, we arrived at the following system requirements:

Mandatory Performance Requirements

The system shall:

M.P.1 Have an operating range greater than 250 meters

M.P.2 Classify fire pixels from thermal images with an accuracy of at least 70%

M.P.3 Provide users with fire map updates covering at least 25 m x 25 m at a spatial resolution of 0.5 m x 0.5 m at least once every 10 seconds

M.P.4 Localize 5 fire hotspots with an absolute accuracy of ± 5 meters and a relative accuracy of ± 2.5 meters in a 10,000 square meter region within 15 minutes, with 0 false positives and 0 false negatives
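As a rough illustration of what M.P.2 and M.P.3 imply numerically, the sketch below computes the number of grid cells in one map update and a simple pixel accuracy score. The function names `cells_per_side` and `pixel_accuracy` are hypothetical, and the accuracy definition (fraction of pixels labeled correctly) is an assumption, since the requirement does not state which metric is used.

```python
import math


def cells_per_side(extent_m: float, resolution_m: float) -> int:
    """Number of grid cells needed along one side to cover extent_m."""
    return math.ceil(extent_m / resolution_m)


def pixel_accuracy(predicted, truth) -> float:
    """Fraction of pixels whose predicted label matches the ground truth.

    Both arguments are 2D lists of 0/1 labels with the same shape.
    This metric is an assumption; the requirement does not define it.
    """
    total = 0
    correct = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            total += 1
            correct += int(p == t)
    if total == 0:
        raise ValueError("masks must contain at least one pixel")
    return correct / total


# M.P.3: a 25 m x 25 m update at 0.5 m x 0.5 m resolution.
side = cells_per_side(25.0, 0.5)
cells_per_update = side * side

# M.P.2: compare a toy prediction against a toy ground-truth mask.
toy_accuracy = pixel_accuracy([[1, 0], [0, 0]], [[1, 1], [0, 0]])
meets_mp2 = toy_accuracy >= 0.70
```

Under these assumptions, each map update covers a 50 x 50 grid, so at least 2,500 cells must be refreshed every 10 seconds.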

Mandatory Non-Functional Requirements

The system shall:

M.N.1 Meet FAA regulations for an sUAS

M.N.2 Have a user-friendly ground station interface

M.N.3 Be easy to use

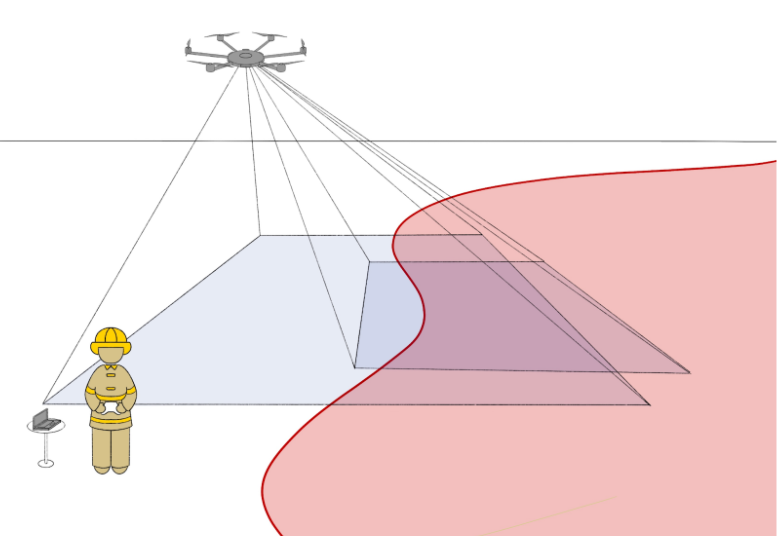

M.N.4 Use angled cameras so that a wildfire can be monitored without flying the sUAS through the smoke directly above the fire

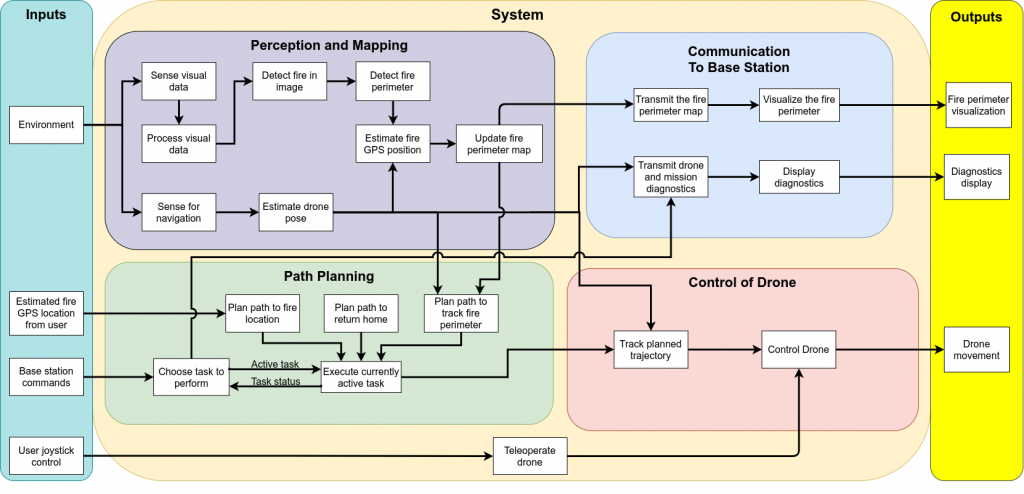

Functional Architecture

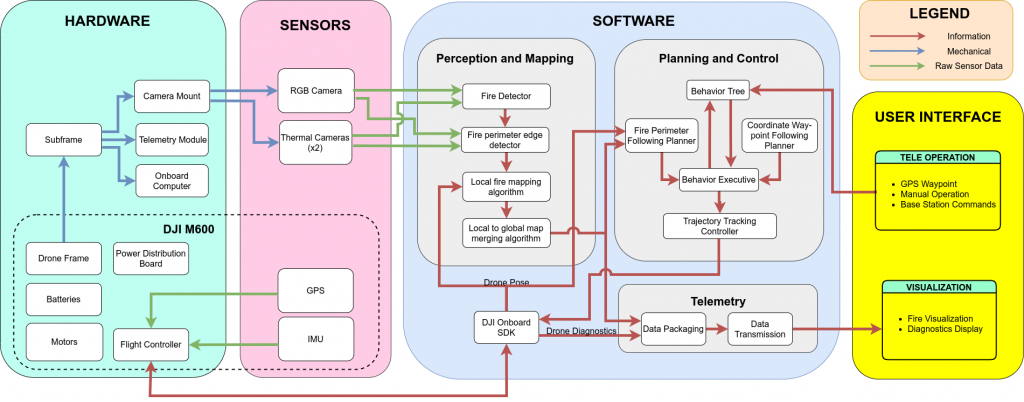

Cyberphysical Architecture

System Design Description

The illustration below shows how we envision the complete system working:

Subsystems:

Hardware platform

We chose a DJI M600 Pro hex-rotor UAV as the platform to carry our sensors and navigate the site of the wildfire. The M600 Pro has a payload capacity of 6 kg and a no-load flight time of 30 minutes, and it will carry our payload (optics and on-board computer).

We arrived at this choice after considering alternatives among fixed-wing, VTOL, and other multi-rotor UAVs. The M600 Pro proved to be the best choice in terms of range and maneuverability.

DJI M600 Pro

Optics

Our optics system will sense the local conditions of the fire and provide input to the perception subsystem. We will use a FLIR Boson uncooled thermal camera for this purpose because, unlike an RGB camera, it can detect a fire through smoke and at night. Using a cooled thermal camera is not feasible due to weight and power constraints. Another alternative is a radiometric camera, which we decided against because of its high cost and because the FLIR Boson was readily available to us.

FLIR Boson

Planning

The fire monitoring path planner subsystem is responsible for generating paths for the drone to follow so that it can perceive the entire fire perimeter and generate a global map. This is important because the drone cannot see the entire fire perimeter from a single location.

Based on the results of our trade studies, we will use the Importance-Based, Alternating Direction path planner. This planner switches between traveling clockwise and counterclockwise around the perimeter. It takes as input a global map of the fire perimeter represented as cells in a grid. For each fire front cell, it calculates an importance value based on an estimate of how far the fire will have traveled since the previous visit by the time the drone reaches that cell. Cells with a high rate of spread and cells that have not been visited in a long time have the highest importance.
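The importance calculation described above can be sketched as follows. This is a minimal illustration, not the planner itself: the `FrontCell` fields, the constant travel time per cell, the look-ahead `horizon`, and the rule of picking the direction with the larger summed importance are all assumptions introduced here. The perimeter is assumed to be stored as a ring of cells in which increasing index means clockwise travel.

```python
from dataclasses import dataclass


@dataclass
class FrontCell:
    spread_rate: float  # estimated rate of spread at this cell, in m/s
    last_visit: float   # time the drone last observed this cell, in s


def importance(cell: FrontCell, now: float, travel_time: float) -> float:
    """Estimated distance (m) the fire will have traveled at this cell
    between the previous visit and the drone's arrival."""
    return cell.spread_rate * ((now - cell.last_visit) + travel_time)


def choose_direction(ring, drone_index, now, seconds_per_cell, horizon):
    """Return +1 (clockwise) or -1 (counterclockwise).

    Hypothetical decision rule: sum the importance of the next `horizon`
    cells in each direction and travel toward the larger total.
    """
    n = len(ring)
    scores = {}
    for step in (+1, -1):
        total = 0.0
        for k in range(1, horizon + 1):
            cell = ring[(drone_index + step * k) % n]
            total += importance(cell, now, k * seconds_per_cell)
        scores[step] = total
    return 1 if scores[1] >= scores[-1] else -1


# Six perimeter cells, all just visited except the last one in the ring.
ring = [FrontCell(spread_rate=0.1, last_visit=100.0) for _ in range(6)]
ring[5].last_visit = 0.0
direction = choose_direction(ring, drone_index=0, now=100.0,
                             seconds_per_cell=2.0, horizon=2)
```

In this toy case the stale cell sits immediately counterclockwise of the drone, so the sketch selects the counterclockwise direction.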

Mapping

The mapping subsystem will create and maintain a global fire perimeter map, which includes localizing the edge of the fire in the global frame. The map will consist of grid cells, each marked according to whether it is on fire. Cells will be updated using the GPS coordinates of the drone and ray-tracing: when the drone is flying over an area, it will detect whether the area is on fire, detect the fire’s perimeter, and then update the grid cell corresponding to that area in the map. For simplicity, the terrain in the fire region will be assumed to be flat during ray-tracing.
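Under the flat-terrain assumption, ray-tracing reduces to intersecting a camera ray with the plane z = 0. The sketch below illustrates that step and the grid update. It assumes the drone position has already been converted from GPS to a local metric frame (x east, y north, z up) and that the ray direction for a pixel is known in that frame; the function names and the map origin are hypothetical.

```python
import math


def ray_ground_intersection(origin, direction):
    """Intersect a ray with the flat ground plane z = 0.

    origin: (x, y, z) camera position in meters, with z the height above ground.
    direction: (dx, dy, dz) ray direction; it does not need to be normalized.
    Returns the (x, y) ground point, or None if the ray never reaches the ground.
    """
    ox, oy, oz = origin
    dx, dy, dz = direction
    if oz <= 0 or dz >= 0:
        return None  # camera not above ground, or ray points at or above the horizon
    t = -oz / dz
    return (ox + t * dx, oy + t * dy)


def world_to_cell(x, y, map_origin=(0.0, 0.0), resolution=0.5):
    """Convert a ground point to (row, col) indices in the grid map."""
    col = math.floor((x - map_origin[0]) / resolution)
    row = math.floor((y - map_origin[1]) / resolution)
    return row, col


def mark_cell(grid, point, on_fire, map_origin=(0.0, 0.0), resolution=0.5):
    """Set the cell containing `point` to `on_fire`; return False if off the map."""
    row, col = world_to_cell(point[0], point[1], map_origin, resolution)
    if 0 <= row < len(grid) and 0 <= col < len(grid[0]):
        grid[row][col] = on_fire
        return True
    return False


# A 25 m x 25 m map at 0.5 m resolution, observed from 10 m altitude.
grid = [[False] * 50 for _ in range(50)]
hit = ray_ground_intersection((0.0, 0.0, 10.0), (1.0, 0.5, -1.0))
updated = mark_cell(grid, hit, on_fire=True)
```

Because the ray here is angled rather than pointing straight down, the marked cell lies ahead of the drone, which matches the intent of monitoring the fire without flying directly above it.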

Perception

The fire front perception subsystem is responsible for detecting whether an image contains fire. If it does, the subsystem then detects the edge of the fire in the image, which corresponds to the fire front.
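The two steps above can be illustrated on a binary fire mask, such as one produced by a per-pixel classifier. The sketch below is one simple way to extract an edge and is an assumption, not the project's method: a fire pixel is treated as part of the front if at least one of its four in-image neighbors is not fire. The function names are hypothetical.

```python
def contains_fire(mask) -> bool:
    """Return True if any pixel in the 2D mask is labeled as fire."""
    return any(any(row) for row in mask)


def fire_front_pixels(mask):
    """Return (row, col) positions of fire pixels that border a non-fire pixel.

    Uses 4-connectivity. Neighbors outside the image are ignored, so fire
    touching the image border is not counted as front on that side.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    front = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and not mask[nr][nc]:
                    front.append((r, c))
                    break
    return front


# A 5 x 5 image with a 3 x 3 block of fire in the middle.
mask = [[1 if 1 <= r <= 3 and 1 <= c <= 3 else 0 for c in range(5)]
        for r in range(5)]
front = fire_front_pixels(mask) if contains_fire(mask) else []
```

For this toy mask, every fire pixel except the center of the block is reported as part of the front.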

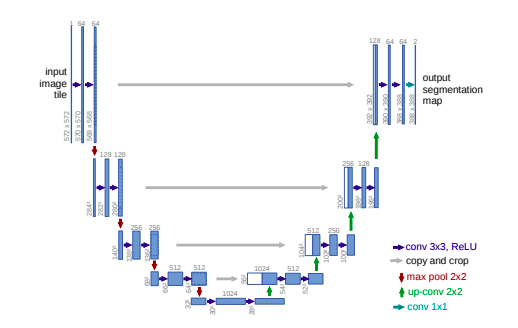

We intend to implement the UNet architecture shown below for three reasons: it works well with limited training data, which is a constraint for us; it is small enough to run in real time; and it is state of the art.

UNet architecture

(Ronneberger et al., U-Net: Convolutional Networks for Biomedical Image Segmentation, 2015)