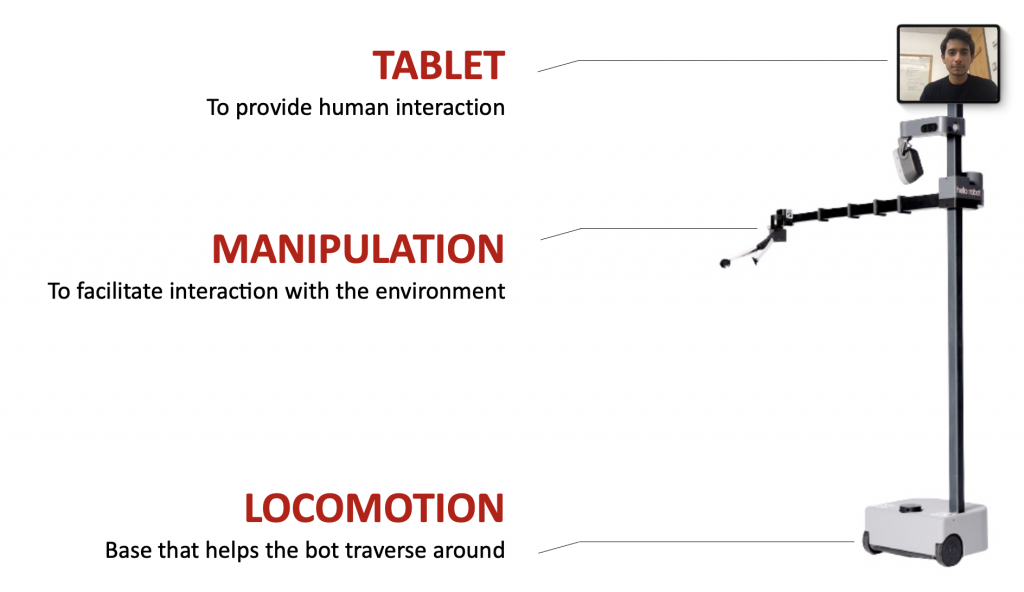

FULL SYSTEM DEVELOPMENT

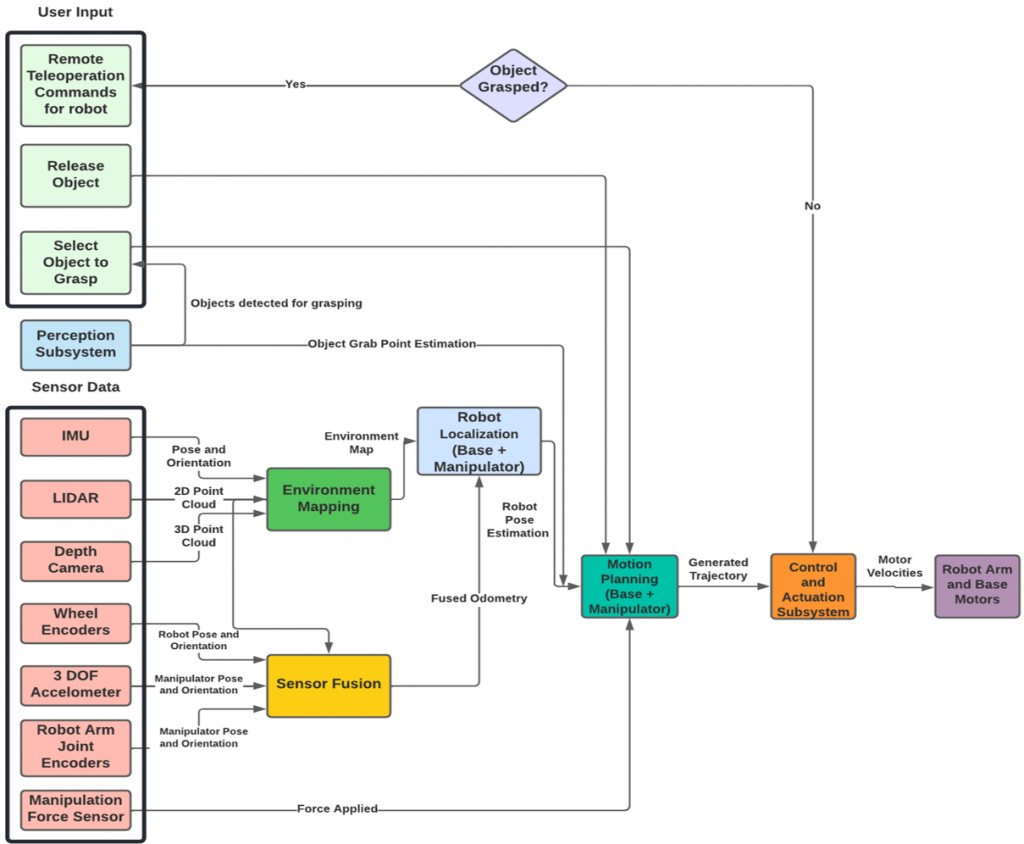

This is the overall TouRI system. The platform is a Stretch RE1, designed and built by Hello Robot. It consists of a mobile base for navigation and a 2-DOF manipulator with a 1-DOF end effector. It carries several sensors: an Intel RealSense D435i camera for perception tasks; an RP-Lidar A1, which we use for navigation and obstacle avoidance; and a 9-DOF IMU, which we fuse with the lidar and wheel encoders to get a better estimate of the robot's position during localization. Encoders in both the base wheels and the arm provide the robot's pose. There is also a pan-tilt display, which we designed and built ourselves, that serves as the video-call interface for the remote user during a virtual tour. With this display, only the screen has to rotate, rather than the entire robot, when the remote user wants to look at or interact with something in another direction.
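The point of the pan-tilt display is that the screen, not the base, turns toward what the remote user wants to see. The geometry can be sketched as follows, assuming the target's position is already known in a frame centered on the display's rotation axes (x forward, y left, z up). The function name and the joint limits are hypothetical placeholders, not values from our gimbal design.

```python
import math

# Hypothetical joint limits for the pan-tilt display, in degrees
# (placeholders, not taken from the TouRI hardware design).
PAN_LIMIT_DEG = 170.0
TILT_LIMIT_DEG = 45.0


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def pan_tilt_to_target(x: float, y: float, z: float) -> tuple[float, float]:
    """Return (pan, tilt) in degrees that point the display at (x, y, z).

    The point is expressed in an assumed display frame: x forward, y left,
    z up, with the origin at the pan/tilt rotation center.
    """
    pan = math.degrees(math.atan2(y, x))                  # rotation about the vertical axis
    tilt = math.degrees(math.atan2(z, math.hypot(x, y)))  # elevation above the horizontal plane
    return (clamp(pan, -PAN_LIMIT_DEG, PAN_LIMIT_DEG),
            clamp(tilt, -TILT_LIMIT_DEG, TILT_LIMIT_DEG))


if __name__ == "__main__":
    # A person 1 m to the left, level with the display: pan of about 90 degrees, no tilt.
    print(pan_tilt_to_target(0.0, 1.0, 0.0))
```

Turning a lightweight display through these two angles is much faster and less disruptive than re-orienting the whole base, which is why only the display moves.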

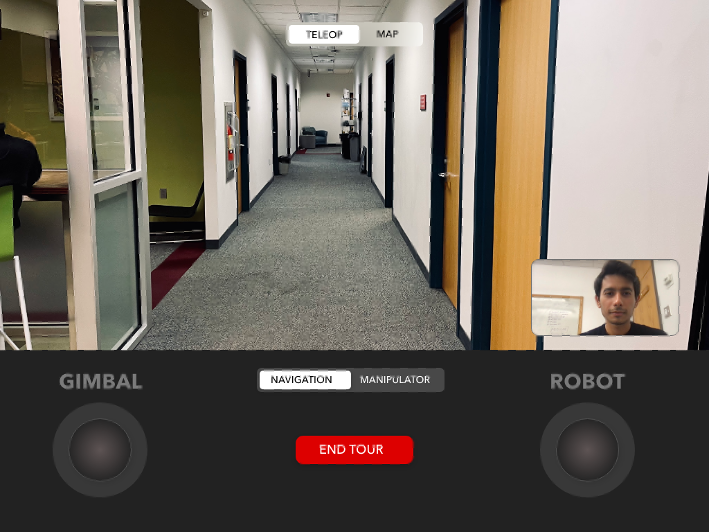

INTERFACE

FVD Update: All screens of the user interface have been developed, and the interface has been integrated with all the subsystems.

PROGRESS

✅ Tele-op navigation controls

✅ Tele-op manipulation controls

✅ Database-link

✅ Bot-side architecture

✅ Tele-op gimbal controls

✅ Autonomous commands

✅ Audio-video link [Completed in Fall]

✅ Asynchronous operation of the pan-tilt display [Completed in Fall]

✅ System integration [Completed in Fall]

Key

✅ Finished ☑️ On-Going ⏳TODO
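The interface has to route tele-op, gimbal, and autonomous commands from the app to the right subsystem on the robot. The message format is not described above, so the following is only a sketch of one way to do that routing, assuming a hypothetical JSON schema of the form `{"subsystem": ..., "action": ..., "args": {...}}`. The class name, schema, and subsystem names are illustrative, not the actual TouRI protocol.

```python
import json
from typing import Callable, Dict

# Hypothetical handler signature: (action, args) -> status string.
Handler = Callable[[str, dict], str]


class CommandDispatcher:
    """Route JSON commands from the app to per-subsystem handlers."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, subsystem: str, handler: Handler) -> None:
        self._handlers[subsystem] = handler

    def dispatch(self, raw_message: str) -> str:
        try:
            msg = json.loads(raw_message)
        except json.JSONDecodeError:
            return "error: malformed JSON"
        if not isinstance(msg, dict):
            return "error: expected a JSON object"
        subsystem = msg.get("subsystem")
        # Check the type first: an unhashable value (e.g. a list) cannot be a dict key.
        if not isinstance(subsystem, str) or subsystem not in self._handlers:
            return f"error: unknown subsystem {subsystem!r}"
        action = msg.get("action")
        if not isinstance(action, str):
            return "error: missing action"
        args = msg.get("args", {})
        if not isinstance(args, dict):
            return "error: args must be an object"
        return self._handlers[subsystem](action, args)


if __name__ == "__main__":
    dispatcher = CommandDispatcher()
    # Stand-in handlers; on the robot these would publish to ROS topics instead.
    dispatcher.register("navigation", lambda action, args: f"nav:{action}")
    dispatcher.register("gimbal", lambda action, args: f"gimbal:{action}:{args.get('pan', 0)}")
    print(dispatcher.dispatch('{"subsystem": "gimbal", "action": "move", "args": {"pan": 15}}'))
```

Validating every message before it reaches a subsystem keeps a malformed or unexpected command from the app from ever turning into robot motion.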

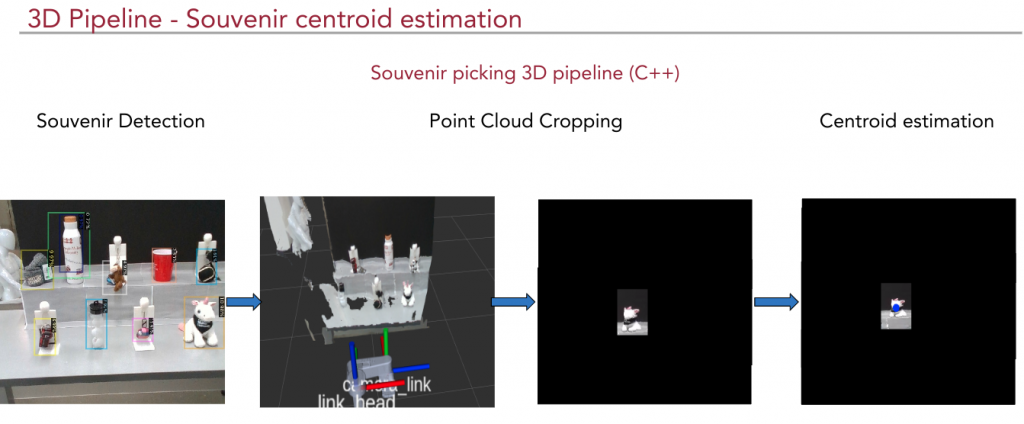

PERCEPTION

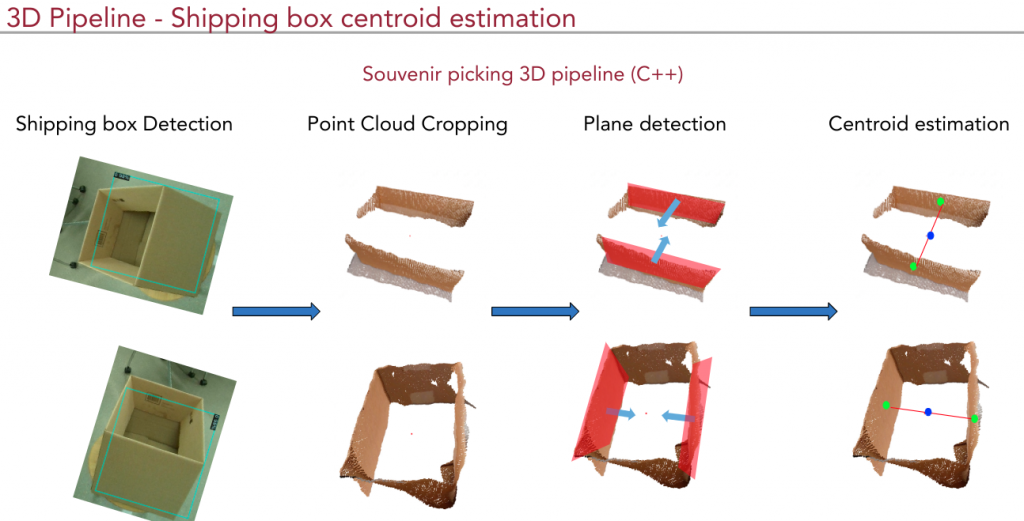

The 2D detection pipeline and the 3D centroid-estimation pipeline for the souvenirs and the shipping box have been implemented. The following images describe the pipeline at a high level:

All planned development for the perception subsystem, including its integration with the manipulation and interface subsystems, has been completed.
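The step from a 2D detection to a 3D centroid can be sketched as back-projecting the depth pixels inside the detected bounding box through a pinhole camera model. This sketch assumes a depth image aligned to the color image and given in meters, with intrinsics `fx`, `fy`, `cx`, `cy`; the median depth band used to discard background pixels is our own assumption, not necessarily what the pipeline does. For a real RealSense stream, `pyrealsense2` provides `rs2_deproject_pixel_to_point`, which performs the per-pixel back-projection (including the camera's distortion model).

```python
from statistics import median_low
from typing import Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def deproject(u: float, v: float, depth: float,
              fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Pinhole back-projection of pixel (u, v) at the given depth (camera frame)."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def bbox_centroid(depth_m: Sequence[Sequence[float]],
                  bbox: Tuple[int, int, int, int],
                  fx: float, fy: float, cx: float, cy: float,
                  band_m: float = 0.05) -> Optional[Point3D]:
    """Estimate an object's 3D centroid from the depth pixels inside a 2D box.

    bbox is (u_min, v_min, u_max, v_max) with exclusive max bounds. Pixels with
    zero depth (no return) are skipped; pixels farther than band_m from the
    median depth are treated as background and dropped.
    """
    u_min, v_min, u_max, v_max = bbox
    samples = [(u, v, depth_m[v][u])
               for v in range(v_min, v_max)
               for u in range(u_min, u_max)
               if depth_m[v][u] > 0.0]
    if not samples:
        return None
    # median_low returns an actual sample, so at least one point survives the band filter.
    mid = median_low(d for _, _, d in samples)
    points = [deproject(u, v, d, fx, fy, cx, cy)
              for u, v, d in samples if abs(d - mid) <= band_m]
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)
```

The median filter assumes the object fills most of its bounding box; a tight box, or a segmentation mask instead of a box, makes that assumption hold more often.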

✅ Test the 2D object detection pipeline and the 3D preprocessing pipeline for the perception subsystem

✅ Collect training data and train the model for shipping box detection.

✅ Collect training data and train the model for souvenir objects.

✅ Implement dropping pipeline

✅ Refine grasping pipeline for multiple objects

✅ Integration of manipulation and perception subsystems

✅ Integration of picking and placing pipeline

✅ Test the integrated system as a standalone module.

✅ Improve the inference speed of the 2D pipeline (socket communication to the GPU)

✅ Speed up the 3D perception pipeline by porting the code to C++

✅ Test the entire system.

Key

✅ Finished ☑️ On-Going ⏳TODO
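The checklist above mentions speeding up 2D inference through socket communication with a GPU. The wire format is not described, so here is a minimal sketch assuming a simple length-prefixed framing over TCP, where each message (for example an encoded image, or the detections sent back) is preceded by a 4-byte big-endian length. The framing and function names are assumptions for illustration.

```python
import socket
import struct

HEADER = struct.Struct("!I")  # assumed framing: 4-byte big-endian payload length


def send_frame(sock: socket.socket, payload: bytes) -> None:
    """Send one length-prefixed message."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; a single recv() may return fewer than requested."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_frame(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


if __name__ == "__main__":
    # Loopback demo: one end plays the robot, the other the GPU inference server.
    robot, gpu_server = socket.socketpair()
    send_frame(robot, b"encoded image bytes")
    print(recv_frame(gpu_server))
    robot.close()
    gpu_server.close()
```

TCP is a byte stream with no message boundaries, so some explicit framing like this is needed whenever whole images or result packets are exchanged over a socket.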

NAVIGATION

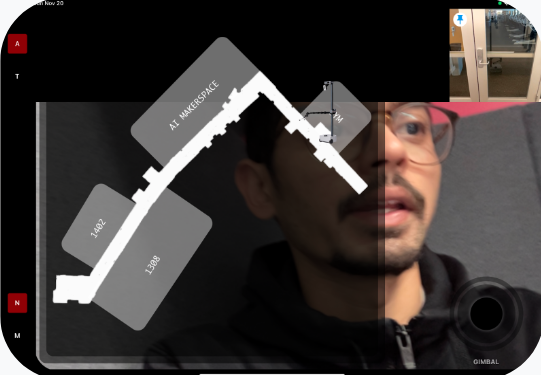

FVD Update: The navigation subsystem is integrated with the user interface. The user now selects the location they want to tour in the app, and the robot navigates to it.

SVD Update: The autonomous navigation subsystem has been set up on the robot and tested. The robot is able to localize itself within a map, plan a path to a goal location, and avoid obstacles on the way.
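Planning a path to a goal location on a map can be illustrated with textbook A* search on an occupancy grid. This is not the global planner configured in our navigation stack (which is not named here); it is a minimal, 4-connected sketch assuming a grid where 0 is free and 1 is occupied, to show how a path around obstacles is found.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col)


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on an occupancy grid (0 = free, 1 = occupied)."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]
    came_from: Dict[Cell, Cell] = {}
    best_g: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parents back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < best_g.get(nxt, float("inf")):
                    best_g[nxt] = ng
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable
```

A real navigation stack adds costmaps (obstacle inflation around the robot's footprint) and a separate local planner that reacts to obstacles seen by the lidar while the global path is followed.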

The progress of the navigation subsystem is as follows:

✅ Robot setup to interface software

✅ Sensor data integration

✅ Environment map generation

✅ Autonomous navigation stack setup

✅ Robot localization using AMCL with laser scan and fused odometry (IMU and wheel encoders)

✅ Robot localization using EKF with laser scan, IMU and AMCL pose fusion

✅ Autonomous navigation stack tuning

✅ Dynamic obstacle avoidance integration

✅ Autonomous navigation stack testing and improvements based on testing [Completed in Fall]

✅ Navigation integration with Interface [Completed in Fall]

✅ Full system integration [Completed in Fall]

Key

✅ Finished ☑️ On-Going ⏳TODO

All the planned work for the navigation subsystem has been completed.
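The checklist mentions fusing wheel odometry, the IMU, and the AMCL pose. A full EKF for this (for example one configured with a package such as robot_localization; we do not state here which implementation we used) estimates x, y, and yaw jointly. The core predict/update idea can be sketched on heading alone, assuming the wheel encoders give a yaw rate and the IMU gives an absolute yaw; because these heading dynamics are linear, the filter reduces to a scalar Kalman filter. The noise values are hypothetical placeholders.

```python
import math


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


class HeadingKF:
    """Scalar Kalman filter on yaw: predict with odometry, correct with IMU yaw."""

    def __init__(self, yaw: float = 0.0, var: float = 1.0,
                 process_var: float = 0.01, imu_var: float = 0.05) -> None:
        self.yaw = yaw
        self.var = var                  # current yaw variance (rad^2)
        self.process_var = process_var  # assumed odometry noise growth (rad^2 per second)
        self.imu_var = imu_var          # assumed IMU yaw measurement noise (rad^2)

    def predict(self, yaw_rate: float, dt: float) -> None:
        """Propagate with the yaw rate derived from the wheel encoders."""
        self.yaw = wrap_angle(self.yaw + yaw_rate * dt)
        self.var += self.process_var * dt

    def update(self, imu_yaw: float) -> None:
        """Fuse an absolute yaw measurement from the IMU."""
        innovation = wrap_angle(imu_yaw - self.yaw)  # shortest angular difference
        gain = self.var / (self.var + self.imu_var)
        self.yaw = wrap_angle(self.yaw + gain * innovation)
        self.var *= 1.0 - gain
```

Wrapping the innovation matters: without it, headings of +3.1 rad and -3.1 rad (nearly the same direction) would be averaged to roughly 0 rad, the opposite direction.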

MANIPULATION

The autonomous manipulation subsystem is used when the robot enters the room to manipulate objects, for example grasping souvenirs and placing them in a shipping box. Once the perception subsystem detects the objects, the manipulation subsystem receives the 3D centroid of the souvenir or shipping box. The Cartesian planner then plans the motion of the base and manipulator needed to pick and place the object, and visual feedback is used to correct errors while the plan is executed.
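Because the base drives along one axis, the lift moves vertically, and the telescoping arm extends sideways, a target centroid can be mapped to these three motions one axis at a time. The following is a minimal sketch of that idea plus one proportional visual-feedback step, assuming a base frame with x forward, y along the arm's extension direction, and z up. All offsets, limits, and names are hypothetical placeholders, not calibrated values for TouRI or the Stretch RE1, and this is not our planner's actual code.

```python
from dataclasses import dataclass

# Hypothetical placeholders, not calibrated robot values.
ARM_X_OFFSET = 0.0          # x of the arm's extension axis in the base frame (m)
ARM_Y_OFFSET = 0.2          # lateral distance from base origin to the retracted gripper (m)
LIFT_RANGE = (0.2, 1.1)     # reachable lift heights (m)
EXTEND_RANGE = (0.0, 0.5)   # reachable arm extension (m)


@dataclass
class JointTargets:
    base_forward: float  # total forward travel of the base from the planning pose (m)
    lift: float          # lift height (m)
    extension: float     # telescoping-arm extension (m)


def clamp(v: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, v))


def plan_cartesian(x: float, y: float, z: float) -> JointTargets:
    """Map a target centroid in the assumed base frame to one command per axis."""
    return JointTargets(
        base_forward=x - ARM_X_OFFSET,
        lift=clamp(z, *LIFT_RANGE),
        extension=clamp(y - ARM_Y_OFFSET, *EXTEND_RANGE),
    )


def correct(targets: JointTargets, err_x: float, err_y: float, err_z: float,
            gain: float = 0.5) -> JointTargets:
    """One proportional correction step; err_* is (object - gripper) as measured
    by the camera and expressed in the same base frame."""
    return JointTargets(
        base_forward=targets.base_forward + gain * err_x,
        lift=clamp(targets.lift + gain * err_z, *LIFT_RANGE),
        extension=clamp(targets.extension + gain * err_y, *EXTEND_RANGE),
    )
```

Repeating the correction step while the camera still sees a residual error is what lets small calibration or perception errors be removed during execution instead of causing a missed grasp.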

All the planned items for the manipulation subsystem have been implemented, as listed below:

✅ Tested and ensured that the manipulation subsystem is working for object grasping and placement

✅ Brainstormed ideas for a different manipulation task

✅ Implement dropping pipeline

✅ Integrate dropping pipeline with perception subsystem

✅ Integration of manipulation and perception subsystems

✅ Test the entire manipulation subsystem as a stand-alone module

✅ Refine grasping pipeline for multiple objects

✅ Improve the robustness of the manipulation subsystem

✅ Integration of manipulation and interface subsystems

✅ Test the entire system

Key

✅ Finished ☑️ On-Going ⏳TODO

HARDWARE

All the planned items in the hardware subsystem have been implemented. The following images show the pan-tilt display in operation and its hardware design.

The progress of the hardware subsystem over the spring and fall semesters is as follows:

✅ Research on the hardware to be used

✅ Power Supply and Gimbal Controller Design

✅ CAD Design for Gimbal

✅ Manufacture Gimbal

✅ PCB for Task 6 and Task 11

✅ Development of Interconnect PCB for Dynamixels

✅ Write ROS node for Gimbal

✅ Fetch commands from App server and publish them to the gimbal subsystem (FVD)

Key

✅ Finished ☑️ On-Going ⏳TODO
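The gimbal ROS node ultimately has to turn pan and tilt commands from the app into servo set-points. A minimal sketch of that conversion, assuming Dynamixel X-series servos in position-control mode (documented resolution of 4096 positions per revolution, values 0 to 4095) and assuming position 2048 corresponds to the display facing straight ahead; the angle limits are hypothetical, and the model's e-Manual should be checked before using these numbers.

```python
TICKS_PER_REV = 4096                    # X-series position resolution (check your model)
CENTER_TICK = 2048                      # assumed "facing straight ahead" position
DEG_PER_TICK = 360.0 / TICKS_PER_REV    # 0.087890625 degrees per tick


def degrees_to_ticks(angle_deg: float, min_deg: float = -90.0, max_deg: float = 90.0) -> int:
    """Convert a joint angle (0 = centered) to a Dynamixel goal-position value."""
    angle_deg = max(min_deg, min(max_deg, angle_deg))  # hypothetical mechanical limits
    tick = CENTER_TICK + round(angle_deg / DEG_PER_TICK)
    return max(0, min(TICKS_PER_REV - 1, tick))        # stay inside the valid register range


def ticks_to_degrees(tick: int) -> float:
    """Inverse conversion, e.g. for reporting the present position back to the app."""
    return (tick - CENTER_TICK) * DEG_PER_TICK
```

Clamping in the angle domain protects the display's cabling and mechanics, while the final clamp keeps the value inside the register range even if the limits are set too wide.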