FVD RESULTS

SVD RESULTS

Spring validation demonstration

| Step | Action | Demonstration | Requirements validated |

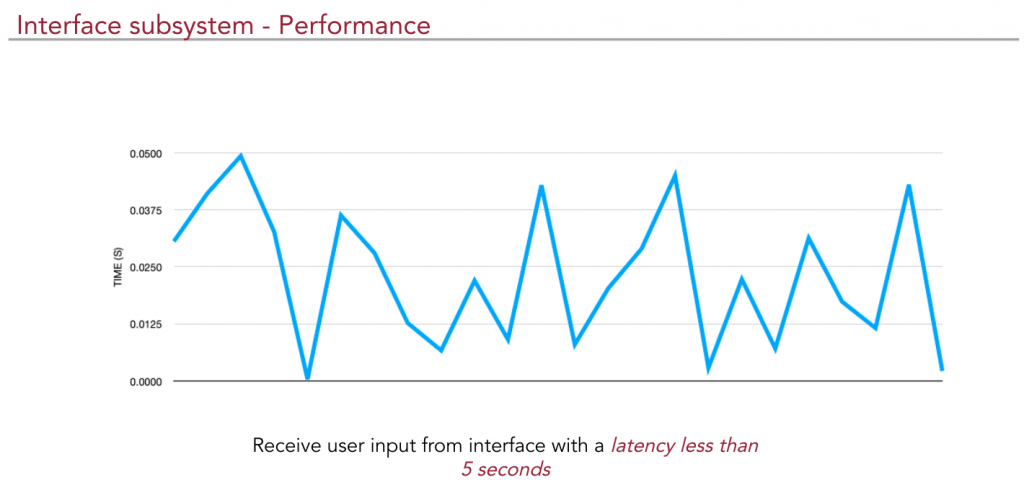

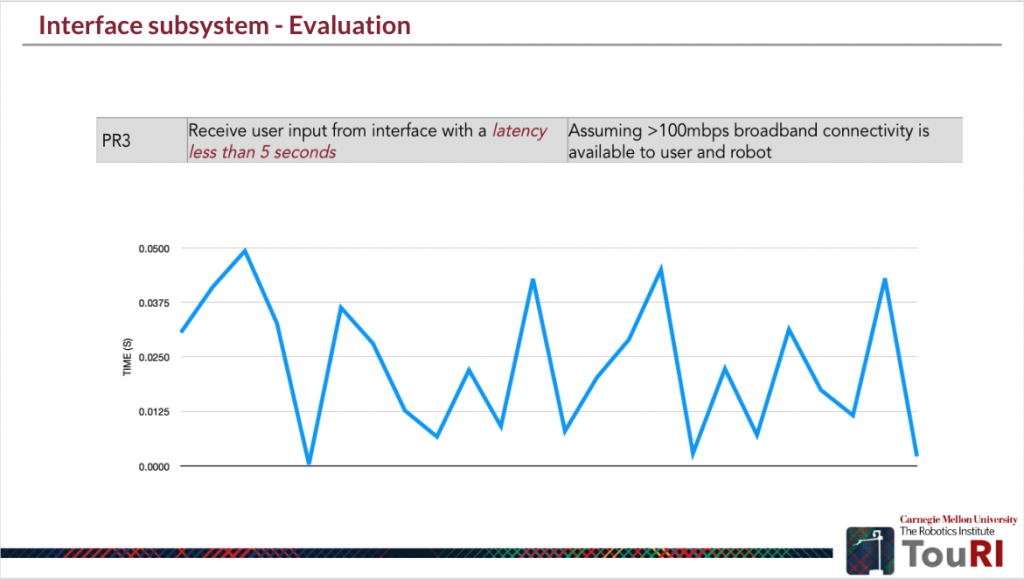

| 1 | User starts the TouRI robot app and selects a location to tour | Demonstrate user interaction with the app and the capability to receive location input from the user within 5 seconds and generate the goal waypoint. Condition for operation: > 100 Mbps broadband connectivity | MP3, MNF1 |

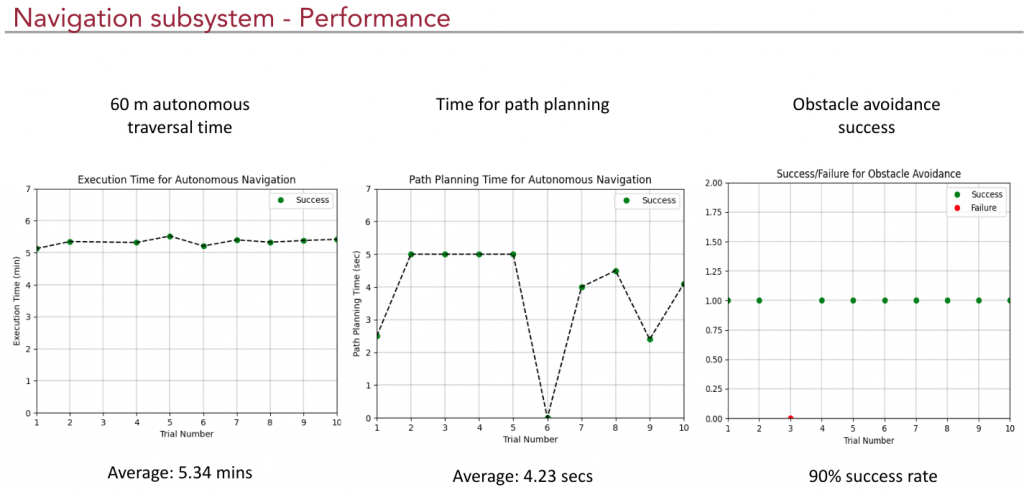

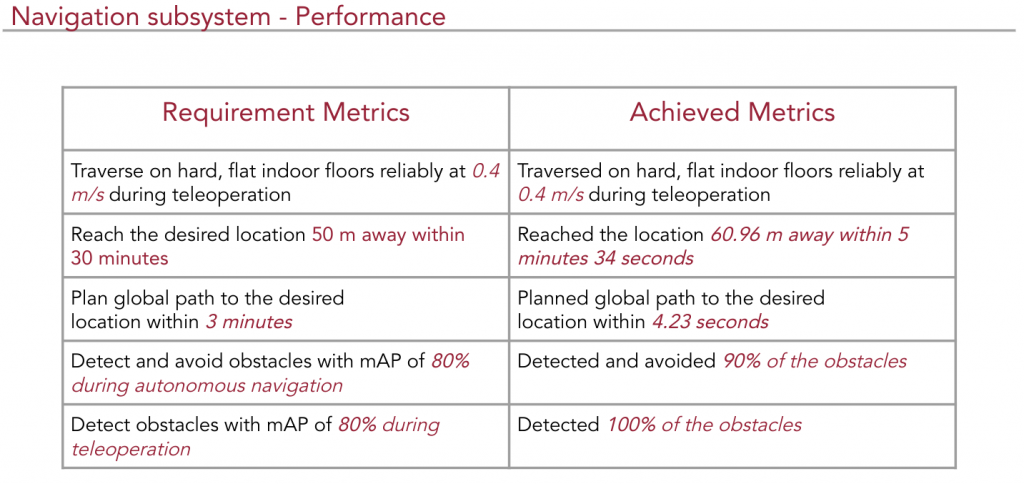

| 2 | Based on the waypoint input, the navigation module generates a global path to the goal location | Demonstrate capability of the navigation module to generate the global path within 3 minutes | MP4 |

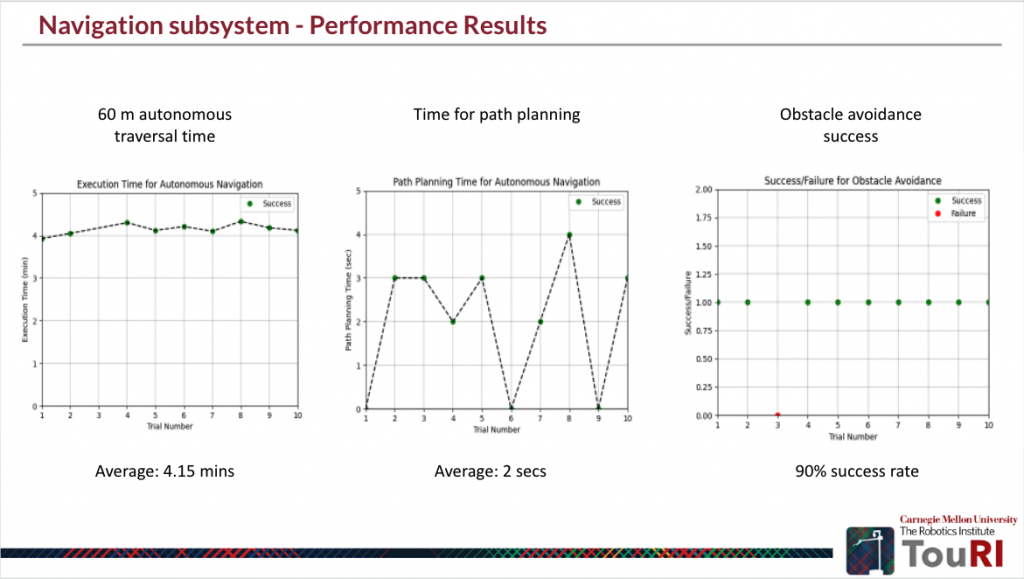

| 3 | The robot detects static and dynamic obstacles and avoids them by following the obstacle avoidance trajectory generated by the local planner | Demonstrate obstacle detection and avoidance by the robot with an mAP of 60%. Condition for operation: For obstacles lying in the FOV of sensing modalities | MP5 |

| 4 | Robot reaches the goal location | Demonstrate ability of the robot to reach the desired location with a speed of 0.4 m/s during the traversal. Condition for operation: Limited obstacles are present in the surroundings and a path exists for the robot to avoid obstacles and traverse ahead | MP1 |

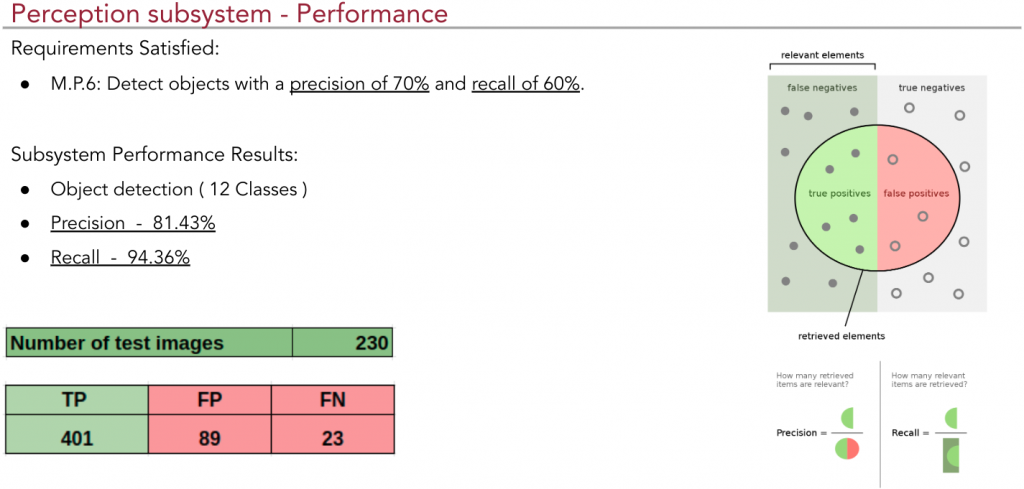

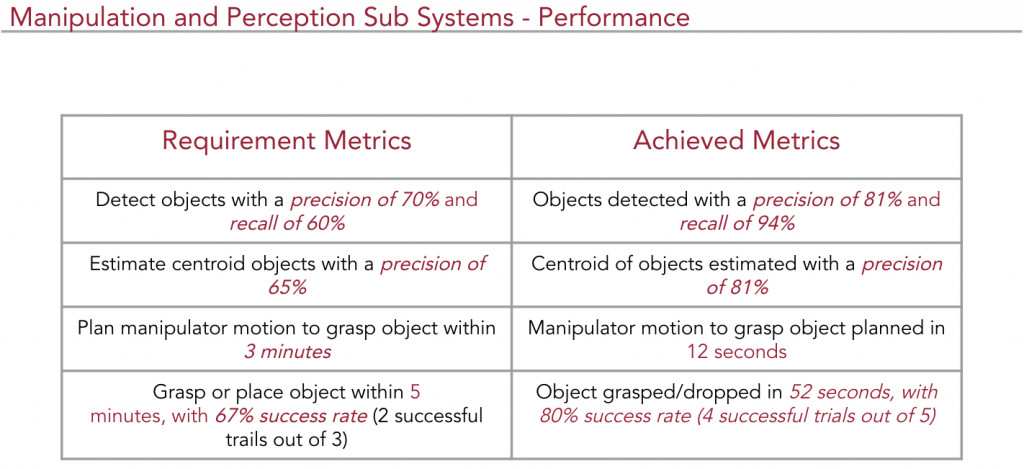

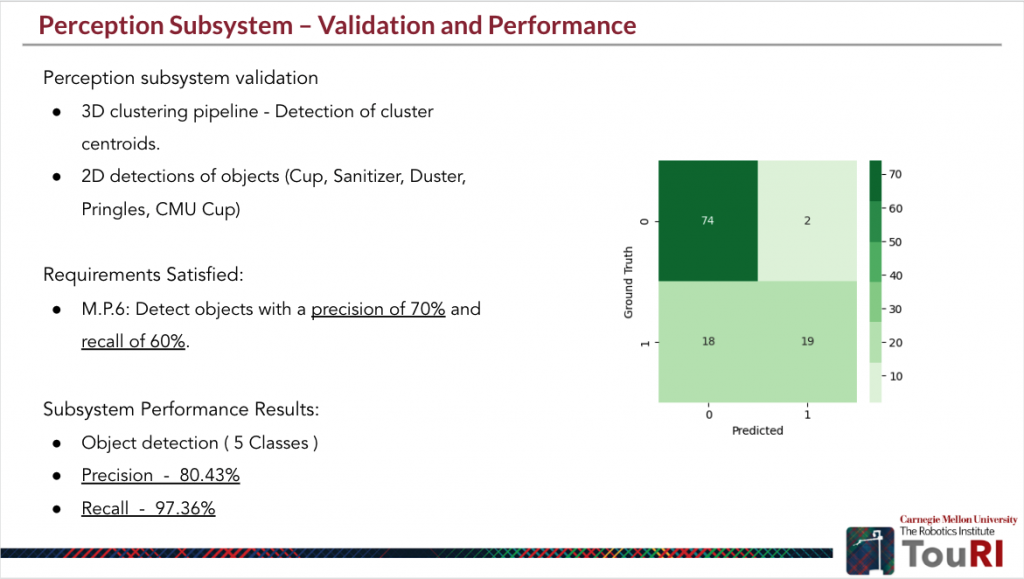

| 5 | The robot detects the object to grasp and estimates its grab point | Demonstrate object detection with a precision of 70% and recall of 60%. Condition for operation: For a predefined set of objects in the environment and appropriate lighting | MP6 |

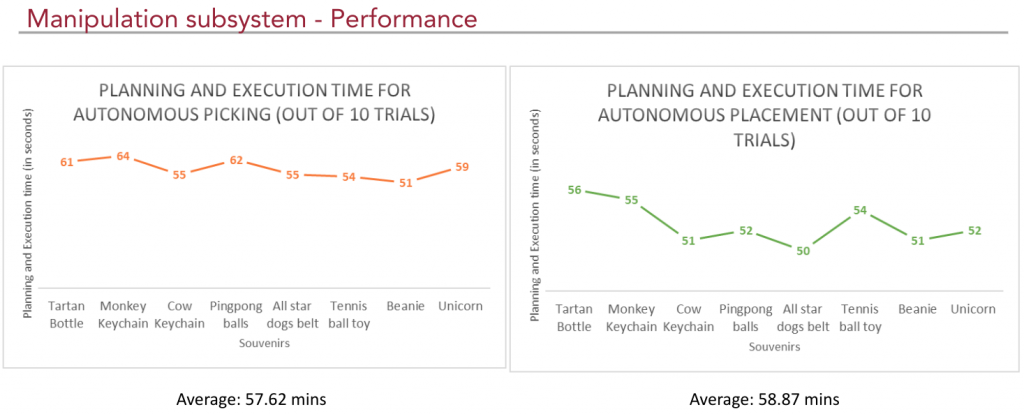

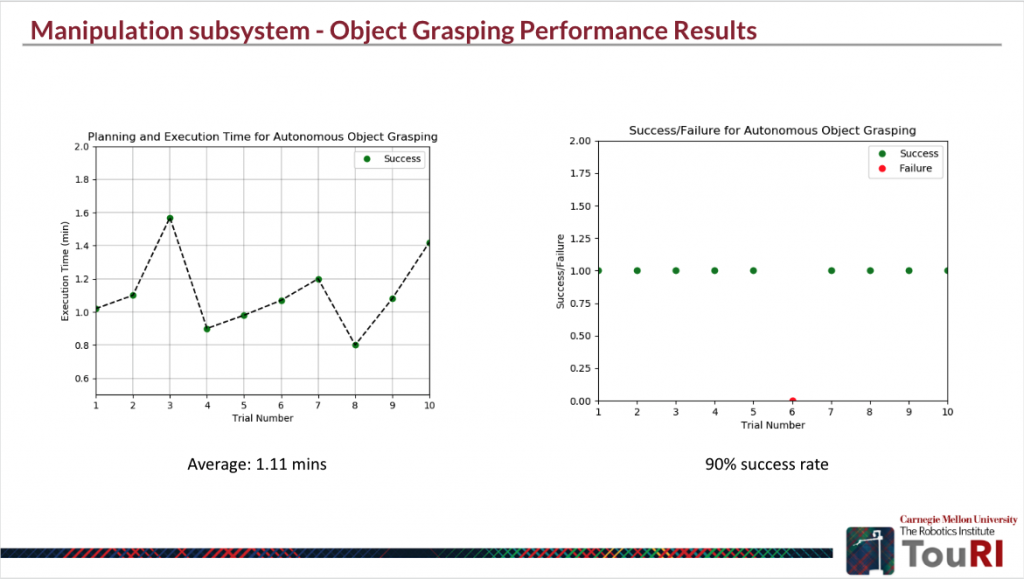

| 6 | Based on the grab point input, the manipulation module plans the trajectory to grasp the object | Demonstrate the motion planning capability of the manipulation module to generate the trajectory within 3 minutes | MP7 |

| 7 | The manipulator executes the planned trajectory | Demonstrate manipulator motion to grasp/pick up object | MP8 |

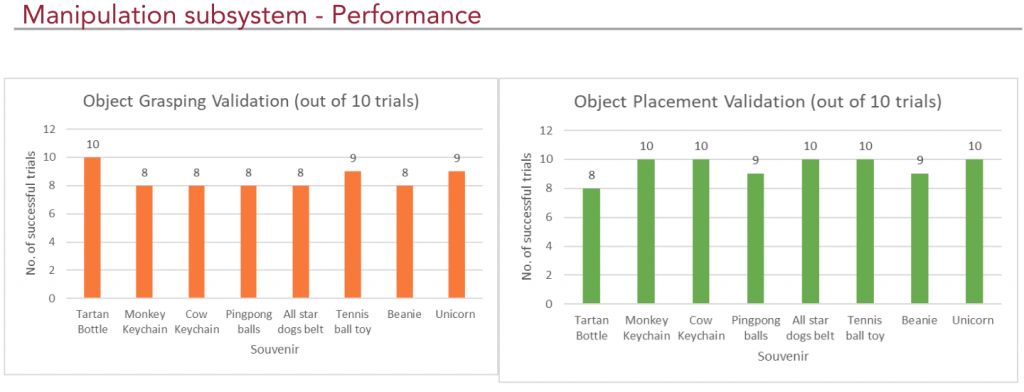

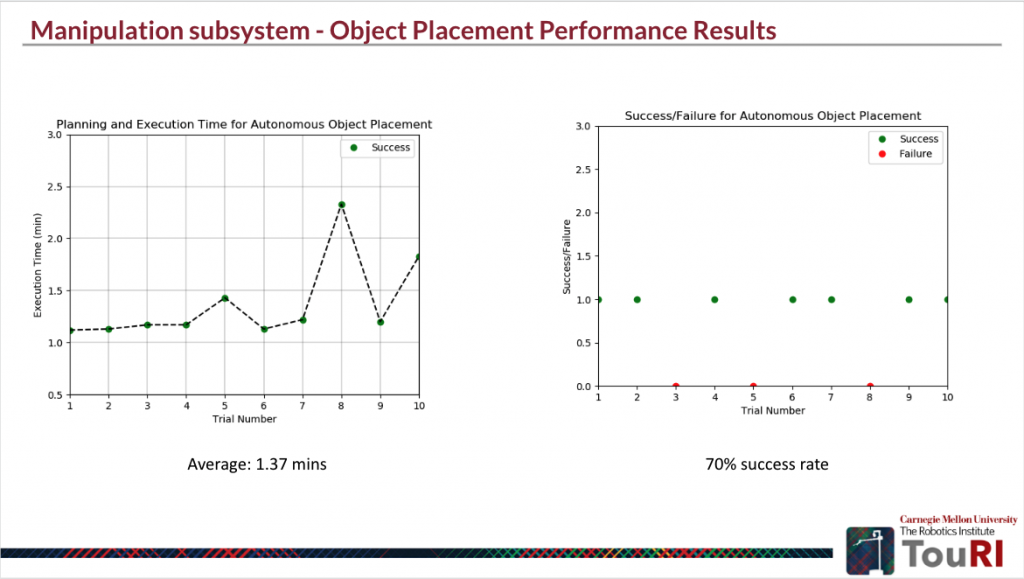

| 8 | The manipulator grasps/places the object | Demonstrate manipulator capability to grasp/place object within 5 minutes, with 67% success rate | MP9 |

| 9 | The standalone gimbal mount rotates the display device to view the surroundings | Demonstrate gimbal motion of 60 degree pitch and 120 degree yaw for display device | MP10 |

| 10 | The app provides the video call interface between user and surroundings | Demonstrate 1080×720 resolution video call with a lag of less than 2 seconds | MP11 |
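The precision and recall targets for object detection (MP6) can be checked from raw detection counts. The following is a minimal sketch, not the project's evaluation code: the `DetectionCounts` class, the function names, and the example counts are hypothetical; only the 70% precision and 60% recall thresholds come from the table above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionCounts:
    """Hypothetical tally of detections collected over a test run."""
    true_positives: int   # predefined objects that were correctly detected
    false_positives: int  # detections that did not match a real object
    false_negatives: int  # predefined objects that were missed


def precision(c: DetectionCounts) -> float:
    """Fraction of reported detections that were correct."""
    reported = c.true_positives + c.false_positives
    return c.true_positives / reported if reported else 0.0


def recall(c: DetectionCounts) -> float:
    """Fraction of ground-truth objects that were detected."""
    actual = c.true_positives + c.false_negatives
    return c.true_positives / actual if actual else 0.0


def meets_detection_target(
    c: DetectionCounts,
    min_precision: float = 0.70,  # spring target from the table
    min_recall: float = 0.60,     # spring target from the table
) -> bool:
    """Return True when both thresholds are satisfied."""
    return precision(c) >= min_precision and recall(c) >= min_recall


if __name__ == "__main__":
    # Example counts are made up for illustration.
    counts = DetectionCounts(true_positives=14, false_positives=4, false_negatives=6)
    print(f"precision={precision(counts):.2f} recall={recall(counts):.2f}")
    print("target met:", meets_detection_target(counts))
```

The thresholds are keyword arguments so the same check can be reused with different targets.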

Fall validation demonstration

| Step | Action | Demonstration | Requirements validated |

| 1 | User starts the TouRI robot app | Demonstrate user-friendly app interface | MNF2 |

| 2 | Based on the location input entered by the user in the app, goal waypoint for the location is generated and sent to the robot | Demonstrate integration between user interface and navigation modules | |

| 3 | During autonomous traversal, the robot detects static and dynamic obstacles and avoids them by following the obstacle avoidance trajectory generated by the local planner | Demonstrate obstacle detection and avoidance by the robot with an mAP of 80%. Condition for operation: For obstacles lying in the FOV of sensing modalities during autonomous traversal | MP5 |

| 4 | The robot detects the object on reaching the desired location and estimates its grab point | Demonstrate object detection and grab point estimation with a precision of 60% and recall of 70%. Demonstrate integration between navigation and perception modules. Condition for operation: For a predefined set of objects in the environment and appropriate lighting | MP6 |

| 5 | The manipulator executes the planned trajectory to grasp/place the object | Demonstrate integration between perception and manipulation modules. Demonstrate manipulator motion to grasp/place objects within 5 minutes with a 67% success rate (2 successful trials out of 3) | MP9 |

| 6 | Robot successfully enters the desired room location | Demonstrate integration between user-interface, navigation, perception and manipulation modules for task execution in 30 minutes. Condition for operation: Goal location in the operating area and 100 m away from the start location of the robot | MP2 |

| 7 | On entering the desired room, robot accepts inputs from user for teleoperated traversal | Demonstrate integration between user interface and navigation modules | MP1 |

| 8 | The robot retracts teleoperation control from the user when obstacles are detected and transitions to autonomous mode. The robot detects and avoids static and dynamic obstacles by following the obstacle avoidance trajectory generated by the local planner | Demonstrate situational awareness. Demonstrate obstacle detection and avoidance by the robot with an mAP of 80%. Condition for operation: For obstacles lying in the FOV of sensing modalities during teleoperated traversal | MP5, MNF4 |

| 9 | The gimbal mount integrated on the robot rotates the display device for the user to view the surroundings | Demonstrate integration of the gimbal platform with the robot. Demonstrate natural interaction | MNF5 |

| 10 | System level requirements | Demonstrate sub-system modularity with APIs | MNF3 |
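The 67% grasp/place success criterion (MP9) corresponds to 2 successful trials out of 3. Since 2/3 is about 66.7%, a naive decimal comparison against 0.67 would reject a run that the table counts as passing. The sketch below avoids that rounding trap by comparing exact fractions. The `Trial` record, the function names, and the reading that a trial only counts when it finishes within the 5-minute limit are assumptions for illustration, not part of the test plan.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Sequence

TIME_LIMIT_S = 5 * 60            # "within 5 minutes" from the table
REQUIRED_RATE = Fraction(2, 3)   # "2 successful trials out of 3"


@dataclass(frozen=True)
class Trial:
    """Hypothetical record of one grasp/place attempt."""
    succeeded: bool
    duration_s: float


def success_rate(trials: Sequence[Trial]) -> Fraction:
    """Exact fraction of trials that succeeded within the time limit."""
    if not trials:
        raise ValueError("at least one trial is required")
    passed = sum(1 for t in trials if t.succeeded and t.duration_s <= TIME_LIMIT_S)
    return Fraction(passed, len(trials))


def meets_grasp_target(trials: Sequence[Trial]) -> bool:
    """Return True when the success rate is at least 2 out of 3."""
    return success_rate(trials) >= REQUIRED_RATE


if __name__ == "__main__":
    # Example durations are made up for illustration.
    run = [Trial(True, 120.0), Trial(False, 90.0), Trial(True, 290.0)]
    print("success rate:", success_rate(run))
    print("target met:", meets_grasp_target(run))
```

Using `Fraction` keeps the comparison exact for any number of trials, which matters when the sample is as small as three attempts.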

Spring Validation Demonstration

Location: NSH Basement

Equipment: Hello Robot’s Stretch, gimbal mount, a display device, user device, operator computer, Wi-Fi network, objects for grasping, dynamic obstacles in the environment

Objective: Demonstrate that the user can teleoperate the robot and send it a location for the tour, and that the robot supports autonomous navigation to the location, detection of pre-defined objects, autonomous grasping/placement of pre-defined objects, and manually controlled gimbal motion.

- Teleoperated and Autonomous Navigation and Manipulation Test

  - Procedure:

    - Teleoperate the robot to navigate to the desired location using the user interface.

    - Teleoperate the manipulator to grab/release pre-defined objects from defined locations.

    - Place the robot at the start location on the map and observe robot localization.

    - Send a goal to the robot through the user interface and observe the robot plan paths.

    - Observe the robot autonomously navigate to the goal while avoiding obstacles.

    - Set pre-defined goal poses for the manipulator to pick and place a pre-defined object.

    - Observe the robot plan trajectories for the manipulator to reach the goal poses.

    - Observe the manipulator pick and place the object.

  - Validation:

    - User is able to navigate the robot to a location and grab/place an object with the end-effector. (M.P.R-1, M.P.R-3, M.P.R-9)

    - Robot is able to localize within the map and plan global and local paths to the goal. (M.P.R-2, M.P.R-4)

    - Robot can autonomously navigate to the goal with obstacle avoidance. (M.P.R-5)

    - Manipulator is able to plan trajectories and grab/release the object stably. (M.P.R-8, M.P.R-3, M.P.R-9)


- Object Detection Test

  - Procedure:

    - Place the robot such that the predefined object(s) to be detected are visible.

    - Observe the bounding boxes of the detected objects.

    - Repeat steps with variations in object pose, ambient lighting and distance from the robot.

  - Validation:

    - Pre-defined objects are detected in varying conditions. (M.P.R-6, M.P.R-7)
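One common way to turn the observed bounding boxes into countable correct and incorrect detections is to compare each predicted box with a labeled ground-truth box using intersection over union (IoU). The sketch below is illustrative only: the `(x_min, y_min, x_max, y_max)` box layout, the function names, and the 0.5 IoU threshold are assumptions, since the test plan does not specify how detections are scored.

```python
from typing import Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def box_area(b: Box) -> float:
    """Area of a box; degenerate boxes have zero area."""
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    union = box_area(a) + box_area(b) - intersection
    return intersection / union if union > 0 else 0.0


def is_correct_detection(predicted: Box, ground_truth: Box, threshold: float = 0.5) -> bool:
    """Count a detection as correct when IoU reaches the assumed threshold."""
    return iou(predicted, ground_truth) >= threshold


if __name__ == "__main__":
    # Example boxes are made up for illustration.
    predicted = (0.0, 0.0, 10.0, 10.0)
    labeled = (1.0, 1.0, 10.0, 10.0)
    print("IoU:", iou(predicted, labeled))
    print("correct:", is_correct_detection(predicted, labeled))
```

Repeating this check across the pose, lighting, and distance variations yields the true-positive and false-positive counts needed for precision and recall.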


- Hardware Gimbal Test

  - Procedure:

    - Check the stability of the robot at maximum velocity with the gimbal and display mounted.

    - Set up the gimbal and display workbench and manually send commands for motion.

    - Observe the stability of the display during the gimbal motion.

    - Observe that gimbal actuation cuts off when the power supplied to it is insufficient.

  - Validation:

    - Robot remains stable after the gimbal and the display have been mounted.

    - The gimbal actuates as per the commands and the display remains stable during motion. (M.P.R-10)

    - Gimbal is not actuated when it receives low power.


Fall Validation Demonstration

Location: NSH Basement

Equipment: Hello Robot’s Stretch, gimbal mount, a display device, user device, operator computer, Wi-Fi network, objects for grasping, dynamic obstacles in the environment

Objective: Demonstrate that the user can teleoperate the robot and command the robot to autonomously navigate to the desired locations, detect and autonomously pick and place pre-defined objects, and manually control gimbal motion.

- Navigation (autonomous and tele-operational) and gimbal control via the interface

  - Procedure:

    - Place the robot at the start location on the map and observe robot localization.

    - Send a goal to the robot through the user interface and observe the robot plan a path.

    - Observe the robot autonomously navigating to the goal while avoiding obstacles.

    - Teleoperate the robot’s gimbal to the desired pitch and yaw using the user interface while the robot is navigating.

    - Teleoperate the robot to navigate within the room using the user interface.

  - Validation:

    - User can send commands to the robot and gimbal remotely. (M.P.R-3)

    - Robot is able to localize within the map and plan global and local paths to the goal. (M.P.R-2, M.P.R-4)

    - Robot is able to autonomously navigate to the goal with obstacle avoidance. (M.P.R-5)

    - User is able to tele-operate the robot within the room and concurrently control the gimbal’s orientation. (M.P.R-1, M.P.R-10)


- Autonomous and tele-operational pick and place of objects

  - Procedure:

    - Teleoperate the robot to the location such that objects to be picked are in the field of view.

    - Observe the robot detect the pickable objects in its field of view.

    - Select an object to pick up from the table using the interface.

    - Observe the robot map the environment with the objects in the field of view and localize itself in the map.

    - Observe the robot detect the grab points of the selected object.

    - Observe the robot plan a navigation and manipulation path to the desired object.

    - Observe the robot autonomously pick up the object from the table.

    - Tele-operate the robot to the desired drop location and select the drop location using the interface.

    - Observe the robot detect the surface to place the object and autonomously place the object on the surface.

  - Validation:

    - User can send commands to the robot remotely. (M.P.R-3)

    - Robot is able to send a list of detected objects to the user. (M.P.R-6)

    - Robot is able to estimate the grab points of the selected objects. (M.P.R-7)

    - Robot is able to localize in the 3D map and plan a path to the selected object. (M.P.R-8)

    - Robot is able to successfully pick the object. (M.P.R-9)

    - Robot is able to detect the flat surface and place the object on it. (M.P.R-9)