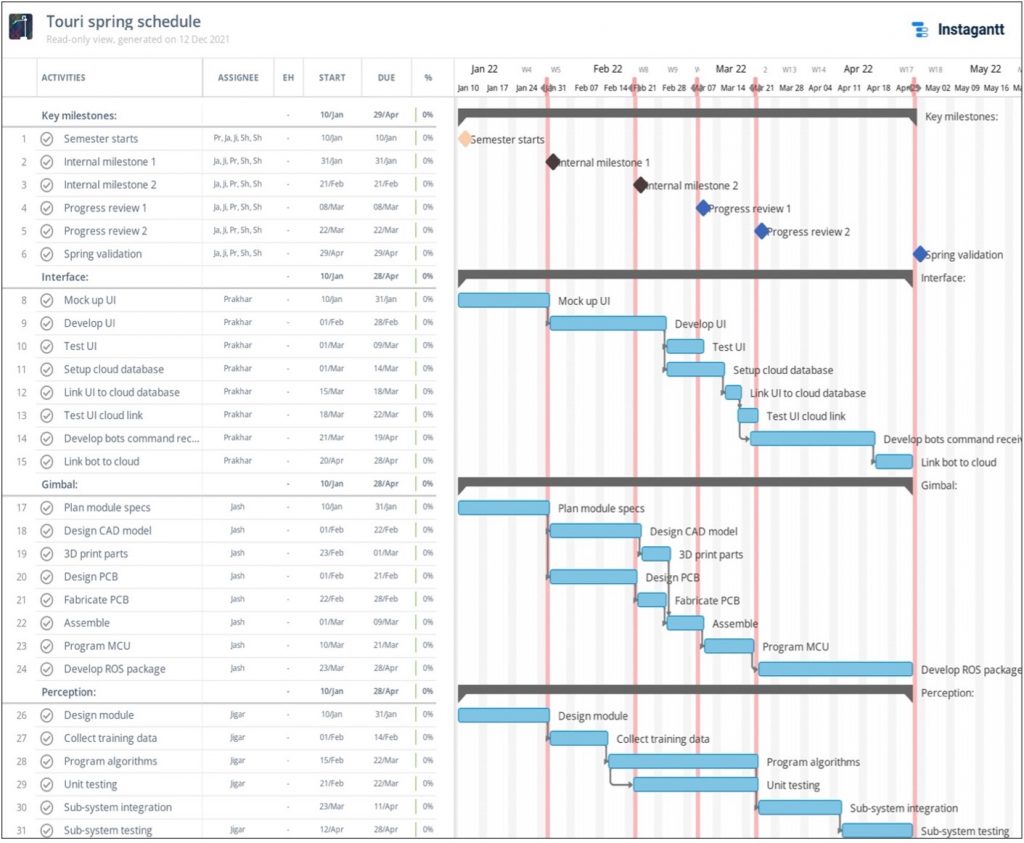

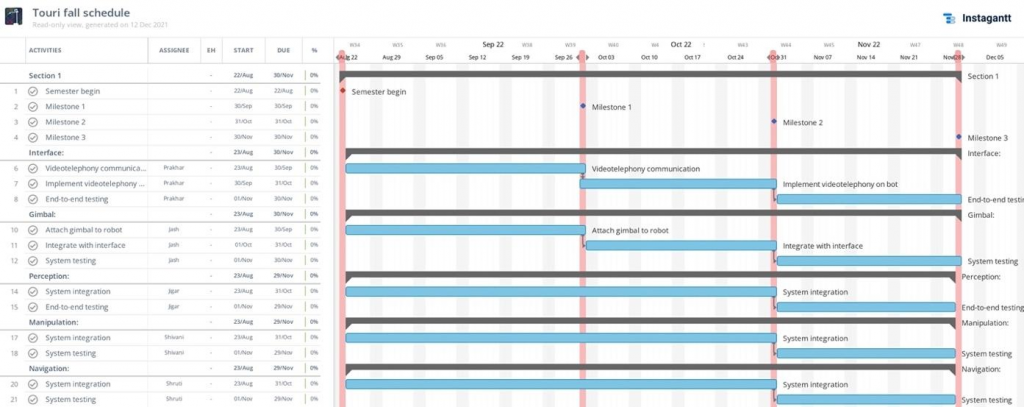

Schedules

Spring semester schedule

Fall semester schedule

Fall Validation Demonstration

Location: AI Makerspace

Equipment: Hello Robot’s Stretch, gimbal mount, a display device, user device, Wi-Fi network, objects for grasping, dynamic obstacles in the environment

Objective: Demonstrate that the user can teleoperate the robot and have it autonomously navigate to the desired location, and demonstrate detection of pre-defined objects, autonomous grasping and placement of pre-defined objects, and manually controlled gimbal motion.

Autonomous Navigation & Teleoperation for Touring

- Procedure:

  - Place the robot at the start location and observe the robot localize.

  - Pick a goal for the robot on the interface and observe the robot plan the path.

  - Observe the robot autonomously navigate to the goal while avoiding static and dynamic obstacles.

  - Allow the user to control the pan-tilt display during the robot's autonomous traversal to view the surroundings.

  - On reaching the location, teleoperate the robot to tour the AI Makerspace and observe the robot alert the user about obstacles during teleoperation.

  - Control the pan-tilt display through the user interface to view the surroundings, and observe audio-visual communication between the remote user and the people in the environment.

- Validation:

  - The user can send the robot a location to tour through the user interface.

  - The robot is able to localize within the map and plan global and local paths.

  - The robot is able to autonomously navigate to the goal with obstacle avoidance.

  - The user is able to teleoperate the robot and control the pan-tilt display mounted on the robot for interaction.

  - The robot is able to detect obstacles around it and alert the user.
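The page only states that obstacle alerts during teleoperation use lidar-based obstacle detection. As a hypothetical illustration of such an alert, the sketch below takes the fields of a ROS `sensor_msgs/LaserScan` message (`ranges`, `angle_min`, `angle_increment`) as plain arguments and flags any valid return closer than an alert distance inside a sector around the commanded driving direction. The function name, the 0.5 m alert distance, and the ±30° sector are assumptions, not values from the TouRI system.

```python
import math
from typing import Sequence


def obstacle_alert(
    ranges: Sequence[float],
    angle_min: float,
    angle_increment: float,
    heading: float,
    half_width: float = math.radians(30),  # assumed half-width of the watched sector
    alert_distance: float = 0.5,           # assumed alert threshold in metres
) -> bool:
    """Return True if any valid lidar return within `half_width` of `heading`
    (radians, lidar frame) is closer than `alert_distance` metres."""
    for i, r in enumerate(ranges):
        # Skip invalid readings (NaN, inf, or non-positive ranges).
        if not math.isfinite(r) or r <= 0.0:
            continue
        angle = angle_min + i * angle_increment
        # Wrap the angular difference into [-pi, pi] before comparing.
        diff = math.atan2(math.sin(angle - heading), math.cos(angle - heading))
        if abs(diff) <= half_width and r < alert_distance:
            return True
    return False


if __name__ == "__main__":
    # Synthetic 360-beam scan: everything 5 m away except one return 0.3 m straight ahead.
    scan = [5.0] * 360
    scan[180] = 0.3
    print(obstacle_alert(scan, -math.pi, 2 * math.pi / 360, heading=0.0))      # driving forward
    print(obstacle_alert(scan, -math.pi, 2 * math.pi / 360, heading=math.pi))  # driving backward
```

Restricting the check to the direction of motion keeps the alert from firing on walls beside or behind the robot while the user drives it.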

Autonomous Pick and Place of Souvenirs

- Procedure:

  - After touring, select the souvenir shop to have the robot autonomously navigate to it.

  - Select the picking option on the interface to begin choosing the souvenir to be picked up.

  - Observe the robot detect the souvenirs and return the list to the interface.

  - Select the souvenir to be picked up through the interface.

  - Observe the robot autonomously pick up the selected souvenir and drop it in a box at the counter for shipping.

- Validation:

  - The robot is able to autonomously navigate to the souvenir shop.

  - The user is able to teleoperate the robot inside the souvenir shop.

  - The robot is able to detect the souvenirs in its field of view.

  - The user is able to select the souvenir to be picked up from the interface.

  - The robot is able to autonomously pick up the selected souvenir and place it in a shipping container on the counter.
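Picking a detected souvenir requires turning a 2D detection into a 3D target for the arm. One standard way is to back-project the bounding-box centre through a pinhole camera model using the depth measured at that pixel. The sketch below is a hypothetical illustration of that step only: the helper names and the intrinsic values are placeholders, not the parameters of the Stretch's head camera. The resulting point is in the camera optical frame, so it would still need a transform into the robot base frame before grasp planning.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Intrinsics:
    """Pinhole camera intrinsics in pixels (placeholder values in the example)."""
    fx: float
    fy: float
    cx: float
    cy: float


def bbox_center(bbox: Tuple[int, int, int, int]) -> Tuple[float, float]:
    """Centre (u, v) of an axis-aligned box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = bbox
    return (x_min + x_max) / 2.0, (y_min + y_max) / 2.0


def deproject(u: float, v: float, depth_m: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with depth (metres) into the camera optical frame:
    x = (u - cx) * z / fx, y = (v - cy) * z / fy, z = depth."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return x, y, depth_m


if __name__ == "__main__":
    k = Intrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)  # hypothetical intrinsics
    u, v = bbox_center((300, 200, 380, 280))                 # hypothetical detection box
    print(deproject(u, v, 0.8, k))                            # 0.8 m depth at the centre
```

Using a single depth pixel is sensitive to holes and edges in the depth image, which is one reason a pipeline might add visual feedback while approaching the object.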

Test plan

Fall semester

| Date | PR | Milestone(s) | Test(s) | Requirements |
|---|---|---|---|---|
| 09/29/22 | 8 | Bring up the autonomous navigation stack and ensure it works as it did in SVD; implement object detection for the shipping box; implement the autonomous manipulation pipeline for placing an object in the box; develop the user interface with audio/video communication | Test 2, Test 7 | MF8, MF9 |
| 10/13/22 | 9 | Re-map the hallway (due to changes in the environment) and integrate the new map with the autonomous navigation stack; fine-tune the user interface and implement asynchronous communication; refine the grasping pipeline for multiple objects; implement object detection for souvenir objects | Test 3, Test 1, Test 8 | MF1, MF2, MF8, MF9 |
| 11/03/22 | 10 | Design the servo actuator bracket; test E-kill with a new app; update firmware for CMU DEVICE and switch to fallback Wi-Fi access points if the primary network is lost; tune the autonomous navigation stack with the new map; integrate all subsystems | Test 10, Test 5 | MF1, MF2 |
| 11/17/22 | 11 | Assemble the pan/tilt display on TouRI; test teleoperation; integrate lidar-based obstacle detection during teleoperation; test data flow throughout the system | Test 9, Test 6, Test 4 | MF1, MF2, MF10, MF11 |
| 11/27/22 | FVD | Demonstrate full system functionality | All | All |

Spring semester

| # | Action | Demonstration | Requirements validated |
|---|---|---|---|
| 1 | User starts the TouRI robot app and selects a location to tour | Demonstrate user interaction with the app and the capability to receive location input from the user within 5 seconds and generate the goal waypoint. Condition for operation: > 100 Mbps broadband connectivity | MP3, MNF1 |
| 2 | Based on the waypoint input, the navigation module generates a global path to the goal location | Demonstrate the capability of the navigation module to generate the global path within 3 minutes | MP4 |
| 3 | The robot detects static and dynamic obstacles and avoids them by following the obstacle avoidance trajectory generated by the local planner | Demonstrate obstacle detection and avoidance by the robot with an mAP of 60%. Condition for operation: obstacles lie in the FOV of the sensing modalities | MP5 |
| 4 | Robot reaches the goal location | Demonstrate the ability of the robot to reach the desired location at a speed of 0.4 m/s during traversal. Condition for operation: few obstacles are present in the surroundings, and a path exists for the robot to avoid obstacles and continue | MP1 |
| 5 | The robot detects the doorknob and estimates its grab point | Demonstrate doorknob detection and grab point estimation with an mAP of 40%. Condition for operation: a predefined set of doorknobs in the environment and appropriate lighting | MP6 |
| 6 | Based on the grab point input, the manipulation module plans the trajectory to open the door | Demonstrate the motion planning capability of the manipulation module to generate the trajectory within 3 minutes | MP7 |
| 7 | The manipulator is simulated in ROS and executes the planned trajectory | Demonstrate manipulator motion to open doors (simulation in Gazebo) | |
| 8 | The standalone gimbal mount rotates the display device to view the surroundings | Demonstrate gimbal motion of 60° pitch and 120° yaw for the display device | MP9 |
| 9 | The app provides the video call interface between the user and the surroundings | Demonstrate a 1080×720 resolution video call with a lag of less than 2 seconds | MP10 |

Fall semester

| # | Action | Demonstration | Requirements validated |
|---|---|---|---|
| 1 | User starts the TouRI robot app | Demonstrate a user-friendly app interface | MNF2 |
| 2 | Based on the location input entered by the user in the app, the goal waypoint for the location is generated and sent to the robot | Demonstrate integration between the user interface and navigation modules | |
| 3 | During autonomous traversal, the robot detects static and dynamic obstacles and avoids them by following the obstacle avoidance trajectory generated by the local planner | Demonstrate obstacle detection and avoidance by the robot with an mAP of 80%. Condition for operation: obstacles lie in the FOV of the sensing modalities during autonomous traversal | MP5 |
| 4 | The robot detects the doorknob on reaching the desired location and estimates its grab point | Demonstrate doorknob detection and grab point estimation with an mAP of 60%. Demonstrate integration between the navigation and perception modules. Condition for operation: a predefined set of doorknobs in the environment and appropriate lighting | MP6 |
| 5 | The manipulator executes the planned trajectory to open the door | Demonstrate integration between the perception and manipulation modules. Demonstrate manipulator motion to open doors within 5 minutes with 75% accuracy | MP8 |
| 6 | Robot successfully enters the desired room | Demonstrate integration between the user interface, navigation, perception, and manipulation modules for task execution within 30 minutes. Condition for operation: goal location within the operating area and 100 m away from the robot's start location | MP2 |
| 7 | On entering the desired room, the robot accepts user inputs for teleoperated traversal | Demonstrate integration between the user interface and navigation modules | |
| 8 | The robot retracts teleoperation control from the user when obstacles are detected and transitions to autonomous mode. The robot detects and avoids static and dynamic obstacles by following the obstacle avoidance trajectory generated by the local planner | Demonstrate situational awareness. Demonstrate obstacle detection and avoidance by the robot with an mAP of 80%. Condition for operation: obstacles lie in the FOV of the sensing modalities during teleoperated traversal | MP5, MNF4 |
| 9 | The gimbal mount integrated on the robot rotates the display device for the user to view the surroundings | Demonstrate integration of the gimbal platform with the robot. Demonstrate natural interaction | MNF5 |
| 10 | System-level requirements | Demonstrate sub-system modularity with APIs | MNF3 |
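The timing thresholds in the two tables above can be checked against each other with simple arithmetic. The sketch below adds the per-stage budgets (global planning within 3 minutes, traversal of 100 m at 0.4 m/s, door opening within 5 minutes) and compares the sum with the 30-minute task budget. It assumes a constant speed along a 100 m path and ignores acceleration, detours around obstacles, perception time, and teleoperation, so it is only a rough feasibility check, not a measured result.

```python
# Requirement thresholds copied from the test-plan tables (seconds and metres).
DISTANCE_M = 100.0           # MP2: goal location 100 m from the start location
SPEED_M_S = 0.4              # MP1: traversal speed
PLANNING_BUDGET_S = 3 * 60   # MP4: global path generated within 3 minutes
DOOR_BUDGET_S = 5 * 60       # MP8: door opened within 5 minutes
TASK_BUDGET_S = 30 * 60      # MP2: full task executed within 30 minutes


def traversal_time_s(distance_m: float, speed_m_s: float) -> float:
    """Time to cover distance_m at a constant speed_m_s (no acceleration or detours)."""
    return distance_m / speed_m_s


def worst_case_task_time_s() -> float:
    """Sum of the per-stage budgets: planning + traversal + door opening."""
    return PLANNING_BUDGET_S + traversal_time_s(DISTANCE_M, SPEED_M_S) + DOOR_BUDGET_S


if __name__ == "__main__":
    total = worst_case_task_time_s()
    print(f"traversal: {traversal_time_s(DISTANCE_M, SPEED_M_S):.0f} s")
    print(f"worst case: {total:.0f} s of {TASK_BUDGET_S} s "
          f"({TASK_BUDGET_S - total:.0f} s slack)")
```

Under these assumptions traversal takes 250 s and the three stages together take 730 s, leaving roughly 18 minutes of the 30-minute budget for detours, perception, and the teleoperated portion of the task.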

Parts list

We have created a Google Sheet to keep track of our parts list. Click here to view the sheet.

Issues log

| Issue Number | Date Found | Date Fixed | Change Made By | Origin | Description | Resolution | Artifact(s) Changed |
|---|---|---|---|---|---|---|---|
| 001 | 3rd Jan, 2022 | 14th Jan, 2022 | Prakhar Pradeep (ppradee2) | Manipulation test | Manipulator is not capable of performing tasks without moving the base | Changed the manipulation subsystem to integrate navigation over small distances | Software architecture (ROS package) |
| 002 | 28th Jan, 2022 | 15th Feb, 2022 | Prakhar Pradeep (ppradee2) | Teleop performance test | Interface did not reset the joystick X and Y to (0, 0) on releasing the joystick (bug in a third-party package) | Implemented a custom gesture detector to listen for joystick release | User interface source code |
| 003 | 20th Feb, 2022 | 27th Feb, 2022 | Shruti Gangopadhyay (sgangopa) | Robot localisation test | Issues with the setup of the robot's base packages | Corrected the setup issues (transforms, package integration, etc.) | Base setup packages |
| 004 | 5th Mar, 2022 | 23rd Mar, 2022 | Shruti Gangopadhyay (sgangopa) | Robot motion test | Robot translates well on carpet but accumulates an offset when rotating on carpet | Integrated an IMU sensor for odometry | Navigation stack |
| 005 | 27th Mar, 2022 | | Shruti Gangopadhyay (sgangopa) | Full autonomous navigation stack test | IMU drift causes the robot to lose localisation when stationary, which also affects path planning to the goal location | Not yet resolved | |
| 006 | 8th Feb, 2022 | 14th Feb, 2022 | Shivani Sivakumar (ssivaku3) | MoveIt package integration test | Could not integrate the MoveIt package for the manipulator because it was supported only on ROS 2, not ROS 1 | Used the robot's built-in helper packages for manipulation planning instead | |
| 007 | 15th Mar, 2022 | | Shivani Sivakumar (ssivaku3) | Manipulation test | Launch file keeps crashing when testing manipulation | Not yet resolved | Manipulation stack |
| 008 | 23rd Mar, 2022 | 6th Apr, 2022 | Shivani Sivakumar (ssivaku3) | Manipulation test | Wrist sometimes fails to extend when testing the full manipulation stack (base navigation plus manipulator planning) | Wrote our own actuation scripts to reduce dependence on the helper packages | Manipulation stack |
| 009 | 31st Apr, 2022 | 7th May, 2022 | Jashkumar Diyora (jdiyora) | Robot hardware | The robot gripper and wrist did not respond to pings or software commands | Changed the baud rate | Baud rate (57600 -> 115200) |
| 010 | 16th May, 2022 | 20th May, 2022 | Jashkumar Diyora (jdiyora) | Robot hardware | Battery over-drain caused the Intel NUC to go into deep sleep | Hello Robot added hardware battery protection ahead of the power distribution inside the system | Battery over-drain protection added |
| 011 | 3rd Oct, 2022 | 17th Oct, 2022 | Jashkumar Diyora (jdiyora) | Robot firmware | Package dependency problems caused multiple software issues, so the team decided to wipe and reinstall the OS on the robot. Because the company had updated the OS, many software components and naming conventions had also changed, breaking the previously developed Dynamixel software. | All dependencies tied to the RE1 core were reprogrammed for REx (the same software works for RE1 and RE2) | RE1 -> REx |
| 012 | 19th April | 24th April | Shivani Sivakumar (ssivaku3) | Manipulation test | FUNMAP kept crashing the robot | Wrote our own planner for the manipulator and base | Manipulation stack |
| 013 | 23rd April | 30th April | Shivani Sivakumar (ssivaku3), Jigar Patel (jkpatel) | Perception test | Error when trying to reach the centroid of the object | Incorporated visual feedback | Perception stack |
| 014 | Oct 28, 2022 | Nov 5, 2022 | Shivani Sivakumar (ssivaku3), Jigar Patel (jkpatel) | Perception test | The 3D pipeline is very slow, so the entire picking/dropping pipeline takes a long time | Rewrote the 3D pipeline as a service in C++ instead of Python | Perception/manipulation stack |
| 015 | Oct 28, 2022 | Nov 6, 2022 | Shivani Sivakumar (ssivaku3), Jigar Patel (jkpatel) | Perception test | 2D pipeline inference is slow | Set up a server/client architecture to run detections on a local machine's GPU | Perception/manipulation stack |
| 016 | Oct 8, 2022 | Oct 13, 2022 | Shruti Gangopadhyay (sgangopa) | Autonomous navigation test | Robot loses localisation while traversing autonomously | Caused by featureless hallways. Tuned the mapping and localisation parameters, increasing the trust in odometry | Navigation stack |
| 017 | Oct 25, 2022 | Oct 27, 2022 | Shruti Gangopadhyay (sgangopa) | Autonomous navigation test | Robot has difficulty localising at the goal | Added distinctive features near the goals to help localisation there; also slightly increased the goal tolerance | Navigation stack |
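Issue 016 resolves localisation loss in featureless hallways by "increasing the trust in odometry". The log does not list which parameters were changed. The intuition can be shown with the simplest form of sensor fusion, inverse-variance weighting of two independent estimates (the scalar Kalman update). This is a generic illustration, not the localisation algorithm used on TouRI: the function name and the example positions and variances are hypothetical.

```python
from typing import Tuple


def fuse(odom_est: float, odom_var: float, scan_est: float, scan_var: float) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of two independent estimates of the same quantity.
    Lower variance means more trust, so that estimate gets a larger weight."""
    w_odom = 1.0 / odom_var
    w_scan = 1.0 / scan_var
    fused = (w_odom * odom_est + w_scan * scan_est) / (w_odom + w_scan)
    fused_var = 1.0 / (w_odom + w_scan)
    return fused, fused_var


if __name__ == "__main__":
    # Hypothetical 1D position along a hallway (metres): odometry says 2.0,
    # a scan match in a featureless hallway says 2.6.
    print(fuse(2.0, 0.1, 2.6, 0.1))   # equal trust: estimate lands halfway
    print(fuse(2.0, 0.02, 2.6, 0.1))  # more trust in odometry: estimate pulled toward 2.0
```

With equal variances the fused position is 2.3 m; cutting the odometry variance to 0.02 moves it to 2.1 m, which is the effect described in issue 016 when scan matching is unreliable because the hallway offers few features.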

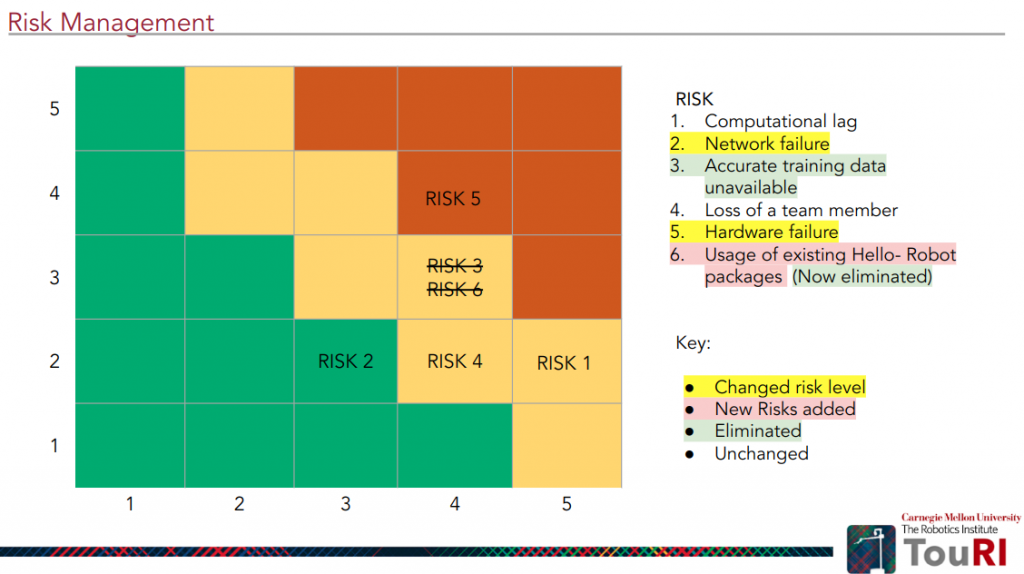

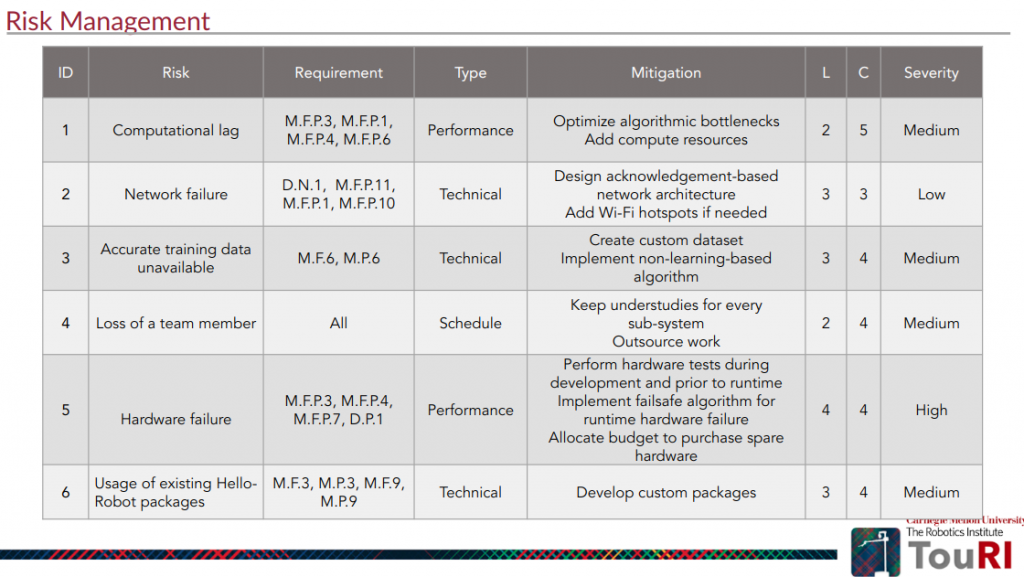

RISK MANAGEMENT