VTOL Subsystem

Flight Controller

Our flight controller is a Pixsurvey Cube V3 running ArduPilot v4.2.0. One of the main issues we faced was that we could not use the MAVLink SET_POSITION_TARGET_LOCAL_NED message to control the position of our VTOL from the companion computer. To remedy this, we wrote additional code on top of the existing ArduPilot codebase that allows our VTOL to accept position commands. Our custom version of ArduPilot can be found here.
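As a rough illustration of what a position-only command looks like, the sketch below builds the fields of a SET_POSITION_TARGET_LOCAL_NED message in plain Python. The bit values and the frame ID come from the MAVLink POSITION_TARGET_TYPEMASK and MAV_FRAME enums. The `PositionTarget` class and the `position_target` helper are hypothetical names used only for this example and are not part of our ArduPilot changes.

```python
from dataclasses import dataclass

# Bit values from the MAVLink POSITION_TARGET_TYPEMASK enum.
IGNORE_VX, IGNORE_VY, IGNORE_VZ = 8, 16, 32
IGNORE_AX, IGNORE_AY, IGNORE_AZ = 64, 128, 256
IGNORE_YAW, IGNORE_YAW_RATE = 1024, 2048

MAV_FRAME_LOCAL_NED = 1  # value from the MAVLink MAV_FRAME enum

# Position-only setpoint: ignore velocity, acceleration, yaw and yaw rate,
# leaving the x, y and z position bits cleared (i.e. "use these fields").
POSITION_ONLY_MASK = (
    IGNORE_VX | IGNORE_VY | IGNORE_VZ
    | IGNORE_AX | IGNORE_AY | IGNORE_AZ
    | IGNORE_YAW | IGNORE_YAW_RATE
)


@dataclass
class PositionTarget:
    """Hypothetical container for the fields of a position-only setpoint."""
    x: float  # metres north of the local origin
    y: float  # metres east of the local origin
    z: float  # metres down from the local origin (NED, so up is negative)
    coordinate_frame: int = MAV_FRAME_LOCAL_NED
    type_mask: int = POSITION_ONLY_MASK


def position_target(north_m: float, east_m: float, altitude_m: float) -> PositionTarget:
    """Build a setpoint from north/east offsets and a height above the origin."""
    # NED has z pointing down, so a positive altitude becomes a negative z.
    return PositionTarget(x=north_m, y=east_m, z=-altitude_m)


if __name__ == "__main__":
    target = position_target(north_m=10.0, east_m=5.0, altitude_m=20.0)
    print(target)
```

On the companion computer these fields would be handed to whatever MAVLink layer is in use (MAVROS in our case) rather than sent directly.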

Companion Computer

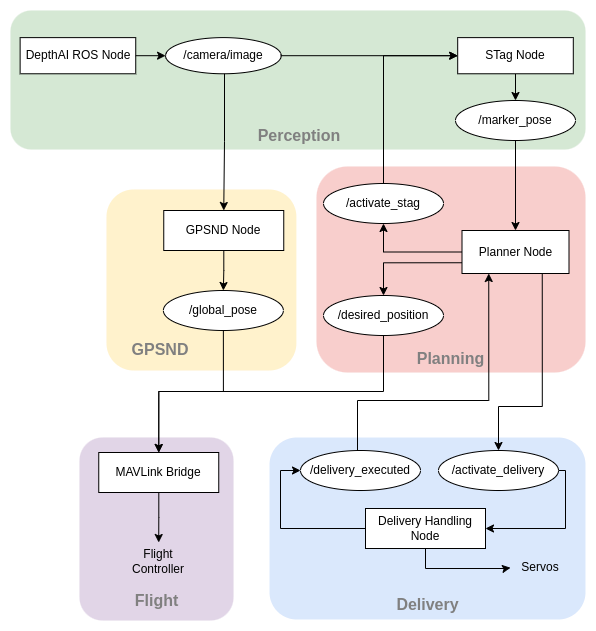

Our companion computer is a Raspberry Pi 4 Model B running Ubuntu MATE 20.04. We will be using ROS Noetic for communication between the various subsystems. Since the flight controller adheres to the MAVLink protocol, we will be using MAVROS to facilitate communication between the companion computer and the flight controller. An overview of the ROS nodes and the topics they will publish is given below.

Perception Subsystem

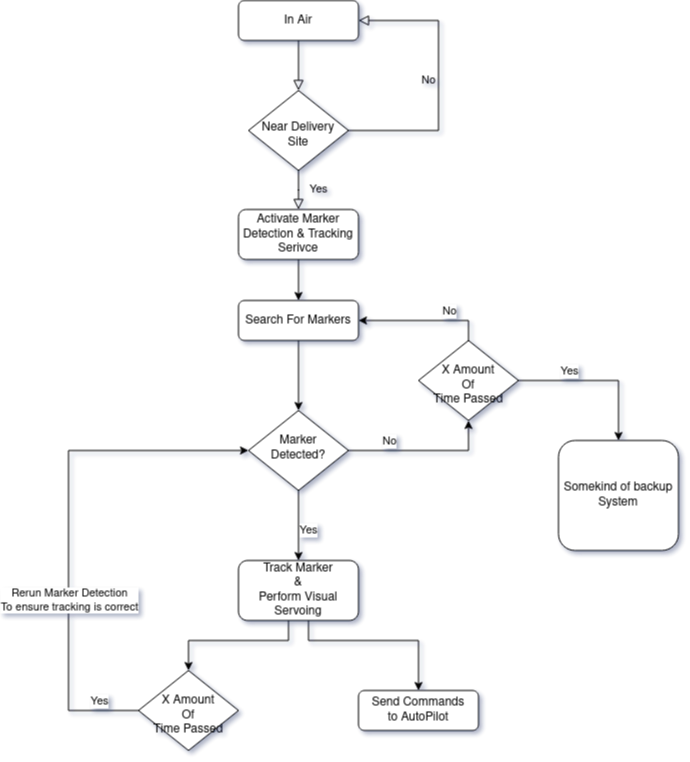

The perception sensor is the OAK-D from Luxonis. The sensor has two mono global-shutter cameras and one high-resolution rolling-shutter RGB camera. This allows us to capture images at different resolutions, exposures, ISO sensitivities, and frame rates, which can then be used to tune the marker detection subsystem. For marker detection we are using the STAG_ROS library. The diagram below explains how the marker detection system is activated and how it interacts with the rest of the system.

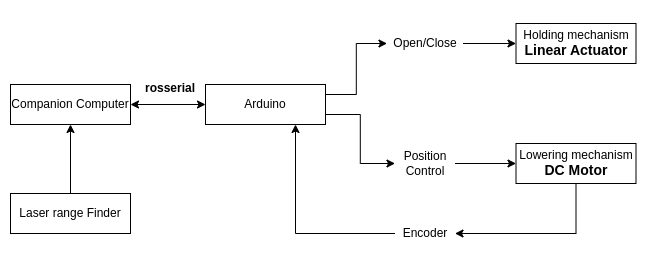

Delivery Subsystem

The software for the delivery subsystem has been developed and tested with ROS and Arduino. A single command runs the entire subsystem autonomously. First, the holding mechanism is actuated and the package is lowered. Once the package makes contact with the ground, the passive mechanism releases it. The lowering mechanism then retracts and is latched back onto the VTOL. Finally, a signal is sent back to the companion computer to indicate that the delivery is complete. Below is a diagram showing the system setup for information flow.
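The sequence described above can be read as a small state machine. The sketch below is a minimal illustration in plain Python, not the ROS/Arduino code that runs on the vehicle. The state and event names are hypothetical and chosen only to mirror the steps in the paragraph.

```python
from enum import Enum, auto


class DeliveryState(Enum):
    IDLE = auto()        # waiting for the delivery command
    UNLATCHING = auto()  # holding mechanism is being actuated
    LOWERING = auto()    # package is descending on the tether
    RETRACTING = auto()  # package released, mechanism winding back up
    COMPLETE = auto()    # latched on the VTOL, completion signal sent


# (current state, event) -> next state. Event names are illustrative only.
TRANSITIONS = {
    (DeliveryState.IDLE, "deliver"): DeliveryState.UNLATCHING,
    (DeliveryState.UNLATCHING, "hold_actuated"): DeliveryState.LOWERING,
    # Ground contact lets the passive mechanism release the package.
    (DeliveryState.LOWERING, "ground_contact"): DeliveryState.RETRACTING,
    (DeliveryState.RETRACTING, "latched"): DeliveryState.COMPLETE,
}


def step(state: DeliveryState, event: str) -> DeliveryState:
    """Advance the state machine; events that do not apply are ignored."""
    return TRANSITIONS.get((state, event), state)


def run(events) -> DeliveryState:
    """Feed a sequence of events through the machine, starting from IDLE."""
    state = DeliveryState.IDLE
    for event in events:
        state = step(state, event)
    return state


if __name__ == "__main__":
    final = run(["deliver", "hold_actuated", "ground_contact", "latched"])
    print(final.name)
```

Ignoring events that do not match the current state keeps a spurious signal, such as a ground-contact event before lowering has started, from skipping a step.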

GPSND

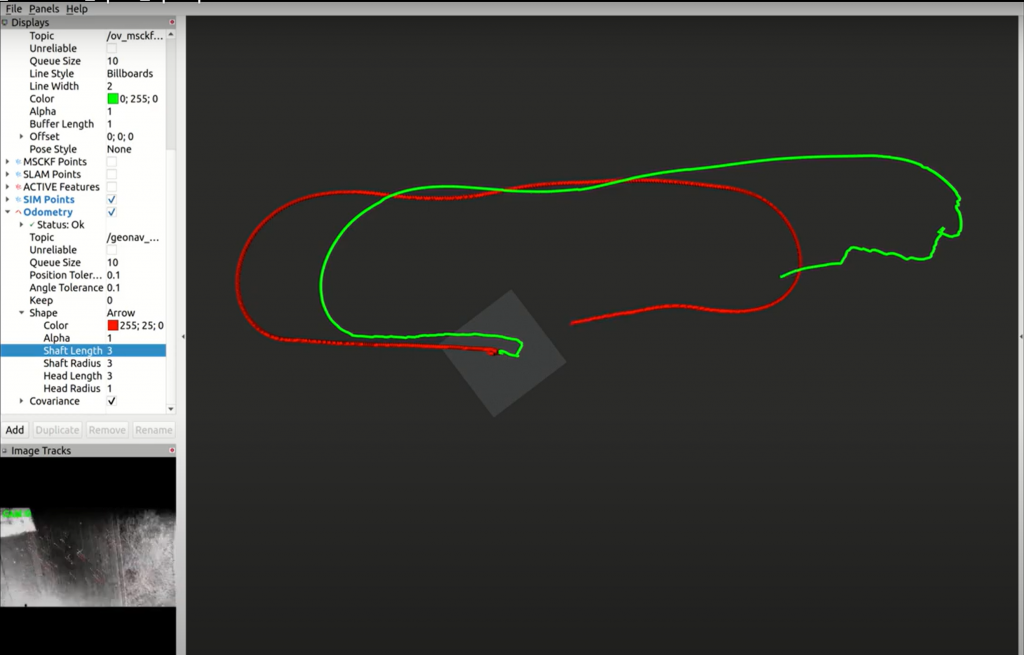

The GPS non-dependence pipeline was built using the open-source algorithm OpenVINS. The aim is to obtain accurate latitude and longitude measurements from the onboard camera and IMU when GPS is temporarily unstable during flight. The OpenVINS algorithm was tuned to provide accurate results for a VTOL that flies at high speed and whose camera has a bird’s-eye view from high altitude. The algorithm was run on the companion computer using bagfiles containing the camera and IMU data. To ensure the safety of our system, the measurements were never used by the flight system.
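One way to turn a visual-inertial position estimate into latitude and longitude is to add the estimated local displacement to the last trusted GPS fix. The sketch below assumes the estimate is available as north and east offsets in metres from that fix and uses a flat-earth approximation, which is only reasonable over short distances. The function name and this conversion are assumptions made for illustration; the text above does not specify how our pipeline performs this step.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS-84 equatorial radius


def local_to_latlon(origin_lat_deg, origin_lon_deg, north_m, east_m):
    """Convert north/east offsets from an origin into latitude and longitude.

    Uses a flat-earth (small-displacement) approximation around the origin.
    """
    lat0 = math.radians(origin_lat_deg)
    # A northward offset changes latitude directly.
    dlat = north_m / EARTH_RADIUS_M
    # An eastward offset changes longitude, scaled by the latitude circle radius.
    dlon = east_m / (EARTH_RADIUS_M * math.cos(lat0))
    return (
        origin_lat_deg + math.degrees(dlat),
        origin_lon_deg + math.degrees(dlon),
    )


if __name__ == "__main__":
    # Example origin and offsets are arbitrary illustrative values.
    lat, lon = local_to_latlon(40.0, -80.0, north_m=100.0, east_m=50.0)
    print(f"{lat:.6f}, {lon:.6f}")
```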

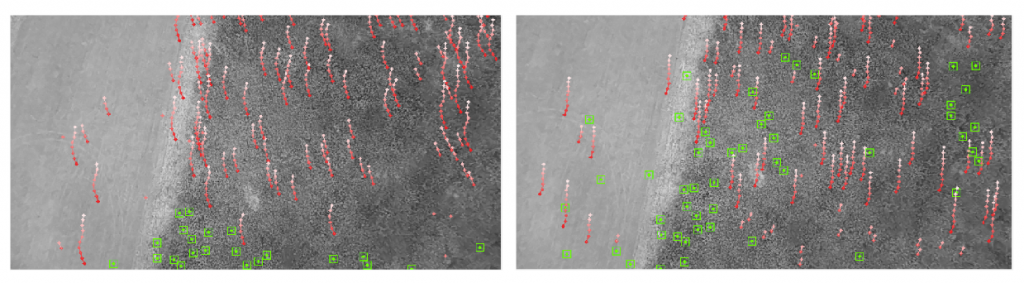

During testing of the OpenVINS algorithm, we noticed that the number of tracked features would rapidly degrade after reaching a certain altitude. OpenVINS has many checks to ensure that features are robustly detected and tracked. One such check ensures that the depth of a feature is not greater than some threshold. Since OpenVINS is quite often used in indoor environments, this threshold is fairly low. Increasing the threshold results in much better performance when flying at higher altitudes, as can be seen in the figure below, where the number of tracked features (represented by green boxes) is much higher after the change.
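The effect of the depth check can be illustrated with a small sketch. It is not OpenVINS code: the function, the parameter name `max_depth_m`, and the depth values are hypothetical and only show why a low threshold discards most features when the camera looks down from high altitude.

```python
def filter_by_depth(depths_m, max_depth_m):
    """Return indices of features whose depth is positive and within the limit."""
    return [i for i, d in enumerate(depths_m) if 0.0 < d <= max_depth_m]


if __name__ == "__main__":
    # Illustrative feature depths (metres) for a downward-facing camera
    # at roughly 100 m above ground; these are made-up values.
    depths = [98.0, 101.5, 40.0, 105.2, 99.3, 55.0]

    low = filter_by_depth(depths, max_depth_m=60.0)
    high = filter_by_depth(depths, max_depth_m=150.0)
    print(len(low), "features kept with the low threshold")
    print(len(high), "features kept with the raised threshold")
```

With the low threshold only the two nearby features survive, while the raised threshold keeps all six, which matches the qualitative behaviour described above.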