Fall Semester

Autonomous Exploration

Autonomous exploration was highly unreliable right up until the FVD Encore, and we could not demonstrate it at either the FVD or the FVD Encore. The local planner was the weak point: it often drove the robot into obstacles or got stuck when it could not find a feasible path.

Within 24 hours of the FVD Encore, we achieved repeatable autonomy. We replaced the local planner and wrote a position controller and an active waypoint filter. With these simple but carefully implemented algorithms, autonomous exploration worked almost every time, and its behavior was finally convincing and repeatable. We also implemented a safety framework in which the robot halts if its waypoint or velocity commands are not confident. In addition, the robot keeps moving only while the operator continuously publishes an “allowed-to-move” signal.
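The position controller and active waypoint filter are not specified further here, so the following is only a minimal sketch of how such a pair might look. It assumes a planar pose (x, y, yaw), a path given as a list of (x, y) waypoints, and a base that accepts forward-velocity and yaw-rate commands. The function names, gains, speed limits, and the 0.3 m reach radius are all hypothetical, and the “filter” here simply drops waypoints the robot has already reached, which may differ from what the team’s filter actually does.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Waypoint = Tuple[float, float]


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float  # heading in radians


def wrap_angle(a: float) -> float:
    """Wrap an angle into [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def filter_waypoints(waypoints: List[Waypoint], pose: Pose2D,
                     reach_radius: float = 0.3) -> List[Waypoint]:
    """Hypothetical active waypoint filter: drop leading waypoints that the
    robot has already reached so the controller always targets the next one."""
    remaining = list(waypoints)
    while remaining and math.hypot(remaining[0][0] - pose.x,
                                   remaining[0][1] - pose.y) <= reach_radius:
        remaining.pop(0)
    return remaining


def position_controller(pose: Pose2D, target: Waypoint, k_lin: float = 0.8,
                        k_ang: float = 1.5, v_max: float = 0.4,
                        w_max: float = 1.0) -> Tuple[float, float]:
    """Proportional controller returning (forward m/s, yaw rate rad/s)."""
    dx, dy = target[0] - pose.x, target[1] - pose.y
    dist = math.hypot(dx, dy)
    heading_err = wrap_angle(math.atan2(dy, dx) - pose.yaw)
    # Turn in place first when the target is more than 45 degrees off-heading.
    v = k_lin * dist if abs(heading_err) < math.pi / 4 else 0.0
    w = k_ang * heading_err
    # Saturate both commands to the (assumed) robot limits.
    v = max(-v_max, min(v_max, v))
    w = max(-w_max, min(w_max, w))
    return v, w
```

Turning in place before driving forward keeps the robot from sweeping wide arcs near obstacles, and saturating both outputs keeps the commands within the robot’s assumed limits.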

Through this series of implementations, we met nearly all of our performance, functional, and non-functional requirements. We scheduled a Final Validation Demonstration (Not-an-fvd) after the FVD Encore and showed that we met these requirements. We demonstrated the safety features and showed that the operator could pause and resume autonomous exploration at will and override the autonomy with the joystick controller. We met the exploration rate in our performance requirements, the clutter requirement, and the narrow door criterion. We also closed the loop on the remaining non-functional requirements: our robot system was light enough to be carried in disaster settings, narrow enough to enter small spaces, and posed no hazard to victims or first responders.
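The safety framework and operator controls can be pictured as a single command arbiter that decides which velocity command reaches the robot. The sketch below is hypothetical: the priority order (allowed-to-move check first, then joystick override, then pause and confidence gating), the 0.5 s heartbeat timeout, the 0.6 confidence threshold, and all names are assumptions rather than the team’s actual values or design.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Twist = Tuple[float, float]  # (forward m/s, yaw rate rad/s)
STOP: Twist = (0.0, 0.0)


@dataclass
class CommandArbiter:
    heartbeat_timeout: float = 0.5  # s; hypothetical value
    min_confidence: float = 0.6     # hypothetical threshold
    paused: bool = False            # toggled by the operator to pause/resume
    last_heartbeat: Optional[float] = None

    def on_allowed_to_move(self, stamp: float) -> None:
        """Record the time of the latest operator allowed-to-move message."""
        self.last_heartbeat = stamp

    def select(self, now: float, joystick: Optional[Twist],
               autonomy: Optional[Twist], confidence: float) -> Twist:
        # 1. No recent allowed-to-move signal: halt, whatever the command source.
        if self.last_heartbeat is None or now - self.last_heartbeat > self.heartbeat_timeout:
            return STOP
        # 2. Joystick input overrides autonomy.
        if joystick is not None:
            return joystick
        # 3. Halt when paused or when the autonomy command is missing or not confident.
        if self.paused or autonomy is None or confidence < self.min_confidence:
            return STOP
        return autonomy
```

Checking the allowed-to-move signal before anything else means a dropped operator link stops the robot even during a joystick override; placing it after the joystick check instead would be an equally plausible design choice.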

Human Detection

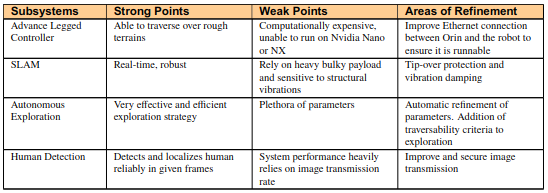

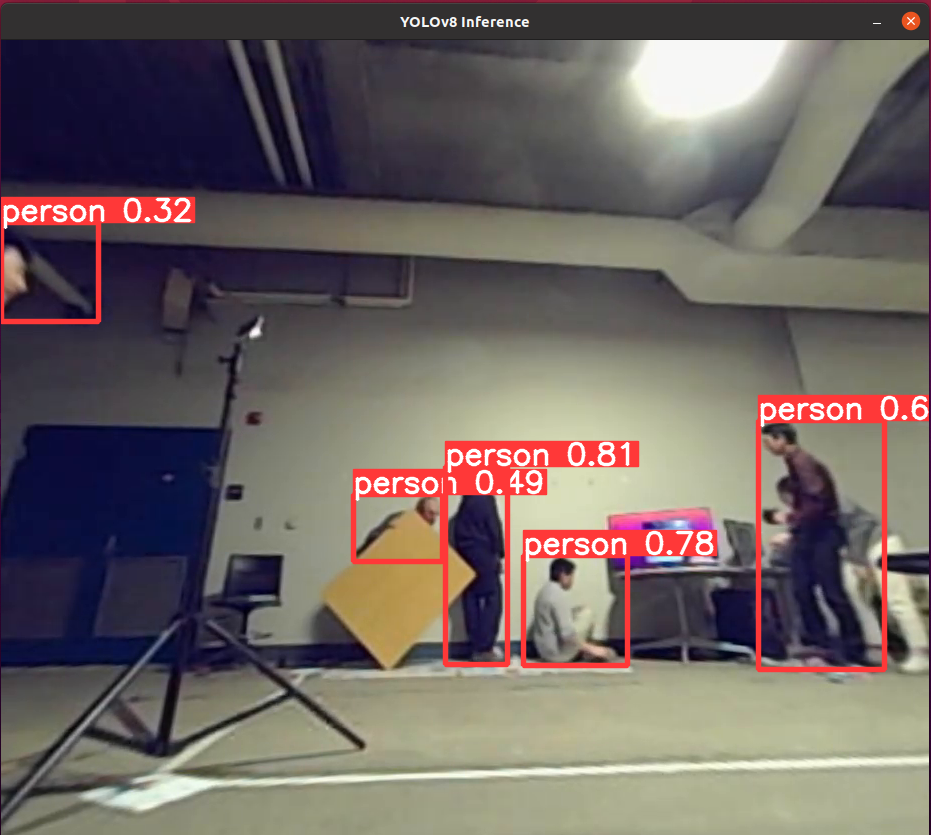

The Human Detection System uses YOLOv8 to detect and localize humans, and it has both strengths and areas for improvement. Its primary strength is that it reliably detects and localizes humans within the frames it receives, a capability vital wherever accurate human recognition is crucial for safety and operational efficiency.
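One common way to turn YOLOv8 boxes into human locations is to keep only person detections and back-project each box center through the aligned depth image with the pinhole camera model. The sketch below assumes detections have already been converted to (x1, y1, x2, y2, conf, cls) tuples (for example from the `xyxy`, `conf`, and `cls` fields of Ultralytics `Boxes` results), that depth is aligned to the color image and given in meters, and that class 0 is “person” as in the pretrained COCO weights. `localize_people`, `Intrinsics`, and the 0.5 confidence cutoff are illustrative, not the system’s actual code.

```python
from dataclasses import dataclass
from typing import Iterable, List, Sequence, Tuple

PERSON_CLASS_ID = 0  # "person" in the COCO classes used by pretrained YOLOv8 weights

Detection = Tuple[float, float, float, float, float, int]  # x1, y1, x2, y2, conf, cls


@dataclass
class Intrinsics:
    fx: float  # focal lengths in pixels
    fy: float
    cx: float  # principal point in pixels
    cy: float


def localize_people(detections: Iterable[Detection],
                    depth: Sequence[Sequence[float]],
                    K: Intrinsics,
                    min_conf: float = 0.5) -> List[Tuple[float, float, float]]:
    """Return camera-frame (X, Y, Z) positions in meters for each person box."""
    people = []
    for x1, y1, x2, y2, conf, cls in detections:
        if int(cls) != PERSON_CLASS_ID or conf < min_conf:
            continue
        # Sample depth at the box center (row v, column u).
        u = int((x1 + x2) / 2)
        v = int((y1 + y2) / 2)
        z = depth[v][u]
        if z <= 0:  # zero depth means no valid reading at this pixel
            continue
        # Pinhole back-projection, ignoring lens distortion.
        X = (u - K.cx) * z / K.fx
        Y = (v - K.cy) * z / K.fy
        people.append((X, Y, z))
    return people
```

The RealSense SDK’s `rs2_deproject_pixel_to_point` performs the same back-projection while also accounting for the camera’s distortion model. Sampling a single center pixel is fragile when a person is partly occluded; taking the median depth inside the box is a more robust alternative.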

However, the system’s performance depends heavily on the image transmission rate, which is a notable weakness. Images from the RealSense camera arrive as UDP packets, and if these packets are lost in transit, YOLOv8 receives no frame and therefore detects nothing. This can leave gaps in detection and compromise the system’s reliability in critical situations.
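Because a lost frame produces no detections rather than an error, it helps to make transmission gaps explicit so that “nothing detected” is not mistaken for “no one there.” The watchdog below is a minimal sketch, assuming each received frame carries a timestamp in seconds; the class name and the 0.5 s gap threshold are hypothetical.

```python
from typing import List, Optional, Tuple


class FrameWatchdog:
    """Tracks frame arrival times and records gaps longer than max_gap_s."""

    def __init__(self, max_gap_s: float = 0.5) -> None:
        self.max_gap_s = max_gap_s  # hypothetical threshold
        self.last_frame_t: Optional[float] = None
        # Each gap is (last frame time before the gap, first frame time after it).
        self.gaps: List[Tuple[float, float]] = []

    def on_frame(self, t: float) -> None:
        """Call whenever a frame is received."""
        if self.last_frame_t is not None and t - self.last_frame_t > self.max_gap_s:
            self.gaps.append((self.last_frame_t, t))
        self.last_frame_t = t

    def is_stale(self, now: float) -> bool:
        """True when an empty detection result cannot be trusted to mean 'no human'."""
        return self.last_frame_t is None or now - self.last_frame_t > self.max_gap_s
```

Logging the recorded gaps alongside detections also makes it easy to see afterwards which stretches of an exploration run were never actually checked for humans.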

Improving image transmission is essential to making the system robust. Reliable, stable transmission of image data would ensure that YOLOv8 consistently receives the frames it needs and keeps detecting. The team explored several ways to improve transmission speed, including using an Ethernet connection, switching the router to its 5 GHz band, and running the human detection program directly on the payload. However, the frame transmission rate remains sporadic. In the future, measures such as packet loss recovery or a more reliable transmission protocol could mitigate this risk and yield a more dependable human detection system.
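As one illustration of transmission-side measures, a frame can be split into numbered UDP chunks that the receiver reassembles, abandoning frames that never complete and counting them as lost. The wire format (a 32-bit frame ID plus 16-bit chunk index and chunk count), the 1400-byte payload size, and all names below are assumptions for illustration, not the system’s actual protocol. This sketch only detects and discards incomplete frames and does not handle frame-ID wraparound; real recovery would additionally need retransmission, forward error correction, or a reliable transport.

```python
import struct
from typing import Dict, List, Optional

# Hypothetical wire format: frame ID (uint32), chunk index (uint16), chunk count (uint16).
HEADER = struct.Struct("!IHH")
MAX_PAYLOAD = 1400  # bytes; keeps each datagram under a typical 1500-byte MTU


def packetize(frame_id: int, data: bytes, max_payload: int = MAX_PAYLOAD) -> List[bytes]:
    """Split one encoded frame into header-prefixed UDP payloads."""
    chunks = [data[i:i + max_payload] for i in range(0, len(data), max_payload)] or [b""]
    return [HEADER.pack(frame_id, i, len(chunks)) + c for i, c in enumerate(chunks)]


class Reassembler:
    """Rebuilds frames from chunks and counts frames abandoned due to loss."""

    def __init__(self) -> None:
        self.pending: Dict[int, Dict[int, bytes]] = {}  # frame_id -> {index: payload}
        self.newest_done = -1
        self.dropped = 0

    def feed(self, packet: bytes) -> Optional[bytes]:
        """Consume one datagram; return the full frame once all chunks arrive."""
        frame_id, idx, count = HEADER.unpack_from(packet)
        if frame_id <= self.newest_done:
            return None  # late chunk of a frame already delivered or abandoned
        parts = self.pending.setdefault(frame_id, {})
        parts[idx] = packet[HEADER.size:]
        if len(parts) < count:
            return None  # still waiting for more chunks
        del self.pending[frame_id]
        # Older frames that are still incomplete are now stale: count them as lost.
        for old in [f for f in self.pending if f < frame_id]:
            del self.pending[old]
            self.dropped += 1
        self.newest_done = frame_id
        return b"".join(parts[i] for i in range(count))
```

Delivering only the newest complete frame suits detection, where a stale frame is of little value; the `dropped` counter gives a direct measure of the packet-loss problem described above.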

Spring Semester

Human Detection

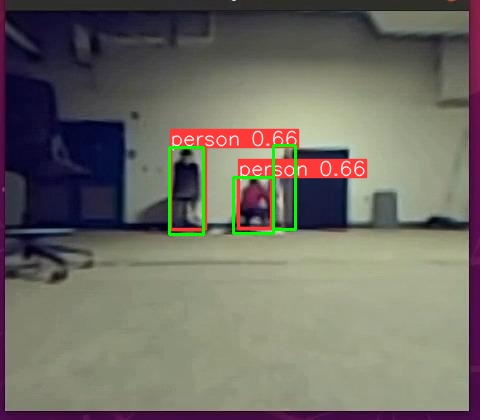

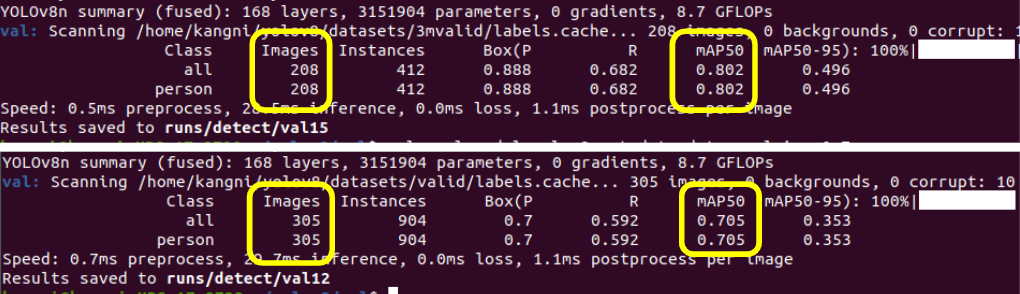

Previously, we tested our system on Dragoon’s RGB dataset, but that dataset does not fit our low-light use case. To test the human detection system further, our team built a 500-image dataset. We recorded video of people standing 5 m and 3 m away from the robot, taking different postures and hiding behind a chair and a wooden board. We skipped frames in which the humans were easy to detect and hand-picked challenging frames for testing, as shown in figure 1. Our system reaches 80% precision on the 200 images taken 3 m from the humans and 70% precision on the 300 images taken 5 m away.
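The evaluation procedure is not detailed here; one standard way to compute detection precision is to match predicted boxes to ground-truth boxes by intersection-over-union (IoU) and take TP / (TP + FP). The sketch below assumes greedy matching in order of confidence with an IoU threshold of 0.5, boxes as (x1, y1, x2, y2) pixel tuples, and hypothetical function names; it is not the team’s documented protocol.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_tp_fp(preds: Sequence[Tuple[Box, float]], gts: Sequence[Box],
                iou_thresh: float = 0.5) -> Tuple[int, int]:
    """Greedy matching: higher-confidence predictions claim ground truths first."""
    unmatched: List[Box] = list(gts)
    tp = fp = 0
    for box, _conf in sorted(preds, key=lambda p: p[1], reverse=True):
        best = max(unmatched, key=lambda g: iou(box, g), default=None)
        if best is not None and iou(box, best) >= iou_thresh:
            unmatched.remove(best)  # each ground-truth box can be matched once
            tp += 1
        else:
            fp += 1
    return tp, fp


def dataset_precision(images, iou_thresh: float = 0.5) -> float:
    """images: iterable of (predictions, ground_truth_boxes) per image."""
    tp = fp = 0
    for preds, gts in images:
        t, f = count_tp_fp(preds, gts, iou_thresh)
        tp += t
        fp += f
    return tp / (tp + fp) if tp + fp else 0.0
```

Precision alone does not penalize missed people, such as a person hidden behind another; reporting recall, TP / (TP + FN), alongside it would capture those misses.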

At the SVD, human detection reached its performance goal. The performance of the human detection subsystem is shown in Fig. 3 and Fig. 4. With the robot placed 5 m away from the humans, almost all of them were successfully detected, so the functional requirement was met. On the right side of Fig. 4, Connie was standing behind Saha, so YOLO missed her.

Gait Controller Test

We conducted a gait controller test to evaluate the Darkbot mobility system’s ability to traverse rough terrain, with the goal of meeting the requirements outlined in Section 4.1 PRM-3. A human operator controlled the robot manually using our custom Darkbot controller software. The test involved navigating a 2 m² space containing five wooden boards measuring 100 cm × 30 cm × 2 cm and two wooden planks inclined at 20 degrees. During the demonstration, the system climbed over the debris and maintained its balance, as seen in Figure 5. Despite the challenging conditions, the robot met the 8 cm height requirement, and its performance satisfied both the maximum height and ruggedness requirements, showing that it can traverse challenging terrain.

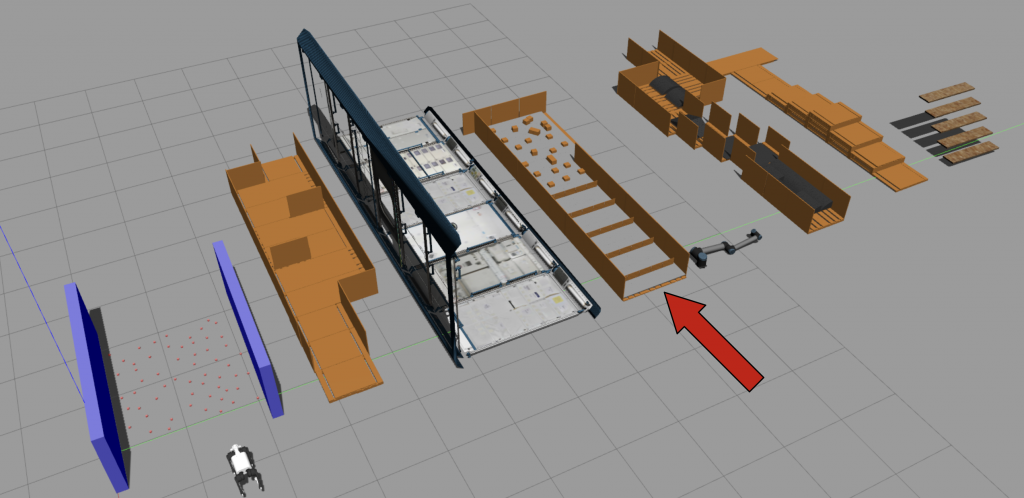

Simulation Test

During the simulation test, we evaluated the Darkbot’s ability to perform complex tasks by placing dozens of 2 cm cubes in a 2 m × 2 m area. The objective was to verify that the robot could walk over the blocks while maintaining balance, rather than simply avoiding them. We also created eight tracks (see Figure 6) with varying terrain, and the audience could randomly select a track for testing. One track required passing through a narrow door that meets the 8 cm width requirement specified in Section 4.1 PRM-4. The simulation test let us thoroughly assess the Darkbot’s performance and confirm that it met the requirements for operation in dynamic environments.
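The simulator is not named here, so the following sketch assumes a Gazebo-style SDF world and shows how dozens of 2 cm cubes could be scattered at random over a 2 m × 2 m area centered on the world origin. Random uniform placement, the static cubes, the function names, and the seed are illustrative assumptions; the returned `<model>` strings would be inserted into a `<world>` element.

```python
import random
from typing import List, Optional

CUBE_SIZE = 0.02  # m, 2 cm cubes
AREA_SIDE = 2.0   # m, side length of the square test area


def cube_model(name: str, x: float, y: float, size: float = CUBE_SIZE) -> str:
    """Return an SDF <model> element for one static cube resting on the ground."""
    geom = f"<geometry><box><size>{size} {size} {size}</size></box></geometry>"
    return (
        f'<model name="{name}"><static>true</static>'
        f"<pose>{x:.3f} {y:.3f} {size / 2} 0 0 0</pose>"
        f'<link name="link"><collision name="collision">{geom}</collision>'
        f'<visual name="visual">{geom}</visual></link></model>'
    )


def scatter_cubes(n: int, seed: Optional[int] = None,
                  side: float = AREA_SIDE) -> List[str]:
    """Place n cubes uniformly at random in a side x side square centered on the origin."""
    rng = random.Random(seed)
    half = side / 2
    return [cube_model(f"cube_{i}", rng.uniform(-half, half), rng.uniform(-half, half))
            for i in range(n)]
```

For example, `scatter_cubes(40, seed=7)` yields 40 cube models; fixing the seed makes a given layout reproducible, while leaving it unset gives a fresh arrangement each run.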