Fall 2024 Overall System Implementation Status

Software Spring 2024 Progress

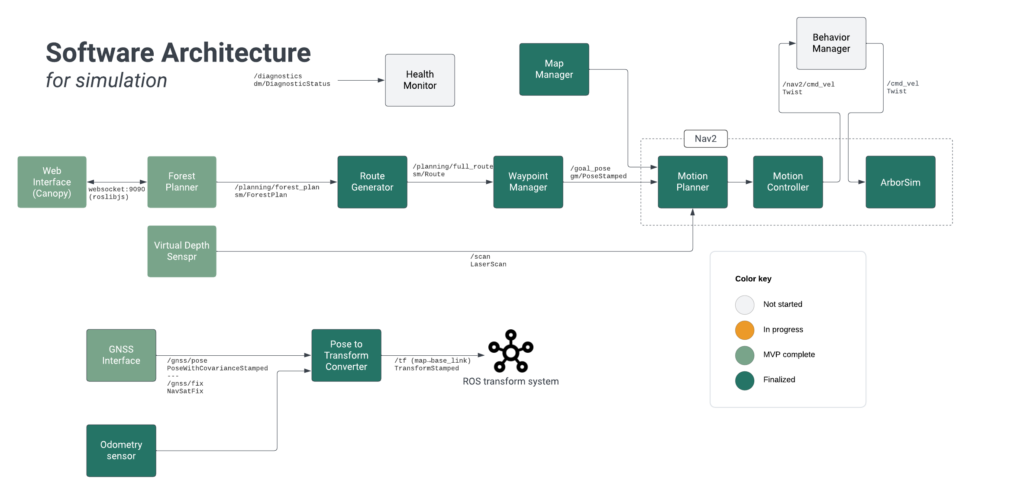

Software Navigation Stack Progress Architecture

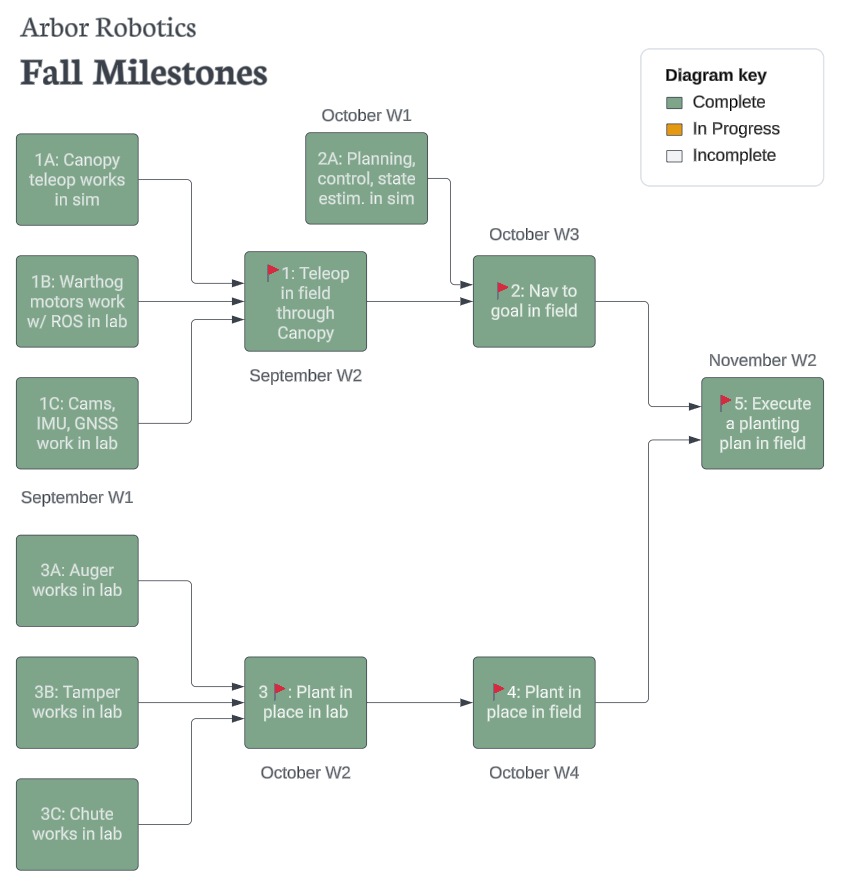

The image above is our most recent roadmap update.

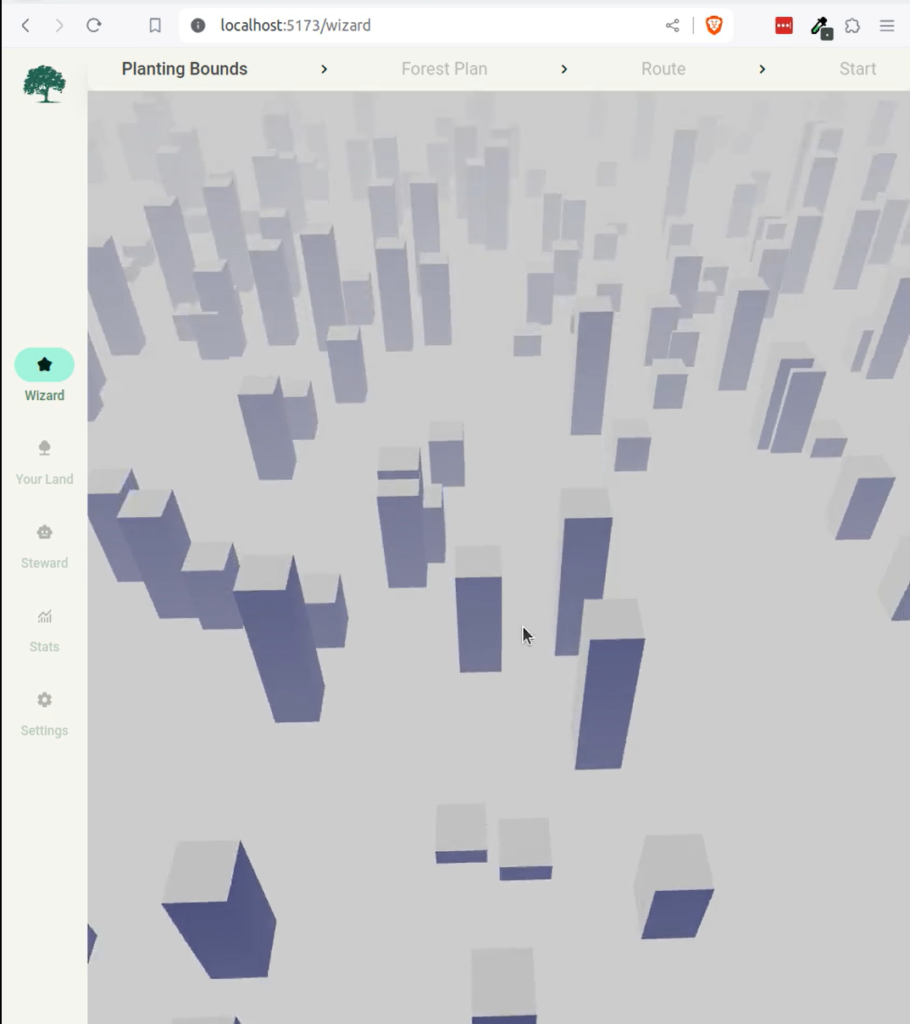

User interface

We have developed the first version of Canopy, our GUI for selecting planting bounds and displaying planting updates. It includes a start button that lets the robot leave the IDLE state, a top-down map showing the robot’s real-time pose, and a progress bar indicating the robot’s progress along the route. The following shows our progress so far, with the planting nodes simulated in 3D.

Simulation

For our navigation stack, we are in the process of integrating Nav2, a popular, enterprise-grade navigation platform, into Steward. Nav2 includes a Waypoint Follower plugin that will automatically guide the robot through an ordered list of waypoints.
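As a rough illustration of what an "ordered list of waypoints" for a planting run could look like, the sketch below generates a serpentine (back-and-forth) ordering over a rectangular planting area. The function name `serpentine_waypoints`, the rectangular bounds, and the serpentine ordering are all assumptions made for illustration; they are not taken from Steward's code.

```python
import math
from typing import List, Tuple

Waypoint = Tuple[float, float]  # (x, y) in metres, map frame (assumed)


def serpentine_waypoints(
    x_min: float, y_min: float, x_max: float, y_max: float, spacing: float
) -> List[Waypoint]:
    """Return grid points inside the bounds, ordered row by row.

    Every other row is reversed so the robot does not have to drive
    back to the start of each row (hypothetical ordering choice).
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    if x_max < x_min or y_max < y_min:
        raise ValueError("bounds must satisfy min <= max")

    # Number of grid points that fit along each axis, inclusive of both edges.
    n_cols = int(math.floor((x_max - x_min) / spacing + 1e-9)) + 1
    n_rows = int(math.floor((y_max - y_min) / spacing + 1e-9)) + 1

    waypoints: List[Waypoint] = []
    for row in range(n_rows):
        y = y_min + row * spacing
        cols = range(n_cols) if row % 2 == 0 else range(n_cols - 1, -1, -1)
        for col in cols:
            waypoints.append((x_min + col * spacing, y))
    return waypoints


route = serpentine_waypoints(0.0, 0.0, 2.0, 1.0, spacing=1.0)
print(route)
```

In a ROS 2 setup, each `(x, y)` pair would still need to be converted into a stamped pose before being handed to Nav2, for example through `BasicNavigator.followWaypoints` in `nav2_simple_commander`.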

This video highlights the accomplishments so far: Arbor Sim

We have switched from the imported car model that Unity provided to the Warthog model, and moved to an HDRP (High Definition Render Pipeline) scene in Unity.

Perception

Our team intends to focus on the perception stack in the Fall semester. So far, we have incorporated perception at a basic level in our simulator, using 2D raycasting to obtain the depth of objects in the scene.
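The idea behind raycast-based depth can be sketched outside the simulator. The minimal example below casts a single 2D ray and returns the distance to the nearest obstacle, with obstacles modelled as circles. The circle model, the function name `raycast_depth`, and the `max_range` parameter are assumptions for illustration only; inside Unity the engine's own raycasting would be used instead.

```python
import math
from typing import Optional, Sequence, Tuple

Circle = Tuple[float, float, float]  # (centre x, centre y, radius), hypothetical obstacle model


def raycast_depth(
    origin: Tuple[float, float],
    angle: float,
    circles: Sequence[Circle],
    max_range: float = 10.0,
) -> Optional[float]:
    """Distance along the ray to the nearest circle, or None if nothing is hit.

    The ray starts at `origin` and points in direction `angle` (radians).
    Circles behind the origin, or containing the origin, are ignored.
    """
    ox, oy = origin
    dx, dy = math.cos(angle), math.sin(angle)  # unit direction vector
    nearest: Optional[float] = None

    for cx, cy, r in circles:
        # Solve |origin + t * dir - centre|^2 = r^2 for t (dir is unit length).
        fx, fy = ox - cx, oy - cy
        b = fx * dx + fy * dy
        c = fx * fx + fy * fy - r * r
        disc = b * b - c
        if disc < 0:
            continue  # the ray's line misses this circle
        t = -b - math.sqrt(disc)  # nearer of the two intersection points
        if t < 0:
            continue  # entry point is behind the ray origin
        if t <= max_range and (nearest is None or t < nearest):
            nearest = t
    return nearest


depth = raycast_depth((0.0, 0.0), 0.0, [(5.0, 0.0, 1.0), (8.0, 0.0, 1.0)])
print(depth)
```

Sweeping `angle` across the sensor's field of view would give a simple depth scan of the scene.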

For further details refer to the Electrical section.

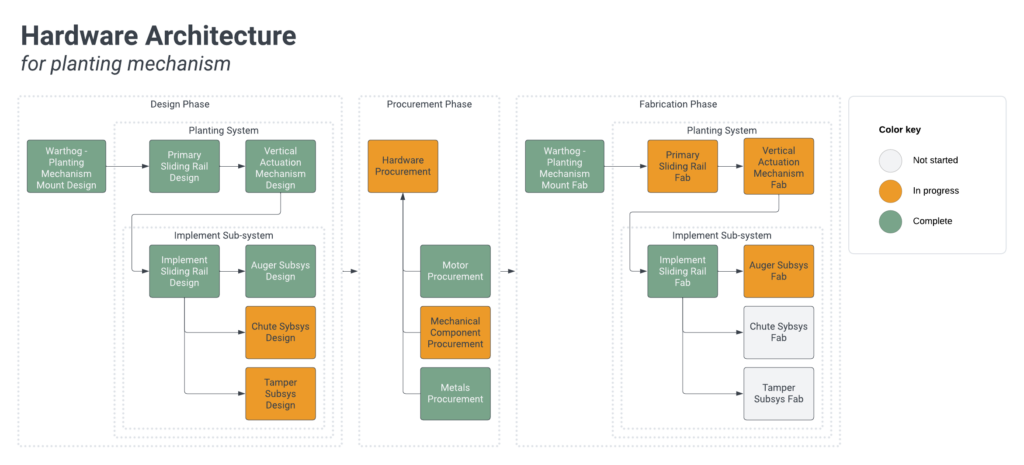

Mechanical Spring 2024 Progress

The image above is our most recent roadmap update.

For the mechanical system, our team is focusing on the planting mechanism for the Spring semester. We have done a great deal of design work, consulting with Jim and Tim from FRC for ideas and to verify the efficacy of our concepts.

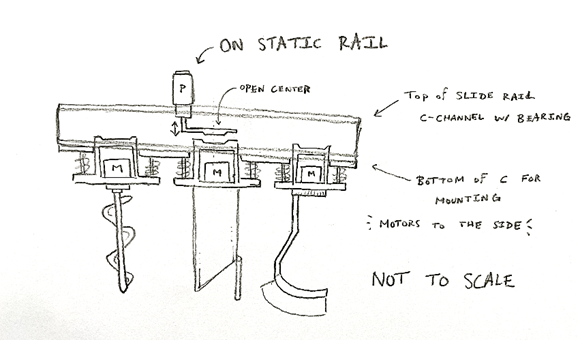

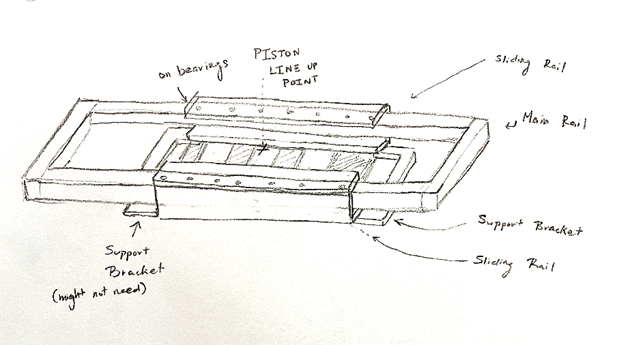

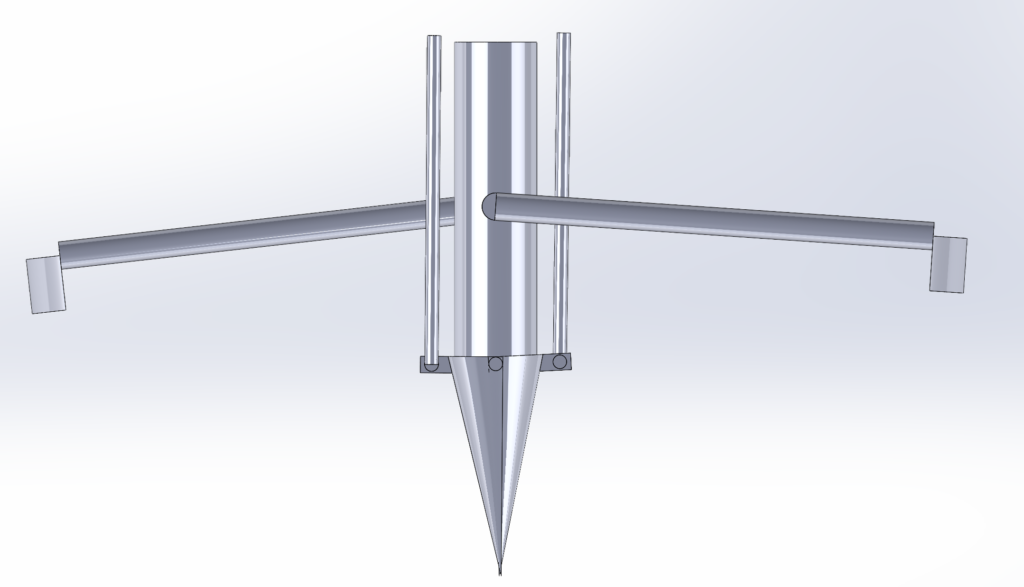

Our current design incorporates a linear slide, where a three-implement system aligns itself with the center of the rail so that an actuator can bring the implement to the ground. The three-implement system consists of a digger, a plant inserter, and a tamper.

We have developed CAD models for a basic plant inserter concept, which we will expand on soon. We brainstormed several other concepts but have not included them all here.

In addition to design work, we have been running field tests to gauge how our concepts will fare. We chose a three-stage mechanism because our original concept (the plant chute alone) would have required too much power to press into the ground.

Electrical

We selected the ZED camera for outdoor obstacle detection primarily because of its stereo vision technology, which relies on visible light rather than infrared. This feature is advantageous in outdoor environments where sunlight interference can affect infrared-based systems. Additionally, the camera’s robustness ensures durability in outdoor conditions, while its compatibility with machine learning algorithms allows real-time obstacle recognition. With a wide field of view, it effectively covers dynamic outdoor environments, making it the optimal choice for our project’s requirements despite sunlight challenges.

We also selected an integrated NEMA 23 stepper motor for actuating our subsystems. With 2 Nm of motor torque and a 20:1 planetary gearbox, it gives a maximum torque of 30 Nm. To save on shipping and to maintain a reasonable factor of safety for our other high-load tasks, we opted to use the same NEMA 23 motor for both horizontal and vertical motion.
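For reference, the relationship between the quoted figures can be checked with a short calculation. An ideal, lossless 20:1 gearbox on a 2 Nm motor would give 40 Nm, so the 30 Nm figure corresponds to a factor of 0.75. Whether that factor reflects gearbox efficiency or a rated torque limit is not stated here; the `efficiency` parameter below is an assumption used only to relate the numbers.

```python
def geared_output_torque(
    motor_torque_nm: float, gear_ratio: float, efficiency: float = 1.0
) -> float:
    """Output torque of a motor behind a reduction gearbox.

    `efficiency` is a hypothetical lumped loss factor in (0, 1].
    """
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return motor_torque_nm * gear_ratio * efficiency


MOTOR_TORQUE_NM = 2.0   # from the text
GEAR_RATIO = 20.0       # from the text (20:1)
QUOTED_MAX_NM = 30.0    # from the text

ideal_nm = geared_output_torque(MOTOR_TORQUE_NM, GEAR_RATIO)
implied_factor = QUOTED_MAX_NM / ideal_nm  # ratio of quoted to ideal torque

print(f"ideal output torque: {ideal_nm} Nm")
print(f"factor implied by the quoted 30 Nm: {implied_factor}")
```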

We are currently working on actuating the auger mechanism using a microcontroller. Here is our current progress: