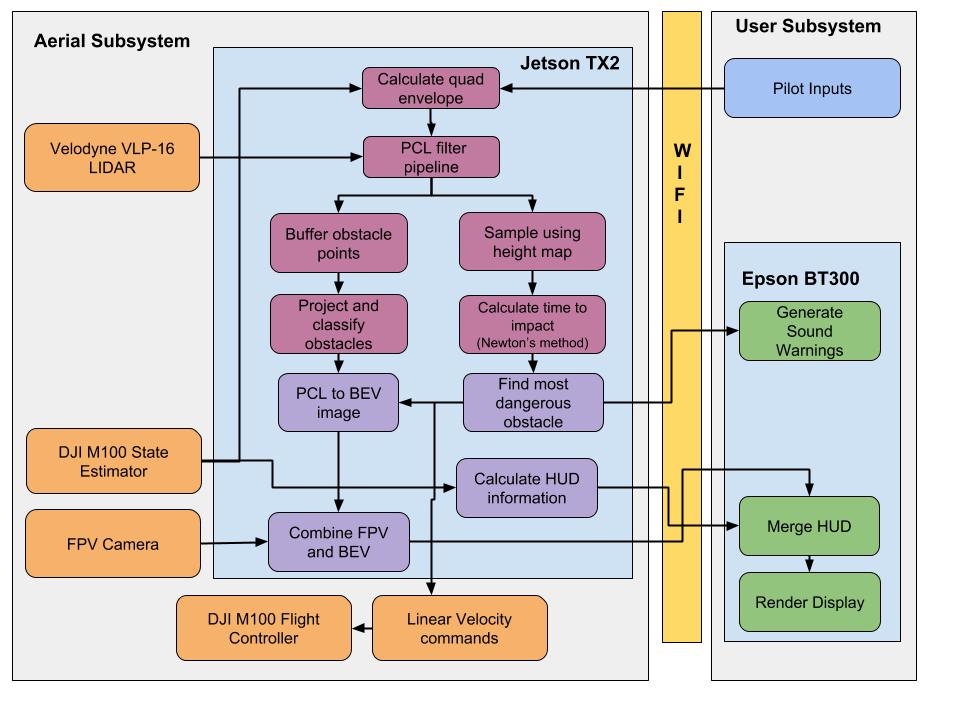

The cyber-physical architecture delineates the functions of the different subsystems and describes their implementation at a high level. It also reflects the decisions, taken on the basis of the trade studies, that identified the components and algorithms.

Our system has two major subsystems: Aerial and User. The aerial subsystem is further divided into two key components: the onboard sensor suite and the onboard computer, a Jetson TX2. The user subsystem consists of the pilot with the DJI radio controller and the Epson BT-300 augmented reality headset running the FlySense interface. The two subsystems are linked by a Wi-Fi communication subsystem that carries data between them.

Each of these components is described below:

- Aerial Subsystem:

- The DJI M100 quadcopter is the platform on which all the algorithms are tested.

- The Velodyne VLP-16 LIDAR provides the raw 3D point cloud, covering 360°, for obstacle detection.

- The FPV camera provides the frontal view from the quadcopter with an 80° field of view.

- The state estimation is carried out using the onboard IMU and GPS.

- The onboard computer, a Jetson TX2, performs the following functions:

- Calculate Flight Envelope: The flight envelope is calculated from the received pose estimate and pilot inputs. It is the region around the aircraft that the aircraft can reach within 5 seconds. It does not account for a sudden malfunction or crash.

- Point Cloud Filter Pipeline: The raw point cloud is passed through a series of filters: cropping, downsampling, and outlier removal. The calculated flight envelope is used to keep only the relevant points and discard the rest, which reduces the onboard processing required.

- Obstacle Classification and Coloring: The filtered point cloud is then used to identify the obstacles in the flight path. Each obstacle is classified into a danger level based on the maximum possible pilot input and the time to impact, and is colored red, yellow, or green accordingly.

- Sound Warnings: Among the detected obstacles, the one with the least time to impact is found using Newton’s method and is used to generate sound warnings.

- Bird’s Eye View: The detected obstacles, together with the most dangerous obstacle identified by the sound warnings code, are combined to generate a bird’s eye view (BEV) image.

- The FPV video and BEV are combined and published at a single frequency over Wi-Fi to the Epson headset.

- Relevant state information of the quadcopter is broadcast over Wi-Fi.

- Override Pilot Commands: The pilot commands are modified if the most dangerous obstacle is in the immediate path of the quadcopter. In that case, the algorithm publishes linear velocity commands for emergency braking.

- The onboard flight controller receives velocity commands and takes control of the vehicle to avoid collision.

- User Subsystem:

- The pilot is at the heart of the complete system. The pilot provides commands through the DJI radio controller and the FlySense interface to navigate the quadcopter safely.

- Pilot inputs feed into all the algorithms in the software stack.

- The Epson BT-300 headset renders the FPV video, BEV, and HUD based on the sensor information and video received from the onboard computer.

- The sound warnings are given to the pilot through the headset as beeps.
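
To make the flight envelope and point cloud filtering steps listed under the Jetson TX2 functions more concrete, the following is a minimal Python sketch, not the project's actual code. It assumes the envelope can be approximated by a sphere whose radius is the distance reachable in 5 seconds under a constant maximum acceleration. The names `envelope_radius`, `crop_to_envelope`, and `voxel_downsample`, and the parameters `max_accel_mps2` and `voxel_size`, are hypothetical. Outlier removal is omitted for brevity.

```python
import math

HORIZON_S = 5.0  # reach horizon stated in the text (5 seconds)


def envelope_radius(speed_mps, max_accel_mps2, horizon_s=HORIZON_S):
    """Upper bound on the distance reachable within the horizon.

    Assumption (not from the source): the vehicle keeps its current speed
    and accelerates at a constant maximum rate for the whole horizon.
    """
    return speed_mps * horizon_s + 0.5 * max_accel_mps2 * horizon_s ** 2


def crop_to_envelope(points, position, radius):
    """Keep only the points within `radius` of the vehicle position."""
    return [p for p in points if math.dist(p, position) <= radius]


def voxel_downsample(points, voxel_size):
    """Keep one point (the first seen) per occupied voxel of edge `voxel_size`."""
    kept = {}
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        kept.setdefault(key, p)
    return list(kept.values())


if __name__ == "__main__":
    cloud = [(1.0, 0.0, 0.0), (1.2, 0.1, 0.0), (30.0, 0.0, 0.0)]
    radius = envelope_radius(speed_mps=2.0, max_accel_mps2=1.0)
    relevant = crop_to_envelope(cloud, (0.0, 0.0, 0.0), radius)
    print(voxel_downsample(relevant, voxel_size=0.5))
```

Cropping before downsampling means the later stages only touch points the aircraft could actually reach, which is the processing saving the pipeline description refers to.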
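
The obstacle classification and sound warning steps both depend on a time to impact per obstacle. The sketch below illustrates the idea under a simplifying assumption: the vehicle moves at constant velocity, so the time to impact has a closed form. This stands in for the Newton's method solver mentioned above and is not the project's implementation. The thresholds `RED_S` and `YELLOW_S` and all function names are hypothetical.

```python
import math

RED_S = 2.0     # hypothetical threshold: below this, color the obstacle red
YELLOW_S = 5.0  # hypothetical threshold: below this, color the obstacle yellow


def time_to_impact(rel_pos, velocity):
    """Seconds until the vehicle reaches an obstacle at constant velocity.

    `rel_pos` is the obstacle position relative to the vehicle. Returns
    math.inf when the vehicle is not closing on the obstacle.
    """
    dist = math.hypot(*rel_pos)
    if dist == 0.0:
        return 0.0
    # Component of the velocity along the line of sight to the obstacle.
    closing_speed = sum(r * v for r, v in zip(rel_pos, velocity)) / dist
    return dist / closing_speed if closing_speed > 0 else math.inf


def danger_color(tti_s):
    """Map a time to impact onto the red/yellow/green danger levels."""
    if tti_s < RED_S:
        return "red"
    if tti_s < YELLOW_S:
        return "yellow"
    return "green"


def most_dangerous(obstacles, velocity):
    """Return (obstacle, time_to_impact) with the least time to impact, or None."""
    if not obstacles:
        return None
    scored = ((o, time_to_impact(o, velocity)) for o in obstacles)
    return min(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    obstacles = [(10.0, 0.0, 0.0), (4.0, 0.0, 0.0), (-3.0, 0.0, 0.0)]
    velocity = (4.0, 0.0, 0.0)
    for o in obstacles:
        print(o, danger_color(time_to_impact(o, velocity)))
    print("warn about:", most_dangerous(obstacles, velocity))
```

The obstacle returned by `most_dangerous` would be the one that drives the beeps and is highlighted in the BEV image.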
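
The pilot command override can be pictured as a simple gate on the least time to impact. The sketch below is one possible reading, assuming that "immediate path" means the least time to impact falls below a braking threshold and that emergency braking means commanding zero linear velocity. `BRAKE_TTI_S` and `override_command` are hypothetical names, and the threshold value is illustrative only.

```python
BRAKE_TTI_S = 1.5  # hypothetical braking threshold in seconds


def override_command(pilot_velocity, least_tti_s, brake_tti_s=BRAKE_TTI_S):
    """Return (velocity_command, overridden).

    Passes the pilot's linear velocity command through unchanged unless the
    least time to impact is below the braking threshold, in which case a
    zero-velocity (braking) command is returned instead.
    """
    if least_tti_s is not None and least_tti_s < brake_tti_s:
        return (0.0, 0.0, 0.0), True
    return tuple(pilot_velocity), False


if __name__ == "__main__":
    print(override_command((3.0, 0.0, 0.0), least_tti_s=4.0))
    print(override_command((3.0, 0.0, 0.0), least_tti_s=0.8))
```

Returning the `overridden` flag alongside the command lets the interface tell the pilot when the system, rather than the radio controller, is in control.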