The ORB system is composed of a mobile manipulator platform, custom-built hardware, and a generalizable software stack, which together form the subsystems outlined in the cyber-physical architecture. These components work in unison so that the ORB system can navigate, interact, and perform logistics tasks efficiently in a hospital environment. Hardware and software are integrated in a modular manner, which keeps the system adaptable to dynamic scenarios and compatible with hospital workflows. The subsections below describe each subsystem, its role in the overall architecture, and the evaluations performed to assess its performance and reliability.

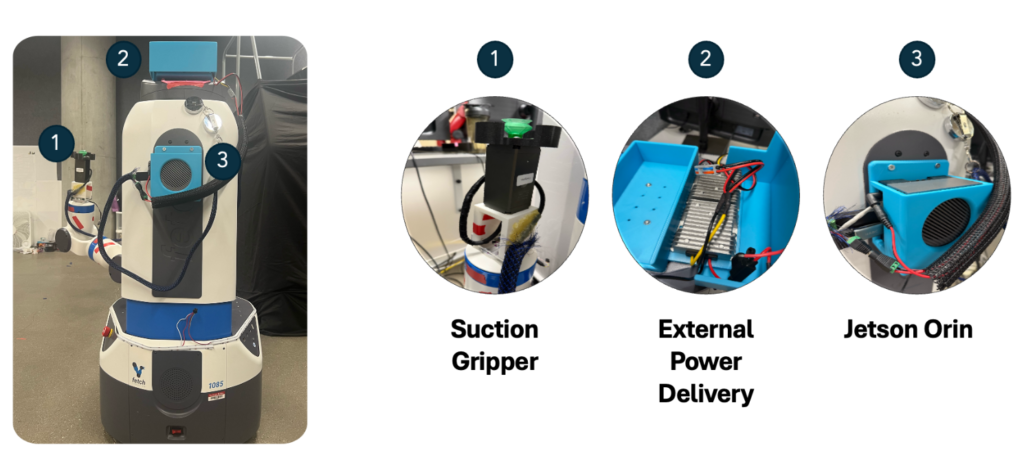

The ORB system is built on the Fetch mobile manipulator platform, which features a flexible design for hospital environments. The Fetch robot is equipped with a mobile base for autonomous navigation and a 7-degree-of-freedom (7-DOF) manipulator mounted on its torso, allowing it to perform complex manipulation tasks such as picking and placing items. This configuration provides the system with the mobility and dexterity necessary to handle logistics tasks in cluttered hospital settings. To accommodate additional functionalities not provided by the platform, such as external compute, a suction gripper, and an external battery, custom 3D-printed parts have been designed and attached to the robot’s structure.

Onboard computation is provided by a Jetson Orin module mounted on the back of the Fetch robot, which runs the generalizable software stack. This software stack enables the ORB to navigate hospital hallways autonomously, locate storage racks, and interact with items on the shelves. Using its integrated sensors and manipulation capabilities, the ORB can identify, grasp, and restock items efficiently.

Subsystem Description

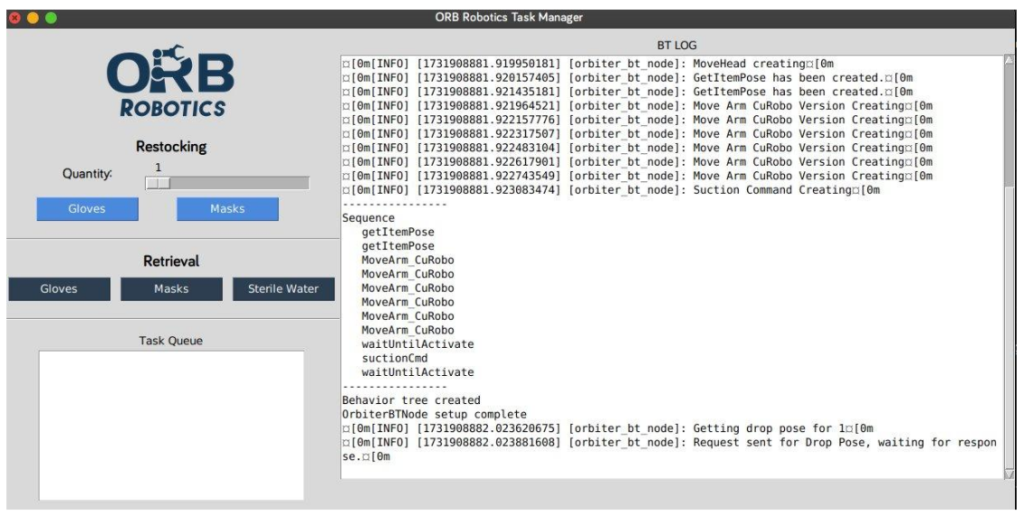

Graphical User Interface

For every restocking or retrieval operation, a task is added to a thread-safe queue that uses a lock to guard concurrent access. The Task Manager Node, a ROS 2 node implemented in the Task Manager Node class, runs in a separate thread. It provides a service for retrieving the next task (/get_next_action) and listens for messages on the /request_clear_restocking_item topic, which allows specific restocking tasks to be removed on request. The GUI refreshes the task list display every 500 milliseconds, while the ROS 2 node reads or modifies the task queue through the same shared lock.

Task prioritization is achieved by always placing retrieval tasks at the front of the queue, so they take precedence over restocking tasks. Because pending restocking tasks remain in the queue behind them, the robot resumes restocking promptly once all retrieval tasks are complete.
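The queue behavior described above can be sketched in a few lines of Python. This is a minimal illustration rather than the ORB implementation: the `Task` and `TaskQueue` names, their methods, and the choice to keep retrieval tasks in arrival order among themselves are all assumptions made for the example.

```python
import threading
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional


@dataclass(frozen=True)
class Task:
    kind: str  # "Retrieval" or "Restocking"
    item: str


class TaskQueue:
    """Lock-protected queue in which retrieval tasks precede restocking tasks."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._tasks: Deque[Task] = deque()

    def add(self, task: Task) -> None:
        with self._lock:
            if task.kind == "Retrieval":
                # Assumption: insert behind retrievals already queued,
                # but ahead of every restocking task.
                index = sum(1 for t in self._tasks if t.kind == "Retrieval")
                self._tasks.insert(index, task)
            else:
                self._tasks.append(task)

    def get_next(self) -> Optional[Task]:
        """Pop the highest-priority task, or return None if the queue is empty."""
        with self._lock:
            return self._tasks.popleft() if self._tasks else None

    def clear_restocking_item(self, item: str) -> None:
        """Remove restocking tasks for one item (hypothetical handler for the clear topic)."""
        with self._lock:
            self._tasks = deque(
                t for t in self._tasks
                if not (t.kind == "Restocking" and t.item == item)
            )

    def snapshot(self) -> List[Task]:
        """Copy of the queue contents, suitable for a periodic GUI refresh."""
        with self._lock:
            return list(self._tasks)
```

In this sketch both the GUI thread (through `snapshot`) and the ROS 2 node thread (through `get_next` and `clear_restocking_item`) go through the same lock, so neither ever observes a partially modified queue.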

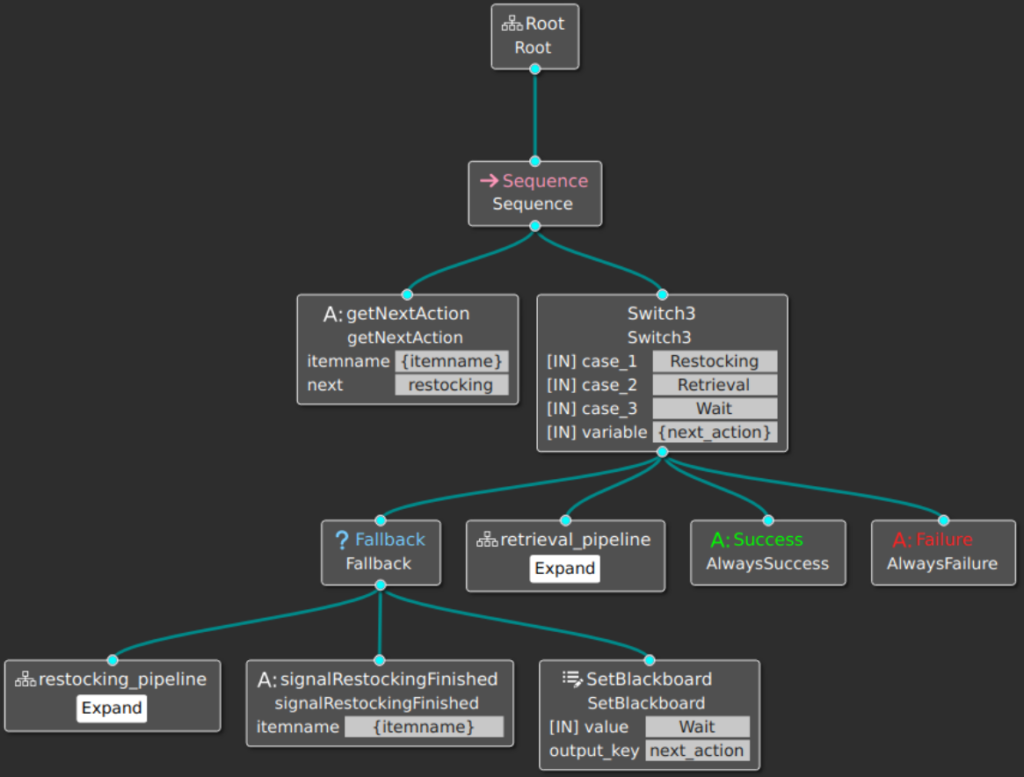

Behavior Tree

The behavior tree process begins with a sequence node that establishes a linear workflow, starting with the getNextAction action. This step determines the next task or behavior the robot should perform, storing the result in the next_action variable, which then guides the tree’s execution.

The Switch node serves as the decision-making unit: it evaluates next_action and selects the subtree or sequence for the robot to execute. It has three cases, Restocking, Retrieval, and Wait. If next_action is “Restocking,” the tree transitions to the restocking_pipeline subtree; “Retrieval” directs it to the retrieval_pipeline; and “Wait” indicates that no task is currently assigned. The Restocking branch includes a Fallback node, which lets the robot retry failed actions and so improves robustness. Once the Restocking task is completed, the robot uses signalRestockingFinished to mark it as done and resets next_action to “Wait,” signaling readiness for the next task.

The restocking_pipeline subtree defines the detailed sequence of actions required for restocking. It begins with navigation commands (e.g., GoToPose) that move the robot to specific coordinates. Once in position, the robot uses the moveHead and getItemPose actions to locate the required items from predefined waypoints. It then executes a series of arm movements through MoveArm_CuRobo and controls suction through suctionCmd actions to grasp and place items securely. The MoveArm_CuRobo nodes support several trajectory types (e.g., 0, 1, 2) to produce the precise movements needed for picking and placing.

The retrieval_pipeline subtree follows the same workflow of navigation, item detection, and manipulation, tailored to retrieval operations. The robot navigates to a target location, identifies and grasps the required item using its suction mechanism, and transports it to the specified destination. Multiple fallback mechanisms provide reactive recovery, for example from suction failures or item drops during navigation. Both pipelines are designed for adaptability, so the robot can switch between tasks based on the value of next_action.
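The control flow of the tree can be illustrated with a small, framework-free Python sketch. It is a simplification under stated assumptions: nodes return only success or failure (no running state), the blackboard is a plain dictionary, and `build_tree`, `recovery`, and the leaf callables are hypothetical stand-ins for the actual actions and subtrees.

```python
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = 1
    FAILURE = 2


Blackboard = Dict[str, str]
Node = Callable[[Blackboard], Status]


def sequence(children: List[Node]) -> Node:
    """Runs children in order and fails as soon as one child fails."""
    def tick(bb: Blackboard) -> Status:
        for child in children:
            if child(bb) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS
    return tick


def fallback(children: List[Node]) -> Node:
    """Tries children in order and succeeds as soon as one child succeeds."""
    def tick(bb: Blackboard) -> Status:
        for child in children:
            if child(bb) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE
    return tick


def switch(key: str, cases: Dict[str, Node], default: Node) -> Node:
    """Dispatches to the case matching bb[key], else to the default child."""
    def tick(bb: Blackboard) -> Status:
        return cases.get(bb.get(key, ""), default)(bb)
    return tick


def build_tree(get_next_action: Node, restocking_pipeline: Node,
               retrieval_pipeline: Node, recovery: Node) -> Node:
    def signal_restocking_finished(bb: Blackboard) -> Status:
        bb["next_action"] = "Wait"  # ready for the next task
        return Status.SUCCESS

    def wait(bb: Blackboard) -> Status:
        return Status.SUCCESS  # nothing to do on this tick

    restocking = sequence([
        fallback([restocking_pipeline, recovery]),  # recovery is a hypothetical second attempt
        signal_restocking_finished,
    ])
    return sequence([
        get_next_action,
        switch("next_action",
               {"Restocking": restocking, "Retrieval": retrieval_pipeline, "Wait": wait},
               default=wait),
    ])
```

Each call to the function returned by `build_tree` corresponds to one pass through the tree: the first leaf writes next_action to the blackboard, and the switch then selects the matching branch.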

Navigation

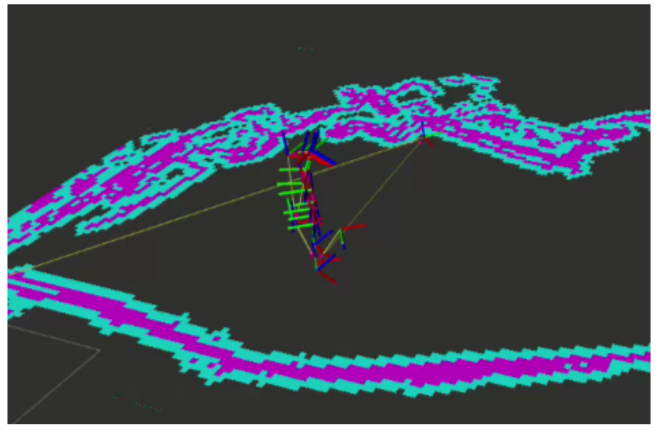

The navigation subsystem of the ORB system uses the ROS 2 Nav2 package to navigate autonomously in a pre-mapped environment with a 2D LiDAR mounted on the robot’s base. Adaptive Monte Carlo Localization (AMCL) provides pose estimation, with parameters tuned to keep localization error within 5 cm. The Nav2 parameters were optimized for speed and accuracy, with a focus on global path planning, real-time obstacle avoidance, and smooth recovery behaviors, so that the robot generates efficient paths and navigates at a maximum speed of 0.8 m/s.

To ensure reliability, a fallback mechanism handles navigation failures. In such cases, the robot uses its onboard camera to detect ArUco markers and navigates directly to the target location in an open-loop manner. This dual-layered approach, combining the primary Nav2-based navigation with a fallback strategy, improves system resilience in dynamic and constrained environments. The navigation system was tested in simulation and in real-world, hospital-like environments to confirm that it meets the operational demands of precision, speed, and reliability.
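One way to picture the fallback is as a rotate-then-drive plan computed once from the detected marker position and executed without further feedback. The Python sketch below is illustrative only: the function names, the standoff distance, the speeds, and the convention that the marker position is given in the robot base frame (x forward, y left, in metres) are assumptions, and the callables passed to `navigate_with_fallback` are hypothetical hooks.

```python
import math
from typing import Callable, List, Optional, Tuple

# (linear velocity m/s, angular velocity rad/s, duration s)
Command = Tuple[float, float, float]


def open_loop_plan(marker_x: float, marker_y: float, standoff: float = 0.5,
                   linear_speed: float = 0.2, angular_speed: float = 0.5) -> List[Command]:
    """Rotate toward the marker, then drive straight until `standoff` metres short of it."""
    heading = math.atan2(marker_y, marker_x)
    distance = max(0.0, math.hypot(marker_x, marker_y) - standoff)
    turn = (0.0, math.copysign(angular_speed, heading), abs(heading) / angular_speed)
    drive = (linear_speed, 0.0, distance / linear_speed)
    return [turn, drive]


def navigate_with_fallback(primary: Callable[[], bool],
                           detect_marker: Callable[[], Optional[Tuple[float, float]]],
                           execute: Callable[[Command], None]) -> bool:
    """Try the primary planner first; on failure, fall back to the marker-based plan."""
    if primary():
        return True
    marker = detect_marker()
    if marker is None:
        return False  # no marker in view, so the fallback cannot run
    for command in open_loop_plan(*marker):
        execute(command)  # timed velocity command, no feedback during execution
    return True
```

Because the plan is open loop, its accuracy depends on how well the commanded speeds match the base's actual motion, which is why a short standoff and low speeds are used in this sketch.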

Scene Understanding

The Scene Understanding module on the Fetch robot performs two critical functions: guiding NVBlox in constructing a dense collision world from RGB-D imagery, and providing approximate localization of objects and the environment. To accomplish this, the robot’s head executes a predetermined sweeping motion through 15 defined waypoints that together cover the entire environment. The head is controlled by an action controller, implemented as a ROS node that takes joint angles (head_tilt and head_sweep) as input. The controller is bridged to the ROS 2 side by continuously publishing its status on a topic.

At each waypoint, the module detects ArUco markers and boxes. Boxes from which restocking is performed are detected with Grounding DINO. When an instance is detected, the system switches to visual servoing and centers the instance within the camera’s field of view. Centering improves depth estimation and mitigates distortion caused by peripheral views or curved surfaces. Concurrently, NVBlox is updated with real-time RGB-D data, which allows a detailed environmental map to be built incrementally.
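The sweep and the centering step can be sketched as follows. This is not the ORB code: the report states only that there are 15 waypoints, so the grid layout, the serpentine ordering, the proportional gain, the pinhole camera model, and the sign conventions (positive pan turns left, positive tilt looks down) are all assumptions made for illustration.

```python
import math
from typing import List, Sequence, Tuple


def sweep_waypoints(pans: Sequence[float], tilts: Sequence[float]) -> List[Tuple[float, float]]:
    """Serpentine (pan, tilt) ordering so that consecutive waypoints are adjacent."""
    waypoints: List[Tuple[float, float]] = []
    for row, tilt in enumerate(tilts):
        ordered = list(pans) if row % 2 == 0 else list(reversed(pans))
        waypoints.extend((pan, tilt) for pan in ordered)
    return waypoints


def centering_correction(u: float, v: float, width: int, height: int,
                         hfov_deg: float, vfov_deg: float,
                         gain: float = 0.5) -> Tuple[float, float]:
    """Return (d_pan, d_tilt) in radians that moves pixel (u, v) toward the image centre."""
    # Focal lengths in pixels, derived from the fields of view (pinhole model).
    fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    fy = (height / 2) / math.tan(math.radians(vfov_deg) / 2)
    angle_x = math.atan2(u - width / 2, fx)   # positive: target is right of centre
    angle_y = math.atan2(v - height / 2, fy)  # positive: target is below centre
    # Assumed conventions: positive pan turns left, positive tilt looks down.
    return (-gain * angle_x, gain * angle_y)
```

For example, `sweep_waypoints([-1.0, -0.5, 0.0, 0.5, 1.0], [-0.3, 0.0, 0.3])` yields 15 waypoints, and calling `centering_correction` repeatedly on the detection centre shrinks the pixel offset by roughly the gain factor on each step.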

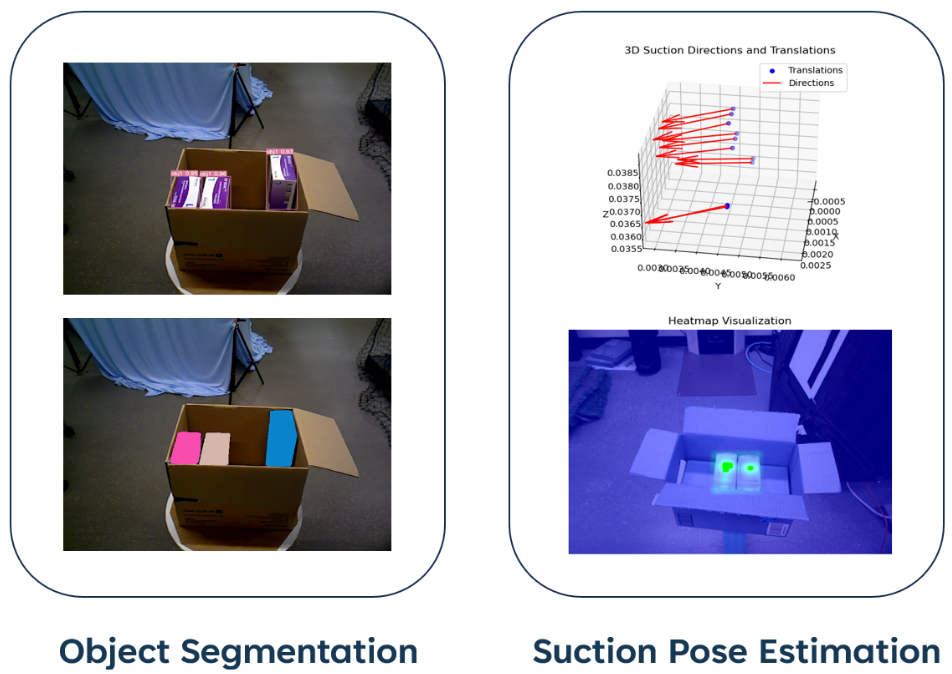

Suction Pose Generation

The generated segmentation masks, along with depth information from the RGB-D sensor, are passed to the Surface Normal Estimation module. This module computes the surface normals of the detected objects by analyzing the geometric properties of the depth data, specifically identifying regions that are flat and perpendicular to the expected suction direction. These flat regions are critical as they ensure a stable and reliable suction attachment for robotic manipulation. The final step involves calculating the suction pose, which includes both position and orientation data optimized for the identified flat spots. This suction pose is relayed back to the Perception Manager, which integrates it into the robot’s task execution pipeline, ensuring precise object grasping and manipulation.
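A simplified version of this computation is sketched below in plain Python. It is an assumption-laden illustration, not the module itself: normals are estimated from central differences of back-projected depth pixels, the suction direction is taken to be the camera's optical axis, and the function names, the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), and the 15-degree tilt limit are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Grid = Sequence[Sequence[float]]


def backproject(u: int, v: int, z: float, fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Pinhole back-projection of pixel (u, v) at depth z into the camera frame."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def surface_normal(depth: Grid, u: int, v: int,
                   fx: float, fy: float, cx: float, cy: float) -> Optional[Vec3]:
    """Unit normal at (u, v) from central differences, oriented toward the camera."""
    neighbours = [(u + 1, v), (u - 1, v), (u, v + 1), (u, v - 1)]
    if any(depth[j][i] <= 0.0 for i, j in neighbours):
        return None  # missing depth reading
    right, left, down, up = (backproject(i, j, depth[j][i], fx, fy, cx, cy)
                             for i, j in neighbours)
    du = [a - b for a, b in zip(right, left)]
    dv = [a - b for a, b in zip(down, up)]
    n = (du[1] * dv[2] - du[2] * dv[1],
         du[2] * dv[0] - du[0] * dv[2],
         du[0] * dv[1] - du[1] * dv[0])
    length = math.sqrt(sum(c * c for c in n))
    if length == 0.0:
        return None
    sign = -1.0 if n[2] > 0 else 1.0  # camera looks along +z, so visible surfaces have n_z < 0
    return (sign * n[0] / length, sign * n[1] / length, sign * n[2] / length)


def select_suction_pose(depth: Grid, mask: Sequence[Sequence[bool]],
                        fx: float, fy: float, cx: float, cy: float,
                        max_tilt_deg: float = 15.0) -> Optional[Tuple[Vec3, Vec3]]:
    """Return (position, normal) of the masked pixel facing the camera most directly, or None."""
    best = None
    for v in range(1, len(depth) - 1):
        for u in range(1, len(depth[0]) - 1):
            if not mask[v][u] or depth[v][u] <= 0.0:
                continue
            n = surface_normal(depth, u, v, fx, fy, cx, cy)
            if n is None:
                continue
            # Angle between the surface normal and the reversed optical axis.
            tilt = math.degrees(math.acos(min(1.0, -n[2])))
            if tilt <= max_tilt_deg and (best is None or tilt < best[0]):
                best = (tilt, backproject(u, v, depth[v][u], fx, fy, cx, cy), n)
    return None if best is None else (best[1], best[2])
```

The returned normal gives the approach axis for the suction cup, and the position gives the contact point; a full implementation would typically also check flatness over a neighborhood rather than at a single pixel.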

Suction Gripper

The suction gripper interface is implemented as a ROS 2 wrapper around the manufacturer-provided API, which communicates with the Jetson platform over the RS-485 protocol. The interface has two primary functions: (a) publishing suction status feedback on a ROS 2 topic, and (b) exposing a ROS 2 service for switching suction on and off. Manual control of the vacuum level is unnecessary, because the built-in controller maintains it autonomously while suction is active. Suction status feedback is obtained by monitoring the vacuum pressure within the gripper. This feedback lets the system confirm that an object has been grasped properly and trigger a fallback behavior if the grasp did not succeed.
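The way pressure feedback can drive a fallback is sketched below. The threshold, the requirement of several consecutive readings, the retry count, and the function names are all assumptions for illustration; the manufacturer API and the actual ROS 2 interfaces are deliberately not reproduced here.

```python
from typing import Callable, Iterable


def grasp_confirmed(vacuum_kpa: Iterable[float], threshold_kpa: float = -40.0,
                    required: int = 3) -> bool:
    """True once `required` consecutive gauge readings are at or below the threshold.

    Readings are gauge pressure in kPa, so a stronger vacuum is more negative.
    """
    streak = 0
    for sample in vacuum_kpa:
        streak = streak + 1 if sample <= threshold_kpa else 0
        if streak >= required:
            return True
    return False


def grasp_with_retry(set_suction: Callable[[bool], None],
                     read_samples: Callable[[], Iterable[float]],
                     attempts: int = 2) -> bool:
    """Switch suction on, check the vacuum, and retry after releasing if the seal failed."""
    for _ in range(attempts):
        set_suction(True)
        if grasp_confirmed(read_samples()):
            return True
        set_suction(False)  # release before the next attempt
    return False
```

Requiring several consecutive readings below the threshold is one simple way to avoid treating a momentary pressure dip as a secure grasp.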

Motion Planning

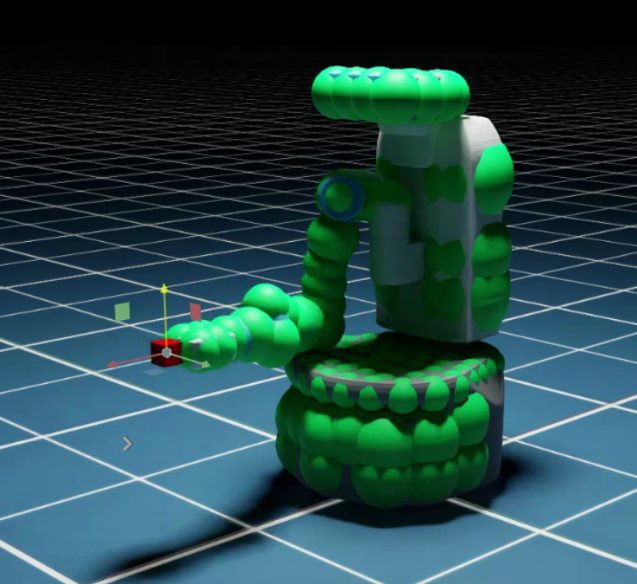

The motion planning subsystem for the Operating Room Bot (ORB) is built around a ROS 2 service node that interfaces with a behavior tree (BT) for high-level task coordination. This node enables the robot to plan and execute trajectories for its manipulator based on the end-effector target pose provided by the BT. When a service request is received, the node computes a collision-free trajectory to move the Fetch robot’s 7-DOF manipulator from its current pose to the specified target pose. The planned trajectory is then published to a ROS 1 manipulator executor node, which executes the trajectory on the Fetch robot, ensuring smooth integration between planning and control.

At the core of the planning system is NVIDIA’s cuRobo, a GPU-accelerated, optimization-based trajectory planner. cuRobo is configured to generate trajectories tailored to specific motion primitives such as “freespace,” “shelf in,” “shelf out,” and others. Each primitive determines whether pose constraints are enabled or disabled, so the planner's behavior can be matched to the task.

For example:

● Shelf In/Out Primitives: Pose constraints ensure a safe and precise approach and retraction for grasping items on shelves.

● Freespace Primitive: Pose constraints are released, allowing for collision-free, rapid transitions between points in open areas.
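The relationship between primitives and pose constraints can be expressed as a small lookup. The sketch below is hypothetical and does not call cuRobo: the `Primitive` enum, `pose_constraints_enabled`, `build_request`, and the request fields are names invented for this example, and only the three primitives named in the text are covered.

```python
from enum import Enum
from typing import Dict, Tuple

# Target end-effector pose as (x, y, z, qx, qy, qz, qw); the layout is an assumption.
Pose = Tuple[float, float, float, float, float, float, float]


class Primitive(Enum):
    FREESPACE = "freespace"
    SHELF_IN = "shelf in"
    SHELF_OUT = "shelf out"


# Primitives that keep pose constraints active during planning.
CONSTRAINED = {Primitive.SHELF_IN, Primitive.SHELF_OUT}


def pose_constraints_enabled(primitive: Primitive) -> bool:
    """Shelf approach and retraction are constrained; freespace moves are not."""
    return primitive in CONSTRAINED


def build_request(primitive_name: str, target_pose: Pose) -> Dict[str, object]:
    """Assemble a planning request; raises ValueError for an unknown primitive name."""
    primitive = Primitive(primitive_name)
    return {
        "primitive": primitive.value,
        "target_pose": target_pose,
        "pose_constrained": pose_constraints_enabled(primitive),
    }
```

Keeping the mapping in one place means that adding a new primitive only requires a new enum member and, if needed, an entry in the constrained set.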

The cuRobo planner is initialized with configuration files that specify the robot’s kinematic and collision models. The system also integrates with an optional NVBlox-based collision environment, which allows the planner to update its collision model dynamically from the Fetch robot’s RGB-D sensor data.