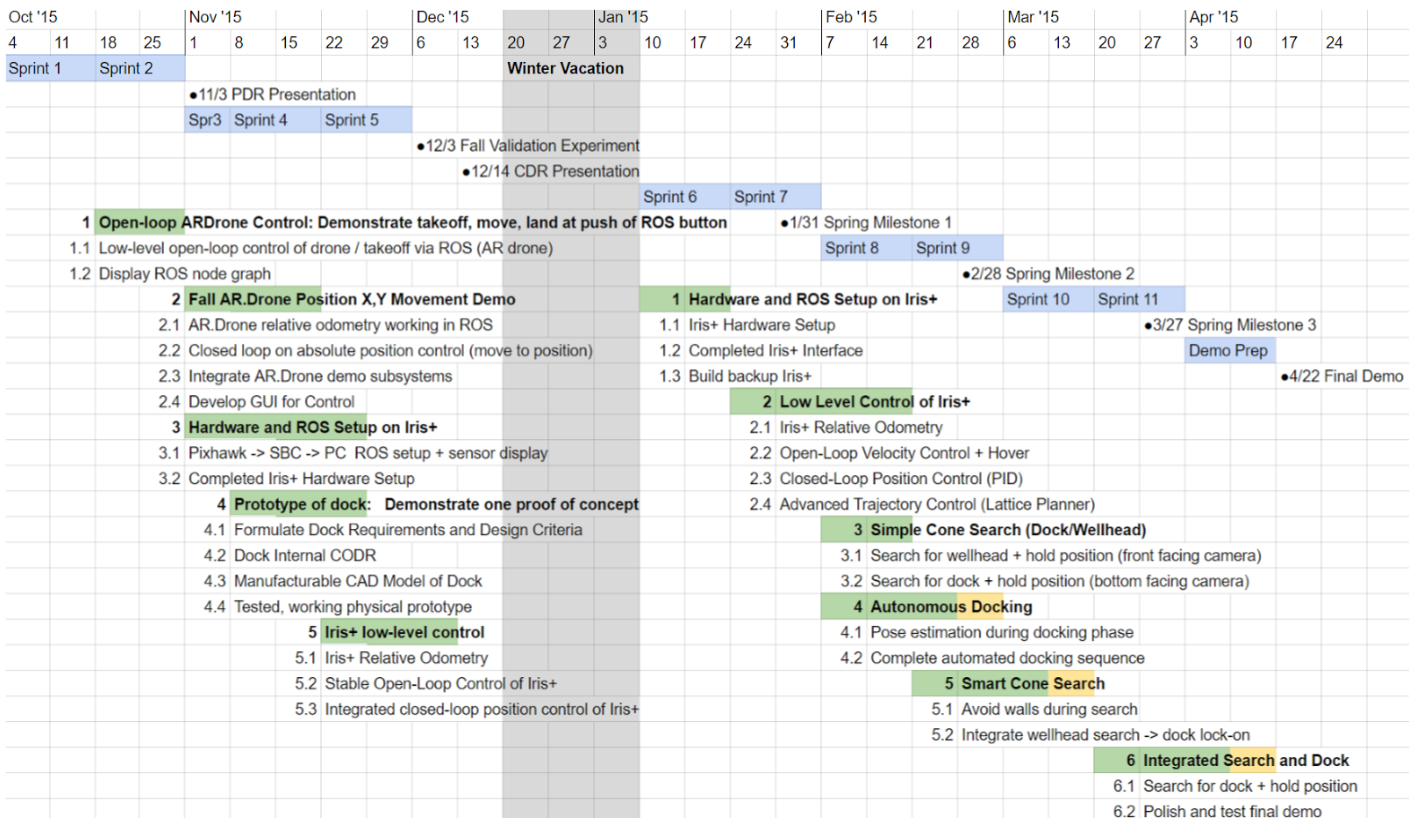

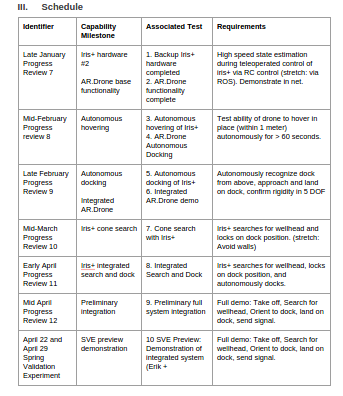

Schedule

Scrum Timeline:

Over the course of the coming year, we will have a total of 11 two-week development sprints.

Detailed planning is broken into two major releases, centered around the two demonstrations in December 2015 and April 2016. Each sprint fits into the overall schedule in the following manner.

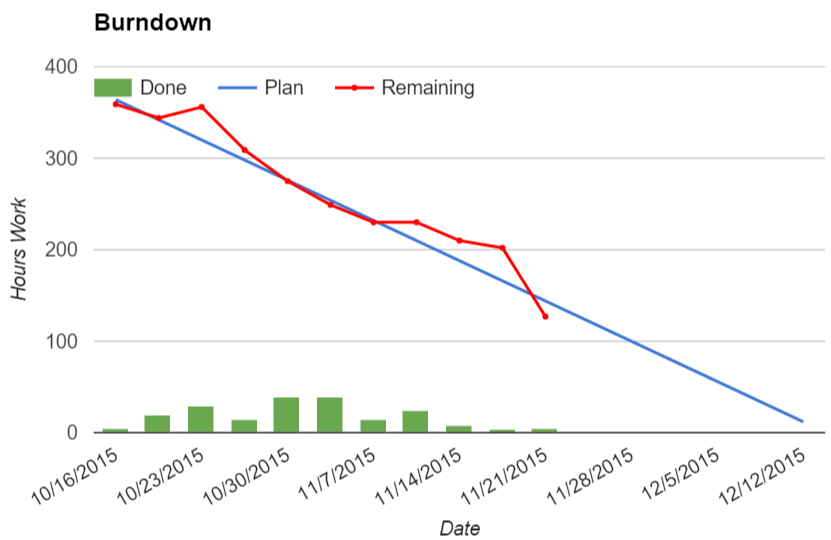

Project progress visualization:

The burndown chart below shows our trajectory with respect to our first release plan, due for completion in December 2015. The plan (blue line) represents an estimated level of focused, productive effort of 10 hours per week per team member.

This first release includes all planned work up until the first Fall demo (see test plan details below).

NOTE: Burndown chart only shown for Fall semester due to focus on implementation for Spring semester.
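As a rough illustration of how a planned burndown line follows from the stated capacity of 10 hours per week per team member, the sketch below computes the planned hours remaining week by week. The team size of four and the 480-hour backlog are assumptions chosen for illustration; neither figure is stated in this section.

```python
def planned_burndown(total_hours, team_size, hours_per_member_per_week=10):
    """Return the planned hours remaining at the end of each week, starting at week 0."""
    weekly_capacity = team_size * hours_per_member_per_week
    if weekly_capacity <= 0:
        raise ValueError("weekly capacity must be positive")
    remaining = [float(total_hours)]
    # Subtract one week of team capacity at a time until no work remains.
    while remaining[-1] > 0:
        remaining.append(max(0.0, remaining[-1] - weekly_capacity))
    return remaining


# Hypothetical inputs: 4 team members and 480 hours of planned work.
plan = planned_burndown(total_hours=480, team_size=4)
print(plan)
```

Plotting the actual hours remaining against this line gives the kind of comparison the burndown chart shows.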

Burndown chart annotations:

- During the week of 10/23, remaining work spiked because several previously missing work items related to the MRSD Project course deliverables were added.

- Work remaining dropped significantly during the week of 11/21 due to the postponement of work related to autonomous control of the Iris+ quadcopter.

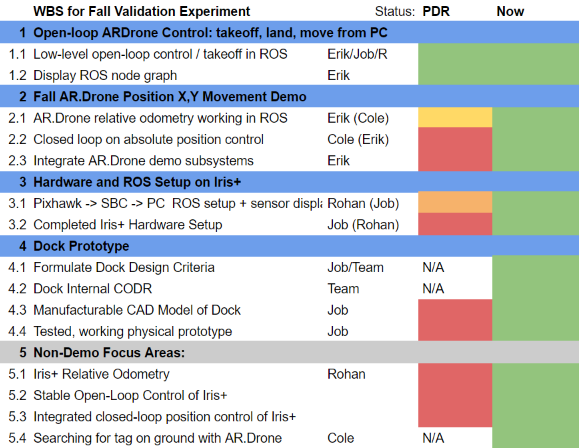

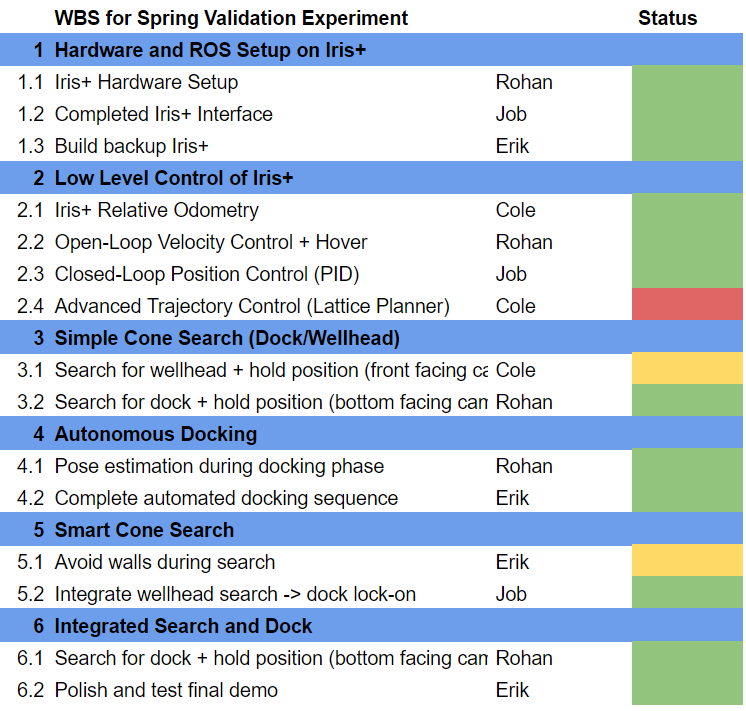

Work Breakdown Structure Summary as of 4/1/2016:

Presenter Schedule:

Fall:

Progress Review 1 – October 22nd – Presenter: Erik Sjoberg

Progress Review 2 – October 29th – Presenter: Cole Gulino

Progress Review 3 – November 12th – Presenter: Rohan Thakker

Progress Review 4 – November 24th – Presenter: Job Bedford

Spring:

Progress Review 7 – January 27th – Presenter: Rohan Thakker

Progress Review 8 – February 8th – Presenter: Erik Sjoberg

Progress Review 9 – February 24th – Presenter: Cole Gulino

Progress Review 10 – March 16th – Presenter: Job Bedford

Progress Review 11 – March 30th – Presenter: Rohan Thakker

Progress Review 12 – April 11th – Presenter: Erik Sjoberg

Test Plan

- Backup Iris+ Drone Hardware

- Objective: The purpose of this test is to demonstrate a second complete, working Iris+ drone with all sensors and hardware integrated.

- Elements: This test will feature the following elements:

- Hardware Subsystem – The complete and working hardware of the Iris+ drone

- ROS Framework – The high-speed integration of our Linux SBC (ROS) and the flight-control firmware running on the Pixhawk microcontroller

- Location: MRSD Lab

- Equipment:

- Iris+ Drone

- Laptop PC

- Personnel:

- Erik Sjoberg – Erik will demonstrate the functioning powered-up hardware

- Procedure:

- Power up the drone from battery and demonstrate blinking lights on the SBC and PX4Flow camera

- Connect to the drone from the PC via the Wi-Fi network

- Connect to ROS master running on Iris+ SBC

- Display high-rate IMU and height sensor readings from Iris+ on PC

- Verification Criteria:

- Iris+ SBC and PX4Flow sensor power up successfully

- Able to connect to Iris+ SBC over wireless from PC

- Able to see IMU data at over 150 Hz via rostopic hz

- Able to see appropriate depth readings from sonar sensor via rostopic echo
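The 150 Hz criterion can also be checked offline from logged message timestamps. The sketch below computes an average rate from a list of timestamps, which is the same kind of figure that rostopic hz reports. It is plain Python and does not use ROS; the function names and the sample data are hypothetical.

```python
def mean_rate_hz(stamps):
    """Average message rate in Hz from a list of increasing timestamps in seconds."""
    if len(stamps) < 2:
        raise ValueError("need at least two timestamps")
    duration = stamps[-1] - stamps[0]
    if duration <= 0:
        raise ValueError("timestamps must be increasing")
    # N timestamps span N - 1 message intervals.
    return (len(stamps) - 1) / duration


def imu_rate_ok(stamps, min_hz=150.0):
    """True if the average rate exceeds the verification threshold."""
    return mean_rate_hz(stamps) > min_hz


# Hypothetical data: 401 IMU timestamps spaced 5 ms apart (about 200 Hz).
stamps_200hz = [i * 0.005 for i in range(401)]
print(imu_rate_ok(stamps_200hz))
```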

- AR.Drone Functionality Complete

- Objective: Showcase ability to command drone and detect April Tag

- Elements: This test will feature the following elements:

- Hardware Subsystem – AR.Drone

- ROS Framework – The high-speed integration of our Linux SBC (ROS) and the flight-control firmware running on the Pixhawk microcontroller

- Location: NSH B-level

- Equipment:

- AR.Drone

- Laptop PC

- April Tag

- Personnel:

- Job Bedford – Job will demonstrate the AR.Drone functionality

- Procedure:

- Initiate drone setup: packages, drivers, communication, and physical placement

- Take off with drone, via mover node

- Showcase planar movement with commands to drone.

- Showcase April Tag detection pose and orientation estimates

- Verification Criteria:

- Drone should take off on the mover node command

- Drone should obey all flight commands

- The ROS node should provide accurate estimates of tag locations, within a 1 m error margin.
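One way to evaluate the 1 m error margin is to compare each estimated tag position against a hand-measured reference. The following is a minimal sketch under that assumption; the function names and coordinates are hypothetical and are not part of the team's ROS node.

```python
import math


def position_error_m(estimated, ground_truth):
    """Euclidean distance in meters between two (x, y, z) positions."""
    return math.sqrt(sum((e - g) ** 2 for e, g in zip(estimated, ground_truth)))


def tag_estimate_ok(estimated, ground_truth, max_error_m=1.0):
    """True if the estimate lies within the allowed error margin."""
    return position_error_m(estimated, ground_truth) <= max_error_m


# Hypothetical measurement: tag estimated 0.2 m away from its measured location.
print(tag_estimate_ok((1.2, 0.5, 1.0), (1.0, 0.5, 1.0)))
```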

- Autonomous hovering with Iris+

- Objective: The purpose of this test is to demonstrate the ability to autonomously hover with the IRIS+. This will help us in implementing the cone search and docking states.

- Elements: This test will feature the following elements:

- Local Planning subsystem – to test the implementation of the “Hover In Plane” unit of the subsystem

- World Modelling subsystem – to test the implementation of the “Pose Estimation” unit of the subsystem

- Location: NSH B-Level Basement

- Equipment:

- Iris+ Drone

- Laptop

- RC remote of IRIS+ Drone

- Personnel:

- Rohan Thakker – Rohan will be the operator who is required to run the commands for the drone.

- Erik Sjoberg – Erik will manually control the drone using the RC remote

- Procedure:

- Cordon off the area in the B-Level basement for safety.

- Under manual control, take off with the drone and move into desired initial position and orientation.

- Shift to autonomous control and run the command to move the IRIS+ drone along a square trajectory.

- Observe as the Iris+ autonomously navigates along the predefined trajectory.

- Shift to manual control and land the robot

- Verification Criteria:

- Iris+ was able to autonomously navigate the reference trajectory with less than ±2 m of tracking error.
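The tracking-error criterion could be evaluated from logged positions as the largest distance between any logged point and the reference square. The sketch below measures cross-track distance only (nearest point on the path, ignoring timing), which is a simplification. The 4 m square and the logged points are hypothetical.

```python
import math


def point_to_segment(p, a, b):
    """Shortest distance from 2D point p to the segment from a to b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment and clamp the projection to its endpoints.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def max_tracking_error(path, corners):
    """Largest distance from any logged position to the closed reference polygon."""
    edges = list(zip(corners, corners[1:] + corners[:1]))
    return max(min(point_to_segment(p, a, b) for a, b in edges) for p in path)


# Hypothetical 4 m square reference and a few logged (x, y) positions.
square = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
logged = [(0.0, 0.5), (2.0, -0.5), (4.5, 2.0), (2.0, 5.5)]
print(max_tracking_error(logged, square) < 2.0)
```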

- AR.Drone Autonomous Docking

- Objective: Showcase the AR.Drone's ability to land and dock using April Tag detection.

- Elements: This test will feature the following elements:

- Hardware Subsystem – AR.Drone

- April tags

- Location: NSH B-level

- Equipment:

- AR.Drone

- Laptop PC

- April Tag

- Personnel:

- Job Bedford – Job will demo the AR.Drone autonomous docking

- Procedure:

- Initiate drone setup: packages, drivers, communication, and physical placement

- Job will command the drone to take off and position it above the April Tags with an offset

- Job will initiate the sequence

- Drone will track and position itself above tag.

- Drone will descend and land within designated marker

- Verification Criteria:

- Drone should track and position itself above tag

- Drone should descend and land in area of April Tag, with landing gear in markers

- Marker will denote the level of accuracy in docking.

- Autonomous docking with Iris+

- Objective: The purpose of this test is to demonstrate the ability to autonomously dock with the IRIS+.

- Elements: This test will feature the following elements:

- Local Planning subsystem – to test the implementation of the “Land” unit of the subsystem

- World Modelling subsystem – to test the implementation of the “Pose Estimation” unit of the subsystem using the APRIL Tag

- Tactical Planning – to test the implementation of “Attempt Docking” unit of the subsystem.

- Location: NSH B-Level Basement

- Equipment:

- Iris+ Drone

- Docking station with APRIL Tag

- Laptop

- RC remote of IRIS+ Drone

- Personnel:

- Rohan Thakker – Rohan will be the operator who is required to run the commands for the drone.

- Erik Sjoberg – Manually control the drone using the RC remote

- Procedure:

- Cordon off the area in the B-Level basement for safety.

- Place the docking station in the center of the area

- Under manual control, take off with the drone and move to a position above the docking station such that the APRIL Tag is in the field of view of the downward facing camera

- Shift to autonomous control and run the command to dock the drone

- Observe the IRIS+ attempt landing

- Repeat from step (iii.) three more times

- Verification Criteria:

- IRIS+ successfully landed in the center of the funnels located on the docking station in more than one out of four attempts

- Integrated AR.Drone Demo

- Objective: Demo the AR.Drone's ability to complete the SVE tasks. Merge the tasks into a continuous demo of takeoff, Tornado Search, Wellhead Alignment, Dock Alignment and Docking. This will complete our risk mitigation for the drone backup plan.

- Elements: This test will feature the following elements:

- Hardware Subsystem – AR.Drone

- April tags

- Location: NSH B-level

- Equipment:

- AR.Drone

- Laptop PC

- April Tag

- Wellhead mock up

- Dock marker

- Personnel:

- Job Bedford – Job will demo the AR.Drone integrated demo

- Procedure:

- Initiate drone setup: packages, drivers, communication, and physical placement.

- Job will command drone to take off and position it in initial starting area.

- Job will initiate the sequence.

- Drone will proceed to tornado search staying within 7 meter demo area, until it detects wellhead marker.

- Drone will identify wellhead and align itself 1 meter in front of wellhead.

- Drone will then identify and align with dock.

- Drone will then descend and dock on dock.

- Verification Criteria:

- Drone performs tornado search within the 7 meter square area.

- Drone identifies and aligns itself 1 meter in front of wellhead.

- Drone descends and docks on dock.

- Drone landing gear will be within circular markers.
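Two of these criteria lend themselves to a simple numeric check: staying inside the 7 meter square demo area, and aligning 1 meter in front of the wellhead. The sketch below assumes an axis-aligned area centered on the origin and a wellhead whose "front" is given by a yaw angle. Both conventions, and all names, are assumptions for illustration.

```python
import math


def within_square(positions, side_m=7.0, center=(0.0, 0.0)):
    """True if every (x, y) position lies inside the axis-aligned square area."""
    half = side_m / 2.0
    cx, cy = center
    return all(abs(x - cx) <= half and abs(y - cy) <= half for x, y in positions)


def standoff_point(wellhead_xy, wellhead_yaw_rad, distance_m=1.0):
    """Target point distance_m in front of the wellhead, along its facing direction."""
    wx, wy = wellhead_xy
    return (wx + distance_m * math.cos(wellhead_yaw_rad),
            wy + distance_m * math.sin(wellhead_yaw_rad))


# Hypothetical search log and wellhead pose.
search_log = [(0.0, 0.0), (1.5, -2.0), (3.4, -3.4)]
print(within_square(search_log))
print(standoff_point((2.0, 1.0), 0.0))
```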

- Cone Search with Iris+

- Objective: The purpose of this test is to showcase the system integration for two of the major functional elements of the project: “Search for and Approach Wellhead” and “Align Self with Wellhead”. The integration of these two functional areas is highly critical for the success of the project. By showcasing the integration of the subsystems involved with these two functional elements, we will showcase that two-thirds of the major system functionality has been fully implemented and integrated.

- Elements: This test will feature the following elements:

- Vision subsystem – In order to recognize and lock on dock position, we must showcase that the vision system is able to recognize tags and get position estimates from them.

- Control subsystem – In order to send waypoint information for the predefined cone-search path, we must have accurate position control in order to have waypoint following.

- Navigation system – The entire navigation system will be tested for integration effectiveness. The subsystems involved: vision, sensor fusion, position control, localization, etc.

- Autonomous position locking – The system must be able to lock around a position in order to hover over the dock in preparation for landing. This integration test covers the same subsystems of the navigation system.

- Location: NSH B-Level Basement

- Equipment:

- Iris+ Drone

- Wellhead infrastructure with identifying tag

- Personnel:

- Cole Gulino – will be the operator who is required to run the commands for the drone.

- Erik Sjoberg – will be the backup operator for manual control of the Iris+ in case of emergency.

- Procedure:

- Cordon off the area in the B-Level basement for safety.

- Under manual control, take off with the drone and move into desired initial position and orientation.

- Run the command to commence the cone-search.

- Observe as the Iris+ autonomously navigates the predefined cone-search trajectory until it has located the wellhead and locked on the position of the dock next to it.

- Verify that the Iris+ has position lock with the dock within the tolerance specified in the Verification Criteria.

- Verification Criteria:

- Iris+ has completed its cone-search maneuver.

- Iris+ is hovering over the center of the tag on the wellhead, within a tolerance of 0.5 m in any direction.

- Integrate the subsystems

- Objective: By showcasing the integration of the subsystems involved with these three functional elements, we will showcase that the integration for all three functional areas has been completed in the simplest case.

- Elements: This test will feature the following elements:

- Vision subsystem – In order to recognize and lock on dock position, we must showcase that the vision system is able to recognize tags and get position estimates from them.

- Control subsystem – In order to send waypoint information for the predefined cone-search path, we must have accurate position control in order to have waypoint following.

- Navigation system – The entire navigation system will be tested for integration effectiveness. The subsystems involved: vision, sensor fusion, position control, localization, etc.

- Autonomous position locking – The system must be able to lock around a position in order to hover over the dock in preparation for landing. This integration test covers the same subsystems of the navigation system.

- Location: NSH B-Level Basement

- Equipment:

- Iris+ Drone

- Wellhead infrastructure with identifying tag

- Dock infrastructure with identifying tag

- Personnel:

- Cole Gulino – will be the operator who is required to run the commands for the drone.

- Erik Sjoberg – will be the backup operator for manual control of the Iris+ in case of emergency.

- Procedure:

- Cordon off the area in the B-Level basement for safety.

- Under manual control, take off with the drone and move into desired initial position and orientation.

- Run the command to commence the cone-search.

- Observe as the Iris+ autonomously navigates the predefined cone-search trajectory until it has located the wellhead and locked on the position of the dock next to it.

- Verify that the Iris+ has position lock with the dock within the tolerance specified in the Verification Criteria.

- Verify that the Iris+ has successfully landed on the docking infrastructure.

- Verification Criteria:

- Iris+ has completed its cone-search maneuver.

- Iris+ is hovering over the center of the tag on the wellhead, within a tolerance of 0.5 m in any direction.

- Iris+ has docked with 5 DOF.

- Preliminary full system integration

- Objective: The purpose of this test is to prepare a simplified version of our complete SVE. This will force us to complete the integration of our various sub-systems in preparation for the final demonstration. This demonstration will not have the polish of our final SVE, however it should contain each of the major required elements in more-or-less working order. Some minor intervention or restarts may be required, but will be allowed.

- Elements: This test will feature the following elements:

- Vision subsystem – In order to recognize and lock on dock position, we must showcase that the vision system is able to recognize tags and get position estimates from them.

- Control subsystem – In order to send waypoint information for the predefined cone-search path, we must have accurate position control in order to have waypoint following.

- Navigation system – The entire navigation system will be tested for integration effectiveness.

- Autonomous position locking – The system must be able to lock around a position in order to hover over the dock in preparation for landing.

- Autonomous docking – The system will complete the full docking procedure.

- Location: NSH B-Level Basement

- Equipment:

- Iris+ Drone

- Wellhead infrastructure with identifying tag

- Dock infrastructure with identifying tag

- Warning tape

- Personnel:

- Erik Sjoberg – Erik will be the operator who is required to run the commands for the drone.

- Procedure:

- Cordon off section of hallway

- Place wellhead at one corner of search area and dock 1m in front of the wellhead

- Place Iris+ on ground at opposite corner of search area facing wellhead within +/- 5 degrees

- Hit START button on PC to initiate sequence

- Confirm Iris+ lifts off and begins searching for wellhead (marker)

- Confirm Iris+ arrives within 3 meter radius of wellhead

- Confirm Iris+ orients above dock in pre-docking position (within 1 meter of dock)

- Confirm Iris+ successfully lands in dock, constrained in 5 DOF

- Restart from procedure iii. if unsuccessful

- Verification Criteria:

- Iris+ autonomously takes off from ground

- Iris+ arrives within 3 meter radius of wellhead

- Dock with docking station, constrained in 5 DOF

- Multiple attempts along with some manual intervention will be allowed
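The placement step in the procedure above requires the Iris+ to face the wellhead within +/- 5 degrees. A minimal sketch of that check follows, assuming planar (x, y) positions and a yaw angle in degrees measured counterclockwise from the x axis. These conventions and the function names are assumptions.

```python
import math


def heading_error_deg(drone_xy, drone_yaw_deg, target_xy):
    """Signed angle from the drone's heading to the target bearing, in [-180, 180)."""
    bearing = math.degrees(math.atan2(target_xy[1] - drone_xy[1],
                                      target_xy[0] - drone_xy[0]))
    # Wrap the difference so that headings near +/-180 degrees compare correctly.
    return (bearing - drone_yaw_deg + 180.0) % 360.0 - 180.0


def placement_ok(drone_xy, drone_yaw_deg, target_xy, tol_deg=5.0):
    """True if the drone faces the target within the allowed tolerance."""
    return abs(heading_error_deg(drone_xy, drone_yaw_deg, target_xy)) <= tol_deg


# Hypothetical layout: drone at the origin, wellhead at the opposite corner.
print(placement_ok((0.0, 0.0), 42.0, (5.0, 5.0)))
```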

- SVE Preview Demonstration

- Objective: The purpose of this test is to prepare a more-or-less complete version of our SVE. This will be the first demonstration which requires a fully working system without manual intervention. Multiple restarts may be required, but the system must work autonomously.

- Elements: This test will feature the following elements:

- Vision subsystem – In order to recognize and lock on dock position, we must showcase that the vision system is able to recognize tags and get position estimates from them.

- Control subsystem – In order to send waypoint information for the predefined cone-search path, we must have accurate position control in order to have waypoint following.

- Navigation system – The entire navigation system will be tested for integration effectiveness.

- Autonomous position locking – The system must be able to lock around a position in order to hover over the dock in preparation for landing.

- Autonomous docking – The system will complete the full docking procedure.

- Location: NSH B-Level Basement

- Equipment:

- Iris+ Drone

- Wellhead infrastructure with identifying tag

- Dock infrastructure with identifying tag

- Warning tape

- Personnel:

- Cole Gulino – will be the operator who is required to run the commands for the drone.

- Erik Sjoberg – will be a standby operator to manually control drone in an emergency situation

- Procedure:

- Cordon off section of hallway

- Place wellhead at one corner of search area and dock 1m in front of the wellhead

- Place Iris+ on ground at opposite corner of search area facing wellhead within +/- 5 degrees

- Hit START button on PC to initiate sequence

- Confirm Iris+ lifts off and begins searching for wellhead (marker)

- Confirm Iris+ arrives within 3 meter radius of wellhead

- Confirm Iris+ orients above dock in pre-docking position (within 1 meter of dock)

- Confirm Iris+ successfully lands in dock, constrained in 5 DOF

- Restart from procedure iii. if unsuccessful

- Verification Criteria:

- Iris+ autonomously takes off from ground

- Iris+ arrives within 3 meter radius of wellhead

- Dock with docking station, constrained in 5 DOF

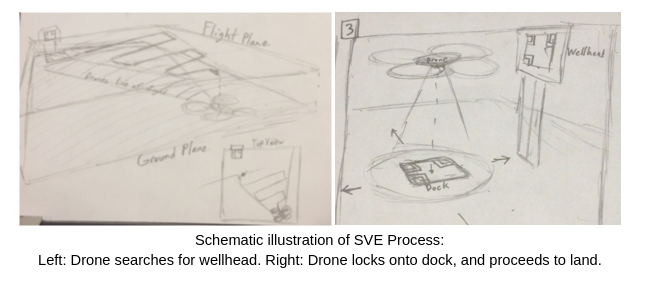

Spring Validation Experiment

Needed Equipment: Iris+ with hardware, wellhead, dock, caution tape, blast shields

Operational Area: 25 m² in the B-Level Basement

Test Process:

- Cordon off section of hallway and place blast shields to protect spectators

- Place wellhead at one corner of search area and dock 1m in front of the wellhead

- Place Iris+ on ground at opposite corner of search area facing wellhead within +/- 5 degrees

- Hit START button on PC to initiate sequence

- Confirm Iris+ lifts off and begins searching for wellhead (marker)

- Confirm Iris+ arrives within 3 meter radius of wellhead

- Confirm Iris+ orients above dock in pre-docking position (within 1 meter of dock)

- Confirm Iris+ successfully lands in dock, constrained in 5 DOF

Success Conditions:

Mandatory:

- Iris+ autonomously takes off from ground

- Iris+ arrives within 3 meter radius of wellhead

- Dock with docking station, constrained in 5 DOF

Desired:

- Dock constrains the drone in 6 DOF

- Successfully avoid obstacles
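The success conditions can be expressed as a small pass/fail evaluation over the results of one run. The sketch below is one possible encoding; the `RunResult` fields are hypothetical names for quantities an observer would record, and treating the desired conditions as requiring the mandatory ones is an assumption.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    took_off_autonomously: bool
    min_distance_to_wellhead_m: float  # closest approach to the wellhead
    constrained_dof: int               # degrees of freedom constrained after docking
    avoided_obstacles: bool = False


def evaluate_sve(run):
    """Return whether the mandatory and desired success conditions were met."""
    mandatory = (run.took_off_autonomously
                 and run.min_distance_to_wellhead_m <= 3.0
                 and run.constrained_dof >= 5)
    desired = mandatory and run.constrained_dof >= 6 and run.avoided_obstacles
    return {"mandatory": mandatory, "desired": desired}


# Hypothetical run: autonomous takeoff, 1.2 m closest approach, docked in 5 DOF.
result = evaluate_sve(RunResult(True, 1.2, 5))
print(result)
```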

Parts List

| Date Requested | Part No. | Part Name | Quantity | Total Price | Website Link |
|---|---|---|---|---|---|
| 10/15/2015 | | 3DR IRIS+ Quadcopter | 1 | $599.99 | http://store.3drobotics.com/products/iris |
| 10/15/2015 | | 3DR IRIS+ Propellers | 4 | $39.96 | http://store.3drobotics.com/products/iris-plus-propellers |
| 10/10/2015 | 595-MINNOWMAX-DUAL | MINNOWBOARD-MAX-DUAL | 1 | $145.95 | http://www.mouser.com/ProductDetail/CircuitCo/MINNOWBOARD-MAX-DUAL/?qs=sGAEpiMZZMs9lZI8ah3py%2f9KKP2eiFfZsbAbf7dOFFRyaqaqEqmd8g%3d%3d |
| 10/25/2015 | | Odroid XU-4 Board | 1 | $83.00 | http://ameridroid.com/products/odroid-xu4 |
| 11/5/2015 | | PX4Flow | 1 | $149.00 | http://store.3drobotics.com/products/px4flow |
| 11/5/2015 | | Iris+ Battery | 2 | $80.00 | https://store.3drobotics.com/products/iris-plus-battery |
| 11/18/2015 | | NicaDrone Permanent Magnet | 2 | $90.00 | http://nicadrone.com/index.php?id_product=59&controller=product |
| 12/14/2015 | | 3DR IRIS+ #2 | 1 | $599.99 | http://store.3drobotics.com/products/iris |
| 12/14/2015 | | PX4Flow #2 | 1 | $149.00 | http://store.3drobotics.com/products/px4flow |
| 12/14/2015 | | Asus Xtion Pro Live | 1 | $329.99 | http://www.ebay.com/itm/NEW-ASUS-Xtion-PRO-LIVE-B-U-RGB-and-Depth-Sensor-/291637793920?hash=item43e6f79480:g:fJwAAOSwxN5WbQnp |
| 10/25/2015 | | Odroid XU-4 Board | 1 | $83.00 | http://ameridroid.com/products/odroid-xu4 |
| 1/19/2016 | | Intel RealSense Camera | 1 | $99.00 | http://click.intel.com/intel-realsense-developer-kit-r200.html |

Total estimated cost: $3170.52

Remaining budget: $829.48
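As a quick arithmetic check, the two figures above imply an overall budget of $4000.00. The sketch below reproduces that sum with exact decimal arithmetic and also totals the prices of the rows listed in the parts table. The listed rows come to less than the stated total, and this section does not itemize the difference.

```python
from decimal import Decimal

total_cost = Decimal("3170.52")   # stated total estimated cost
remaining = Decimal("829.48")     # stated remaining budget
implied_budget = total_cost + remaining

# Total Price column of the parts table, in row order.
line_items = [Decimal(p) for p in (
    "599.99", "39.96", "145.95", "83.00", "149.00", "80.00",
    "90.00", "599.99", "149.00", "329.99", "83.00", "99.00",
)]
listed_subtotal = sum(line_items, Decimal("0"))

print(implied_budget)    # 4000.00
print(listed_subtotal)   # 2448.88
```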

Issues Log

Issues are maintained on the GitHub page:

Column Repository: Column Repository Issues