Our whole fusion algorithm is based on the fixed nature of the infrastructure. Thus, we can treat all the points subtracted from background as true foreground. However, due to the lack of context information, there are chances that multiple pedestrians would be classified as a single pedestrian. This false detection will affect the performance of tracking and trajectory prediction in later stages. This is the reason why we need RGB image to separate those wrongly clustered points at least within the camera field of view.

The LiDAR 3D bounding box will be projected into camera frame with extrinsic and intrinsic matrices given by calibration results. Simultaneously, image 2D bounding box would be generated from either state-of-the-art deep learning detection network. For each overlapping pair of LiDAR and RGB bounding boxes, we recluster all the point cloud data into K groups, with K equals to number of RGB bounding box.

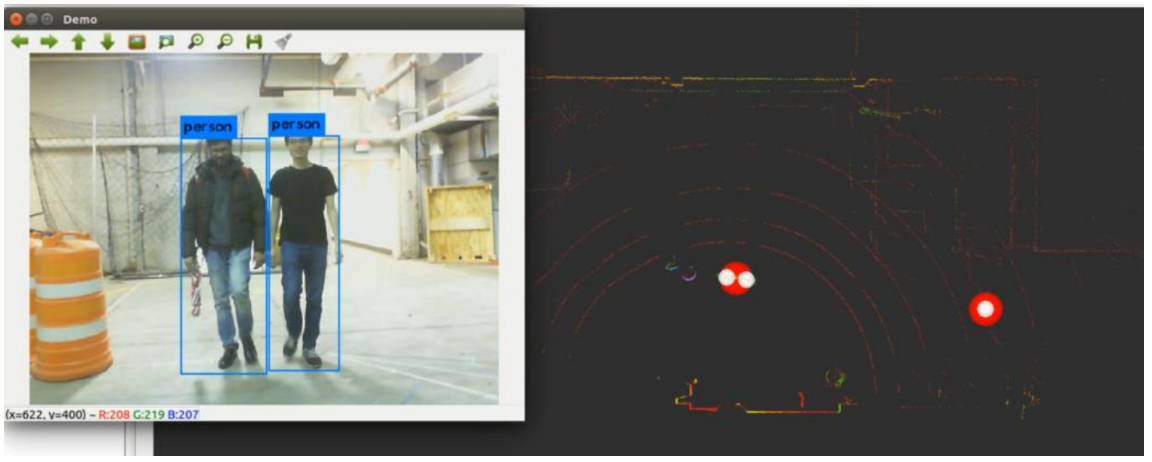

The fusion results are visualized below. There are 2 pedestrians walking within camera frame. Since they are too closed to each other, LiDAR clustering node will treat them as a single pedestrian as indicated by the red point. However, after fused the detection result from YOLO network, the system can accurately detect each of them correctly as shown by the white points.

Example image showing the effectiveness of the fusion algorithm. Whereas the LiDAR-based detection detects a single pedestrian, the fusion algorithm is able to detect two pedestrians.