The manipulation subsystem operates customized tools to perform tomato flower pollination and tomato cluster harvesting. Goals for the spring semester include perceiving ArUco markers and publishing their coordinates in the robot's spatial frame. The robot then uses these coordinates to compute the desired joint values through inverse kinematics (IK) and actuates its lift and telescopic arm to move the end-effector to the desired location. Once the end-effector is in place, harvesting or pollination takes place.

February Progress Update

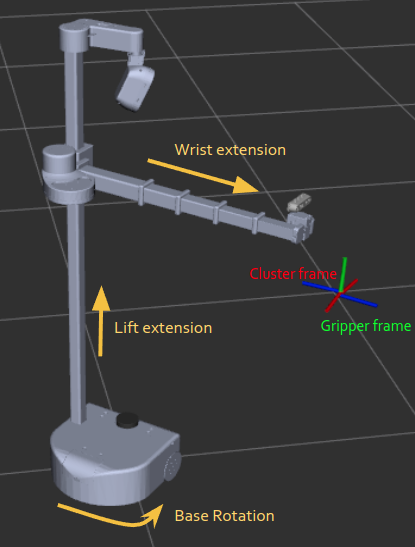

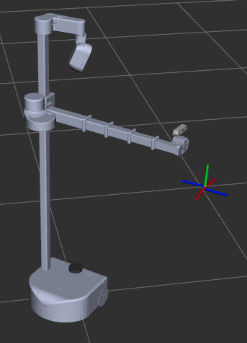

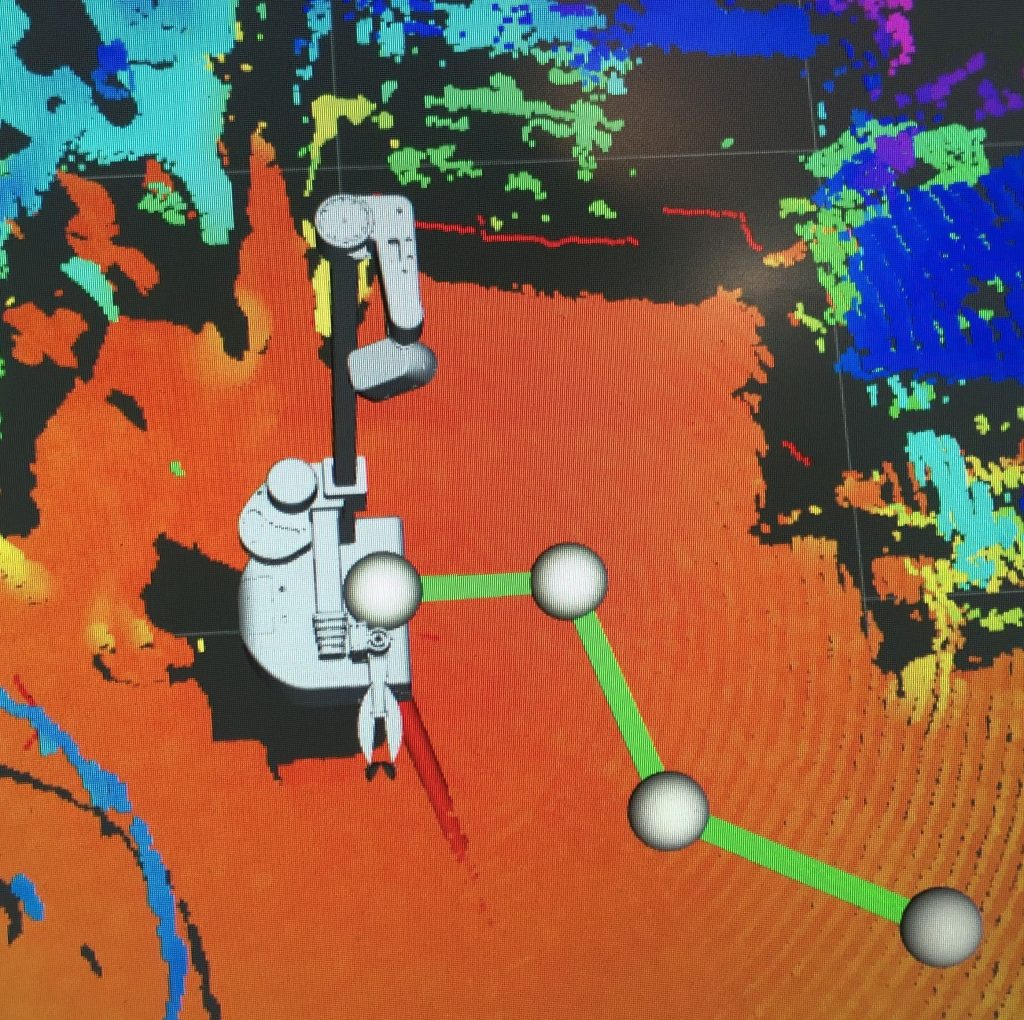

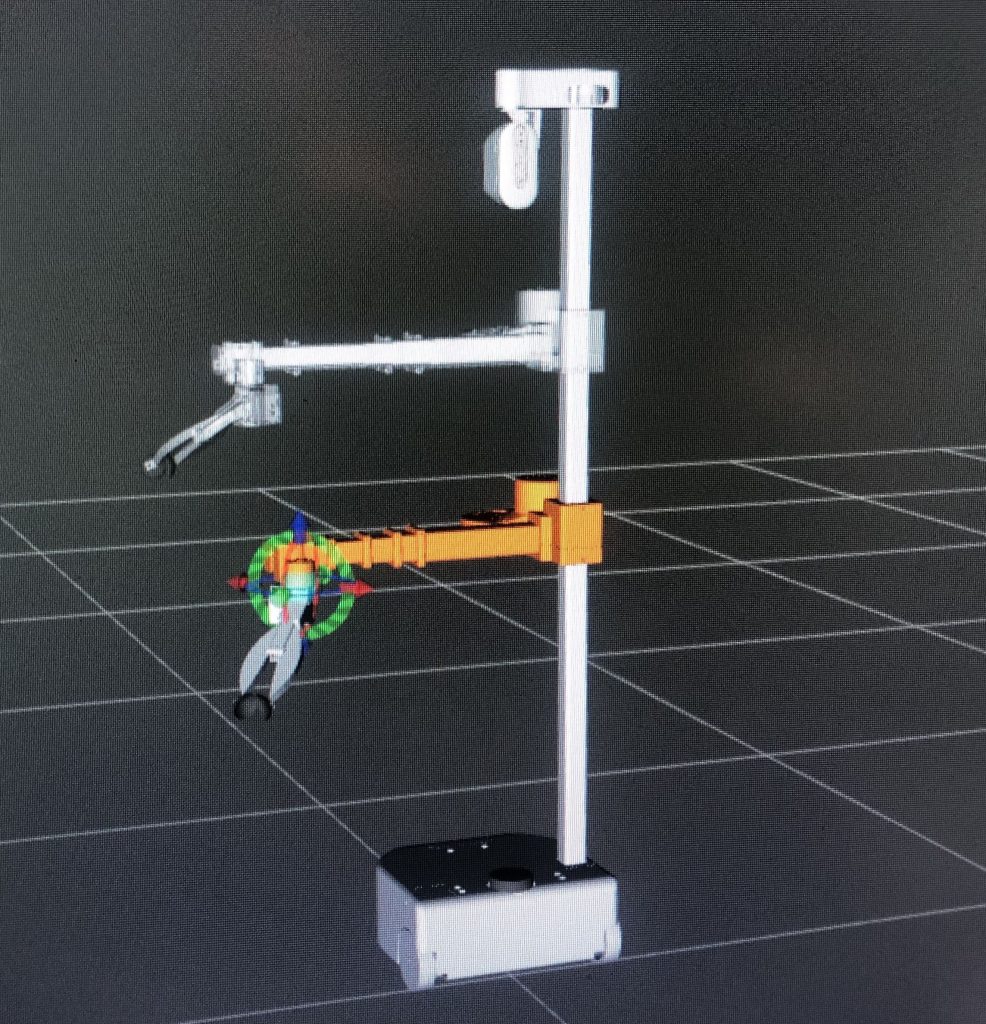

At present, the MoveIt and Stretch FUNMAP packages are being explored for simple manipulation tasks. Figure 1 shows trajectory planning in Stretch FUNMAP and Figure 2 shows testing of goal poses in MoveIt. Tests have been conducted to actuate the lift and arm to desired positions using IK scripts. The perception subsystem has been decoupled from manipulation through the use of ArUco markers. Integration of the harvester tool with the Hello Robot Stretch has begun, and the tool motors are being calibrated on the robot.

March Progress Update

After progress review 2, our team realized that implementing an inverse kinematics solution with the Stretch_body API, the set of Python packages that interact with the Stretch RE1 hardware, was a redundant task. During spring break, the manipulation team therefore set a mitigation plan: study the Stretch ROS packages and implement inverse kinematics with them.

The manipulation subsystem subscribes to the ArUco marker topic, which provides the marker's translation and orientation as viewed in the robot's base_link frame. TF2 is then used to measure the positional offset between base_link and the end of the fully retracted telescopic arm link at its lowest lift height. This offset is added to the marker's position vector in base_link to obtain the actual displacement between the end of the arm and the ArUco marker.
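The offset step above can be sketched in plain Python. This is a minimal illustration, not the team's code: `Vec3`, `marker_relative_to_arm_end`, and all numeric values are hypothetical, and the offset is assumed to be the translation of base_link expressed relative to the retracted arm end, so that adding it matches the description above. On the robot, the offset would come from a TF2 lookup rather than a constant.

```python
from typing import NamedTuple


class Vec3(NamedTuple):
    """A 3D translation in metres (hypothetical helper type)."""
    x: float
    y: float
    z: float


def marker_relative_to_arm_end(marker_in_base: Vec3, base_offset: Vec3) -> Vec3:
    """Add the base_link-to-arm-end offset to the marker position.

    marker_in_base: marker translation as seen from base_link.
    base_offset: translation of base_link relative to the fully retracted
        arm end at the lowest lift height (assumed sign convention).
    """
    return Vec3(
        marker_in_base.x + base_offset.x,
        marker_in_base.y + base_offset.y,
        marker_in_base.z + base_offset.z,
    )


# Illustrative numbers only; none of these come from the robot.
marker = Vec3(0.10, -0.60, 0.90)
offset = Vec3(0.00, 0.30, -0.20)
displacement = marker_relative_to_arm_end(marker, offset)
print(displacement)
```

Because both vectors are expressed along the base_link axes, no rotation is needed in this sketch; only the translations are combined.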

Because of the robot's Cartesian configuration and prismatic joints, the calculated displacement equals the joint movement required. The manipulation system passes the displacement to the move_to_pose() function provided in the Stretch ROS package, which actuates the lift joint and arm joint by the input values. However, the robot still required some calibration to reach the desired position accurately.
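As a rough sketch of why the displacement maps directly onto joint commands, the snippet below turns a displacement into a pose dictionary of the kind move_to_pose() accepts. Several things here are assumptions rather than facts from this project: the joint names `joint_lift` and `wrist_extension`, the arm extending along the negative y axis of base_link, and the joint limits, which are placeholder values. The function `displacement_to_pose` is hypothetical.

```python
# Placeholder joint limits in metres (assumed, not measured on the robot).
LIFT_RANGE_M = (0.0, 1.1)
ARM_RANGE_M = (0.0, 0.5)


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def displacement_to_pose(dy: float, dz: float) -> dict:
    """Map an arm-end-to-marker displacement onto prismatic joint targets.

    dy: displacement along base_link y; the arm is assumed to extend
        along negative y, hence the sign flip.
    dz: vertical displacement, taken up entirely by the lift joint.
    """
    return {
        "joint_lift": clamp(dz, *LIFT_RANGE_M),
        "wrist_extension": clamp(-dy, *ARM_RANGE_M),
    }


# Illustrative displacement: 0.3 m outward along the arm, 0.7 m up.
pose = displacement_to_pose(dy=-0.3, dz=0.7)
print(pose)
```

Since the offset is measured with the arm fully retracted at its lowest lift height, the displacement can be read as a joint target measured from each joint's zero position. Clamping is added here only as a guard against out-of-range commands.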

The manipulation team therefore calibrated directly on the robot to find the offset values that place the arm at the desired position. Yet this prompted us to consider the integration perspective, since different tools with different URDF files will each need corresponding offset values. Inverse kinematics tracking of fiducial markers was successful.

April Progress Update

As the basic IK algorithm was completed in PR3, the manipulation team worked on extracting a fixed coordinate from the continuously published ArUco coordinates. The team stored the first several poses in a list and averaged them into a single pose. Next, the team added a flag variable to stop the robot from calling the IK function again after the arm has reached the desired point. The team then wrote a function in which the head camera perceives the assumed bounding box and the arm is actuated toward it, keeping an offset distance between the point of interaction (POI) and the wrist camera so that the wrist camera has enough field of view. A second function then makes the arm reach out to the POI using the wrist camera. While exploring how to send a fixed coordinate to the actuation function and how to stop the actuation function from looping, our team gained many insights that we believe will be useful during the Fall semester, when we will stop using ArUco markers and rely entirely on perceiving the fruits directly.
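The averaging and flag logic described above might look roughly like the following. This is a hedged sketch with hypothetical names (`TargetLatch`, `on_marker`, `samples_needed`), not the team's implementation. It averages only the translation part of each pose, since averaging orientations would need separate handling.

```python
from typing import List, Optional, Tuple

Position = Tuple[float, float, float]


class TargetLatch:
    """Collect the first few marker positions, average them once,
    then ignore further callbacks so the IK routine is not re-triggered."""

    def __init__(self, samples_needed: int = 10) -> None:
        self.samples_needed = samples_needed
        self._samples: List[Position] = []
        self.target_sent = False  # flag that stops repeated actuation

    def on_marker(self, position: Position) -> Optional[Position]:
        """Marker callback: returns the averaged target exactly once."""
        if self.target_sent:
            return None  # target already handed over; do nothing
        self._samples.append(position)
        if len(self._samples) < self.samples_needed:
            return None  # still collecting samples
        n = len(self._samples)
        # zip(*samples) groups the x, y, and z values across all samples.
        target = tuple(sum(axis) / n for axis in zip(*self._samples))
        self.target_sent = True
        return target


# Illustrative use with three noisy readings of one marker.
latch = TargetLatch(samples_needed=3)
for reading in [(1.0, 2.0, 3.0), (2.0, 2.0, 3.0), (3.0, 2.0, 3.0)]:
    target = latch.on_marker(reading)
print(target)
```

Returning the target only once keeps the stop condition in one place: the caller actuates when it receives a non-None value and otherwise does nothing.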

Manipulation was then integrated with the behavior planner and the tool subsystem. The manipulation subsystem therefore subscribes to the bounding-box coordinates provided by the perception subsystem through the behavior planner. The arm then moves the end effector to the bounding box, after which the end effector is deployed. For a given point, the end of the arm reached the location with an accuracy of 30 mm.

Future goals for this subsystem include incorporating the move_base function for better orientation of the base to perform tool operation with higher accuracy.

Fall Update

- Base rotation

- Arm, lift actuation using joint states (±2 cm accuracy)

- Tool control via ROS