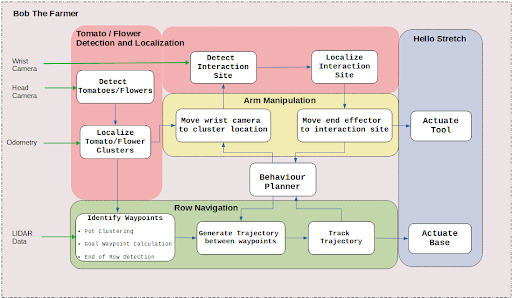

The functional architecture in Fig. 1 shows the major functions the robot performs for pollination and harvesting.

The user inputs to the system are the mode of operation (pollination or harvesting) and the tool required for that mode. The robot also takes in environmental inputs (visual, pose, and range data) and processes them in the sense block to extract information useful for perception tasks.

The output of the system is the desired action: navigation through the environment and successful tool actuation.

The major functions of the perception block are:

1. To localize the robot in the vertical farm environment

2. To identify and localize the objects of interest (tomato bunches to be harvested or tomato flowers to be pollinated)

3. To detect the rows of the vertical farm

The major functions of the navigation block are:

1. To deduce the exact waypoints between the rows at which the robot needs to stop

2. To plan the behavior of the robot according to the robot pose and desired waypoint

3. To track and actuate the robot to the desired waypoint

4. To update the waypoints as the robot navigates between the rows
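
The navigation functions above can be illustrated with a minimal sketch. It assumes a flat 2D aisle, evenly spaced stops, and a simple straight-line step toward the target. The names `Pose`, `row_waypoints`, `step_towards`, and `navigate` are hypothetical and chosen for illustration only; the actual planner and controller used on the robot are not specified here.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Planar robot position in metres (heading omitted for brevity)."""
    x: float
    y: float


def row_waypoints(row_y, x_start, x_end, spacing):
    """Function 1: stops along an aisle at y = row_y, `spacing` metres apart."""
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    count = int(math.floor((x_end - x_start) / spacing + 1e-9))
    return [Pose(x_start + i * spacing, row_y) for i in range(count + 1)]


def step_towards(pose, target, max_step):
    """Function 3: move at most `max_step` metres straight toward the target."""
    dx, dy = target.x - pose.x, target.y - pose.y
    dist = math.hypot(dx, dy)
    if dist <= max_step:
        return Pose(target.x, target.y)  # close enough to arrive in this step
    scale = max_step / dist
    return Pose(pose.x + dx * scale, pose.y + dy * scale)


def navigate(pose, waypoints, max_step=0.25):
    """Functions 2 and 4: drive to each waypoint in order, updating the queue."""
    queue = list(waypoints)
    visited = []
    while queue:
        target = queue[0]
        pose = step_towards(pose, target, max_step)
        if pose == target:
            # The robot stops here; the manipulation block would run now.
            visited.append(queue.pop(0))
    return visited
```

In this sketch the waypoint queue is the only planning state: a waypoint is removed once the robot arrives, which stands in for updating the waypoints as the robot moves between the rows.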

When the robot reaches a waypoint, it starts performing the functions in the manipulation block.

The major functions of the manipulation block are:

1. To deduce the point of interaction (POI). For pollination, this is the point at which the pollination tool must be placed to vibrate the flower. For harvesting, this is the point on the stem where the tomato bunch must be cut.

2. To generate a motion plan for the end effector to reach the POI. The plan differs by mode of operation. For pollination, the motion plan ends with the tool vibrating the pollination site. For harvesting, the plan also includes placing the harvested bunch into the basket.

3. To actuate the tool and perform the task.
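
As a rough illustration of how the plan depends on the mode of operation, the sketch below builds an ordered list of symbolic steps for a given POI. The step names (`move_to`, `vibrate`, `cut`, `place_in_basket`) and the `plan_steps` function are hypothetical labels chosen for this example, not the robot's actual motion-planning interface.

```python
from enum import Enum


class Mode(Enum):
    POLLINATION = "pollination"
    HARVESTING = "harvesting"


def plan_steps(mode, poi):
    """Return an ordered list of (action, target) steps for one POI.

    `poi` is any representation of the point of interaction, for example
    an (x, y, z) tuple in the robot frame (an assumption of this sketch).
    """
    steps = [("move_to", poi)]  # both modes start by reaching the POI
    if mode is Mode.POLLINATION:
        # The plan ends with the tool vibrating the pollination site.
        steps.append(("vibrate", poi))
    elif mode is Mode.HARVESTING:
        # Cut the stem at the POI, then place the bunch into the basket.
        steps.append(("cut", poi))
        steps.append(("place_in_basket", None))
    else:
        raise ValueError(f"unsupported mode: {mode!r}")
    return steps
```

For example, `plan_steps(Mode.HARVESTING, (0.4, 0.1, 1.2))` yields three steps, the last of which places the bunch in the basket, while the pollination plan for the same POI has two.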