Requirements

FR – Functional Requirement

PR – Performance Requirement

NF – Non-Functional Requirement

| ID | Requirement |
|---|---|
| FR1 | Accept an order list from the user |
| PR1 | Interpret the work order with 100% accuracy |
| Description | The order list, in JSON format, is parsed and processed. |
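As a minimal sketch of FR1, the snippet below parses a JSON order list into (bin, item) targets and cross-checks each target against the listed bin contents, which is one way to reject malformed orders in support of PR1. The key names `bin_contents` and `work_order`, the item names, and the function `parse_order_list` are assumptions for illustration; the real file layout should be taken from the Amazon Picking Challenge rules.

```python
import json
from typing import Dict, List, Tuple

# Hypothetical order list; the key names "bin_contents" and "work_order"
# are assumptions, not a confirmed competition schema.
EXAMPLE_ORDER = """
{
  "bin_contents": {
    "bin_A": ["eraser", "crayons"],
    "bin_B": ["dog_toy"]
  },
  "work_order": [
    {"bin": "bin_A", "item": "crayons"},
    {"bin": "bin_B", "item": "dog_toy"}
  ]
}
"""


def parse_order_list(text: str) -> Tuple[Dict[str, List[str]], List[Tuple[str, str]]]:
    """Return (bin contents, list of (bin, item) targets).

    Raises ValueError if a requested item is not listed in its bin, so a
    malformed order is rejected rather than silently misinterpreted.
    """
    data = json.loads(text)
    contents = {bin_id: list(items) for bin_id, items in data["bin_contents"].items()}
    targets = []
    for entry in data["work_order"]:
        bin_id, item = entry["bin"], entry["item"]
        if item not in contents.get(bin_id, []):
            raise ValueError(f"{item!r} is not listed in {bin_id!r}")
        targets.append((bin_id, item))
    return contents, targets


contents, targets = parse_order_list(EXAMPLE_ORDER)
print(targets)  # [('bin_A', 'crayons'), ('bin_B', 'dog_toy')]
```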

| ID | Requirement |
|---|---|
| FR2 | Autonomously determine the positions and orientations of target items |
| PR2 | Autonomously identify objects with 90% accuracy |
| Description | The perception module calculates item position and orientation using state-of-the-art algorithms. The pose must be known in order to acquire the objects. |

| ID | Requirement |
|---|---|
| FR3 | Accurately determine item grasp positions |
| PR3 | Autonomously determine item grasp positions within 2 cm of the item on 75% of attempts |
| Description | The perception module outputs the end-effector position for optimal grasping. |

| ID | Requirement |
|---|---|
| FR4 | Autonomously pick items from a shelf bin |
| PR4 | Autonomously pick an item of known pose from a shelf bin on 50% of attempts |
| Description | Kinematic planning is performed to pick the items from the shelf. |

| ID | Requirement |
|---|---|
| FR5 | Autonomously place items in the order bin |
| PR5 | Autonomously place 90% of picked items in the order bin, releasing them from a height of no more than 0.3 m |
| Description | Once an item is picked, the robot drops it into the order bin. |

| ID | Requirement |
|---|---|
| FR6 | Follow the dimensional constraints set by the Amazon Picking Challenge |
| PR6 | Acquire items from bins located at heights between 0.78 m and 1.86 m; acquire items from a 0.27 m x 0.27 m shelf bin; lift items with a mass of up to 0.5 kg |
| Description | The item and shelf specifications in the Amazon Picking Challenge rules constrain our design. |
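The PR6 limits can be encoded as a simple pre-check on each pick attempt. This is a minimal sketch: the `PickTarget` fields and `pr6_violations` are hypothetical, and reading the 0.27 m x 0.27 m bin as a limit on the end-effector cross-section is our interpretation of the constraint.

```python
from dataclasses import dataclass
from typing import List

# Limits from PR6 (metres, kilograms).
MIN_BIN_HEIGHT_M = 0.78
MAX_BIN_HEIGHT_M = 1.86
BIN_OPENING_M = 0.27
MAX_ITEM_MASS_KG = 0.5


@dataclass
class PickTarget:
    # Hypothetical fields describing one pick attempt.
    bin_height_m: float
    end_effector_width_m: float  # widest cross-section entering the bin
    item_mass_kg: float


def pr6_violations(t: PickTarget) -> List[str]:
    """Return the PR6 constraints this pick violates (empty list if none)."""
    problems = []
    if not MIN_BIN_HEIGHT_M <= t.bin_height_m <= MAX_BIN_HEIGHT_M:
        problems.append("bin height outside 0.78 to 1.86 m")
    if t.end_effector_width_m > BIN_OPENING_M:
        problems.append("end effector wider than the 0.27 m bin opening")
    if t.item_mass_kg > MAX_ITEM_MASS_KG:
        problems.append("item heavier than 0.5 kg")
    return problems


print(pr6_violations(PickTarget(1.20, 0.08, 0.30)))  # []
print(len(pr6_violations(PickTarget(1.95, 0.08, 0.70))))  # 2
```

The bounds are inclusive, so a bin exactly at 0.78 m or 1.86 m and an item of exactly 0.5 kg pass the check.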

| ID | Requirement |
|---|---|
| FR7 | Do not drop items |
| FR8 | Do not damage items |
| Description | During operation, the robot should not let items fall, and it should not deform the items in any way. This ensures that the robot only adds value. |

| ID | Requirement |
|---|---|
| PR7 | Successfully acquire at least 3 items out of 10 attempts in under 20 minutes |
| Description | Maximize the number of items successfully picked and placed within the given time. |

| ID | Requirement |
|---|---|
| NF1 | Cost no more than $4,000 |
| NF2 | Be completed by May 1, 2016 |
| Description | MRSD project requirements. |

| ID | Requirement |
|---|---|
| NF3 | Be transportable to, or available at, ICRA 2016 |
| Description | The robot should be easy to disassemble and reassemble. Alternatively, the robot platform must be available for use at the ICRA competition in Stockholm, Sweden, in May 2016. |

| ID | Requirement |
|---|---|
| NF4 | Be robust to environmental variations, including lighting and physical geometry |
| Description | The robot’s perception system should operate reliably under different lighting conditions and changes in physical geometry, because the competition lighting conditions cannot be reproduced accurately in our test setup. |

| ID | Requirement |
|---|---|
| NF5 | Be available for testing at least 1 day per week |
| Description | We need to test the algorithms on the real platform every week to ensure consistency with the simulation model. |

| ID | Requirement |
|---|---|
| NF6 | Start and stay within a 2 m x 2 m boundary (except the end effector) |
| Description | The competition rules state that the robot should stay within the 2 m x 2 m workcell and that only the end effector may reach into the shelf. The shelf is at least 10 cm away from the workcell area. |

| ID | Requirement |
|---|---|
| NF7 | Have an emergency stop |
| Description | We require a stop button to halt the manipulator platform in case of an accident. |

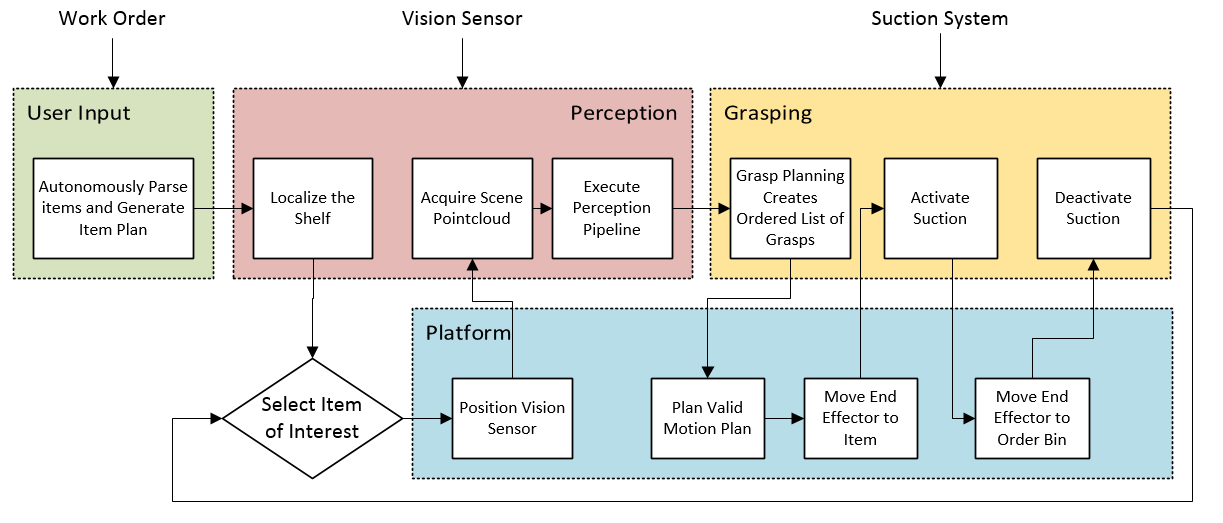

Functional Architecture

Input Handling: The robot autonomously parses the items in the order list to generate an item plan. The input handling function places small, easy-to-grasp items at the beginning of the list, followed by larger items and objects with no definite shape. This function also keeps track of the objects modeled in the environment, ensures that the system operates correctly, and recovers from errors.
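One way to realize this easy-first ordering is a stable sort on a per-item difficulty class. This is a minimal sketch: the `Item` fields (`volume_cm3`, `rigid`) and the 500 cm³ threshold are hypothetical, since the text does not say how "small" or "no definite shape" are measured.

```python
from dataclasses import dataclass
from typing import List

SMALL_VOLUME_CM3 = 500.0  # hypothetical cut-off for a "small" item


@dataclass
class Item:
    name: str
    volume_cm3: float
    rigid: bool  # False for items with no definite shape


def difficulty(item: Item) -> int:
    """0 = small and rigid, 1 = large but rigid, 2 = no definite shape."""
    if not item.rigid:
        return 2
    return 0 if item.volume_cm3 <= SMALL_VOLUME_CM3 else 1


def order_items(items: List[Item]) -> List[Item]:
    # sorted() is stable, so items in the same class keep their input order.
    return sorted(items, key=difficulty)


items = [Item("plush_toy", 900.0, False), Item("book", 1500.0, True), Item("eraser", 120.0, True)]
print([i.name for i in order_items(items)])  # ['eraser', 'book', 'plush_toy']
```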

Perception: The perception function is responsible for scanning the shelf, scanning individual bins, determining item pose, and providing the system with sufficient data to plan the manipulator trajectory that grasps the item from the shelf bin and places it in the order bin.

Platform: The platform planner takes the item pose data as input and generates a collision-free motion plan that moves the arm to a valid grasp position. The platform function also determines the return path that moves the arm toward the order bin.

Grasping: The grasping function selects the best grasp strategy and orients the end effector, which consists of a suction system, with respect to the object pose. Once the suction end effector is close to the object, the grasping function switches on the suction mechanism and grasps the object.

The functional architecture highlights the interaction between the four main functional areas. The user passes a JavaScript Object Notation (JSON) file that lists the contents of every bin on the shelf and the desired order items. The robot generates an item plan to grasp the maximum number of objects in the allotted time. To do so, we plan to use an algorithm that assigns each object weights for factors such as ease of grasping and associated points. Finally, the input handling subsystem generates a work order that attempts to maximize the overall score.
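The weighting idea can be sketched as a linear score per order item, with the work order sorted by descending score. The weights, the 0-to-1 `ease` rating, and the `points` values below are placeholders: the text names ease of grasping and points as factors but gives no formula. A greedy sort like this is a heuristic and does not guarantee the maximum total score within the time limit.

```python
from typing import Dict, List

# Hypothetical weights; these would be tuned against real pick statistics.
WEIGHTS = {"ease": 10.0, "points": 1.0}


def score(features: Dict[str, float]) -> float:
    """Weighted sum of an item's features (missing features count as 0)."""
    return sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())


def plan_work_order(candidates: Dict[str, Dict[str, float]]) -> List[str]:
    """Return item names sorted from highest to lowest score."""
    return sorted(candidates, key=lambda name: score(candidates[name]), reverse=True)


candidates = {
    "crayons": {"ease": 0.9, "points": 10},     # 10*0.9 + 10 = 19
    "dog_toy": {"ease": 0.3, "points": 20},     # 10*0.3 + 20 = 23
    "pencil_cup": {"ease": 0.5, "points": 10},  # 10*0.5 + 10 = 15
}
print(plan_work_order(candidates))  # ['dog_toy', 'crayons', 'pencil_cup']
```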

Next, the perception system localizes the shelf and assigns it a frame in world coordinates. The details of the target item are passed to the perception pipeline, where the Kinect2 RGB-D sensor scans the shelf to determine the item pose and passes the coordinates to the grasp planner.
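Localizing the shelf amounts to estimating a rigid transform from the shelf frame to the world frame, after which any point found in shelf coordinates can be mapped into world coordinates. Below is a minimal pure-Python sketch using a 4x4 homogeneous transform, assuming the shelf frame differs from the world frame only by a rotation about the vertical (z) axis plus a translation; the yaw and offset values are made up. In a ROS system this bookkeeping is normally handled by the tf library.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def shelf_to_world(yaw_rad: float, tx: float, ty: float, tz: float) -> Matrix:
    """Homogeneous transform: rotation about z by yaw, then translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(T: Matrix, p: Sequence[float]) -> List[float]:
    """Apply T to the 3D point p, treated as homogeneous [x, y, z, 1]."""
    ph = [p[0], p[1], p[2], 1.0]
    return [sum(T[r][k] * ph[k] for k in range(4)) for r in range(3)]


# Hypothetical shelf pose: rotated 90 degrees and offset 1.2 m along world x.
T = shelf_to_world(math.pi / 2, 1.2, 0.0, 0.0)
item_in_shelf = [0.1, 0.0, 0.9]
print(transform_point(T, item_in_shelf))  # approximately [1.2, 0.1, 0.9]
```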

Once the perception pipeline returns the item pose, an ordered list of preferred grasps is generated from a precomputed set of valid grasp positions. The list is then sent to the arm planner, which iterates through it to find a collision-free motion plan to the item. The suction system is activated and the arm executes the motion plan.
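The grasp-selection step reduces to returning the first grasp, in preference order, for which the planner finds a collision-free plan. This is a minimal sketch: `Grasp`, the `plan_fn` callable, and the toy planner are hypothetical stand-ins for the real grasp set and arm planner.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Grasp:
    # Hypothetical grasp description: approach name and preference score.
    name: str
    preference: float


Plan = List[str]  # placeholder for a real trajectory type


def select_grasp(
    grasps: List[Grasp], plan_fn: Callable[[Grasp], Optional[Plan]]
) -> Optional[Tuple[Grasp, Plan]]:
    """Try grasps from most to least preferred; return the first plannable one."""
    for grasp in sorted(grasps, key=lambda g: g.preference, reverse=True):
        plan = plan_fn(grasp)  # None means no collision-free plan was found
        if plan is not None:
            return grasp, plan
    return None  # no grasp worked; the caller moves on to the next item


# Toy planner: pretend the top-down approach is blocked by the bin lip.
def toy_planner(grasp: Grasp) -> Optional[Plan]:
    return None if grasp.name == "top_down" else ["pre_grasp", grasp.name]


grasps = [Grasp("side", 0.6), Grasp("top_down", 0.9), Grasp("front", 0.4)]
print(select_grasp(grasps, toy_planner))  # (Grasp(name='side', preference=0.6), ['pre_grasp', 'side'])
```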

Once the motion plan has been executed, pressure sensor feedback reports the grasp status to the platform, and once a grasp is detected, the arm withdraws from the shelf. If the grasp is unsuccessful, the system aborts further attempts on the current item and moves on to the next item in the list. After a successful grasp, the arm is moved over the order bin and the suction system is disengaged. The system repeats this grasp-and-drop loop until it has either picked all items in the work order or reached the time limit.
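The loop above can be sketched as follows, assuming grasp success is detected when the suction line pressure drops below a threshold. The callables `attempt_pick` and `place`, the 60 kPa threshold, and the injectable `clock` are hypothetical; only the 20-minute limit comes from PR7. A failed grasp is not retried, matching the abort-and-continue behaviour described.

```python
import time
from typing import Callable, List, Tuple

TIME_LIMIT_S = 20 * 60  # PR7: 20-minute run
GRASP_PRESSURE_KPA = 60.0  # hypothetical: a sealed suction cup pulls pressure below this


def run_work_order(
    work_order: List[str],
    attempt_pick: Callable[[str], float],
    place: Callable[[str], None],
    clock: Callable[[], float] = time.monotonic,
) -> Tuple[List[str], List[str]]:
    """Attempt each item once; return (placed, skipped) item names.

    attempt_pick executes the grasp motion with suction on and returns the
    pressure reading; place withdraws, moves over the order bin, and releases.
    """
    start = clock()
    placed, skipped = [], []
    for item in work_order:
        if clock() - start >= TIME_LIMIT_S:
            break  # out of time; remaining items are left untouched
        if attempt_pick(item) < GRASP_PRESSURE_KPA:
            place(item)
            placed.append(item)
        else:
            skipped.append(item)  # grasp failed: abort this item, move on
    return placed, skipped


readings = {"crayons": 40.0, "dog_toy": 95.0, "eraser": 35.0}  # hypothetical kPa
placed, skipped = run_work_order(list(readings), lambda item: readings[item], lambda item: None)
print(placed, skipped)  # ['crayons', 'eraser'] ['dog_toy']
```

Injecting the clock keeps the time-limit logic testable without waiting 20 minutes.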

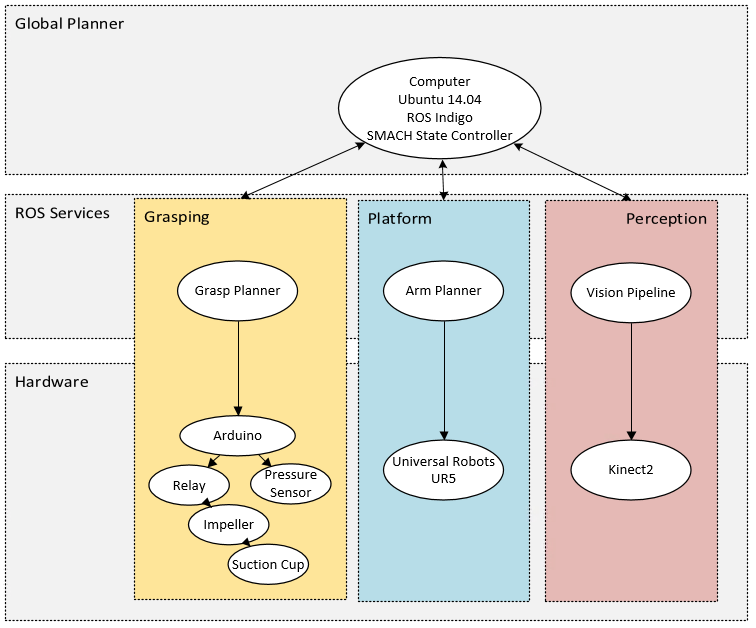

Physical Architecture

The physical architecture diagram shows the interaction of the robot with the perception and suction subsystems and their components. As part of the global planner, a computer running Ubuntu and ROS Indigo hosts the state controller, including UR5 motion planning. The gripper subsystem consists of the suction mechanism, which the ROS state controller drives through an Arduino microcontroller and relays. The pressure sensor also communicates with the ROS state controller through the Arduino using the rosserial protocol and provides grasp status feedback. For perception, we use the Kinect2 RGB-D sensor, which offers higher resolution than the original Kinect.

Software Architecture

The high-level software architecture diagram below explains the control and feedback mechanisms needed to implement the functional architecture. The user input, in the form of a text file, is given to the master ROS controller, which starts the SMACH-based state machine.
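SMACH organizes a controller as states that return named outcomes, with a transition table mapping each outcome to the next state. The plain-Python sketch below mimics that outcome-driven structure without depending on SMACH or ROS, so it is not SMACH's API; the state names, outcomes, and `userdata` keys are hypothetical and loosely follow the pick cycle described in this section.

```python
from typing import Callable, Dict, Tuple

StateFn = Callable[[dict], str]  # a state reads/writes userdata, returns an outcome


def run_state_machine(
    states: Dict[str, StateFn],
    transitions: Dict[Tuple[str, str], str],
    start: str,
    terminal: Tuple[str, ...],
    userdata: dict,
) -> str:
    """Execute states until a terminal state is reached; return its name."""
    current = start
    while current not in terminal:
        outcome = states[current](userdata)
        current = transitions[(current, outcome)]
    return current


def next_item(ud: dict) -> str:
    if not ud["queue"]:
        return "empty"
    ud["target"] = ud["queue"].pop(0)
    return "ok"


def perceive(ud: dict) -> str:
    return "found" if ud["target"] in ud["visible"] else "not_found"


def pick(ud: dict) -> str:
    return "grasped" if ud["target"] in ud["graspable"] else "failed"


def place(ud: dict) -> str:
    ud["placed"].append(ud["target"])
    return "done"


STATES = {"NEXT_ITEM": next_item, "PERCEIVE": perceive, "PICK": pick, "PLACE": place}
TRANSITIONS = {
    ("NEXT_ITEM", "ok"): "PERCEIVE",
    ("NEXT_ITEM", "empty"): "FINISHED",
    ("PERCEIVE", "found"): "PICK",
    ("PERCEIVE", "not_found"): "NEXT_ITEM",
    ("PICK", "grasped"): "PLACE",
    ("PICK", "failed"): "NEXT_ITEM",
    ("PLACE", "done"): "NEXT_ITEM",
}

ud = {"queue": ["a", "b", "c"], "visible": {"a", "c"}, "graspable": {"a", "b"}, "placed": []}
result = run_state_machine(STATES, TRANSITIONS, "NEXT_ITEM", ("FINISHED",), ud)
print(result, ud["placed"])  # FINISHED ['a']
```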

First, the master ROS controller passes in an item of interest, including its bin number. The robot then aligns the Kinect2 and stitches several acquired point clouds together to improve the resolution of the shelf scene. The item recognition algorithms then determine the item's position and orientation on the shelf. Specifically, the approximate item location is determined using image segmentation techniques, bag-of-words classifiers, and search-based matching. Using this estimate, the point cloud depth data is downsampled to the region of interest. The known geometry from ground-truth 3D object data is then fit to the scene. Algorithms available in OpenCV and the Point Cloud Library are used to simplify the vision task. The result is the position and orientation of the item of interest in world coordinates.
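Downsampling to the region of interest typically combines an axis-aligned crop with voxel-grid downsampling; the Point Cloud Library provides `pcl::CropBox` and `pcl::VoxelGrid` filters for this. The pure-Python sketch below illustrates the same idea on a list of (x, y, z) points, replacing all points in each voxel with their centroid; the box bounds, the 1 cm voxel size, and the sample points are illustrative only.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def crop_box(points: List[Point], lo: Point, hi: Point) -> List[Point]:
    """Keep points inside the axis-aligned box [lo, hi] (inclusive)."""
    return [p for p in points if all(lo[i] <= p[i] <= hi[i] for i in range(3))]


def voxel_downsample(points: List[Point], voxel: float) -> List[Point]:
    """Replace all points falling in the same voxel cube with their centroid."""
    buckets: Dict[Tuple[int, ...], List[Point]] = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel) for c in p)
        buckets[key].append(p)
    out: List[Point] = []
    for key in sorted(buckets):  # sorted for a deterministic output order
        pts = buckets[key]
        n = len(pts)
        out.append((sum(p[0] for p in pts) / n,
                    sum(p[1] for p in pts) / n,
                    sum(p[2] for p in pts) / n))
    return out


cloud = [(0.001, 0.001, 0.505), (0.003, 0.005, 0.505), (0.015, 0.0, 0.505), (0.5, 0.5, 2.0)]
roi = crop_box(cloud, (0.0, 0.0, 0.4), (0.1, 0.1, 0.6))  # drops the far point
down = voxel_downsample(roi, 0.01)
print(len(roi), len(down))  # 3 2
```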

Finally, the arm and item positions are sent to the grasp planner, which uses trained methods of item acquisition unique to each item. The arm planner uses the resulting grasp list to find a collision-free motion plan. A microcontroller is responsible for the low-level suction commands. Once the item is acquired, the ROS controller receives a grasp-success signal from the grasp controller and then deposits the item in the order bin. This cycle repeats until all items from the input text file have been acquired.