The following video highlights the implementation of our robotic picker.

UR5 System Overview

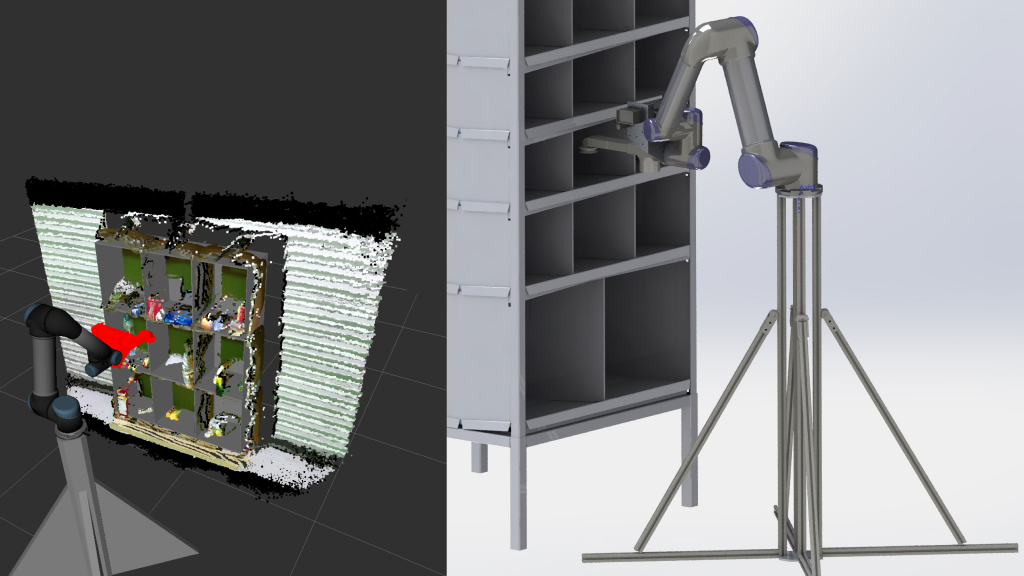

The pick-and-place actions are being developed around the UR5 robotic platform. This system consists of several major components. The UR5 is a 6-DOF robotic arm that can reach 850 mm from its base. The perception system consists of a Kinect2 mounted to the wrist of the UR5. Finally, the grasping and manipulation system consists of custom-designed suction cups that are bolted to the final joint of the UR5.

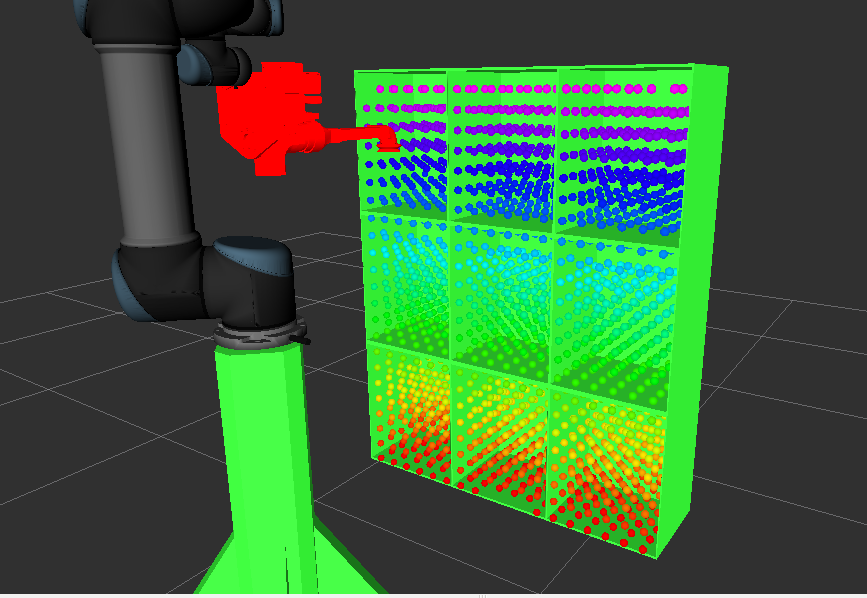

The UR5 platform is initially tested using the Gazebo simulator, allowing us to verify that our path planning works correctly. The shelf is visualized inside RViz using display markers. The poses of the bottom-left edges of the shelf bins are published as transforms in ROS. The PR2 and shelf simulated in RViz are shown below. Point clouds are published depicting the points inside the shelf that the robot can reach in a specified pose.

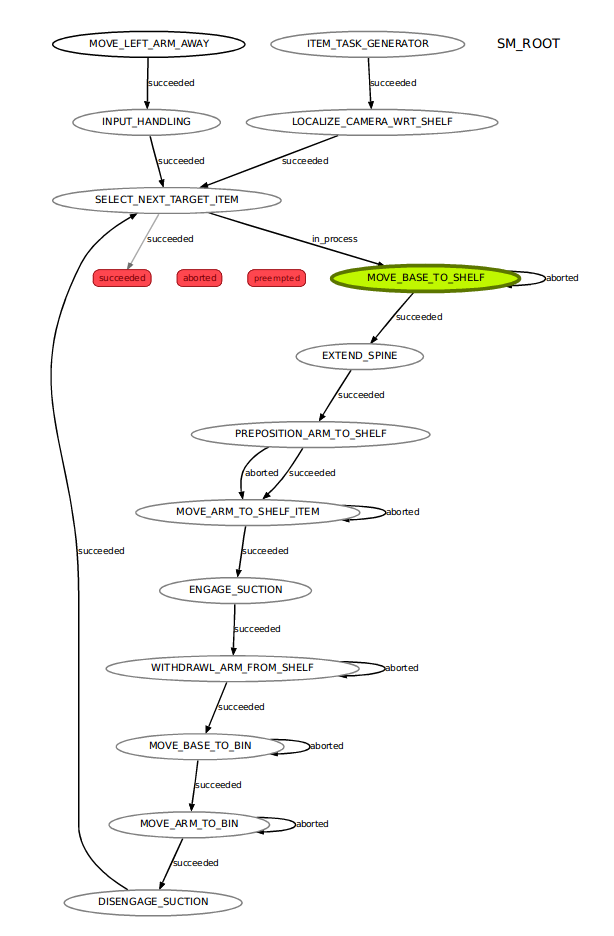
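As a rough illustration of the per-bin transforms, the sketch below computes the bottom-left corner of every bin relative to a single shelf frame. The 4 x 3 grid, the bin dimensions, the frame names, and the axis convention are all assumptions made for the example, not measurements from our shelf. In a ROS system each offset would then be broadcast as a transform (for example with a tf2 static broadcaster); that step is left out so the sketch runs without ROS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinFrame:
    name: str  # child frame id, e.g. "bin_A" (hypothetical naming)
    x: float   # offset from the shelf frame, in metres
    y: float
    z: float


def bin_frames(rows=4, cols=3, bin_width=0.30, bin_height=0.25, base_height=0.80):
    """Return the bottom-left corner of every bin relative to the shelf frame.

    Assumed convention: the shelf frame sits at the bottom-left of the shelf's
    front face, with y pointing right and z pointing up. All dimensions are
    placeholder values.
    """
    frames = []
    for r in range(rows):        # r = 0 is the top row
        for c in range(cols):    # c = 0 is the left column
            name = "bin_" + chr(ord("A") + r * cols + c)
            y = c * bin_width
            z = base_height + (rows - 1 - r) * bin_height
            frames.append(BinFrame(name, 0.0, y, z))
    return frames


if __name__ == "__main__":
    for frame in bin_frames():
        print(frame)
```

Deriving every bin frame from one shelf pose means that a single localization result is enough to update all bin transforms at once.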

An executive smach state controller is used to define tasks as states. The smach library allows us to easily visualize and debug the state machine. The figure below shows the state machine during run-time. Each state is represented as a node. Nodes are connected by arrows that denote the transition conditions. The current state is highlighted in green.
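The idea of states connected by outcome-labelled transitions can be sketched in plain Python. This is a stand-in that mirrors the outcome-to-transition mapping of a state machine library, not our smach controller; the state names, outcomes, and the retry behaviour are hypothetical.

```python
def run_state_machine(states, transitions, start, terminal, max_steps=100):
    """Run states until a terminal state is reached; return the visited names.

    states:      state name -> callable returning an outcome string
    transitions: (state name, outcome) -> next state name
    """
    current = start
    visited = [current]
    for _ in range(max_steps):
        if current in terminal:
            return visited
        outcome = states[current]()
        current = transitions[(current, outcome)]
        visited.append(current)
    raise RuntimeError("state machine did not terminate")


def make_picker():
    """Build a toy picking task whose first grasp attempt fails (hypothetical)."""
    attempts = {"grasp": 0}

    def perceive():
        return "found"

    def plan():
        return "planned"

    def grasp():
        attempts["grasp"] += 1
        return "succeeded" if attempts["grasp"] >= 2 else "failed"

    def place():
        return "placed"

    states = {"PERCEIVE": perceive, "PLAN": plan, "GRASP": grasp, "PLACE": place}
    transitions = {
        ("PERCEIVE", "found"): "PLAN",
        ("PERCEIVE", "not_found"): "ABORTED",
        ("PLAN", "planned"): "GRASP",
        ("PLAN", "no_plan"): "ABORTED",
        ("GRASP", "succeeded"): "PLACE",
        ("GRASP", "failed"): "PERCEIVE",  # retry from perception
        ("PLACE", "placed"): "DONE",
    }
    return states, transitions


if __name__ == "__main__":
    states, transitions = make_picker()
    print(run_state_machine(states, transitions, "PERCEIVE", {"DONE", "ABORTED"}))
```

Keeping the transition table separate from the state logic is what makes the machine easy to draw as a graph and to debug.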

The MoveIt! package is used for planning and executing arm trajectories. It allows motion planning libraries to be easily swapped using plugins. Currently, we are using the OMPL plugin for path planning. We will eventually switch to the search-based planner plugin being developed by SBPL. This planner discretizes the search space and collision models to find solutions quickly and deterministically using the ARA* algorithm.
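To give a feel for the anytime behaviour behind ARA*, the sketch below runs weighted A* on a small 4-connected grid with a decreasing inflation factor `eps`. It is a simplification and not the SBPL planner: true ARA* reuses search effort between iterations, whereas this version restarts the search for each `eps`. The grid, the `eps` schedule, and the function names are assumptions for illustration.

```python
import heapq


def weighted_astar(grid, start, goal, eps):
    """Weighted A* on a 4-connected grid; cells equal to 1 are obstacles.

    Returns the path cost in moves, or None if the goal is unreachable.
    The Manhattan heuristic is inflated by eps, trading optimality for speed.
    """
    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    g = {start: 0}
    open_heap = [(eps * h(start), start)]
    closed = set()
    while open_heap:
        _, cell = heapq.heappop(open_heap)
        if cell == goal:
            return g[cell]
        if cell in closed:
            continue
        closed.add(cell)
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[0])):
                continue
            if grid[nr][nc] == 1 or nxt in closed:
                continue
            new_g = g[cell] + 1
            if new_g < g.get(nxt, float("inf")):
                g[nxt] = new_g
                heapq.heappush(open_heap, (new_g + eps * h(nxt), nxt))
    return None


def anytime_search(grid, start, goal, eps_schedule=(3.0, 2.0, 1.0)):
    """Solve repeatedly with a shrinking eps; the last run (eps = 1) is optimal."""
    return [(eps, weighted_astar(grid, start, goal, eps)) for eps in eps_schedule]


if __name__ == "__main__":
    demo_grid = [
        [0, 0, 0, 0],
        [1, 1, 1, 0],
        [0, 0, 0, 0],
    ]
    print(anytime_search(demo_grid, (0, 0), (2, 0)))
```

A large `eps` tends to return a usable path quickly, and each later pass tightens the bound on how far that path can be from optimal.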

Localization

The localization algorithm matches the ground truth CAD model of the shelf to a depth map captured by the Kinect. An iterative closest point algorithm is used to minimize the distance between points in the scene and the shelf model. Since the UR5 is a stationary manipulator, this algorithm only has to run once at startup.
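The iterative closest point loop can be illustrated with a small 2D version in plain Python. This is a simplified sketch, not our implementation: the real problem is 3D and works on a dense depth map, while this example aligns a handful of 2D points using brute-force nearest neighbours. All function names and the sample points are assumptions.

```python
import math


def best_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping paired points src -> dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy
        bx, by = qx - dx, qy - dy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def apply_transform(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(model, scene, iterations=20):
    """Align model points to scene points; returns the transformed model."""
    current = list(model)
    for _ in range(iterations):
        # Step 1: pair every model point with its closest scene point.
        matches = [
            min(scene, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
            for p in current
        ]
        # Step 2: solve for the rigid motion that best fits those pairs.
        theta, tx, ty = best_rigid_2d(current, matches)
        current = apply_transform(current, theta, tx, ty)
    return current


if __name__ == "__main__":
    model = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (0.0, 1.0), (0.0, 2.0), (3.0, 1.0)]
    scene = apply_transform(model, 0.1, 0.05, -0.03)
    print(icp_2d(model, scene))
```

ICP only converges to the right answer from a reasonable initial guess, which is why a known, fixed robot base makes a single startup run sufficient.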

Perception

CNN Item Identification

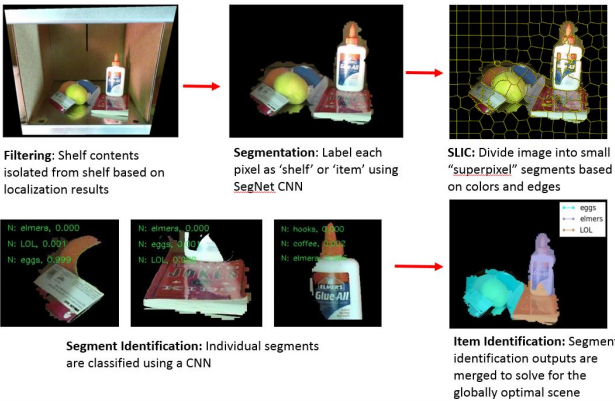

Our primary perception pipeline identifies items using a CNN. First, using the kinematic chain of the robot as well as the location of the shelf, the image is masked using the four corner points of the shelf. Next, a pixel-by-pixel labeling CNN, SegNet, is used to label each pixel in the image as shelf or item. After segmentation, the image is divided into superpixels using the SLIC algorithm. Each SLIC superpixel is then classified using an identification CNN based on AlexNet. Once each superpixel is classified, the outputs are merged to solve for the globally optimal solution. The entire vision process is outlined in the figure below.
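The first step, masking the image with four corner points, can be sketched as a point-in-quadrilateral test. The example assumes the corners have already been projected into pixel coordinates and are listed in order around a convex quadrilateral; the function names and the tiny image size are hypothetical.

```python
def inside_convex_quad(point, corners):
    """True if point lies inside or on the boundary of a convex quadrilateral.

    corners must be listed in order around the boundary (either direction).
    The point is inside when it is on the same side of all four edges.
    """
    px, py = point
    crosses = []
    for i in range(4):
        x1, y1 = corners[i]
        x2, y2 = corners[(i + 1) % 4]
        crosses.append((x2 - x1) * (py - y1) - (y2 - y1) * (px - x1))
    return all(c >= 0 for c in crosses) or all(c <= 0 for c in crosses)


def quad_mask(width, height, corners):
    """Return a height x width grid of booleans, True for pixels to keep."""
    return [
        [inside_convex_quad((x, y), corners) for x in range(width)]
        for y in range(height)
    ]


if __name__ == "__main__":
    corners = [(1, 1), (4, 1), (4, 3), (1, 3)]  # hypothetical pixel coordinates
    for row in quad_mask(6, 5, corners):
        print("".join("#" if keep else "." for keep in row))
```

Pixels outside the mask can be discarded before segmentation, so the later networks only see the region inside the shelf.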

Dataset Generation

Using a CNN approach for item identification requires a large amount of training data to create a classifier. An automated data collection method was created to generate a database of the 39 possible items. A turntable rotates in 10 degree increments over 360 degrees of rotation and an actuator varies the view angle of the Kinect v2 RGB-D sensor. HSV thresholding and convex hull filters were applied to the images to automatically remove the background from the training images. In total, approximately 100 masked images were captured for each of the 40 items in the item dictionary. These images were rotated, distorted, mirrored, lightened, and darkened to create approximately 400,000 images for future classifier training. An example output training image is shown in the figure below.
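The HSV thresholding step can be sketched with the standard library alone. The backdrop colour (green), the hue range, and the saturation cutoff below are assumptions chosen for the example, and the convex hull filtering step is omitted.

```python
import colorsys

# Turntable stops implied by 10 degree increments over a full rotation.
TURNTABLE_ANGLES = list(range(0, 360, 10))


def background_mask(pixels, hue_range=(0.25, 0.45), min_saturation=0.4):
    """Mark pixels whose hue and saturation match the assumed backdrop colour.

    pixels: rows of (r, g, b) tuples with channel values in 0..255.
    Returns rows of booleans where True means "background".
    """
    low, high = hue_range
    mask = []
    for row in pixels:
        mask_row = []
        for r, g, b in row:
            h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            mask_row.append(low <= h <= high and s >= min_saturation)
        mask.append(mask_row)
    return mask


if __name__ == "__main__":
    image = [[(0, 255, 0), (255, 0, 0), (128, 128, 128)]]
    print(len(TURNTABLE_ANGLES), background_mask(image))
```

Thresholding in HSV rather than RGB keeps the test on hue and saturation, which makes it less sensitive to the brightness changes that occur as the view angle varies.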

PERCH Item Identification

A second perception algorithm runs in parallel to CNN identification. PERCH (Perception via Search for Multi-Object Recognition and Localization) globally searches to match known geometric item models to the Kinect point cloud. PERCH specializes in identifying items with known geometry models under heavy occlusions. PERCH was developed by Venkatraman Narayanan and Maxim Likhachev in CMU’s Search-Based Planning Lab. An example of PERCH identification can be seen below.

Grasping and Grasp Planning

Initial trade studies showed suction systems would be far superior to traditional grippers for this task. After prototyping several solutions, we determined a high-flow system was required to deal with imperfect seals on porous items. A custom suction cup gripper was designed which can be attached to the UR5. The gripper shown is capable of acquiring all objects from the 2015 Amazon Picking Challenge list, as shown in the figure below.

A database containing pretrained grasp locations for each item helps the grasp planner establish how to retrieve the item from the shelf. These grasp locations are selected by identifying flat surfaces (based on surface normals) that are reachable by the UR5.
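A minimal sketch of this selection rule is shown below, assuming each candidate patch comes with a centroid in the robot base frame and a set of unit surface normals. The alignment threshold and the distance-from-base reachability test are simplifying assumptions; a real check would also need inverse kinematics and collision checking.

```python
import math

UR5_REACH_M = 0.85  # 850 mm reach from the base, as stated in the overview


def mean_normal(normals):
    """Return the normalised average of the normals, or None if they cancel out."""
    sx = sum(n[0] for n in normals)
    sy = sum(n[1] for n in normals)
    sz = sum(n[2] for n in normals)
    length = math.sqrt(sx * sx + sy * sy + sz * sz)
    if length == 0.0:
        return None
    return (sx / length, sy / length, sz / length)


def is_flat(normals, min_alignment=0.95):
    """A patch counts as flat when every normal is close to the mean normal."""
    mean = mean_normal(normals)
    if mean is None:
        return False
    return all(
        n[0] * mean[0] + n[1] * mean[1] + n[2] * mean[2] >= min_alignment
        for n in normals
    )


def select_grasps(candidates, reach=UR5_REACH_M):
    """Keep the centroids of patches that are both flat and within reach.

    candidates: list of (centroid_xyz_in_metres, list_of_unit_normals).
    """
    selected = []
    for centroid, normals in candidates:
        distance = math.sqrt(sum(c * c for c in centroid))
        if distance <= reach and is_flat(normals):
            selected.append(centroid)
    return selected


if __name__ == "__main__":
    up = (0.0, 0.0, 1.0)
    side = (1.0, 0.0, 0.0)
    patches = [
        ((0.5, 0.0, 0.3), [up, up, up]),   # flat and reachable
        ((0.4, 0.1, 0.3), [up, side]),     # curved or creased surface
        ((1.0, 0.0, 0.0), [up, up]),       # flat but out of reach
    ]
    print(select_grasps(patches))
```

Flat patches matter for suction because the cup needs a continuous contact ring to form a seal.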

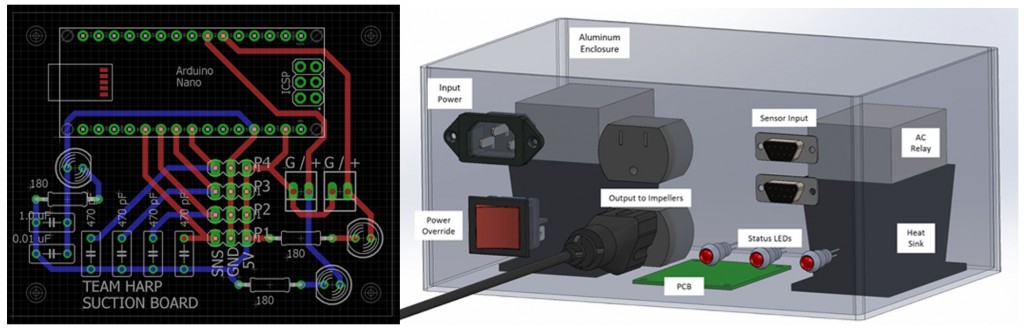

In order to control the vacuum system, a custom PCB (figure shown below) was designed. The board holds an Arduino Nano. This Arduino reads up to four analog sensors (including two pressure sensors), displays the status of the circuit via three status LEDs, and triggers two relays which power the two impellers used for the suction gripper. The suction data was analyzed and a custom ROS node was written to monitor the state of the system.
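One way such monitoring logic could work is to confirm a seal only after several consecutive pressure readings cross a threshold, which filters out single noisy samples. The sketch below is an illustration and not our ROS node: the threshold, the number of required samples, and the assumption that larger ADC counts mean stronger vacuum are all hypothetical.

```python
def first_confirmed_seal(readings, seal_threshold=600, required_consecutive=3):
    """Return the index of the sample at which a seal is confirmed, or None.

    readings: raw ADC counts from a pressure sensor, where a larger value is
    assumed to mean stronger vacuum. A seal is confirmed once
    required_consecutive readings in a row reach seal_threshold.
    """
    streak = 0
    for index, value in enumerate(readings):
        streak = streak + 1 if value >= seal_threshold else 0
        if streak >= required_consecutive:
            return index
    return None


if __name__ == "__main__":
    samples = [100, 650, 200, 640, 660, 700, 710]
    print(first_confirmed_seal(samples))
```

Requiring a short run of readings trades a small delay for fewer false grasp confirmations when an item only briefly touches the cup.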