Full System Development

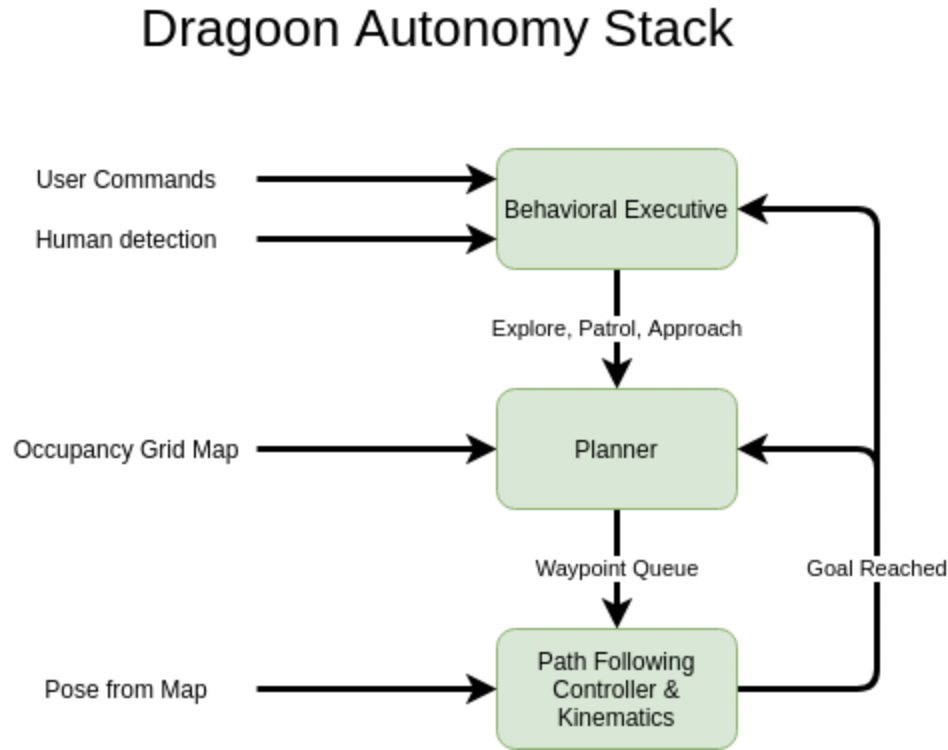

All hardware and software development on the system has been completed. We have also tuned Dragoon’s capabilities so that it can perform its tasks in post-disaster scenarios, as demonstrated in our Fall Validation Demonstration (FVD). The sections below describe the subsystems implemented on Dragoon.

Dragoon’s Full Autonomy Test (10/27)

Dragoon’s Autonomy Test in FVD setup (11/10) (external video)

Dragoon’s 7m Human Detection Test with Custom-trained Networks (external video)

Dragoon’s 3m Human Detection Test with Custom-trained Networks (external video)

Dragoon’s first outing after completion of hardware development

Dragoon’s first outing (external video)

Visualization of software stack (external video)

Subsystem Development

Robot Base

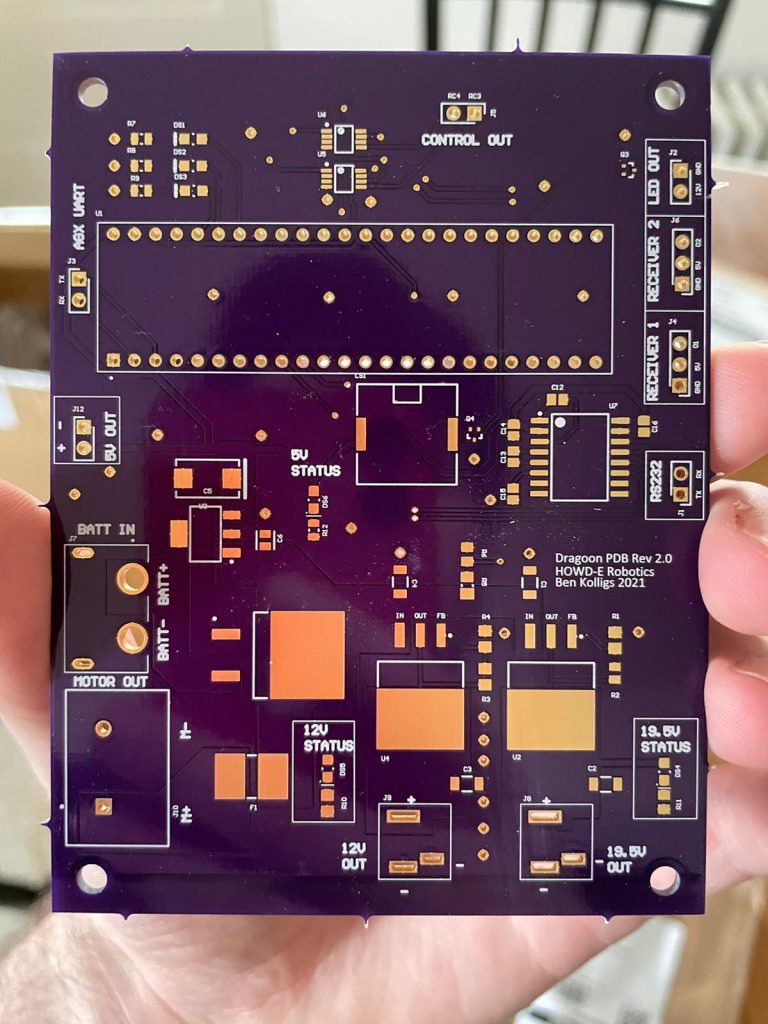

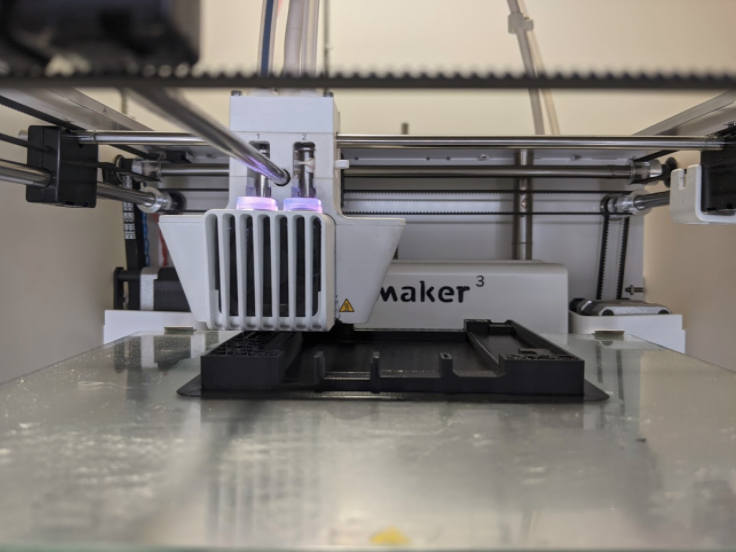

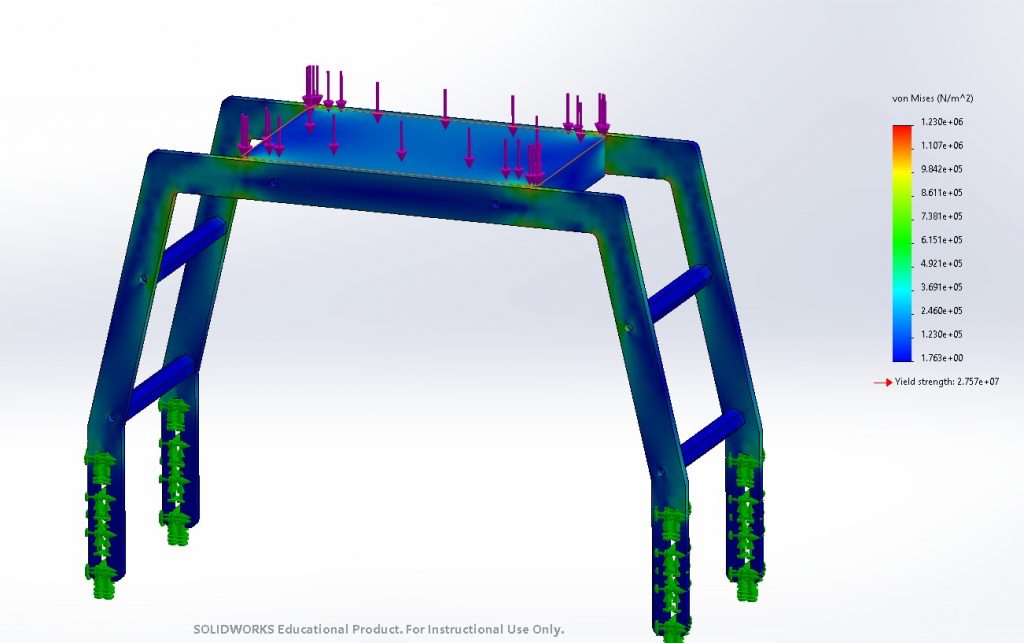

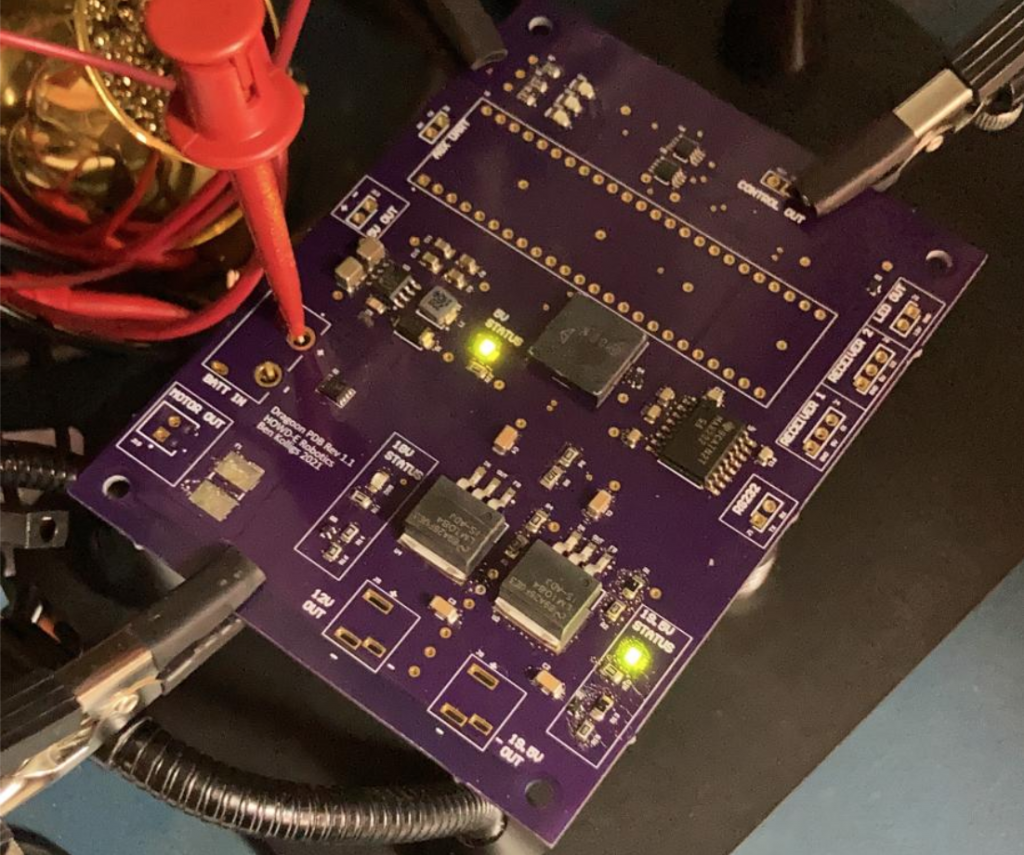

As planned, hardware development was completed during the spring semester. We ordered and received our SuperDroid robot base, which came with a remote controller. We then designed the layout of the robot and fabricated the mounting parts needed to attach our components to the base, using either the 3D printer or the machine shop. Before fabrication, we ran load analysis on all of our mounts to confirm that they would be sturdy enough. In parallel with part fabrication, we developed the robot’s power distribution board (PDB). Each iteration of the board was designed, received from the manufacturer, and then assembled using the tools and the reflow oven in the lab.

Simulation of full hardware stack in Rviz (external video)

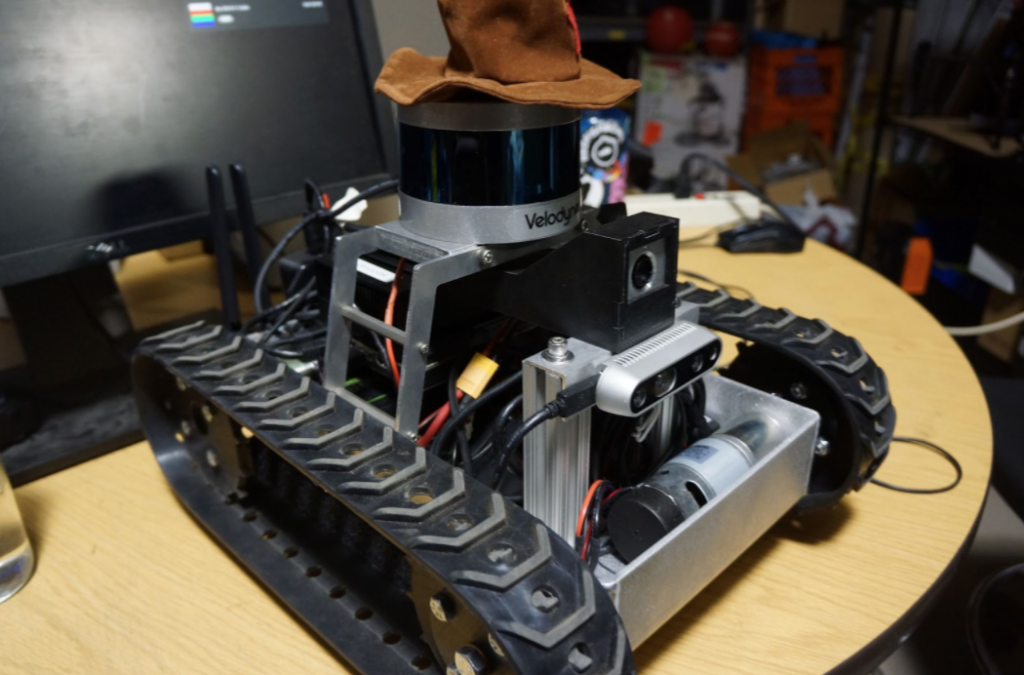

Complete hardware stack

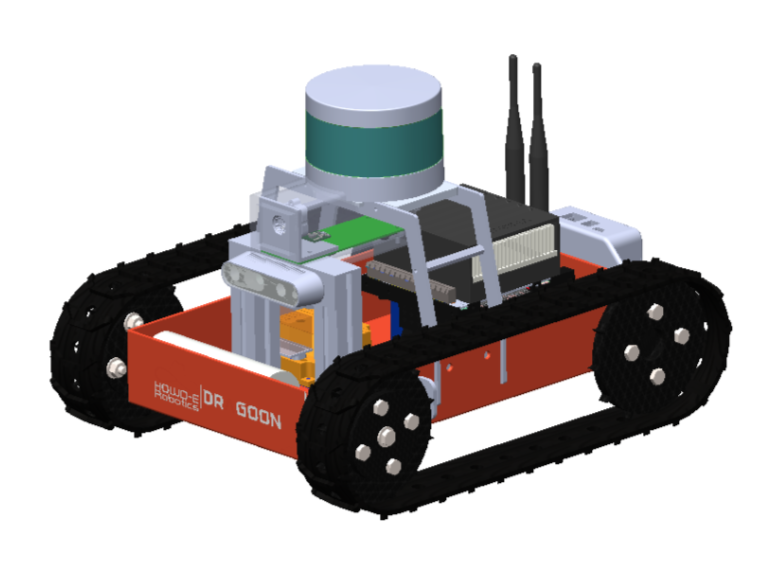

CAD model of complete hardware stack

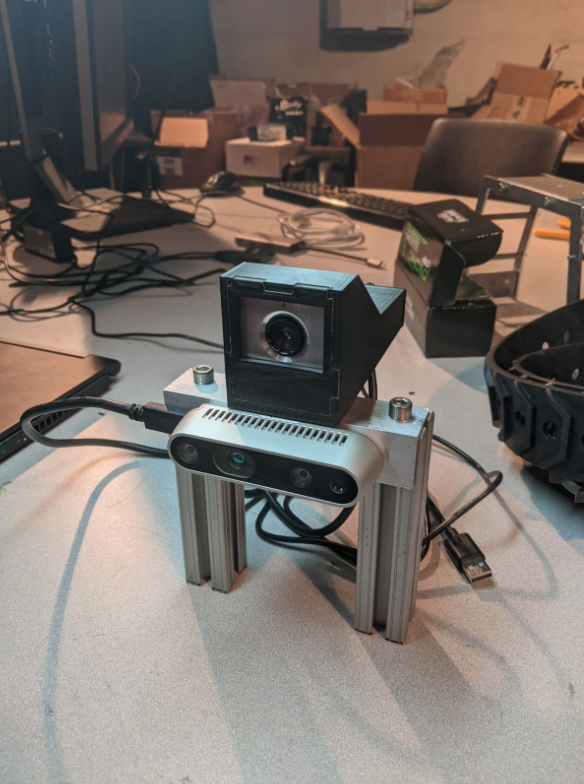

RealSense D435i and Seek Thermal sensors on custom-made sensor mount and casing

Version 2 of PDB

Custom-made LiDAR mount, RealSense/Seek mount, and AGX mount on robot base

3D printing fabrication of battery holder

Load analysis of LiDAR mount

Version 1 of PDB

Sensing

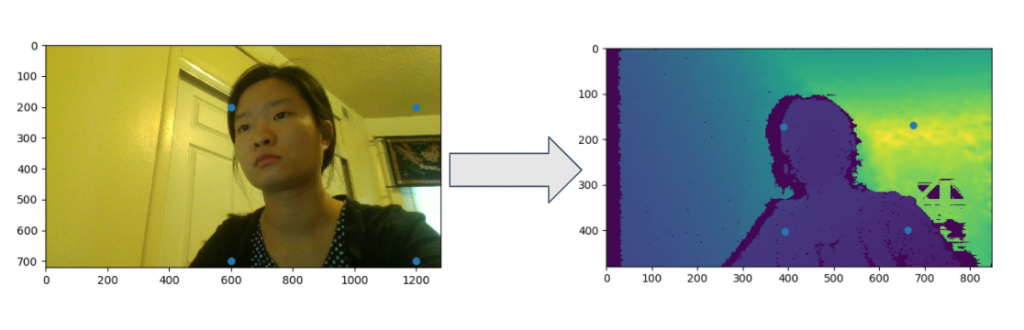

Our sensing subsystem consists of three major sensors: the RealSense RGB sensor, the RealSense depth sensor, and the Seek Thermal sensor. All sensors have been calibrated to obtain their intrinsic parameters, and the extrinsic parameters between the two RealSense sensors have also been obtained. For SVD, we used the registration between the two RealSense sensors to detect humans. For FVD, we registered both the RealSense RGB and the Seek Thermal to the RealSense depth in order to extract the location of the human in the frame.
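To illustrate how a registered depth image turns a 2D detection into a 3D location, here is a minimal sketch using the standard pinhole camera model. It assumes the depth image is already aligned pixel-for-pixel with the image the bounding box came from. The `Intrinsics` class, the function names, and the median-depth heuristic are illustrative assumptions, not Dragoon's actual code.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics (hypothetical container, values come from calibration)."""
    fx: float  # focal length along x, in pixels
    fy: float  # focal length along y, in pixels
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels


def deproject(u, v, depth_m, k):
    """Back-project pixel (u, v) with metric depth into the camera frame."""
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return (x, y, depth_m)


def bbox_to_point(bbox, depth_image, k):
    """Estimate a 3D point for a detection box on an aligned depth image.

    bbox is (x_min, y_min, x_max, y_max) in pixels; depth_image is a list of
    rows holding depth in meters, with 0.0 marking an invalid reading.
    """
    x_min, y_min, x_max, y_max = bbox
    depths = [
        depth_image[v][u]
        for v in range(y_min, y_max)
        for u in range(x_min, x_max)
        if depth_image[v][u] > 0.0
    ]
    if not depths:
        return None  # no valid depth inside the box
    # Assumption: the median is used to reject background and noisy pixels.
    depth_m = statistics.median(depths)
    u_c = (x_min + x_max) / 2.0
    v_c = (y_min + y_max) / 2.0
    return deproject(u_c, v_c, depth_m, k)
```

A point obtained this way is expressed in the camera frame. Placing it on the map would additionally require the camera-to-robot transform and the robot pose from SLAM.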

RealSense RGB and Depth and Seek Thermal running on the robot’s AGX (external video)

Registration of points between the RealSense RGB sensor and the RealSense depth sensor

Calibration of RealSense

Result of calibration of Seek Thermal sensor

Software

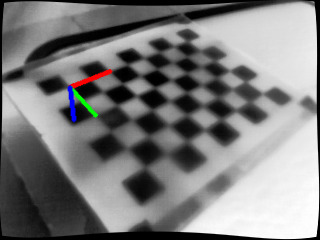

Our software pipeline consists of three major components: SLAM, human detection, and autonomy. The Velodyne VLP-16 and the onboard RealSense IMU provide the inputs to the Cartographer SLAM system, which we have implemented on Dragoon. We use YOLO to detect humans in both the RealSense RGB and the Seek Thermal streams. The calibration done during the spring semester between the RealSense RGB and RealSense depth streams lets us map the bounding box from the RGB image onto the depth image and extract the depth of the human, which gives us a 3D pose for each human in the frame. The global poses of humans shown on the visualizer are then determined by a Kalman filter, which receives every human detection from each individual frame.
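As a rough illustration of how a Kalman filter can fuse noisy per-frame detections into one stable position, the sketch below tracks a single stationary human in the 2D map frame. It assumes a constant-position motion model and the same scalar variance on both axes. The `HumanTrack` class and its noise values are hypothetical and are not taken from Dragoon's implementation.

```python
class HumanTrack:
    """Constant-position Kalman filter for one human in the 2D map frame."""

    def __init__(self, x, y, meas_var=0.25, process_var=0.01):
        self.x = x            # estimated map x, in meters
        self.y = y            # estimated map y, in meters
        self.p = meas_var     # estimate variance, seeded from the first detection
        self.r = meas_var     # measurement noise variance (assumed)
        self.q = process_var  # process noise variance (assumed)

    def update(self, zx, zy):
        """Fuse one detection (zx, zy) given in the map frame."""
        # Predict: the human is modeled as static, so only uncertainty grows.
        self.p += self.q
        # Correct: blend prediction and measurement using the Kalman gain.
        gain = self.p / (self.p + self.r)
        self.x += gain * (zx - self.x)
        self.y += gain * (zy - self.y)
        self.p *= 1.0 - gain
        return self.x, self.y
```

With several humans in view, each detection would first have to be associated with a track (for example, by nearest distance) before calling `update`. That association step is left out of this sketch.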

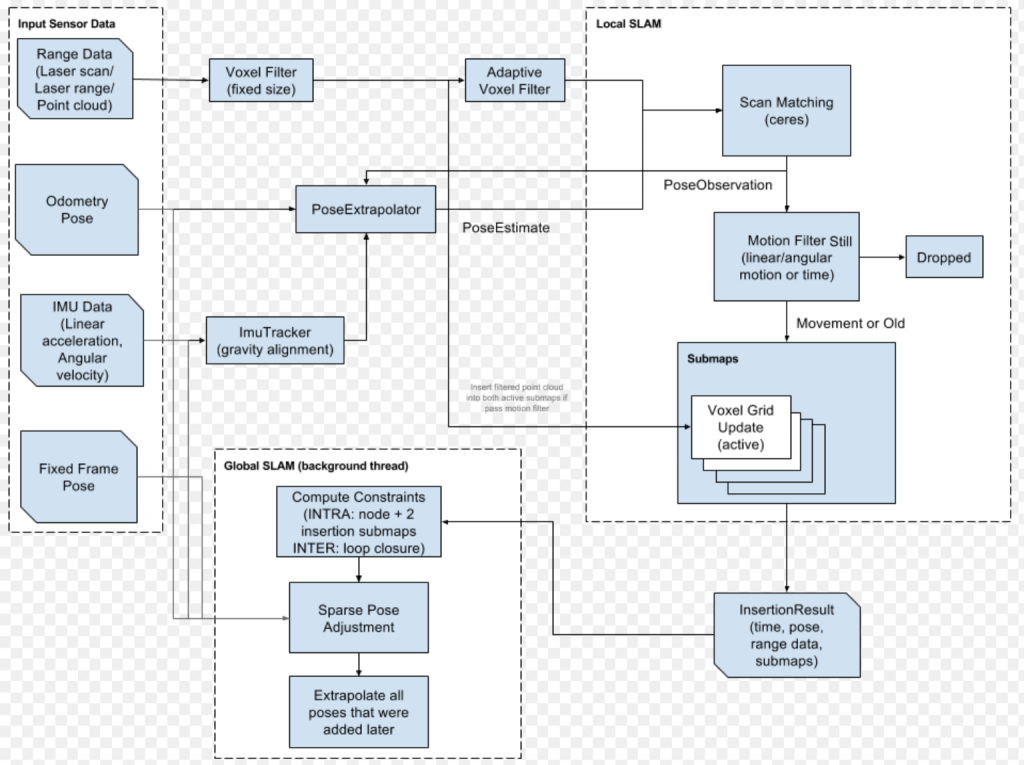

During the fall semester, we completed registration between the Seek Thermal and the RealSense, and we now feed global poses of humans from both the RealSense RGB and the Seek Thermal into the Kalman filter. Furthermore, we collected custom data and retrained both our RGB and IR YOLO networks to better detect supine humans in dim and occluded conditions. Another major software component we worked on during the fall semester is Dragoon’s autonomy platform. Dragoon can now navigate a space autonomously, so it does not require human input to find survivors. This was done by implementing three components: a global planner that determines exploration points in previously unexplored spaces, a local path-following controller that guides Dragoon between exploration points, and a behavioral executive that determines whether Dragoon should explore more of its current area or proceed to the next unseen frontier.
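One common way to pick exploration points in unexplored space is frontier detection on an occupancy grid, sketched below. It assumes the cell convention used by ROS occupancy grids (-1 unknown, 0 free, 100 occupied) and treats any free cell next to an unknown cell as a frontier. The function names and the nearest-frontier selection rule are illustrative assumptions. Dragoon's global planner may choose its exploration points differently.

```python
UNKNOWN, FREE, OCCUPIED = -1, 0, 100


def find_frontiers(grid):
    """Return (row, col) of every free cell with a 4-connected unknown neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbor is enough
    return frontiers


def nearest_frontier(grid, robot_cell):
    """Pick the frontier closest to the robot, or None when the map is fully explored."""
    frontiers = find_frontiers(grid)
    if not frontiers:
        return None
    return min(
        frontiers,
        key=lambda f: (f[0] - robot_cell[0]) ** 2 + (f[1] - robot_cell[1]) ** 2,
    )
```

In this sketch, a `None` result is the signal a behavioral executive could use to conclude that nothing is left to explore.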

SLAM system working on robot base (external video)

Overview of Cartographer SLAM system

Custom data collection (on left) and occlusion augmentation for retraining (on right)

Diagram of Dragoon’s Autonomy Behavior

Vehicle UI

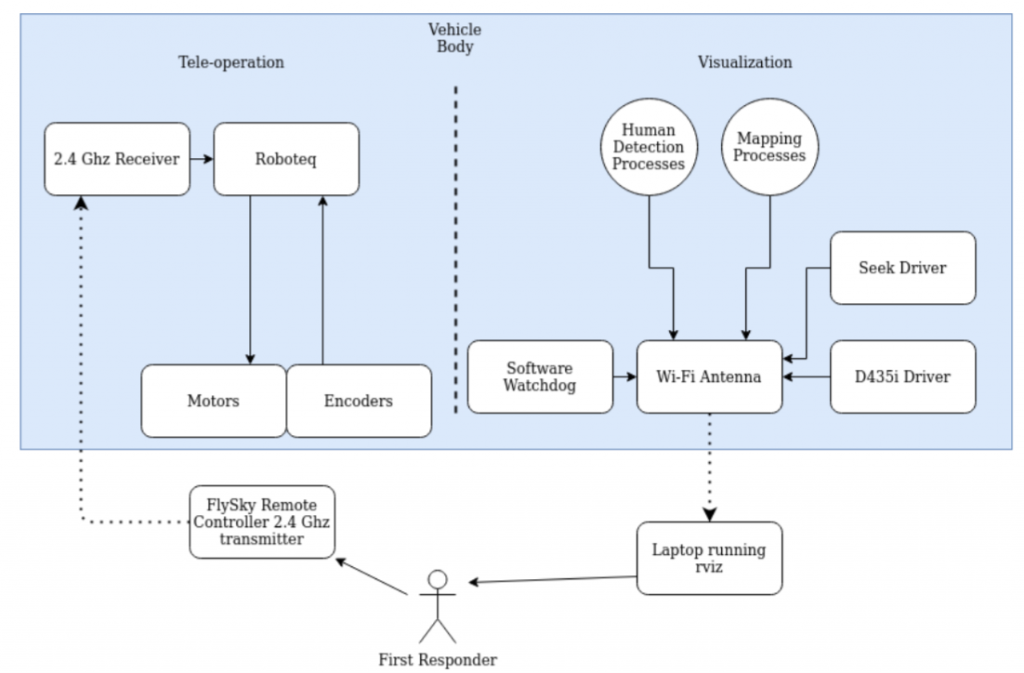

Vehicle UI architecture

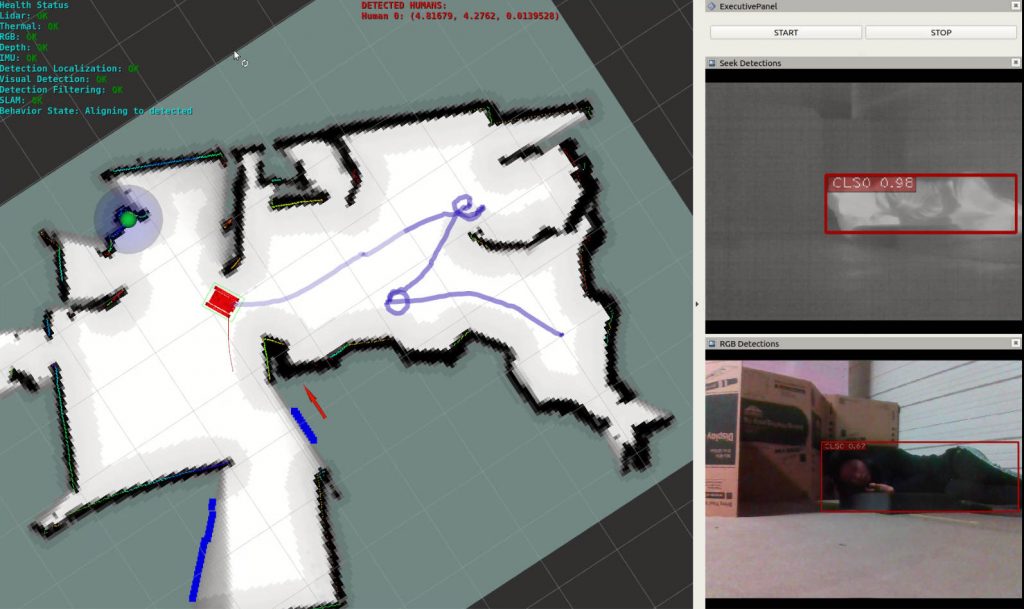

Final visualizer

As seen above, our Vehicle UI uses Rviz to display the Cartographer map (with the locations of detected humans drawn on it) and data from our sensors on a screen in front of the operator. In the top left corner of the visualizer, a watchdog module indicates the heartbeats of the different sensors. At the top middle of the screen, the operator can see whether any humans have been detected and where they are. Red dots on the map indicate “evidences” of human detection from individual RGB frames, while green dots are the positions of the humans as determined by the Kalman filter from those evidences. The path taken by the robot is also drawn on the screen. Finally, on the right side, the operator can see the current Seek Thermal and RealSense RGB streams, both with YOLO running on them.
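The watchdog idea can be sketched as a small class that records when each sensor last reported and flags any sensor that has been silent for too long. The `Watchdog` class, the sensor names, and the one-second timeout are hypothetical. In a ROS system the `beat` call would typically be triggered from each sensor topic's subscriber callback.

```python
import time


class Watchdog:
    """Tracks the last heartbeat per sensor and reports which ones are alive."""

    def __init__(self, sensors, timeout_s=1.0, clock=time.monotonic):
        self._timeout = timeout_s
        self._clock = clock  # injectable so the logic can be tested without sleeping
        self._last_seen = {name: None for name in sensors}

    def beat(self, name):
        """Record that a message from `name` has just arrived."""
        self._last_seen[name] = self._clock()

    def status(self):
        """Map each sensor to True if it reported within the timeout."""
        now = self._clock()
        return {
            name: seen is not None and now - seen <= self._timeout
            for name, seen in self._last_seen.items()
        }
```

A UI panel could poll `status()` at a fixed rate and color each sensor indicator accordingly.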