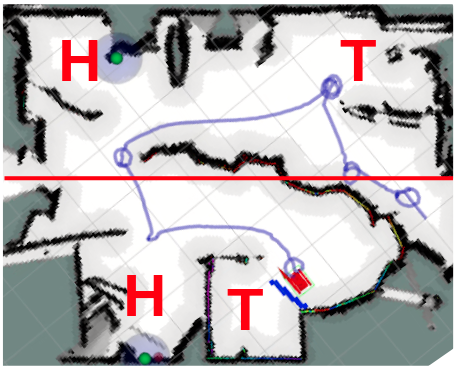

Full system test on 4/12/21. Visualizer on left and test footage on right.

SVD Performance

Required validations:

- Locomotion (M.P.5)

- Procedure:

- Operator powers on the robot and drives it forwards and backwards with the remote control at a minimum speed of 0.25 m/s.

- Operator turns the robot in place.

- Validation:

- Robot connects to and is controllable by the remote control

- Robot moves forwards and backwards at a minimum speed of 0.25 m/s.

- Robot turns in place at a minimum angular speed of 36 degrees per second.

- Performance: The robot connected to the remote control and exceeded both the 0.25 m/s forward/backward speed requirement and the 36 degrees per second turning requirement.

- Perception (M.P.4)

- Procedure:

- Operator initializes perception system and visualizer.

- Robot is placed in one of two scenarios determined by a coin flip.

- Validation:

- Jetson AGX connects to RealSense, Seek and VLP16.

- RealSense depth, RGB, and Seek Thermal streams are operational and stream to visualizer on the operator’s laptop.

- Visualizer displays the 2D map and video streams at a frequency of at least 10 Hz.

- Subsystem health status monitor is visible and displays “OK” status for all components.

- Performance: All data streams reached the visualizer on the operator’s laptop at 10 Hz or higher. Additionally, all major subsystems were monitored and their health status was displayed continuously.

- SLAM and Basic Human Detection (M.P.0, M.P.1, M.P.3)

- Procedure:

- Operator moves robot at a max speed of 0.2 m/s through the obstacles in the room for at least 2 minutes using only the 2D map and video streams displayed on the visualizer.

- Robot detects and localizes a single human (location determined by coin flip).

- Validation:

- 2D map of the room for up to 10 m from the robot is displayed and updated on the visualizer; the shapes of major obstacles are captured in the map.

- The position and travelled path of the robot is displayed on a 2D map on the visualizer.

- The robot detects and localizes a human lying down horizontally and facing the robot within 5 seconds of complete, unobstructed entrance into the RGB FOV.

- The location of the human is displayed on the 2D map of the visualizer. The centroidal accuracy of the localization is within 0.5 meters relative to the mapped room geometry.

- Performance: The robot localized humans to within 0.5 m and detected people lying down after navigating through an obstacle field. It also retained the locations of detected people after leaving the area.

- Human Detection Conditionality (M.P.0, M.P.1)

- Procedure:

- Robot is placed at a distance of 7 m, facing a single well-lit human.

- The human transitions between various poses to demonstrate the failure and success modes of human detection at this distance.

- Validation:

- Robot is able to detect and localize the subject within 5 seconds in each of the following positions at 7 m distance: standing, seated, and side-lying with a limb separated.

- Performance: Results are detailed under the “Component Testing and Results” section of “Documents”.
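The numeric thresholds in the validations above can be collected into a small checker. This is a minimal sketch, not the team's test harness: the `Measurement` class, its field names, and `failed_criteria` are hypothetical, and only the threshold values (0.25 m/s, 36 degrees per second, 10 Hz, 5 s) come from the criteria listed above.

```python
from __future__ import annotations

from dataclasses import dataclass

# Thresholds taken from the validation criteria above.
MIN_LINEAR_SPEED_MPS = 0.25     # forward/backward speed
MIN_ANGULAR_SPEED_DPS = 36.0    # turn-in-place speed, degrees per second
MIN_STREAM_RATE_HZ = 10.0       # visualizer update frequency
MAX_DETECTION_LATENCY_S = 5.0   # time from entering the RGB FOV to detection


@dataclass
class Measurement:
    """Hypothetical container for values logged during one test run."""
    linear_speed_mps: float
    angular_speed_dps: float
    stream_rate_hz: float
    detection_latency_s: float


def failed_criteria(m: Measurement) -> list[str]:
    """Return the names of the criteria that the run did not meet."""
    checks = {
        "linear speed": m.linear_speed_mps >= MIN_LINEAR_SPEED_MPS,
        "angular speed": m.angular_speed_dps >= MIN_ANGULAR_SPEED_DPS,
        "stream rate": m.stream_rate_hz >= MIN_STREAM_RATE_HZ,
        "detection latency": m.detection_latency_s <= MAX_DETECTION_LATENCY_S,
    }
    return [name for name, passed in checks.items() if not passed]
```

An empty list from `failed_criteria` means the run met all four thresholds; otherwise the list names the criteria to re-test.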

Additional validations:

- Verified detection beyond 7m (up to 13m for standing human)

- Performed multi-human tracking and localization

- Verified IR human detection up to 13m

FVD Performance

FVD setup (on left) and location of the four “rooms” (on right). “H” represents the chosen room given a “heads” coin flip, while “T” represents the chosen room given a “tails” coin flip.

Procedure

- Place the robot in a simulated 50 m² disaster scenario with dim lighting (<150 lux) and occluding obstacles placed around the room. An example of this is shown in the left image above.

- Two human “victims” will be randomly placed in 2 of 4 available rooms in the area in a supine position with at most 25% occlusion; one victim shall be occluded and the other shall not. The location of one victim is one of the two “rooms” in the top half of the right image above, while the other location is one of the two “rooms” in the bottom half of the right image above. Two coin flips determine the location of the humans.

- Send a start signal to the robot.

Validation

Robot explores the room and detects two people at a rate of 10 seconds per square meter with 0.5 m accuracy (M.P.0, M.P.1, M.P.2, M.P.7, M.P.8, M.N.6). The RGB stream and a 2D map of the room for up to 10 m from the robot are displayed and updated on the visualizer; detected humans and the shapes of major obstacles are captured in the map (M.P.3, M.P.4).
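The 0.5 m accuracy criterion can be read as a bound on the distance between the estimated and the surveyed position of a victim in map coordinates. The sketch below assumes 2D `(x, y)` positions in meters and a Euclidean error; the function names are hypothetical and the page does not state how the team measured the error.

```python
import math

MAX_LOCALIZATION_ERROR_M = 0.5  # accuracy requirement from the validation text


def localization_error(estimated_xy, ground_truth_xy):
    """Euclidean distance in meters between the estimated and true positions."""
    dx = estimated_xy[0] - ground_truth_xy[0]
    dy = estimated_xy[1] - ground_truth_xy[1]
    return math.hypot(dx, dy)


def within_accuracy(estimated_xy, ground_truth_xy,
                    limit_m=MAX_LOCALIZATION_ERROR_M):
    """True if the estimate lies within limit_m of the true position."""
    return localization_error(estimated_xy, ground_truth_xy) <= limit_m
```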

Performance

| Required metric | Achieved performance |
| --- | --- |
| Detection under 5 s at 7 m | Detection under 3 s at 7 m |
| Detection under 5 s at 3 m, 150 lux, 25% occlusion | Detection under 2 s at 3 m, < 50 lux, < 50% occlusion |
| 2 detections in 30 m² in under 5 minutes | 2 detections in 30 m² in under 3 min 15 s |
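The last table row can be related to the rate of 10 seconds per square meter in the validation criterion with a short calculation. The helper name below is hypothetical; the area and times are the ones in the table.

```python
def seconds_per_square_meter(area_m2: float, duration_s: float) -> float:
    """Average exploration time spent per square meter of floor area."""
    if area_m2 <= 0:
        raise ValueError("area_m2 must be positive")
    return duration_s / area_m2


# Last table row: 30 square meters, 5:00 allowed, 3:15 measured.
required_rate = seconds_per_square_meter(30, 5 * 60)       # 10.0 s per square meter
measured_rate = seconds_per_square_meter(30, 3 * 60 + 15)  # 6.5 s per square meter
```

The 5-minute limit over 30 m² corresponds exactly to the required 10 s per square meter, and the measured 3 min 15 s corresponds to 6.5 s per square meter.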

Bonus Demonstration

In addition to the official FVD demonstrations, we showed how robust the system is to perturbation by asking the audience to construct an environment for Dragoon to explore while localizing people.

Similar to the environment shown in the left image above, we asked people to place cardboard barriers in an interesting arrangement that would create a challenge for Dragoon.

In one such arrangement, which Dragoon had never seen before, it again performed well, finding two people in the 50 m² environment in about 3 minutes.

In another environment, our team tweaked the setup to highlight a failure case of Dragoon’s exploration algorithm. We placed a barrier so that the gap between it and a neighboring barrier was too small. The exploration algorithm treated this gap as untraversable, so Dragoon did not enter every room of the environment and missed a human.
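The failure case comes down to a gap-width test of the kind many planners apply. The sketch below is a generic illustration, not Dragoon's exploration algorithm, which this page does not describe: the function name, the clearance model, and any values passed to it are assumptions.

```python
def gap_is_traversable(gap_width_m: float,
                       robot_width_m: float,
                       clearance_m: float) -> bool:
    """True if the robot fits through the gap with clearance on both sides.

    Assumed model: the gap must be at least the robot width plus the
    required clearance on each side.
    """
    return gap_width_m >= robot_width_m + 2.0 * clearance_m
```

Under this model, a room reachable only through a gap that fails the test is never entered, so a person inside it cannot be detected.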

An example of Dragoon’s 5 minute detection test can be seen here.