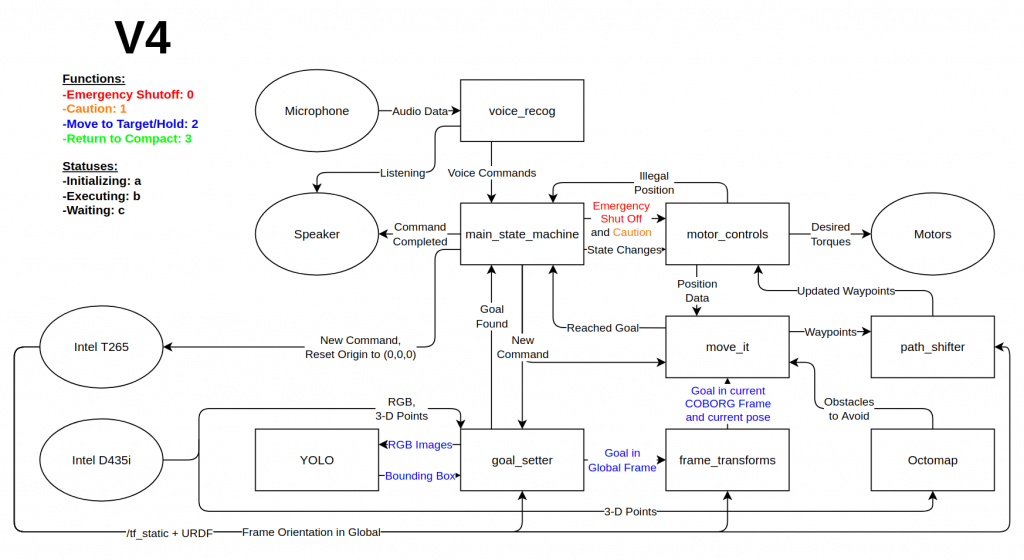

The Coborg's data flow begins at the microphone. Voice commands captured by the microphone are passed to the voice_recog node, which interprets the audio and publishes the parsed commands to the main_state_machine node. The system's operation revolves around the main_state_machine node, which tracks the robot's current state and decides which commands should be executed. Upon receiving a new command, the goal_setter node collects a series of images, 3D points, and frames from the two Intel RealSense cameras and sends the images to the YOLO node. The YOLO node identifies hands in the images and returns their bounding boxes to the goal_setter node. Based on the positions of the hands, the goal_setter node determines the ideal target location and forwards the robot's new global goal to the move_it node. The move_it node generates a path for the robot to follow from its current position to the global target. Finally, the motor_controls and gain_setter nodes determine which control policy to use and send the lowest-level torque commands to the motors.
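The hand-off from parsed voice commands through the state machine to a hand-based goal can be illustrated with a minimal, ROS-free Python sketch. Everything in it is a hypothetical stand-in, not the Coborg's actual code: the `State` values, the command strings `"target"` and `"stop"`, the `BoundingBox` layout, and the rule of averaging the 3D points at the centers of the detected hand boxes are all assumptions for illustration. It also assumes the 3D points arrive as an organized point cloud aligned pixel-for-pixel with the camera image, and it omits the move_it and motor_controls stages entirely.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Dict, List, Optional, Tuple

Point3D = Tuple[float, float, float]


@dataclass
class BoundingBox:
    """Pixel-space box for one detected hand (hypothetical layout of the YOLO output)."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int

    def center(self) -> Tuple[int, int]:
        # Integer pixel coordinates (column, row) of the box center.
        return ((self.x_min + self.x_max) // 2, (self.y_min + self.y_max) // 2)


class State(Enum):
    """Hypothetical robot states; the real state machine may differ."""
    IDLE = auto()
    SEEKING_GOAL = auto()
    MOVING = auto()


# Hypothetical (state, command) -> next-state table standing in for main_state_machine.
TRANSITIONS: Dict[Tuple[State, str], State] = {
    (State.IDLE, "target"): State.SEEKING_GOAL,
    (State.SEEKING_GOAL, "stop"): State.IDLE,
    (State.MOVING, "stop"): State.IDLE,
}


def next_state(state: State, command: str) -> State:
    """Return the next state; commands not valid in the current state are ignored."""
    return TRANSITIONS.get((state, command), state)


def goal_from_hands(
    boxes: List[BoundingBox],
    points: List[List[Optional[Point3D]]],
) -> Optional[Point3D]:
    """goal_setter stand-in: average the 3D points found at each hand-box center.

    points[row][col] is assumed to hold the 3D point for pixel (col, row),
    or None where the depth camera returned no valid depth.
    """
    hits: List[Point3D] = []
    for box in boxes:
        col, row = box.center()
        p = points[row][col]
        if p is not None:
            hits.append(p)
    if not hits:
        return None  # No usable hand position, so no new global goal.
    n = len(hits)
    x = sum(p[0] for p in hits) / n
    y = sum(p[1] for p in hits) / n
    z = sum(p[2] for p in hits) / n
    return (x, y, z)


if __name__ == "__main__":
    state = next_state(State.IDLE, "target")
    print(state)  # State.SEEKING_GOAL
    # Toy 4x4 organized cloud: pixel (col, row) maps to (col, row, 1.0) metres.
    cloud = [[(float(c), float(r), 1.0) for c in range(4)] for r in range(4)]
    hands = [BoundingBox(0, 0, 2, 2), BoundingBox(2, 2, 3, 3)]
    print(goal_from_hands(hands, cloud))  # (1.5, 1.5, 1.0)
```

Keeping the state transitions in a lookup table makes it explicit which commands are accepted in each state, mirroring the role the text gives main_state_machine of deciding which commands should be executed; a real system would replace the averaging rule with whatever target-selection logic goal_setter actually uses.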