Spring Testing and Validation

Perception Subsystem Testing (SVD)

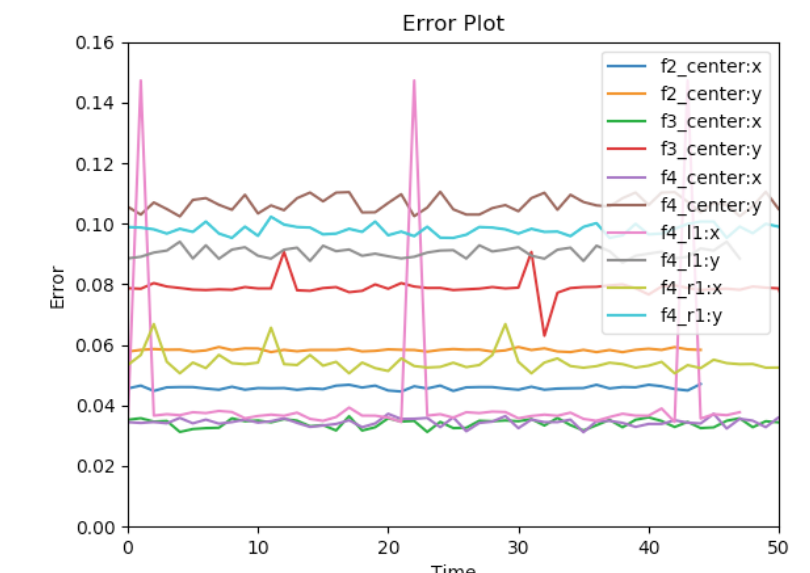

We tested the system against two evaluation criteria. The first is to localize objects within 5 meters with less than 20 cm error (M.P.6). We placed the object at various locations on a 5×4 meter grid and recorded the position reported by the sensor pod. The localization error plot is shown below. For all locations, the maximum error is less than 20 cm.

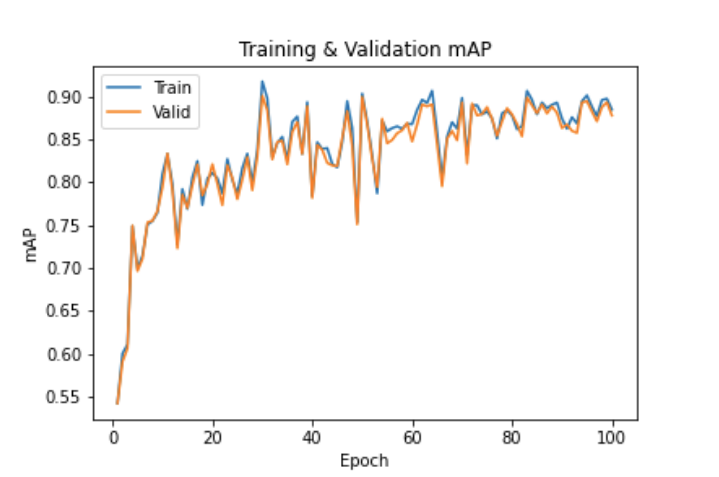
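
The pass/fail check for M.P.6 can be sketched as follows. This is a minimal illustration, not the team's evaluation script: the sample positions are made-up placeholders rather than measured data, and the helper names `localization_errors` and `meets_requirement` are hypothetical.

```python
import math

# Hypothetical (ground truth, estimate) object positions in meters.
# Placeholder values for illustration only, not the recorded test data.
samples = [
    ((1.0, 1.0), (1.05, 0.98)),
    ((3.0, 2.0), (3.10, 2.08)),
    ((5.0, 4.0), (4.88, 4.10)),
]

MAX_ERROR_M = 0.20  # M.P.6 threshold: less than 20 cm


def localization_errors(pairs):
    """Return the Euclidean error in meters for each (truth, estimate) pair."""
    return [math.dist(truth, estimate) for truth, estimate in pairs]


def meets_requirement(pairs, threshold=MAX_ERROR_M):
    """True when the worst-case error over all locations is below the threshold."""
    return max(localization_errors(pairs)) < threshold


errors = localization_errors(samples)
print(f"max error: {max(errors):.3f} m, pass: {meets_requirement(samples)}")
```

Checking the maximum rather than the mean matches the way the criterion is stated: every tested location must stay under the 20 cm bound.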

The second criterion is to detect objects with 70% accuracy (M.P.7). In addition to visual inspection of the demonstration video, the mean average precision (mAP) plot in Fig. 12 validates this criterion.
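
For readers unfamiliar with the metric, one common way to compute mAP is sketched below. The report does not state the evaluation protocol (IoU threshold, interpolation scheme, class list), so this sketch assumes all-point interpolation and detections that have already been matched to ground truth. The class names and detection values are hypothetical.

```python
def average_precision(detections, num_ground_truth):
    """All-point interpolated AP for one class.

    detections: (confidence, is_true_positive) pairs, already matched
    to ground truth (the matching step is assumed, not shown).
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    precisions, recalls, true_positives = [], [], 0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        true_positives += int(is_tp)
        precisions.append(true_positives / rank)
        recalls.append(true_positives / num_ground_truth)
    # Interpolate: make precision non-increasing as recall grows.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Integrate precision over recall.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class):
    """per_class maps a class name to (detections, num_ground_truth)."""
    aps = [average_precision(d, n) for d, n in per_class.values()]
    return sum(aps) / len(aps)


# Hypothetical detections for two made-up classes.
per_class = {
    "person": ([(0.9, True), (0.8, False), (0.7, True)], 2),
    "car": ([(0.95, True), (0.6, True)], 2),
}
m_ap = mean_average_precision(per_class)
print(f"mAP = {m_ap:.3f}, meets 70% target: {m_ap >= 0.70}")
```

Comparing mAP directly against the 70% figure is an assumption here, following the report's use of the mAP plot as evidence for M.P.7.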

Planning Subsystem Testing (SVD)

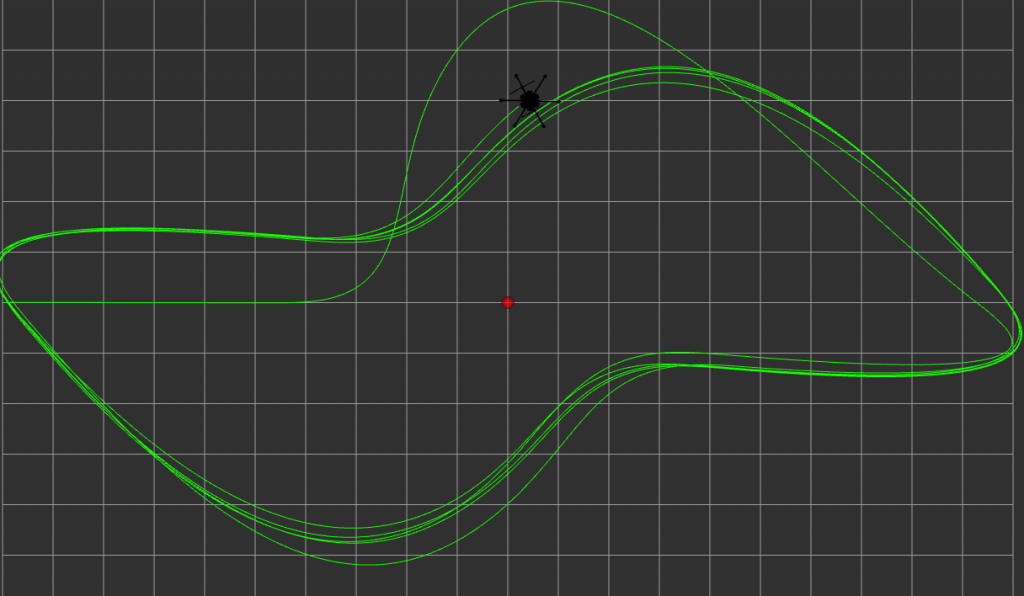

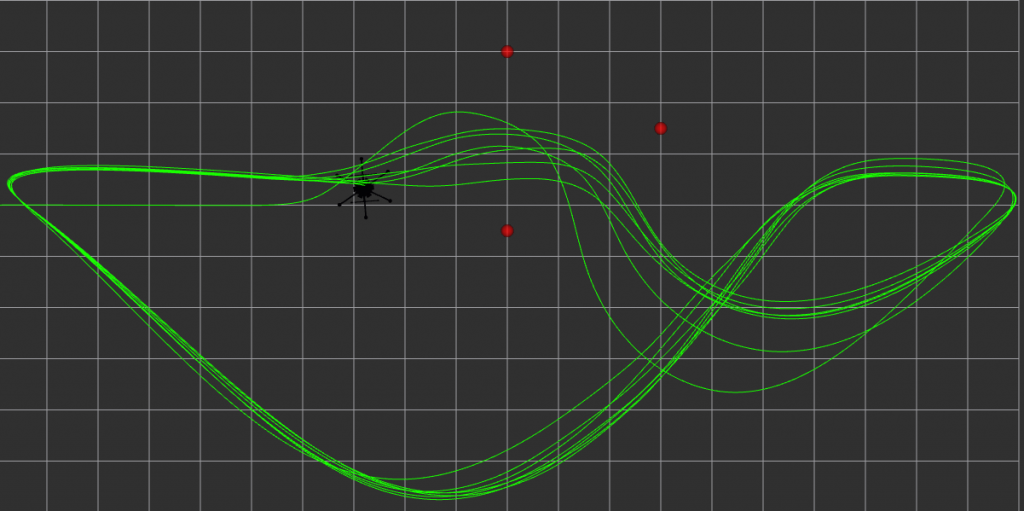

Using the SITL kinematic simulator, we tested the conditions under which the drone successfully avoids obstacles. We tested the system in three scenarios: a single stationary obstacle, multiple stationary obstacles, and a single moving obstacle. Our testing concluded that the potential field planner succeeds when the following conditions hold:

- Stationary Obstacle:

  - Gaussian sensor noise is less than 0.5 m (applied to the X, Y, and Z directions independently).

  - The detection system detects obstacles in at least 50% of motion steps.

- Multiple Obstacles:

  - The spacing between obstacles through which the drone flies must be larger than twice the drone’s diameter.

- Moving Obstacle:

  - The obstacles must move no faster than the drone.
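
To make the noise and detection-rate conditions concrete, the sketch below shows a textbook potential field step driven by a noisy, intermittent detector. It is not the team's planner: the gains `k_att` and `k_rep`, the influence radius, the step cap, the goal tolerance, and the choice to reuse the last detection after a miss are all assumptions made for illustration.

```python
import math
import random


def potential_field_step(pos, goal, obstacles, k_att=1.0, k_rep=2.0,
                         influence=3.0, max_step=0.2):
    """Move one capped step along the combined attractive and repulsive force."""
    force = [k_att * (g - p) for p, g in zip(pos, goal)]  # pull toward the goal
    for obs in obstacles:
        diff = [p - o for p, o in zip(pos, obs)]
        d = math.sqrt(sum(c * c for c in diff))
        if 1e-6 < d < influence:
            # Textbook repulsive gradient; it vanishes at the influence radius.
            mag = k_rep * (1.0 / d - 1.0 / influence) / (d * d)
            force = [f + mag * c / d for f, c in zip(force, diff)]
    norm = math.sqrt(sum(f * f for f in force))
    if norm < 1e-9:
        return list(pos)  # forces cancel: a local minimum
    scale = min(max_step, norm) / norm  # cap the step length
    return [p + scale * f for p, f in zip(pos, force)]


def noisy_detection(obstacle, rng, sigma=0.1, detect_prob=0.5):
    """Return a noisy obstacle position, or None when the detector misses."""
    if rng.random() >= detect_prob:
        return None
    # Independent Gaussian noise on X, Y, and Z.
    return [c + rng.gauss(0.0, sigma) for c in obstacle]


def simulate(start, goal, obstacle, steps=400, seed=0, **detector):
    """Fly toward the goal, planning around the most recent detection."""
    rng = random.Random(seed)
    pos, last_seen, min_clearance = list(start), None, math.inf
    for _ in range(steps):
        seen = noisy_detection(obstacle, rng, **detector)
        if seen is not None:
            last_seen = seen
        pos = potential_field_step(pos, goal, [last_seen] if last_seen else [])
        min_clearance = min(min_clearance, math.dist(pos, obstacle))
        if math.dist(pos, goal) < 0.3:  # assumed goal tolerance in meters
            break
    return pos, min_clearance


final_pos, clearance = simulate([0.0, 0.0, 1.0], [10.0, 0.0, 1.0], [5.0, 0.3, 1.0])
print(f"final position: {final_pos}, closest approach: {clearance:.2f} m")
```

Sweeping `sigma` and `detect_prob` in a loop of this kind is one way to probe where avoidance starts to fail, which is the sort of boundary the conditions above describe.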

The following video shows the moving and multiple object avoidance scenarios:

Fall Testing and Validation

Hardware in the Loop (HITL) Testing

The simulations in the spring used a simple kinematic controller. Using the DJI Assistant software, we tested the motion planner and verified that it interfaces properly with the low-level DJI motor controller. The motion simulation was performed using a DJI dynamic simulator. Screenshots of the results of these tests are shown below.

Cart Testing

To test the integration of both perception and planning subsystems on the final flight platform, the team conducted a series of cart tests. In these tests, the drone sat on a cart with a monitor to visualize both the live perception data and the recommended path. An operator pushed the cart along the path to simulate flight motion. Performing these tests without the overhead of flying the drone accelerated the testing process to resolve any integration issues. This picture shows the setup for this environment.

Finally, these tests were repeated by physically flying the drone according to the FVD specifications. Both the cart tests and the flight tests explored a wide range of testing parameters: single object, multiple objects, moving object, multiple classes, various maximum drone speeds, etc. By scaling the testing up in this manner, the team could validate each subsystem in a simplified setting until reaching the final demonstration environment.