The AACAS project will use a DJI M600 Pro as its flight platform, which we acquired from Dr. George Kantor. Upon receiving the drone, we ran a full systems diagnostic and conducted the test flight shown in the following image. All parts, connections, and motors are fully operational, and we do not anticipate any hardware issues with the drone going forward.

Interfacing with the Platform

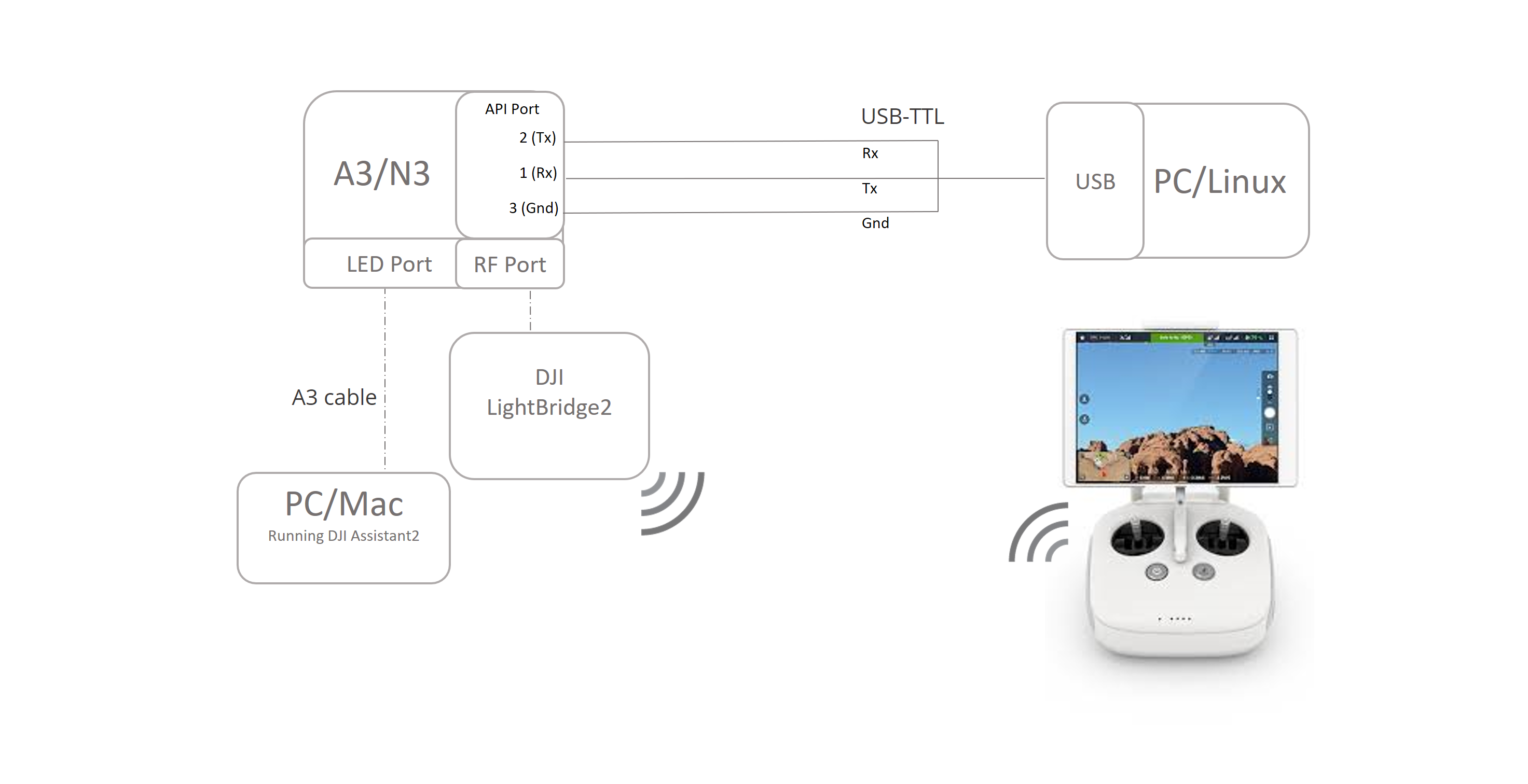

We will connect the drone to ROS using an NVIDIA Jetson Xavier. The block diagram below shows the connections needed to operate the drone in DJI’s simulation environment. The “A3/N3” block is the flight controller on the DJI drone; it connects to the simulation PC via a USB cable (bottom block). The ROS computer (right) connects directly to the A3/N3 via a USB-TTL cable.

http://wiki.ros.org/dji_sdk/Tutorials/Getting%20Started

We verified the connection by powering on the drone and visualizing the IMU and velocity outputs in RViz.
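Beyond eyeballing the plots in RViz, a quick numeric sanity check can confirm the streams are plausible while the drone sits still on the ground. The sketch below is hypothetical: it assumes the IMU reports linear acceleration including gravity (the usual `sensor_msgs/Imu` convention), so the accelerometer magnitude should be close to g, and that the velocity estimate should be near zero. The function name and tolerances are illustrative, not part of our codebase, and the ROS subscription that would feed it is omitted.

```python
import math

GRAVITY = 9.80665  # standard gravity, m/s^2


def imu_at_rest_ok(accel, velocity, accel_tol=0.5, vel_tol=0.1):
    """Return True if readings look plausible for a drone resting on the ground.

    accel:    (ax, ay, az) linear acceleration in m/s^2; assumed to include
              gravity, so its magnitude should be about g at rest.
    velocity: (vx, vy, vz) velocity estimate in m/s; should be about zero.
    accel_tol, vel_tol: hypothetical tolerances, to be tuned on the platform.
    """
    accel_norm = math.sqrt(sum(a * a for a in accel))
    speed = math.sqrt(sum(v * v for v in velocity))
    return abs(accel_norm - GRAVITY) < accel_tol and speed < vel_tol


if __name__ == "__main__":
    # Readings resembling a level, stationary drone pass the check.
    print(imu_at_rest_ok((0.05, -0.02, 9.79), (0.0, 0.01, 0.0)))
```

An all-zero accelerometer reading, for example, would fail this check, which usually points to a dead or unconfigured data stream rather than a real measurement.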

Autonomous Flight

We completed our first fully autonomous flight test! In this test, we flew the three-obstacle scenario from our hardware-in-the-loop (HITL) testing and the SVD. The video shows the drone launch autonomously, climb to an altitude of 5 meters, and fly a back-and-forth avoidance path. When the drone passes over the Tartan logo, you can see it avoid the simulated obstacles.
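One way to check a flight like this after the fact is to measure how much clearance the flown or planned path kept from each simulated obstacle. The following is a minimal 2D sketch under stated assumptions: obstacles are modeled as circles `(x, y, radius)` in meters and the path as a list of `(x, y)` waypoints. These representations and the helper names are hypothetical, not the project's actual obstacle model or avoidance algorithm.

```python
import math


def point_segment_distance(p, a, b):
    """Shortest distance from point p to segment ab (2D tuples, meters)."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: a and b coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, clamped to the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    cx, cy = ax + t * dx, ay + t * dy
    return math.hypot(px - cx, py - cy)


def min_clearance(path, obstacles):
    """Smallest gap between a waypoint path and any circular obstacle.

    path:      list of (x, y) waypoints in meters (hypothetical format)
    obstacles: list of (x, y, radius) tuples in meters (hypothetical format)
    A negative result means the path cuts through an obstacle.
    """
    best = math.inf
    for a, b in zip(path, path[1:]):
        for ox, oy, r in obstacles:
            best = min(best, point_segment_distance((ox, oy), a, b) - r)
    return best


if __name__ == "__main__":
    straight = [(0.0, 0.0), (10.0, 0.0)]
    print(min_clearance(straight, [(5.0, 2.0, 1.0)]))  # path passes 1 m outside
```

Checking clearance per segment rather than per waypoint matters here, since a path can pass through an obstacle between two waypoints that are both outside it.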

User Interface

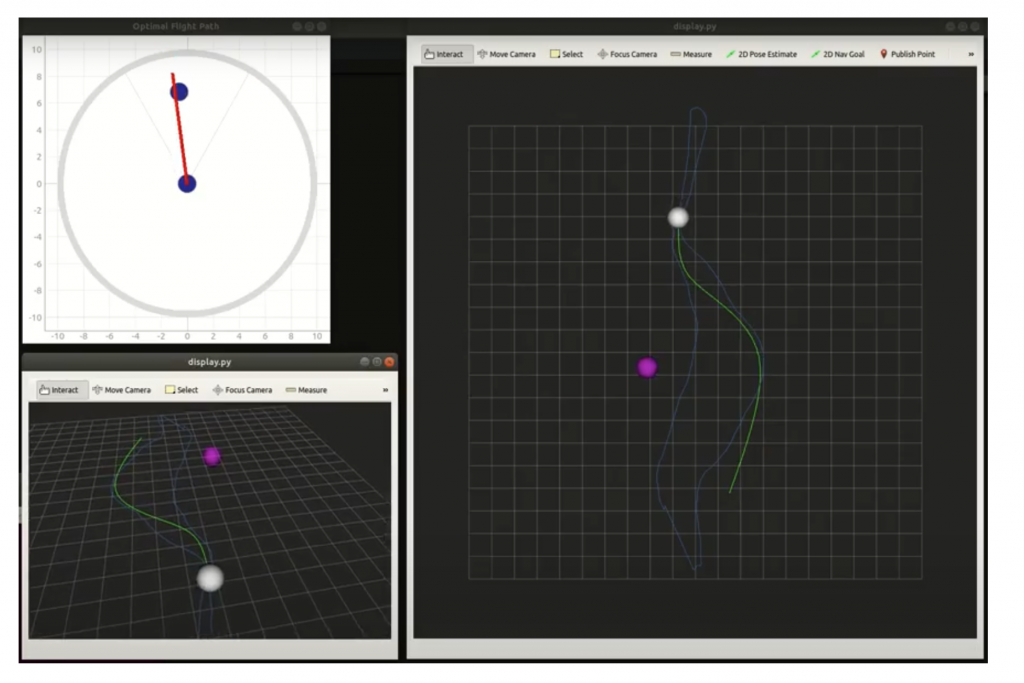

For manual flight, the detected objects and the flight plan must be relayed to the human pilot. This is done through a detailed UI, which connects to the Xavier over a local network through a dedicated router. Over this link, the UI displays the locations of objects, the drone's future and past trajectories, its present location, and the desired velocity. The snapshot below, from a real-time test of the UI, shows what the system sees and an optimal path around the objects.
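To make the contents of that link concrete, here is a hypothetical message structure bundling the quantities listed above into one snapshot that can be serialized to JSON. The class name, field names, units, and the choice of JSON are illustrative assumptions; the actual message format and transport (for example UDP or TCP over the router) are not specified here and are not shown.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def _vec(values) -> Vec3:
    """Convert a decoded JSON list back into a float 3-tuple."""
    x, y, z = values
    return (float(x), float(y), float(z))


@dataclass
class UIState:
    """Hypothetical snapshot sent from the onboard computer to the pilot UI."""
    position: Vec3                  # present drone location (m)
    desired_velocity: Vec3          # commanded velocity (m/s)
    obstacles: List[Vec3] = field(default_factory=list)          # object locations (m)
    future_trajectory: List[Vec3] = field(default_factory=list)  # planned path (m)
    past_trajectory: List[Vec3] = field(default_factory=list)    # flown path (m)

    def to_json(self) -> str:
        # JSON has no tuple type, so tuples are written as lists.
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, text: str) -> "UIState":
        d = json.loads(text)
        return cls(
            position=_vec(d["position"]),
            desired_velocity=_vec(d["desired_velocity"]),
            obstacles=[_vec(v) for v in d["obstacles"]],
            future_trajectory=[_vec(v) for v in d["future_trajectory"]],
            past_trajectory=[_vec(v) for v in d["past_trajectory"]],
        )


if __name__ == "__main__":
    state = UIState(
        position=(0.0, 0.0, 5.0),
        desired_velocity=(1.0, 0.5, 0.0),
        obstacles=[(4.0, 1.0, 5.0)],
        future_trajectory=[(1.0, 0.5, 5.0), (2.0, 1.5, 5.0)],
        past_trajectory=[(-1.0, 0.0, 5.0)],
    )
    print(UIState.from_json(state.to_json()) == state)
```

Sending one self-contained snapshot per update, rather than separate messages per quantity, keeps the three UI views consistent with each other at every frame.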

Shown below is the full GUI. The upper-left display shows the immediate direction the pilot must take to avoid obstacles, along with any detected objects. The right display shows a top-down view of the drone (white), the objects (purple), the planned optimal path (green), and the past path (blue). The bottom-left display shows the same scene from a third-person view.
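As an illustration of how a directional cue like the one in the upper-left display could be derived, the sketch below converts a desired horizontal velocity into an angle relative to the drone's nose and then into a coarse instruction. It assumes an ENU world frame with yaw measured counterclockwise from the +x axis, so positive relative angles mean "turn left"; the function names, the dead band, and the labels are hypothetical and are not taken from our UI code.

```python
import math


def relative_bearing_deg(vx, vy, yaw_rad):
    """Angle of the desired velocity relative to the drone's nose, in [-180, 180).

    Assumes an ENU world frame with yaw measured counterclockwise from +x,
    so positive angles are to the pilot's left.
    """
    angle = math.degrees(math.atan2(vy, vx) - yaw_rad)
    return (angle + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)


def direction_cue(vx, vy, yaw_rad, dead_band_deg=15.0):
    """Map a desired velocity to a coarse cue (hypothetical labels)."""
    if math.hypot(vx, vy) < 1e-6:
        return "hold"
    a = relative_bearing_deg(vx, vy, yaw_rad)
    if abs(a) <= dead_band_deg:
        return "forward"
    if abs(a) >= 180.0 - dead_band_deg:
        return "back"
    return "left" if a > 0 else "right"


if __name__ == "__main__":
    # Drone facing north (+y, yaw = 90 deg); desired velocity points east (+x).
    print(direction_cue(1.0, 0.0, math.pi / 2))
```

The dead band keeps the cue from flickering between "left" and "right" when the desired direction is nearly straight ahead.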