Perception

Perception Pipeline

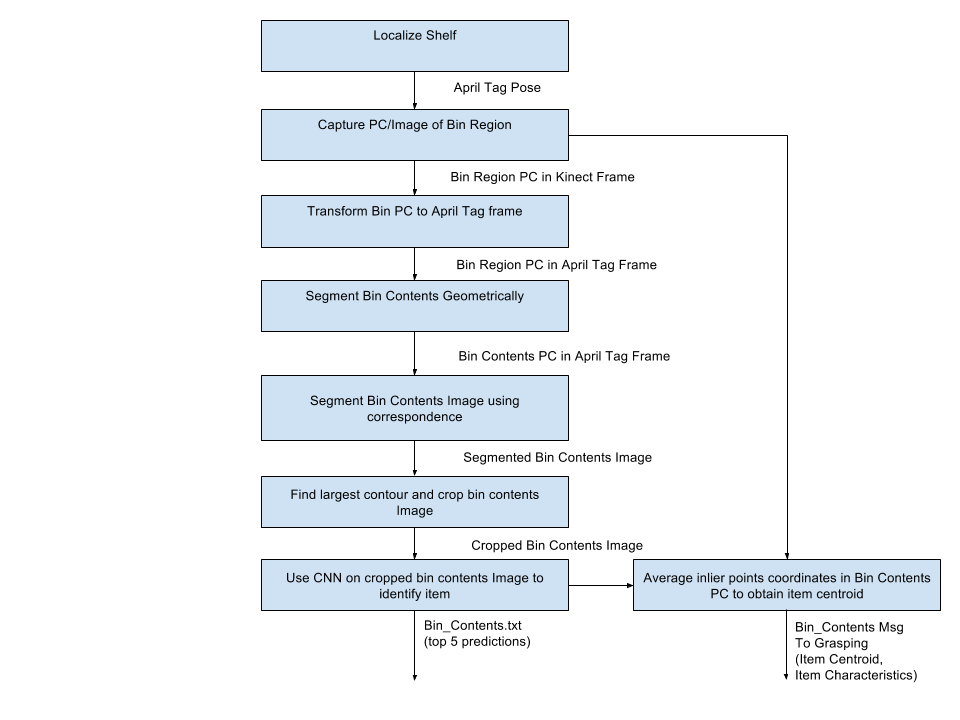

The figure above shows the current implementation of the Perception subsystem.

The main task was to develop a vision system that localizes given items inside the storage system. Grasping and point cloud segmentation require high precision in both item identification and shelf localization.

Item classification using neural networks

The system uses a Fully Convolutional Network (FCN) classifier for item identification and localization. The FCN achieved high accuracy in item identification, and its pixel-wise labels provide valuable information when items are partially occluded. The network was trained on images containing between 1 and 20 instances of the known competition items: 461 images with 4809 item instances in total. The images were hand-labeled using the free online service LabelMe, converted from LabelMe polygons to the standard PASCAL format, and split into training, validation, and test sets.
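The LabelMe-to-PASCAL conversion and the dataset split can be sketched as follows. This is a minimal illustration, not the project's conversion script. It assumes each annotation has already been parsed into a list of `(class_name, polygon)` pairs. Each polygon is rasterized with the even-odd rule into a single-channel label image where 0 is background and later polygons overwrite earlier ones. The image IDs are then shuffled into train/validation/test sets. The helper names and the 70/15/15 ratios are hypothetical, because the source does not state the ratios used.

```python
import random

import numpy as np


def polygon_to_mask(points, height, width):
    """Rasterize one polygon (list of (x, y) vertices) into a boolean mask.

    A pixel is inside if its centre passes the even-odd (ray casting) test.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    px, py = xs + 0.5, ys + 0.5  # pixel centres
    inside = np.zeros((height, width), dtype=bool)
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        # Does this edge straddle the horizontal line through each pixel centre?
        crosses = (y1 > py) != (y2 > py)
        # x-coordinate where the edge meets that line (inf/nan for horizontal
        # edges, which never "cross" and are therefore masked out below)
        with np.errstate(divide="ignore", invalid="ignore"):
            x_int = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
        inside ^= crosses & (px < x_int)
    return inside


def labelme_to_pascal_mask(shapes, height, width, class_to_id):
    """Build a PASCAL-style label image from (class_name, polygon) pairs (hypothetical input form)."""
    mask = np.zeros((height, width), dtype=np.uint8)  # 0 = background
    for name, polygon in shapes:
        # Later polygons overwrite earlier ones where they overlap
        mask[polygon_to_mask(polygon, height, width)] = class_to_id[name]
    return mask


def split_dataset(image_ids, train=0.7, val=0.15, seed=0):
    """Shuffle IDs reproducibly and split them; the ratios are assumptions."""
    ids = list(image_ids)
    random.Random(seed).shuffle(ids)
    n_train = int(len(ids) * train)
    n_val = int(len(ids) * val)
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]
```

Drawing polygons in annotation order means that an item labeled on top of another wins at the overlapping pixels. This is one simple way to encode occlusion in a semantic label image.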

At runtime, the classifier assigns a label to each pixel of an RGB image.
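The following sketch illustrates per-pixel labeling. It assumes the network outputs raw class scores as a `(num_classes, H, W)` NumPy array; the actual output layout of the deployed FCN is an assumption here. A softmax over the class axis followed by an argmax gives one label per pixel. The winning probability is also kept as a rough confidence. The function name is hypothetical.

```python
import numpy as np


def label_pixels(logits):
    """Turn (num_classes, H, W) scores into (H, W) labels and per-pixel confidence."""
    # Numerically stable softmax over the class axis
    shifted = logits - logits.max(axis=0, keepdims=True)
    probs = np.exp(shifted)
    probs /= probs.sum(axis=0, keepdims=True)
    labels = probs.argmax(axis=0)    # most probable class index per pixel
    confidence = probs.max(axis=0)   # probability of that class
    return labels, confidence
```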

The following video shows the classification results of both neural networks, FCN and Faster R-CNN. In the FCN results, the white region is the pixel-wise label for the corresponding item. For easier visualization, bounding boxes were drawn for both FCN and Faster R-CNN. Ground-truth boxes are shown in green and predicted boxes in red.
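The bounding boxes drawn for the FCN can be derived from its pixel-wise output. The sketch below uses hypothetical helper names. It takes the tight box around one item's mask and scores that box against a ground-truth box with intersection-over-union (IoU), a common overlap metric. The source does not say whether predictions were scored this way. Note that a semantic label image does not separate two instances of the same item, so one box per class assumes a single instance.

```python
import numpy as np


def mask_to_bbox(mask):
    """Tight inclusive (x_min, y_min, x_max, y_max) box around True pixels, or None if empty."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


def bbox_iou(a, b):
    """Intersection-over-union of two inclusive pixel boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1 + 1) * max(0, iy2 - iy1 + 1)
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)


# Example: box of the pixels labeled as class 3 in an FCN label image
# item_box = mask_to_bbox(labels == 3)
```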

Some items are still misidentified or not identified at all, so the networks need to be trained on a larger dataset and re-tested.

Improve vision robustness

Class filtering, diffuse lighting, and shading were applied to increase item classification accuracy and robustness under varying lighting conditions. During the competition, every team has access to a JSON file that lists which items are in which bin at the start of the task. If the FCN labels pixels as an item that does not exist in the bin, those labels are overwritten with the background label to eliminate false positives. Additionally, diffuse lighting was added to remove shadows and reduce reflections, and shades were added to block ambient light. The figure below compares images taken with and without diffuse lighting.
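The class-filtering step can be sketched as follows. The sketch assumes the task JSON maps each bin name to a list of item names under a `bin_contents` key. That key name, the bin name in the example, and the helper functions are assumptions for illustration only, not the official competition schema. Any pixel labeled as an item not listed for the bin is reset to background.

```python
import json

import numpy as np

BACKGROUND = 0  # assumed background label id


def items_in_bin(task_json, bin_name):
    """Read the item list for one bin (hypothetical 'bin_contents' layout)."""
    return json.loads(task_json)["bin_contents"][bin_name]


def filter_to_bin_contents(labels, bin_items, class_to_id):
    """Overwrite labels of items that are not in the bin with the background label."""
    allowed = [BACKGROUND] + [class_to_id[name] for name in bin_items]
    filtered = labels.copy()  # leave the raw FCN output untouched
    filtered[~np.isin(filtered, allowed)] = BACKGROUND
    return filtered
```

Because the filter only removes labels, it can eliminate false positives for absent items, but it cannot fix confusion between two items that are both in the bin.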

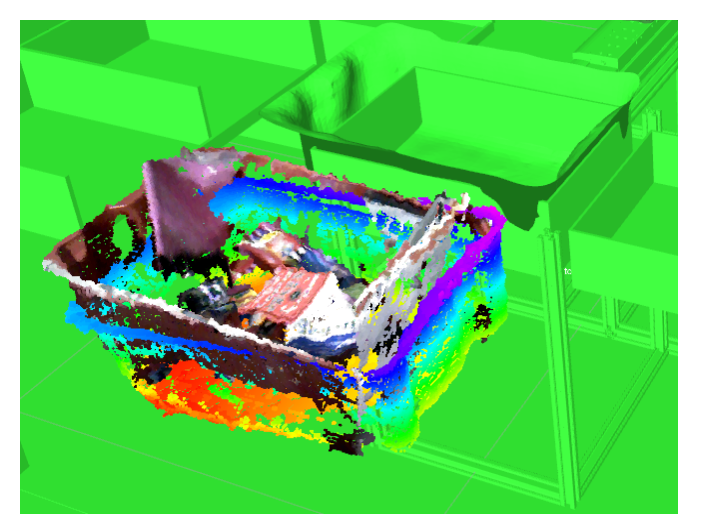

Camera calibration, bin localization and point cloud segmentation

The perception subsystem includes camera calibration, bin localization, and point cloud segmentation. The Asus camera's intrinsics (focal length, etc.) and extrinsics (the transformation between its depth and RGB cameras) were calibrated using ROS camera calibration packages. AprilTags were used to estimate the transformation between the camera and the bins, so that the point cloud could be expressed in the world frame. The point cloud was then segmented to remove everything outside the bin, and Iterative Closest Point (ICP) was used to align a CAD model of the bin with the bin's point cloud. Figure 9 shows the point cloud projected into the RViz scene before alignment.
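The geometric steps above can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the project's ROS code. The camera-to-world transform is taken as a known 4x4 homogeneous matrix, for example one composed from an AprilTag pose. The bin is approximated by an axis-aligned box in the world frame, and the CAD model is assumed to be available as a sample of points. Alignment uses textbook point-to-point ICP with brute-force nearest neighbours and an SVD (Kabsch) fit. All function names are hypothetical.

```python
import numpy as np


def invert_transform(T):
    """Invert a 4x4 rigid transform, e.g. to turn a camera-to-tag pose into tag-to-camera."""
    R, t = T[:3, :3], T[:3, 3]
    inv = np.eye(4)
    inv[:3, :3] = R.T
    inv[:3, 3] = -R.T @ t
    return inv


# Hypothetical composition: T_world_camera = T_world_tag @ invert_transform(T_camera_tag)


def transform_points(points, T):
    """Apply a 4x4 homogeneous transform T to an (N, 3) array of points."""
    return points @ T[:3, :3].T + T[:3, 3]


def crop_to_box(points, lower, upper):
    """Keep only points inside the axis-aligned box [lower, upper] (world frame)."""
    keep = np.all((points >= lower) & (points <= upper), axis=1)
    return points[keep]


def best_fit_transform(src, dst):
    """Least-squares rigid (R, t) mapping src onto corresponding dst points (Kabsch/SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # correct a reflection into a proper rotation
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    return R, dst_c - R @ src_c


def icp(model, scene, iterations=30, tol=1e-8):
    """Point-to-point ICP; returns a 4x4 transform taking model points into the scene frame."""
    T = np.eye(4)
    current = model.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force nearest neighbours (O(N*M) memory); use a KD-tree for real clouds
        d2 = ((current[:, None, :] - scene[None, :, :]) ** 2).sum(axis=2)
        nearest = scene[d2.argmin(axis=1)]
        R, t = best_fit_transform(current, nearest)
        current = current @ R.T + t
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = R, t
        T = step @ T  # accumulate: model -> current estimate
        err = np.sqrt(d2.min(axis=1)).mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return T
```

A real cloud from the Asus sensor would need downsampling or a KD-tree before this brute-force matching is practical. ICP also converges to the correct pose only from a reasonable initial guess, which the AprilTag-based localization can provide.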