The system can be broadly divided into four subsystems:

(1) Autonomous Flight Subsystem

(2) Sensing Subsystem

(3) Signature Detection and Analysis Subsystem

(4) Rescue Package Drop Subsystem

Each of these subsystems is detailed below.

1. Autonomous Flight Subsystem

Two of the key requirements for SAR (Search and Rescue) missions are:

- To be able to carry out precise waypoint navigation in a large, partially unknown environment, within strict time constraints.

- To be able to mount a variety of powerful sensors to detect and capture possible human signatures that could be used to pinpoint the exact location for a rescue operation.

The DJI Matrice 100 quadcopter, shown in Figure 1, has capabilities that satisfy the above requirements, which makes it a strong candidate for aerial search and rescue applications.

Figure 1: DJI Matrice 100

To capture sensor data with sufficient coverage and high resolution, the system needs to follow a systematic search pattern over the area. To achieve this, the system shall sweep the provided area so that coverage is maximized. The pattern is illustrated in Figure 2.

Figure 2: Localized sweep for maximizing coverage
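A minimal sketch of how sweep waypoints could be generated, assuming a rectangular search area, a local metric frame (x across the lanes, y along them, origin at one corner) and a back-and-forth ("lawnmower") pattern. The function name and the `footprint_m` and `overlap` parameters are hypothetical, and the local waypoints would still need converting to GPS coordinates before being sent to the flight controller.

```python
import math


def lawnmower_waypoints(width_m, height_m, footprint_m, overlap=0.2):
    """Waypoints (x, y) in metres for a back-and-forth sweep of a rectangle.

    Assumes a local frame with x across the lanes and y along them, the
    origin at one corner, and a sensor footprint footprint_m wide measured
    across the direction of travel (all hypothetical parameters).
    """
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    spacing = footprint_m * (1 - overlap)           # distance between lane centres
    n_lanes = max(1, math.ceil(width_m / spacing))  # enough lanes to span the width
    waypoints = []
    for i in range(n_lanes):
        # Centre each lane in its strip, clamped so it never leaves the area.
        x = min(i * spacing + spacing / 2, width_m)
        if i % 2 == 0:
            waypoints += [(x, 0.0), (x, height_m)]  # fly up this lane
        else:
            waypoints += [(x, height_m), (x, 0.0)]  # fly back down the next one
    return waypoints
```

Spacing lanes at the footprint width minus some overlap is what keeps adjacent sensor swaths from leaving gaps; the overlap value is a tuning choice, not a figure from this project.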

2. Sensing Subsystem

The sensing subsystem will comprise three logical sensors: an RGB camera, a thermal camera, and a microphone for detecting voice activity. The RGB and thermal imaging capabilities are provided by a single FLIR Duo sensor, shown in Figure 5. Since this sensor lacks a built-in GPS receiver, alternative timestamp-based techniques will be used to generate a precise spatiotemporal overlay for the sensor data, which is required to pinpoint the rescue location with high accuracy. The microphone will additionally be used for more precise signature detection and for breaking ties when there are multiple candidate rescue locations.

Figure 5: FLIR Duo Camera
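One way to realize the timestamp-based overlay is to interpolate the drone's own logged GPS track at each camera frame's timestamp. The sketch below assumes the flight log can be parsed into `(timestamp, lat, lon, alt)` tuples sorted by time and that the offset between the camera clock and the flight-controller clock is known; `interpolate_position`, `geotag_frames` and `clock_offset_s` are hypothetical names. Linear interpolation of latitude and longitude is only adequate when log samples are closely spaced.

```python
from bisect import bisect_left


def interpolate_position(track, t):
    """Linearly interpolate (lat, lon, alt) at time t from a time-sorted track.

    track: list of (timestamp_s, lat_deg, lon_deg, alt_m) tuples, e.g. parsed
    from the drone's flight log (the log format is an assumption).
    """
    times = [p[0] for p in track]
    if not times[0] <= t <= times[-1]:
        raise ValueError("timestamp outside the logged flight interval")
    i = bisect_left(times, t)  # first sample with time >= t
    if times[i] == t:
        return track[i][1:]
    t0, *p0 = track[i - 1]
    t1, *p1 = track[i]
    w = (t - t0) / (t1 - t0)  # fraction of the way from sample i-1 to sample i
    return tuple(a + w * (b - a) for a, b in zip(p0, p1))


def geotag_frames(frame_times, track, clock_offset_s=0.0):
    """Pair each sensor frame timestamp with an interpolated drone position.

    clock_offset_s is an assumed, separately calibrated offset that maps the
    camera clock onto the flight-log clock.
    """
    return [(ft, interpolate_position(track, ft + clock_offset_s)) for ft in frame_times]
```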

Sound can also act as an important differentiating factor when there are multiple visual cues. For our particular scenario, the sound system needs to satisfy a few criteria.

First and foremost, the microphone needs to be a supercardioid microphone (depicted in Figure 6), which picks up sound mainly from the front and strongly rejects sound from the sides and back. This is essential to keep propeller noise to a minimum in the collected sound samples. The next requirement is that the device store digitally recorded sound samples itself, rather than having to be connected to a processing device. This requirement stems from the fact that the microphone might have to be suspended 10-15 feet from the drone, and running long data cables from it to a processing system on the drone would complicate the design. The third requirement is that the whole suspended system weigh no more than a few hundred grams, to stay within the Matrice 100's payload capacity of 1 kg. Lastly, the microphone had to be inexpensive, to leave room in the budget for other components.

After considerable research, we chose a shotgun microphone with a built-in voice recorder that stores the sound as .wav files on an SD card, which can be plugged into a laptop to extract the samples for processing. Figure 6 shows the microphone we purchased.

Figure 6: Supercardioid Microphone
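Because the recorder writes .wav files, the samples can be read with Python's standard `wave` module. The sketch below assumes 16-bit PCM output (the recorder's actual sample format is not stated here) and computes a per-frame RMS amplitude, which could flag loud segments, such as a shout, for closer analysis. The function names are hypothetical.

```python
import array
import math
import sys
import wave


def read_wav_mono16(path_or_file):
    """Read a 16-bit PCM .wav file and return (sample_rate, samples).

    Assumes 16-bit samples; multi-channel audio is reduced to its first channel.
    """
    with wave.open(path_or_file, "rb") as wf:
        if wf.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        n_channels = wf.getnchannels()
        rate = wf.getframerate()
        raw = wf.readframes(wf.getnframes())
    samples = array.array("h")
    samples.frombytes(raw)
    if sys.byteorder == "big":  # WAV sample data is little-endian
        samples.byteswap()
    return rate, samples[::n_channels]  # channel 0 of each interleaved frame


def frame_rms(samples, frame_len):
    """Root-mean-square amplitude of consecutive, non-overlapping frames."""
    return [
        math.sqrt(sum(s * s for s in samples[i:i + frame_len]) / frame_len)
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]
```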

3. Signature Detection and Analysis Subsystem

The ability to detect human signatures accurately is one of the key pieces of a search and rescue mission. Besides influencing design choices in other subsystems, such as sensor selection, the kinds of signatures used for human detection directly determine the complexity of the missions the system can undertake. Some of the key signatures the system will rely on are listed below:

- RGB imagery: RGB imagery will be used to identify color patterns associated with human presence. For example, a brightly colored tent against green foliage or brown terrain is a strong indication of human presence in the region.

- Thermal imagery: Because objects emit thermal radiation according to their temperature, warm bodies stand out against a cooler background. Thermal imagery can therefore detect humans or objects associated with humans (for example, a hot abandoned vehicle or wood burned for a fire) with high precision.

- Sound: Sound is another signature that can be associated with humans in a distinct way. Much like a thermal signature, human voices fall within a characteristic frequency range. This, coupled with other cues (for example, associating increased amplitude with a cry for help), can be a powerful tool for guiding a search. The main problem with sound, which still needs to be addressed, is filtering out surrounding noise to extract high-quality data.
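As a sketch of how the voice frequency range could be exploited, the code below uses the Goertzel algorithm to measure spectral power at a set of probe frequencies and reports the fraction of that power falling inside an assumed voice band of 300 to 3400 Hz (the classic telephone speech band). The band limits, the 100 Hz probe spacing and the function names are illustrative assumptions, not parameters from this project.

```python
import math


def goertzel_power(samples, sample_rate, freq):
    """Power of `samples` at the DFT bin nearest `freq`, via the Goertzel algorithm."""
    n = len(samples)
    k = round(n * freq / sample_rate)  # nearest DFT bin index
    coeff = 2 * math.cos(2 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    # Squared magnitude of the bin, computed from the last two filter states.
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


def band_energy_ratio(samples, sample_rate, lo_hz=300, hi_hz=3400, step_hz=100):
    """Fraction of probed spectral power lying in [lo_hz, hi_hz] (assumed voice band)."""
    probes = list(range(step_hz, int(sample_rate / 2), step_hz))  # stay below Nyquist
    powers = {f: goertzel_power(samples, sample_rate, f) for f in probes}
    total = sum(powers.values())
    in_band = sum(p for f, p in powers.items() if lo_hz <= f <= hi_hz)
    return in_band / total if total > 0 else 0.0
```

A high ratio over a short clip suggests voice-band content, although propeller noise would also need to be characterized before such a score could be trusted.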

The system shall process the sensor data to generate a sanitized version of it. The sanitized data shall then be discretized into a set of candidates keyed by their spatial and temporal coordinates. The system will then use machine learning algorithms to classify the candidates by the presence of signatures and generate a filtered list ranked by a score indicating the probability that each candidate is a rescue location. The top element in the ranked list shall be deemed the most likely rescue location, to which the drone will be sent.
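The candidate ranking step can be sketched as follows. Each `Candidate` (a hypothetical type) carries its spatial and temporal key plus per-signature scores. As an assumption, a weighted average of those scores stands in for the trained classifier's probability; `weights` and `min_score` are hypothetical tuning parameters.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    """A discretized chunk of sensor data keyed by where and when it was captured."""
    lat: float
    lon: float
    timestamp: float
    # Per-signature classifier outputs in [0, 1], e.g. {"rgb": 0.7, "thermal": 0.9}.
    scores: dict = field(default_factory=dict)


def rank_candidates(candidates, weights, min_score=0.5):
    """Return (score, candidate) pairs scoring at least min_score, best first.

    The weighted average is a stand-in for the classifier's probability;
    a signature missing from a candidate contributes a score of 0.
    """
    total_w = sum(weights.values())
    ranked = []
    for c in candidates:
        score = sum(w * c.scores.get(sig, 0.0) for sig, w in weights.items()) / total_w
        if score >= min_score:
            ranked.append((score, c))
    ranked.sort(key=lambda pair: pair[0], reverse=True)
    return ranked
```

The first entry of the returned list plays the role of the "top element" above, that is, the location the drone would be sent to.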

With the objective of wilderness search and rescue in mind, we chose to target the following two types of human signatures:

1. Humans themselves

2. Signatures related to human activity:

(a) Bright objects: bright clothing, tents, or mattresses commonly used while hiking;

(b) Hot objects: a hot stove, fire, hot water, etc., which might indicate human activity.

Human detection:

Detecting humans in images is a challenging task owing to their variable appearance and wide range of poses. Our motivation for developing a human detection algorithm is that it can be used in many scenarios; in particular, it can be applied to autonomous search and rescue operations from aerial platforms, which can reduce equipment cost and the risk of injury to people.

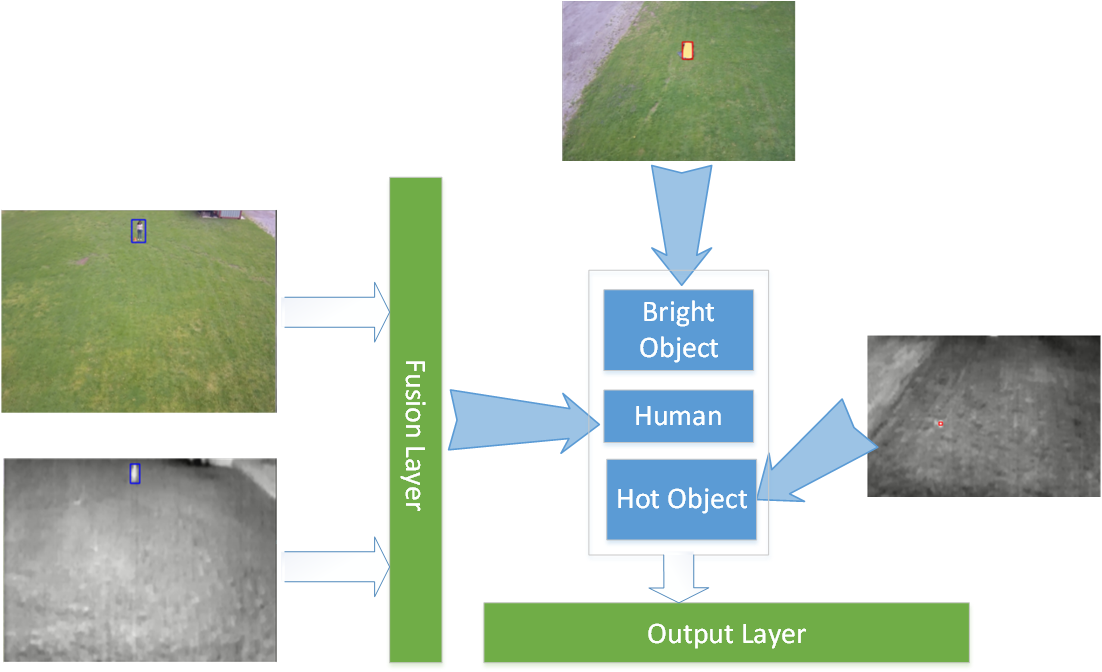

In this project, we first applied edge detection to the images to capture potential human candidates as regions of interest (ROIs). We then extracted Histogram of Oriented Gradients (HOG) features from each ROI and used a linear support vector machine (SVM) to classify whether it contains a human. We applied this model to both the RGB and the thermal images, and fused the two results to obtain better human detection.
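A stripped-down sketch of the HOG plus linear SVM scoring step on a grayscale ROI, written in plain Python for clarity. It computes unsigned gradient-orientation histograms per cell, normalizes the concatenated vector, and evaluates a linear decision function `w . x + b`. Standard HOG additionally normalizes overlapping blocks of cells, which is omitted here; the weights would come from a trained SVM; and the averaging in `fused_decision` is only one hypothetical way to combine the RGB and thermal scores, since the fusion rule is not specified above. All function names are hypothetical.

```python
import math


def cell_histograms(img, cell=8, bins=9):
    """Unsigned-gradient orientation histograms for each cell x cell patch.

    img is a 2-D list of grayscale intensities (rows of equal length).
    Simplified HOG: central differences, hard binning, no block overlap.
    """
    h, w = len(img), len(img[0])
    feats = []
    for cy in range(0, h - cell + 1, cell):
        for cx in range(0, w - cell + 1, cell):
            hist = [0.0] * bins
            for y in range(cy, cy + cell):
                for x in range(cx, cx + cell):
                    # Central differences, clamped at the image border.
                    gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
                    gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
                    mag = math.hypot(gx, gy)
                    angle = math.degrees(math.atan2(gy, gx)) % 180.0  # unsigned
                    hist[int(angle // (180.0 / bins)) % bins] += mag
            feats.extend(hist)
    return feats


def l2_normalize(v, eps=1e-6):
    """Scale a feature vector to (almost) unit length; eps avoids division by zero."""
    norm = math.sqrt(sum(x * x for x in v) + eps * eps)
    return [x / norm for x in v]


def svm_decision(features, weights, bias):
    """Linear SVM score w.x + b; positive means 'human' for a trained model."""
    return sum(f * w for f, w in zip(features, weights)) + bias


def fused_decision(rgb_score, thermal_score, w_rgb=0.5):
    """Hypothetical late fusion: weighted average of the two SVM scores."""
    return w_rgb * rgb_score + (1 - w_rgb) * thermal_score
```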

Detecting other signatures related to human activity:

- We implemented bright object detection by converting the images to the HSV color space and thresholding on saturation and value to obtain bright pixels, followed by morphological operations to extract bright objects.

- For detecting hot objects, we used adaptive thresholding on thermal images.
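Both steps can be sketched in plain Python on small images stored as nested lists. `bright_mask` thresholds HSV saturation and value (the thresholds are assumed, not tuned values from this project), `open_mask` applies a 3x3 morphological opening to remove isolated specks, and `adaptive_threshold` marks a thermal pixel as hot when it exceeds the mean of its local window by more than `c`, one common form of mean-based adaptive thresholding. All names and parameter values are hypothetical.

```python
import colorsys


def bright_mask(rgb, s_min=0.5, v_min=0.6):
    """1 where HSV saturation and value both reach their thresholds, else 0.

    rgb: 2-D list of (r, g, b) tuples with channels in 0..255.
    """
    mask = []
    for row in rgb:
        out = []
        for r, g, b in row:
            _, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            out.append(1 if s >= s_min and v >= v_min else 0)
        mask.append(out)
    return mask


def _morph(mask, reduce_fn):
    """Apply reduce_fn (min = erosion, max = dilation) over each 3x3 neighbourhood.

    Pixels outside the image are treated as 0.
    """
    h, w = len(mask), len(mask[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            nbhd = [mask[y + dy][x + dx] if 0 <= y + dy < h and 0 <= x + dx < w else 0
                    for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = reduce_fn(nbhd)
    return out


def open_mask(mask):
    """Morphological opening: erosion then dilation removes specks smaller than 3x3."""
    return _morph(_morph(mask, min), max)


def adaptive_threshold(img, block=5, c=2.0):
    """1 where a pixel exceeds the mean of its block x block window by more than c.

    Windows are truncated at the image border.
    """
    h, w = len(img), len(img[0])
    r = block // 2
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[yy][xx]
                    for yy in range(max(0, y - r), min(h, y + r + 1))
                    for xx in range(max(0, x - r), min(w, x + r + 1))]
            out[y][x] = 1 if img[y][x] > sum(vals) / len(vals) + c else 0
    return out
```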

The figure below gives an overview of the signature detection and analysis subsystem. The modeling and analysis processes are described in detail in the following part.

4. Rescue Package Drop Subsystem

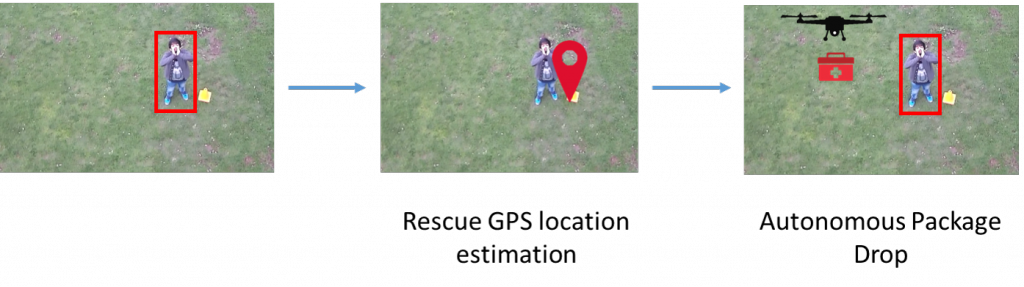

The Rescue Package Drop Subsystem, as the name suggests, consists of the algorithm that estimates the GPS location of the identified signature and the payload responsible for dropping a rescue package at that location. The payload will be a single unit containing the rescue package and a controller that actuates the dropping mechanism.

As the graphic above shows, the subsystem has three main components:

- Rescue GPS location estimation algorithm

- Package Drop Mechanism

- System to enable autonomous package drop
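For the GPS location estimation component, a minimal sketch, assuming a straight-down (nadir) camera, flat ground directly below, a known field of view, and the drone's GPS position, altitude above ground and heading at capture time. It maps a detection's pixel to a metric ground offset, rotates that offset into east/north, and converts it to latitude and longitude with a small-offset approximation. The function and parameter names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS-84 equatorial radius


def pixel_to_gps(px, py, img_w, img_h, hfov_deg, vfov_deg,
                 drone_lat, drone_lon, alt_m, heading_deg):
    """Estimate the GPS position of the ground point seen at pixel (px, py).

    Assumes a nadir camera, flat ground alt_m below the drone, and the image
    top pointing along heading_deg (degrees clockwise from north).
    """
    # Size of the image footprint on the ground, in metres.
    ground_w = 2 * alt_m * math.tan(math.radians(hfov_deg) / 2)
    ground_h = 2 * alt_m * math.tan(math.radians(vfov_deg) / 2)
    # Offset from the image centre: +dx towards image right, +dy towards image top.
    dx = (px - img_w / 2) / img_w * ground_w
    dy = (img_h / 2 - py) / img_h * ground_h
    # Rotate the camera-frame offset into east/north using the heading.
    hdg = math.radians(heading_deg)
    east = dx * math.cos(hdg) + dy * math.sin(hdg)
    north = -dx * math.sin(hdg) + dy * math.cos(hdg)
    # Small-offset (equirectangular) conversion from metres to degrees.
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(drone_lat))))
    return drone_lat + dlat, drone_lon + dlon
```

The flat-ground and nadir assumptions matter most in practice: any camera tilt or terrain relief shifts the estimate, so a gimbal attitude reading would be needed for a more faithful projection.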