Data Capture

Data is a crucial part of our system because it brings “realism” to our behavior model. This subsystem captures the video data from which we extract realistic behavior for our models, by placing cameras at a traffic-light intersection. We collected data both in simulation, using the CARLA simulator, and in the real world, where we mounted two cameras at diagonally opposite corners of an intersection and recorded video from both.

CARLA

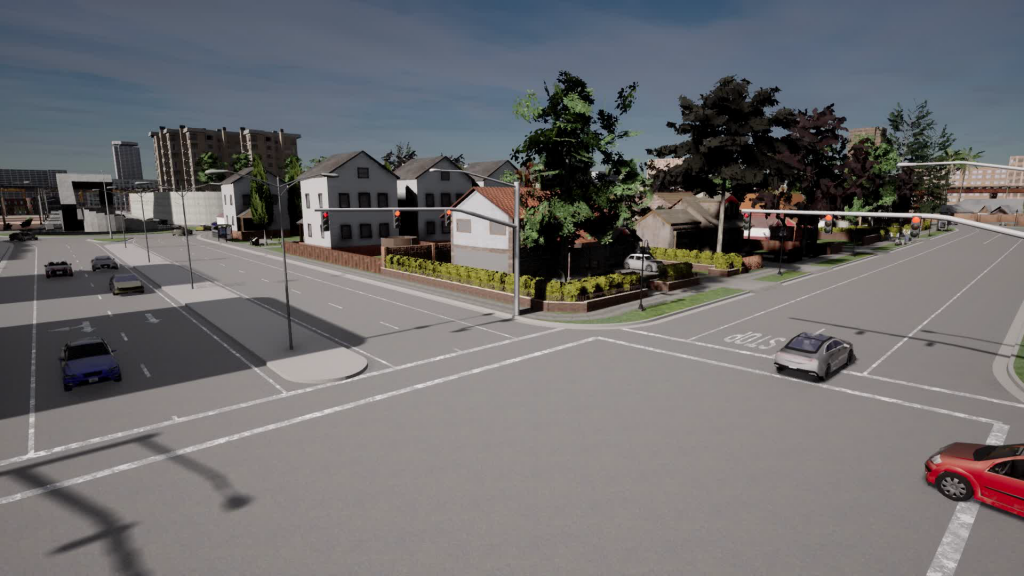

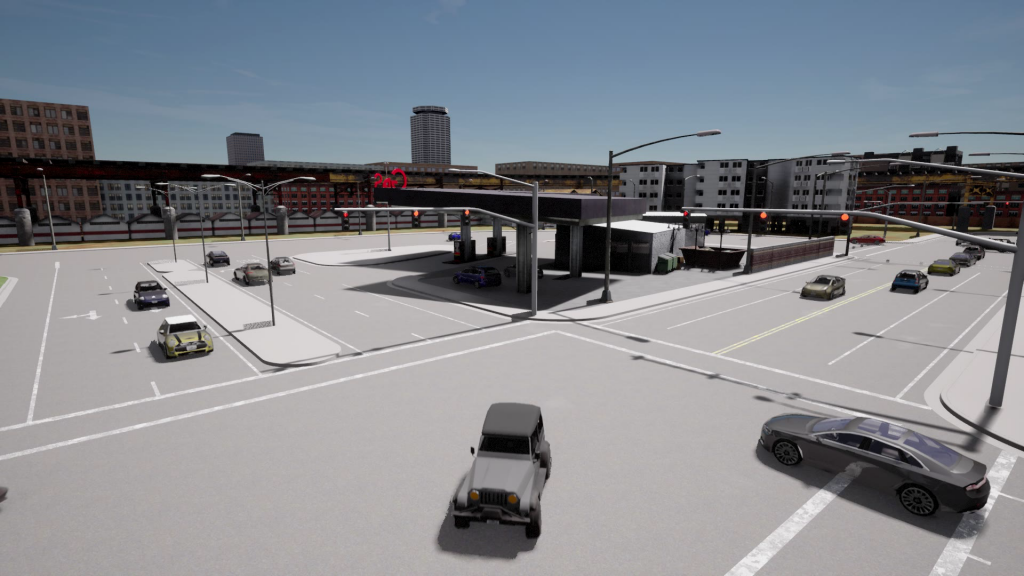

Using CARLA for data capture has two main benefits. First, it helped us identify the best camera mounting points for a good video stream before deploying any hardware. Second, it provides ground-truth information about the simulator's internal state, which helped us prototype and test the downstream subsystems. In addition, the simulator lets us vary the traffic camera's location, the weather, and the vehicle density, which helped us train a detection model that is robust to these variations.
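The variations listed above can be organized as a parameter sweep, with one recording per combination. The sketch below is a hypothetical illustration, not our capture script: `CaptureConfig` and `build_sweep` are names introduced here, and the camera heights, weather labels, and vehicle counts are placeholder values. The weather labels are chosen to match preset names in CARLA's Python API (such as `carla.WeatherParameters.ClearNoon`), which could be applied with `world.set_weather(...)` before each recording.

```python
from dataclasses import dataclass
from itertools import product
from typing import Iterable, List


@dataclass(frozen=True)
class CaptureConfig:
    """One hypothetical CARLA recording setup."""
    camera_height_m: float  # height of the traffic camera above the road
    weather: str            # label matching a CARLA weather preset name
    num_vehicles: int       # number of vehicles spawned (traffic density)


def build_sweep(
    heights: Iterable[float],
    weathers: Iterable[str],
    densities: Iterable[int],
) -> List[CaptureConfig]:
    """Return every combination of camera height, weather, and density."""
    return [
        CaptureConfig(h, w, n)
        for h, w, n in product(heights, weathers, densities)
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only.
    sweep = build_sweep(
        heights=[4.0, 6.0],
        weathers=["ClearNoon", "HardRainNoon", "ClearSunset"],
        densities=[20, 50, 100],
    )
    print(len(sweep))  # 2 * 3 * 3 = 18 configurations
    print(sweep[0])
```

Enumerating the grid up front makes it easy to hold out some combinations (for example, one weather condition) as test cases rather than training data.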

We mount two cameras on opposite sides of the intersection to obtain a 100% view of it.

Real World

Similar to the CARLA intersection, we capture video from opposite sides of a real-world intersection. We used a GoPro for data capture because it captures a wide view of the intersection at a high frame rate, preserving high-quality information for the Data Processing subsystem. Prototyping in CARLA showed us that mounting the camera higher reduces occlusion between vehicles, so we used tripod stands to raise the camera and set its height. The figure below shows our camera system deployed at the Fifth and Craig intersection in Pittsburgh, PA.

Data Capture Hardware: GoPro Hero 8

Camera system at intersection (marked in yellow)
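The observation that a higher camera reduces occlusion can be made concrete with simple geometry. Assume a flat road, a camera at height H, and an occluding vehicle of height h (with h < H) whose top edge is at horizontal distance d from the camera. The sight line grazing the vehicle's top reaches the ground at H*d / (H - h), so the strip of road hidden behind the vehicle has length s = h*d / (H - h), which shrinks as H grows. The function below is a minimal sketch of that simplified 2D model; its name and the example heights and distances are illustrative assumptions, not measurements from our deployment.

```python
def occluded_ground_length(camera_height_m: float,
                           vehicle_height_m: float,
                           distance_m: float) -> float:
    """Length of road hidden behind a vehicle, seen from a raised camera.

    Simplified 2D model with a flat road: the camera sits at
    camera_height_m, and the occluding vehicle's top edge is distance_m
    away horizontally. The sight line grazing that edge meets the ground
    at H*d/(H-h), so the hidden strip behind the vehicle is h*d/(H-h).
    """
    if camera_height_m <= vehicle_height_m:
        # The sight line never comes back down to the road.
        return float("inf")
    return vehicle_height_m * distance_m / (camera_height_m - vehicle_height_m)


# Illustrative values: a 1.5 m tall car, 10 m from the camera.
for height in (2.0, 3.0, 6.0):
    print(height, round(occluded_ground_length(height, 1.5, 10.0), 2))
```

Under these assumed numbers, raising the camera from 2 m to 6 m shrinks the hidden strip behind the car from 30 m to about 3.3 m.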

Performance Analysis

We had the following two evaluation criteria for this subsystem:

- Percentage of intersection captured in the recorded video – M.P.1

- Frames per second (fps) of captured video – M.P.2

Mounting two cameras on opposite sides of the intersection gives us a view of 100% of the intersection, fulfilling M.P.1.
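One way to check a coverage claim like M.P.1 is to sample the intersection on a grid and test whether each point falls inside at least one camera's field of view. The sketch below is hypothetical: it assumes a square 20 m x 20 m intersection, cameras at two diagonally opposite corners aimed at the center, a 90 degree horizontal field of view, and a 25 m maximum useful range, and the function names are introduced here for illustration. It measures field-of-view coverage only and ignores occlusion by vehicles.

```python
import math
from typing import List, Tuple

# (x, y, heading_deg, fov_deg, max_range_m); all values hypothetical.
Camera = Tuple[float, float, float, float, float]


def sees(camera: Camera, px: float, py: float) -> bool:
    """True if point (px, py) lies inside the camera's horizontal FOV wedge."""
    cx, cy, heading_deg, fov_deg, max_range_m = camera
    dx, dy = px - cx, py - cy
    dist = math.hypot(dx, dy)
    if dist > max_range_m:
        return False
    if dist == 0.0:
        return True
    bearing = math.degrees(math.atan2(dy, dx))
    # Signed angle between bearing and heading, wrapped to [-180, 180).
    offset = (bearing - heading_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0


def coverage_fraction(width_m: float, length_m: float,
                      cameras: List[Camera], step_m: float = 0.5) -> float:
    """Fraction of grid-cell centers seen by at least one camera."""
    nx, ny = int(width_m / step_m), int(length_m / step_m)
    seen = 0
    for i in range(nx):
        for j in range(ny):
            x, y = (i + 0.5) * step_m, (j + 0.5) * step_m
            if any(sees(cam, x, y) for cam in cameras):
                seen += 1
    return seen / (nx * ny)


# Cameras at opposite corners of the hypothetical intersection,
# each aimed at the center.
cam_a: Camera = (0.0, 0.0, 45.0, 90.0, 25.0)
cam_b: Camera = (20.0, 20.0, 225.0, 90.0, 25.0)
print(coverage_fraction(20.0, 20.0, [cam_a]))         # below 1.0
print(coverage_fraction(20.0, 20.0, [cam_a, cam_b]))  # 1.0
```

With these assumed parameters, a single corner camera misses the far corner of the intersection (about 28 m away), while the second camera on the opposite corner fills that gap.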

Both the CARLA camera and the GoPro let us set high frame rates. We captured CARLA data at 30 fps and GoPro data at 60 fps, which fulfills M.P.2.
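The nominal frame rate stored in a video file can differ from the rate actually achieved, for example when frames are dropped. A simple check for M.P.2 is to estimate the effective frame rate from per-frame timestamps and compare it with the target. The sketch below is hypothetical: `effective_fps`, `meets_fps_target`, the 1% tolerance, and the synthetic timestamps are illustrative, not part of our pipeline. With OpenCV installed, the nominal rate of a recorded file can also be read with `cv2.VideoCapture(path).get(cv2.CAP_PROP_FPS)`.

```python
from typing import Sequence


def effective_fps(timestamps_s: Sequence[float]) -> float:
    """Average frame rate implied by a sorted list of frame timestamps."""
    if len(timestamps_s) < 2:
        raise ValueError("need at least two timestamps")
    duration = timestamps_s[-1] - timestamps_s[0]
    if duration <= 0:
        raise ValueError("timestamps must increase")
    # N frames span N - 1 inter-frame intervals.
    return (len(timestamps_s) - 1) / duration


def meets_fps_target(timestamps_s: Sequence[float], target_fps: float,
                     tolerance: float = 0.01) -> bool:
    """True if the effective rate is at least target_fps, minus a small relative tolerance."""
    return effective_fps(timestamps_s) >= target_fps * (1.0 - tolerance)


# Synthetic example: one second of ideal 60 fps capture, then the same
# clip with every other frame dropped.
ideal = [i / 60.0 for i in range(61)]
dropped = ideal[::2]
print(round(effective_fps(ideal), 2), meets_fps_target(ideal, 60.0))      # 60.0 True
print(round(effective_fps(dropped), 2), meets_fps_target(dropped, 60.0))  # 30.0 False
```

Averaging over the whole clip keeps the check simple; a stricter variant could also flag individual gaps between consecutive timestamps that exceed one frame period.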

Strengths and Weaknesses

Strengths

- The traffic-camera-based capture setup is a convenient way to collect large volumes of data from CARLA.

- It supports varying traffic density, weather, and behavioral parameters, producing diverse training and test cases.

Weaknesses

- The real-world capture system requires significant setup before recording. An operator must choose good mounting positions that avoid occlusion by traffic lights and other traffic signs, and the pavement must have enough space to place the tripods.