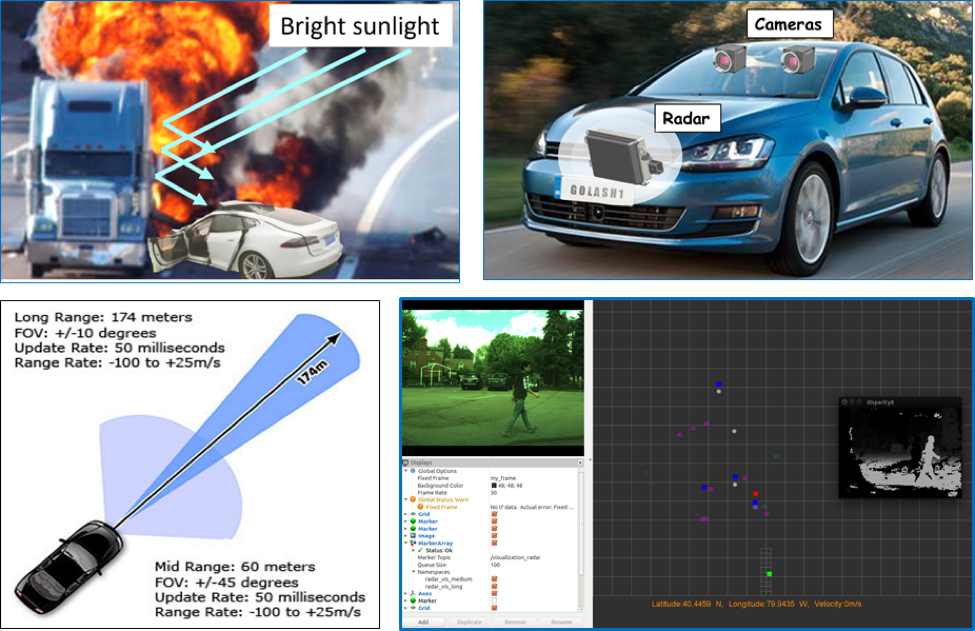

Fig. 1. Use case scenario images

Messla Motors Inc. recently lost one of their autonomous cars even though it was equipped with multiple redundant cameras and LIDAR sensors. The car crashed into a white truck when sunlight reflected off the truck’s side compromised the car’s sensor system (top-left image in Fig. 1). When Messla Motors’ stock fell sharply, the CEO of the company, Mr. Dusk, was faced with scrapping their expensive sensor rack project and starting over. Moreover, recent consumer reports had shown that bulkiness and difficulty of maintenance were major downsides of the Messla Motors sensor array. The Messla Motors crash scared many small entrepreneurs and aspiring researchers away from venturing into the autonomous vehicle development field. They felt that if a big company like Messla Motors had failed to design a foolproof perception system, then there was no way for them to do so on their limited budgets. Consequently, interest and progress in autonomous vehicle research and development started to fall. What could be done about this?

Enter the SeeAll system from Team Aware! Our perception system uses a radar to simultaneously perceive long- and short-range objects (bottom-left image in Fig. 1). By combining radar and stereo vision technology, our unified system performs in cases where existing vision-based systems might fail, such as in bright sunlight. Our radar system detects all metal objects (vehicles, lamp posts, lane dividers, etc.) up to 120 meters away and is unaffected by ambient lighting conditions. Additionally, our radar unit is about 8 times cheaper than a LIDAR unit with similar range and rate capabilities. Under favorable conditions, the stereo vision system accurately identifies vehicles, pedestrians, and obstacles up to 30 meters away. The combined system can thus detect and classify objects using vision and then track their positions and velocities using radar. This sensor fusion increases our object detection accuracy and overall robustness. Since our perception system uses just three primary sensors, it is significantly cheaper than systems like Mr. Dusk’s. Our system is designed to be standalone, and the form factor of our design makes for an adaptable perception system that could be used by any manufacturer of autonomous vehicles.
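The division of labor described above (vision for classification, radar for position and velocity) can be illustrated with a minimal sketch. This is not the SeeAll implementation: the class names, the `fuse` function, the 2 m association gate, and the nearest-neighbor matching rule are all assumptions made for illustration. Only the 30 m and 120 m ranges come from the text.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional

VISION_MAX_RANGE_M = 30.0   # stereo vision range quoted in the text
RADAR_MAX_RANGE_M = 120.0   # radar range quoted in the text
GATE_M = 2.0                # hypothetical association gate, not from the source


@dataclass
class VisionDetection:      # hypothetical type: a classified object from stereo vision
    label: str
    x: float                # forward distance in meters
    y: float                # lateral offset in meters


@dataclass
class RadarTrack:           # hypothetical type: a radar return with velocity
    x: float
    y: float
    vx: float               # meters per second
    vy: float


@dataclass
class FusedObject:
    label: str
    x: float
    y: float
    vx: Optional[float]     # None when only vision saw the object
    vy: Optional[float]


def fuse(detections: List[VisionDetection],
         tracks: List[RadarTrack]) -> List[FusedObject]:
    """Label each radar track with the nearest in-gate vision detection."""
    fused: List[FusedObject] = []
    matched = set()
    for t in tracks:
        if hypot(t.x, t.y) > RADAR_MAX_RANGE_M:
            continue  # beyond the radar range assumed here
        best_i, best_dist = None, GATE_M
        for i, d in enumerate(detections):
            if hypot(d.x, d.y) > VISION_MAX_RANGE_M:
                continue  # vision is not trusted past its range
            dist = hypot(d.x - t.x, d.y - t.y)
            if dist <= best_dist:
                best_i, best_dist = i, dist
        if best_i is None:
            label = "unknown"  # radar-only object, e.g. a distant vehicle
        else:
            label = detections[best_i].label
            matched.add(best_i)
        # Position and velocity are taken from the radar track.
        fused.append(FusedObject(label, t.x, t.y, t.vx, t.vy))
    # Keep vision-only objects (for example non-metal ones) without velocity.
    for i, d in enumerate(detections):
        if i not in matched and hypot(d.x, d.y) <= VISION_MAX_RANGE_M:
            fused.append(FusedObject(d.label, d.x, d.y, None, None))
    return fused


detections = [VisionDetection("car", 20.0, 1.0),
              VisionDetection("pedestrian", 10.0, -3.0)]
tracks = [RadarTrack(20.5, 1.2, -5.0, 0.0),
          RadarTrack(80.0, 0.0, 3.0, 0.0)]
result = fuse(detections, tracks)
```

In this example the nearby car receives its label from vision and its velocity from radar, the return at 80 m stays "unknown" because it lies beyond the stereo range, and the pedestrian is kept as a vision-only object. A real system would likely use a tracking filter and a more careful association step than this nearest-neighbor gate.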

Mr. Dusk proceeded to scrap his old perception system and try out the SeeAll system from Team Aware instead. He easily installed the sensors into his test vehicle (as depicted in the top-right image in Fig. 1) within minutes, and then connected the computer system to the car’s display and control systems. He then eagerly entered the car to see our system perform! As the car drove around the city, the SeeAll system accurately identified and located objects of interest. A live video feed and a grid showing object types, locations, and velocities were displayed side by side on the infotainment screen (as shown in the bottom-right image in Fig. 1). The car’s control system, designed by Messla Motors, easily read the relevant parameters from our perception system and maneuvered the car accordingly. During testing, it started to rain. This was not a problem for our system, because the cameras were installed within the car’s cabin and the radar is weatherproof. Later, the sun came out and shone brightly into the cameras. This too was not an issue for the SeeAll system, since the radar was unaffected. Just as the car turned back towards the testing facility, a crazy pedestrian dashed across the road in front of the car. Thanks to our real-time performance and our dual modes of perception, the pedestrian was detected and the brakes were applied in time. Mr. Dusk was completely impressed.

Following Mr. Dusk’s example, smaller entrepreneurs and researchers were emboldened to use the SeeAll sensor system for their projects and autonomous vehicles. Some chose to completely replace their existing system with the SeeAll, while others chose to augment their existing system by integrating our system into theirs. The stereo cameras and the radar work hand in hand to create a sensor system that is full-range and real-time. By using stereo vision for object detection and classification and then radar for velocity and position monitoring, we avoid using expensive components such as LIDARs. This means that our system is low-cost and can therefore be used with minimal risk by even small-scale research and development labs.