This subsystem includes the two 3.2 MP color CCD Point Grey Grasshopper 3 cameras used for our stereo-vision setup, together with their mounting solution. The mount consists of the 8020 aluminum beam onto which the cameras are mounted, the 3D-printed camera housings, and the modified sun-visor mounts with which the rack is installed in the car.

We consider the Arduino module to be part of this subsystem as well, because it includes an in-line 12 VDC voltage regulator that regulates the power to the cameras. In addition to voltage regulation, the module does two things: (1) it hardware-triggers the cameras, and (2) it communicates GPS data to the computer. The Arduino Micro reads GPS data from the Adafruit Ultimate GPS sensor module at a rate of 5 Hz and sends it over a serial port to the computer for processing. The Adafruit GPS sensor has a high sensitivity of -165 dBm and supports 66 channels. It can update at up to 10 Hz while consuming very little power, which makes it well suited to our project.
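As an illustration of the computer-side processing, the sketch below parses a single GPS line into latitude, longitude, and satellite count. It assumes the Arduino forwards the sensor's standard NMEA `GGA` sentences unchanged, which the text above does not state; the function names `nmea_checksum_ok` and `parse_gga` are hypothetical helpers, not part of our code base.

```python
from functools import reduce


def nmea_checksum_ok(sentence: str) -> bool:
    """Check the XOR checksum of an NMEA sentence of the form '$BODY*HH'."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return False
    body, _, checksum = sentence[1:].partition("*")
    # The checksum is the XOR of every character between '$' and '*'.
    calc = reduce(lambda acc, ch: acc ^ ord(ch), body, 0)
    try:
        return calc == int(checksum[:2], 16)
    except ValueError:
        return False


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA (d)ddmm.mmmm plus a hemisphere letter to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence: str):
    """Return (lat, lon, num_satellites), or None if the line is invalid or has no fix."""
    if not nmea_checksum_ok(sentence):
        return None
    fields = sentence.strip()[1:].split("*")[0].split(",")
    if not fields[0].endswith("GGA") or len(fields) < 8:
        return None
    if fields[6] in ("", "0"):  # fix-quality field: 0 or empty means no fix
        return None
    lat = _to_degrees(fields[2], fields[3])
    lon = _to_degrees(fields[4], fields[5])
    return lat, lon, int(fields[7])
```

In practice each line would come from the serial port (for example through a serial library such as pyserial) at the 5 Hz rate mentioned above, and lines that fail the checksum would simply be skipped.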

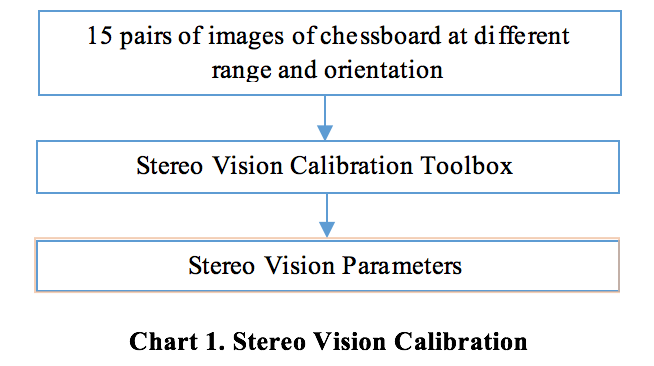

Our stereo system is designed with a long baseline (about 80 cm) to keep the depth calculation accurate at relatively long distances (around 60 m). We also use an advanced calibration method to compute the extrinsic and intrinsic parameters of the cameras. Our system currently achieves around 88% accuracy. The following two sections give more detail.

Stereo Vision Calibration

The purpose of this subsystem is to compute the intrinsic and extrinsic parameters of the two cameras, including the relative rotation and translation between them. The flow chart below shows how the stereo vision calibration algorithm works. The Stereo Vision toolbox from MATLAB is used to compute these parameters. The toolbox takes several image pairs of a chessboard as input and computes an optimized estimate of the parameters.


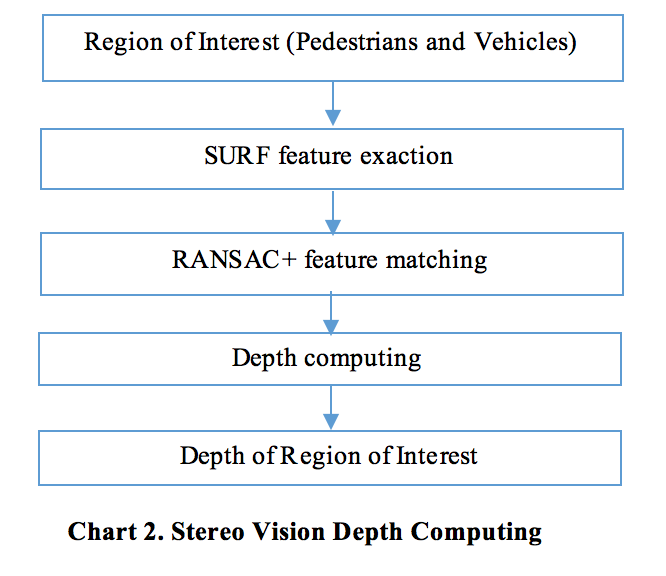
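To make the benefit of the long baseline concrete, here is a minimal sketch of the standard rectified-stereo relations: depth Z = f·B/d, and the first-order depth error Z²/(f·B) per pixel of disparity error. The focal length of 2000 px used in the example is a made-up value for illustration only, not a calibrated parameter of our cameras.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) of a point in a rectified stereo pair: Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(focal_px: float, baseline_m: float, depth_m: float,
                      disparity_err_px: float = 1.0) -> float:
    """First-order depth error (m) for a given disparity error: dZ = Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    FOCAL_PX = 2000.0  # hypothetical focal length in pixels (assumption)
    for baseline in (0.2, 0.8):
        err = depth_uncertainty(FOCAL_PX, baseline, depth_m=60.0)
        print(f"baseline {baseline:.1f} m: about {err:.2f} m of depth error per pixel at 60 m")
```

Under these assumptions, quadrupling the baseline from 20 cm to 80 cm cuts the depth error at 60 m by a factor of four, because the error is inversely proportional to the baseline.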

Stereo Vision Depth Computing

The stereo vision system takes as input the output of the object detection algorithm and the two images captured by the stereo cameras. Using the SURF feature detector, the system detects high-quality, distinctive features in both images. We then combine RANSAC with a feature matching algorithm to keep only the correct matching pairs of features. Finally, the subsystem uses triangulation to compute the depth of the object.
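The last steps of this pipeline can be sketched as follows. This is a simplified illustration, not our implementation: it assumes the image pair is already rectified, takes the matched feature coordinates as given (SURF detection and matching are not shown), and replaces the RANSAC step with a much simpler row-consistency check. The function `object_depth` and its parameters are hypothetical names.

```python
from statistics import median


def object_depth(matches, box, focal_px, baseline_m, max_row_diff=2.0):
    """Estimate the depth (m) of the object inside `box` from matched features.

    matches: list of ((xl, yl), (xr, yr)) pixel coordinates of the same feature
             in the rectified left and right images.
    box:     (x_min, y_min, x_max, y_max) in the left image, from the object detector.
    """
    x_min, y_min, x_max, y_max = box
    depths = []
    for (xl, yl), (xr, yr) in matches:
        # Keep only features that lie on the detected object.
        if not (x_min <= xl <= x_max and y_min <= yl <= y_max):
            continue
        # Stand-in for RANSAC: in a rectified pair, true matches share a row.
        if abs(yl - yr) > max_row_diff:
            continue
        disparity = xl - xr
        if disparity <= 0:  # a point in front of the cameras has positive disparity
            continue
        # Triangulation for a rectified pair: Z = f * B / d.
        depths.append(focal_px * baseline_m / disparity)
    # The median is robust to the few bad matches that survive the filters.
    return median(depths) if depths else None
```

Taking the median over all surviving features gives one depth value per detected object, which is the quantity that is combined with the detection output.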


Stereo Vision Results

Depth Map

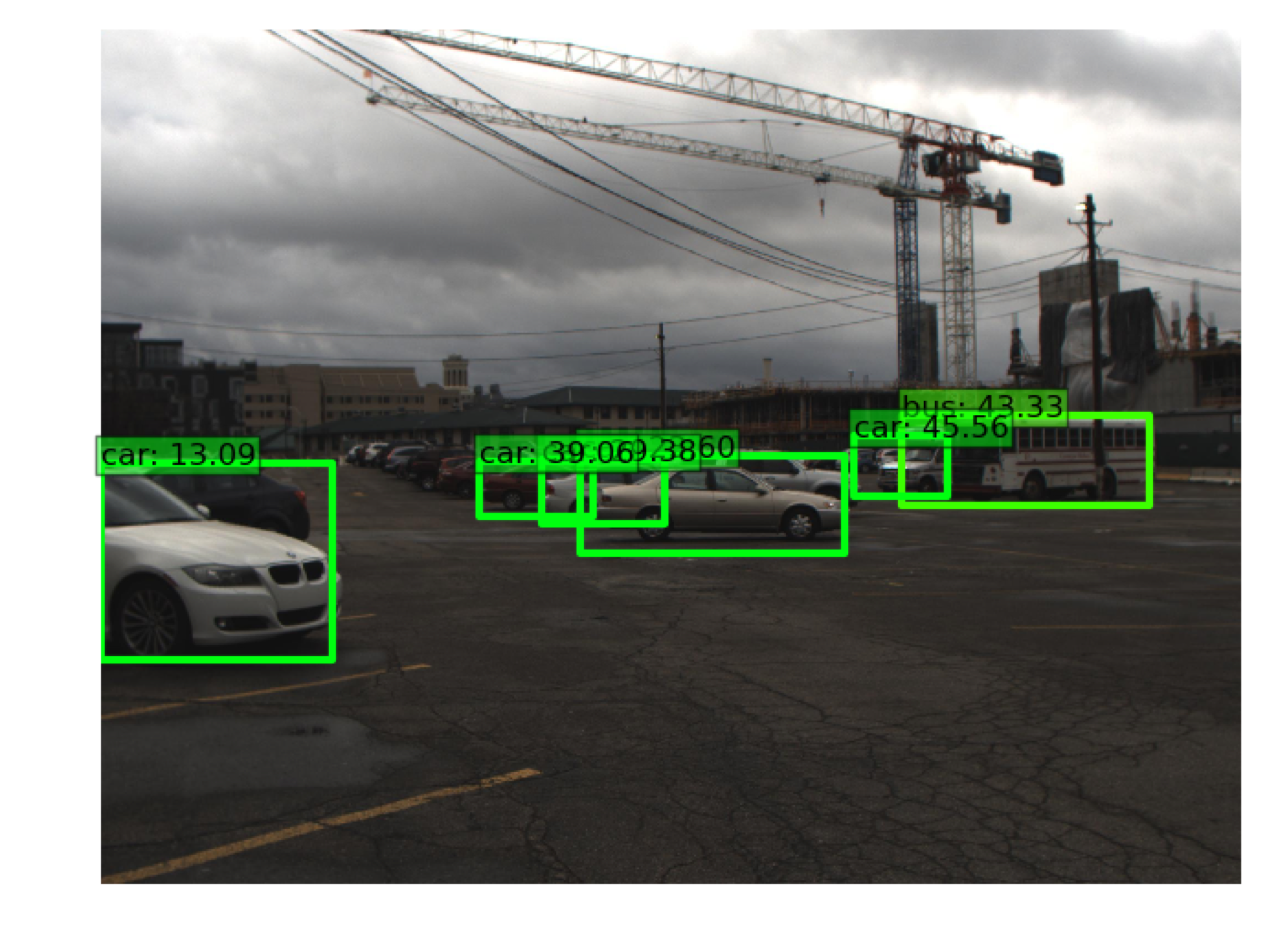

Integration with Object Detection