Spring Validation Experiment

Our presentation for the SVE is here, and some video clips highlighting our performance can be downloaded here.

In the Spring Validation Experiment, we successfully avoided the moose in 2 out of 4 trials. Both of the failures were due to instability in the ramp-up portion of the test.

The week after the SVE, we worked to fix the ramp-up issues by tweaking the ramp-up velocity profile and adding a term to explicitly account for a slight offset we had noticed in the zero position of the steering servo. With these two changes, our system succeeded in 75% of trials during testing.
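As a rough illustration of the two changes above, the sketch below shows a speed ramp to the 3 m/s target and a constant trim added to every steering command. It is a minimal sketch under stated assumptions: the linear ramp shape, the `ramp_time` parameter, and the names `ramp_speed`, `steering_command`, and `center_command` are hypothetical, and the actual profile and offset value we used are not given here.

```python
# Minimal sketch: linear speed ramp plus a constant steering trim.
# The profile shape and all names here are hypothetical.

TARGET_SPEED_MPS = 3.0  # ramp-up target speed from the test setup


def ramp_speed(t: float, ramp_time: float) -> float:
    """Speed setpoint at time t: rise linearly to TARGET_SPEED_MPS over
    ramp_time seconds, then hold (assumed linear profile)."""
    if t <= 0.0:
        return 0.0
    return TARGET_SPEED_MPS * min(t / ramp_time, 1.0)


def steering_command(desired_angle: float, center_command: float) -> float:
    """Add a constant trim so that desired_angle == 0 maps to the command
    that actually centers the wheels (center_command is a measured,
    hypothetical calibration value)."""
    return desired_angle + center_command


if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0, 2.0):
        print(t, ramp_speed(t, ramp_time=1.0),
              steering_command(0.0, center_command=0.02))
```

Keeping the trim as a single additive term means the planner can keep reasoning in "ideal" steering angles while the calibration lives in one place.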

Across the 20 trials we ran, the results were:

- 15 successes

- 1 head-on crash: insufficient steering, delay spike

- 3 grazes: insufficient steering

- 1 out-of-road

In the Spring Validation Experiment Encore, we successfully avoided the moose in 4 out of 4 trials. For the fourth trial, we used a suddenly appearing obstacle instead of a static obstacle that was ignored until the robot came within a distance threshold.

Strengths/Weaknesses

Strengths

- Planning: Our planning system, based on the iLQR algorithm, appeared to handle the various combinations of initial and final conditions that we gave it. When the ramp-up failed to keep the robot straight and the robot ended up angled to the right, the planner occasionally chose to evade around the right side of the obstacle, even though our initial control sequence (the initial guess for the optimization) was designed to go to the left. In addition to the high-speed evasive maneuver tested in the Spring Validation Experiment, we tested the planner in a drift parallel-parking scenario. In every case, the planner output a smooth and valid control sequence for the robot to follow.

- Perception: Both our tracking-based and detection-based (optimized for speed) perception systems performed robustly in a wide range of situations.

- Localization: During all of the tests and maneuvers, the robot was accurately localized and only required manual intervention (reinitializing localization) in very rare cases. The robust localization and state estimation also enabled the exploration of more methods in planning, such as extrapolated states for initializing the planner.

- Cyberphysical Architecture: The hardware and software infrastructure of our system was well developed, allowing us to run multiple tests and prototype different approaches with minimal downtime. Set-up time was short, so we could run a series of tests and demonstrations smoothly. Software was written in a modular fashion, and we used version control so that a single bad commit would not break the entire build.
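To make the iLQR planning description more concrete, below is a minimal iLQR sketch in Python with NumPy. It is not our planner: it assumes a kinematic bicycle model with a hypothetical `WHEELBASE` and time step `DT`, finite-difference Jacobians, a purely quadratic goal-reaching cost with no obstacle term, and a simple backtracking line search. The initial control sequence `U` plays the role of the optimization's initial guess; the optimizer is free to move away from it.

```python
import numpy as np

# Hypothetical model parameters (not taken from our robot).
DT = 0.05        # integration time step [s]
WHEELBASE = 0.3  # wheelbase [m]


def dynamics(x, u):
    """Kinematic bicycle: x = [px, py, heading, speed], u = [steer, accel]."""
    px, py, th, v = x
    steer, accel = u
    return np.array([
        px + DT * v * np.cos(th),
        py + DT * v * np.sin(th),
        th + DT * v * np.tan(steer) / WHEELBASE,
        v + DT * accel,
    ])


def jacobians(x, u, eps=1e-6):
    """Central finite-difference Jacobians A = df/dx and B = df/du."""
    n, m = x.size, u.size
    A, B = np.zeros((n, n)), np.zeros((n, m))
    for i in range(n):
        d = np.zeros(n); d[i] = eps
        A[:, i] = (dynamics(x + d, u) - dynamics(x - d, u)) / (2 * eps)
    for j in range(m):
        d = np.zeros(m); d[j] = eps
        B[:, j] = (dynamics(x, u + d) - dynamics(x, u - d)) / (2 * eps)
    return A, B


def rollout(x0, U):
    X = [x0]
    for u in U:
        X.append(dynamics(X[-1], u))
    return np.array(X)


def total_cost(X, U, x_goal, Q, R, Qf):
    """Quadratic running cost on state error and control, plus terminal cost."""
    c = 0.0
    for x, u in zip(X[:-1], U):
        e = x - x_goal
        c += 0.5 * e @ Q @ e + 0.5 * u @ R @ u
    e = X[-1] - x_goal
    return c + 0.5 * e @ Qf @ e


def ilqr(x0, U, x_goal, Q, R, Qf, iters=50, reg=1e-6):
    U = np.array(U, dtype=float)
    X = rollout(x0, U)
    cost = total_cost(X, U, x_goal, Q, R, Qf)
    N, m, n = U.shape[0], U.shape[1], X.shape[1]
    for _ in range(iters):
        # Backward pass: quadratic model of the cost-to-go (Gauss-Newton, no
        # second-order dynamics terms) gives feedforward k and feedback K.
        Vx, Vxx = Qf @ (X[-1] - x_goal), Qf.copy()
        k, K = np.zeros((N, m)), np.zeros((N, m, n))
        for t in reversed(range(N)):
            A, B = jacobians(X[t], U[t])
            Qx = Q @ (X[t] - x_goal) + A.T @ Vx
            Qu = R @ U[t] + B.T @ Vx
            Qxx = Q + A.T @ Vxx @ A
            Quu = R + B.T @ Vxx @ B + reg * np.eye(m)
            Qux = B.T @ Vxx @ A
            k[t] = -np.linalg.solve(Quu, Qu)
            K[t] = -np.linalg.solve(Quu, Qux)
            Vx = Qx + K[t].T @ Quu @ k[t] + K[t].T @ Qu + Qux.T @ k[t]
            Vxx = Qxx + K[t].T @ Quu @ K[t] + K[t].T @ Qux + Qux.T @ K[t]
            Vxx = 0.5 * (Vxx + Vxx.T)
        # Forward pass with backtracking: accept only steps that lower the cost.
        for alpha in (1.0, 0.5, 0.25, 0.1, 0.05, 0.01):
            Xn, Un = np.zeros_like(X), np.zeros_like(U)
            Xn[0] = x0
            for t in range(N):
                Un[t] = U[t] + alpha * k[t] + K[t] @ (Xn[t] - X[t])
                Xn[t + 1] = dynamics(Xn[t], Un[t])
            new_cost = total_cost(Xn, Un, x_goal, Q, R, Qf)
            if new_cost < cost:
                X, U, cost = Xn, Un, new_cost
                break
        else:
            break  # no step size improved the cost: treat as converged
    return X, U, cost
```

For example, starting at 3 m/s with an all-zero initial control sequence and a goal 6 m ahead and 0.5 m to the side, the optimizer bends the trajectory toward the offset goal. Because the forward pass only accepts steps that lower the cost, the returned cost is never higher than that of the initial rollout. A real evasive-maneuver planner would add an obstacle penalty and control limits to this cost.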

Weaknesses

- Ramp-up: One of the initial conditions for our test is that the robot should be travelling at 3 m/s along a straight line before the obstacle is introduced. However, this condition proved hard to achieve, with the robot veering slightly away from the middle of the lane. In some cases, the planner could absorb this deviation and produce a trajectory that avoided the obstacle. In other cases, the robot veered just a little too far and could not follow the prescribed trajectory without colliding with the obstacle.

- System modeling: Our planner depends heavily on the system parameters in our model, so an accurate model is critical for the planner to work well. Although we spent significant time and effort on multiple system identification tasks, some mismatch remained between the model and the physical robot, and plans taken directly from the planner often performed worse on the robot than in simulation.

- Computation/reaction speed: Computational speed is a hard limitation of our system. To ensure that the robot had enough time to react and avoid the obstacle, we had to increase the obstacle distance from 1 m to 1.5 m and replace the obstacle tracker node with a more streamlined obstacle detector node.
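The effect of moving the obstacle farther away is simple arithmetic: at the 3 m/s test speed, the time available before reaching the obstacle is distance divided by speed. The helper below is illustrative only and assumes constant speed, ignoring braking and steering dynamics.

```python
def time_to_obstacle(distance_m: float, speed_mps: float) -> float:
    """Time available before reaching the obstacle at constant speed."""
    return distance_m / speed_mps


for d in (1.0, 1.5):
    print(f"{d:.1f} m at 3 m/s -> {time_to_obstacle(d, 3.0):.3f} s")
# 1.0 m at 3 m/s -> 0.333 s
# 1.5 m at 3 m/s -> 0.500 s
```

Moving the obstacle from 1 m to 1.5 m raises the budget from about 0.33 s to 0.5 s, a 50% increase, and perception, planning, and actuation latency must all fit inside that window.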

Fall Validation Experiment

Our Fall Validation Experiment (FVE) involved three major demonstrable tests: localization, navigation, and simulation. We also took the chance to demonstrate the robustness of our system, its active safety features, and our power distribution board running our new RWD car platform.

The table below summarizes the results of our tests during the FVE.

| Test | Test Description | Success Criteria | Results |

| 1 | Localization Accuracy Test | Localize with a known map with an accuracy of within 15cm | Localized with an accuracy of between 2.5-7cm |

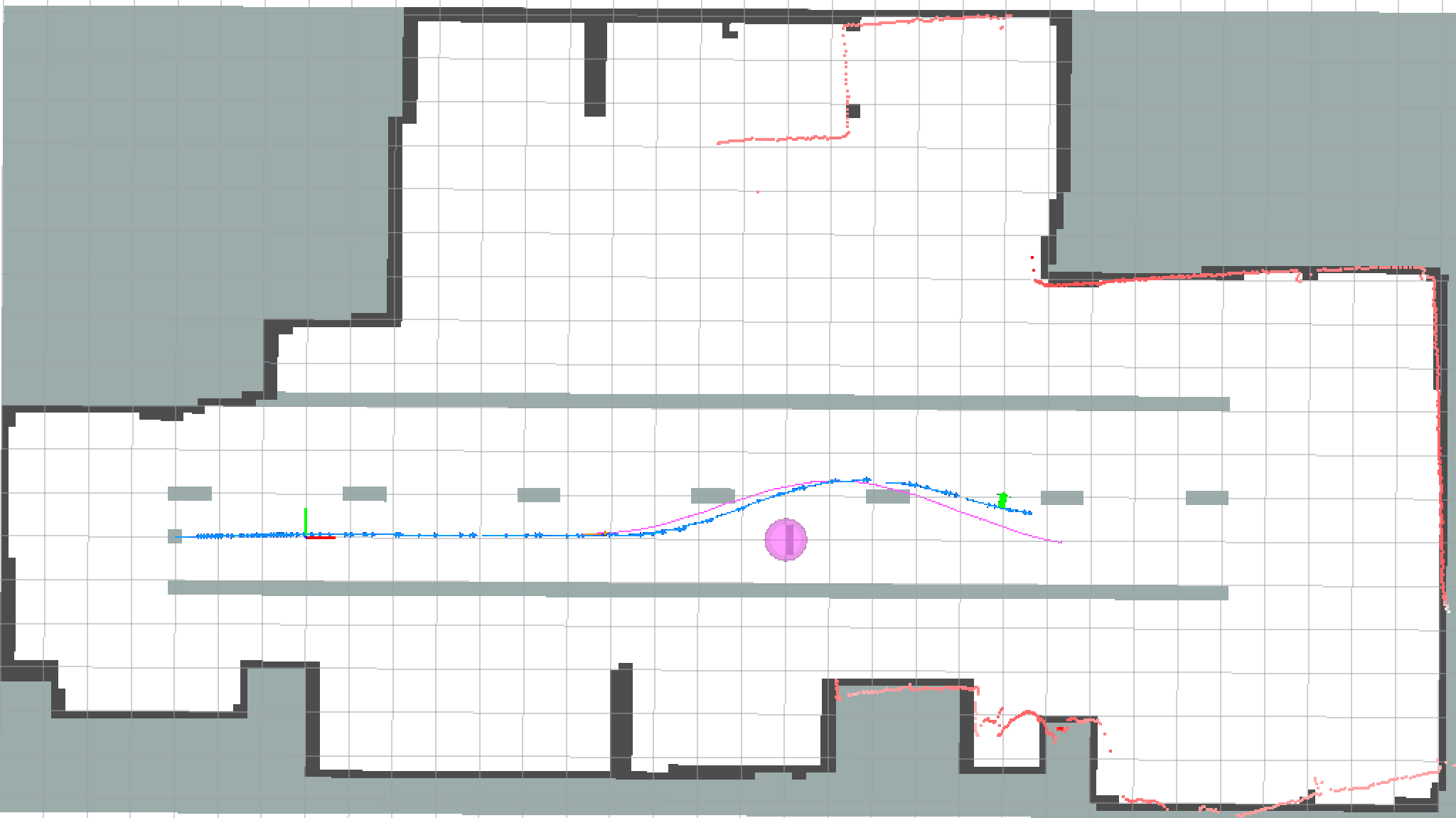

| 2 | Navigation and Obstacle Avoidance Test | Navigate to within 15cm of goal point Avoid static and sudden obstacles |

Point-to-point navigation and obstacle avoidance with goal point accuracies of 3, 10, 15cm |

| 3 | Simulation model for drifting | Demonstrate robot drifting in simulation model.

Demonstrate drifting on actual robot |

Demonstrated driving in circles and drifting in circles in simulation and on the real robot with the same set of inputs |

We comfortably met all the requirements set for the FVE, with a slight improvement in the navigation results during the FVE Encore. There were minor hiccups while initializing the robot during the experiments; we traced them to a bug in the launch script, which has since been fixed.

A summary video of our fall progress is on our media page.